Stay updated through online hackathons

Hackerday

Recommended

What companies hiring data scientists and hadoop developers are looking for?

DeZyre

Companies in many industries, including oil and gas, insurance, social media, and government, are hiring data scientists and Hadoop developers. The document provides strategies for job seekers to demonstrate their qualifications to hiring managers, including illustrating how they can perform required tasks without extensive years of experience. It also outlines what interviewers look for, such as business acumen for data scientists. Salary ranges are provided for various big data roles from entry-level to experienced positions. Contact information is included at the end for follow-up.

Big Data Timeline

DeZyre

This document promotes additional reading on big data and Hadoop training by providing clickable links to read a complete article on the topic as well as learn more about big data and Hadoop training opportunities. It points the reader towards further resources without providing much summary or context of its own.

How to program your way into data science?

DeZyre

This document discusses how programming is essential for data science work. It explains that while data science builds on statistics, it now requires a diverse set of skills including programming. Programming is needed for tasks like data wrangling, analysis, modeling, deployment, and more. The document recommends Python or R as good options for the programming component of data science and provides examples of how programming supports functions like data exploration, modeling, building production systems, and more. Overall, it argues that programming proficiency is a core requirement for modern data science work.

Big Data Use Cases

DeZyre

This document discusses big data and Hadoop training. It provides links to read a complete article on 5 big data use cases and to learn more about IBM Certified big data and Hadoop training. Clicking the links would take the reader to more information on common big data uses and certification programs.

Big data hadoop salary trends

DeZyre

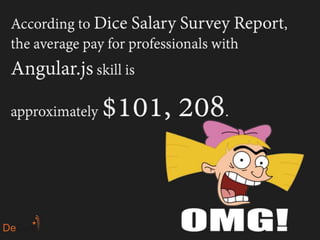

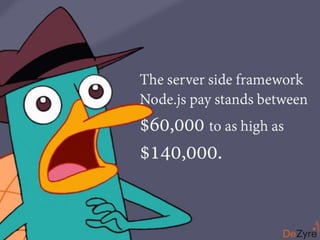

Average salaries for big data and Hadoop developers have increased 9.3% in the last year, now ranging from $119,250 to $168,250 annually. There are over 500 open big data jobs in San Francisco, where the average salary for Hadoop developers is $139,000, and senior Hadoop developers can earn over $178,000. The states with the most big data and Hadoop jobs are California, New York, New Jersey, and Texas.

How to become a data scientist

DeZyre

This document provides guidance on becoming a data scientist by outlining important skills to learn like statistics, programming, visualization, and big data concepts. It recommends starting with hands-on SQL and statistical learning in R or Python, developing expertise in data visualization, and learning to apply techniques such as regression, classification, and recommendation engines. The document advises demonstrating what you've learned by applying for data scientist positions.

How big data is transforming BI

DeZyre

This document discusses how big data is transforming business intelligence. It outlines some of the pains of traditional BI, including maintaining large data warehouses and only considering structured data. The document advocates for an open source approach using Hadoop as an "extended data warehouse" to address these issues. Examples of recent Solocal Group projects involving real-time business analytics and a search power selector are provided. Advice is given on how companies can activate big data projects and start the BI transformation.

Sports and Big data

DeZyre

Big Data analytics is revolutionizing the sports industry by helping teams and players analyze massive amounts of data to improve performance, prevent injuries, and enhance the fan experience. Sports teams are collecting data from cameras, sensors, wearables and other sources to analyze player performance, predict outcomes, and develop strategies. This data combined with analytics allows teams to gain competitive advantages and fans to more accurately predict winners. While big data provides insights, human experience and instincts are still needed to apply the strategies during games.

Internet of Things

DeZyre

The document details the use of the Beaconstac analytics platform, which focuses on proximity marketing via beacons and utilizes event data analysis. It explains the integration of Hadoop and Amazon EMR for processing event logs and the management of data pipelines to generate insights, such as heat maps and user interactions. Production integration involves running a customized data pipeline that processes jobs and outputs results into Elasticsearch.

Big data in healthcare

DeZyre

Big data solutions are enabling healthcare providers to transform into more patient-centered, collaborative care models driven by analytics. As basic needs are met and advanced applications emerge, new use cases will arise from sources like wearable devices and sensors. Predictive analytics using big data can help fill gaps by predicting things like missed appointments, noncompliance, and patient trajectories in order to proactively manage care. However, barriers to using big data include a lack of expertise and the fact that big data has a different structure and is more unstructured than traditional databases.

What is big data

DeZyre

Big data refers to extremely large data sets that are difficult to process using traditional data processing applications. Hadoop is an open-source software framework that structures big data for analytics purposes using a distributed computing architecture. Demand for big data skills like Hadoop development and administration is increasing significantly, with salaries offering healthy premiums, as more organizations use big data analytics to make important predictions. DeZyre offers job-skills training courses developed jointly with industry partners, delivered through an interactive online platform, to help people learn skills like Hadoop from experts and get certified.

25 things that make Amazon's Jeff Bezos, Jeff Bezos

DeZyre

The document highlights key aspects of Jeff Bezos's leadership style and company culture at Amazon, such as his direct involvement with customer complaints and a unique meeting structure that emphasizes thorough preparation. Bezos is known for demanding high standards, showing little tolerance for incompetence, and challenging employees to think critically and innovate. Many former employees describe Amazon's environment as a 'gladiator culture' marked by high pressure and a mix of startup agility and corporate structure.

NVIDIA Triton Inference Server, a game-changing platform for deploying AI mod...

Tamanna36

NVIDIA Triton Inference Server!

Learn how Triton streamlines AI model deployment with dynamic batching, support for TensorFlow, PyTorch, ONNX, and more, plus GPU-optimized performance. From YOLO11 object detection to NVIDIA Dynamo’s future, it’s your guide to scalable AI inference.

Check out the slides and share your thoughts!

#AI #NVIDIA #TritonInferenceServer #MachineLearning

Prescriptive Process Monitoring Under Uncertainty and Resource Constraints: A...

Mahmoud Shoush

We introduced Black-Box Prescriptive Process Monitoring (BB-PrPM) – a reinforcement learning approach that learns when, whether, and how to intervene in business processes to boost performance under real-world constraints.

This work is presented at the International Conference on Advanced Information Systems Engineering (CAiSE). #CAiSE2025

The Influence of Flexible Work Policies

sales480687

This topic explores how flexible work policies, such as remote work, flexible hours, and hybrid models, are transforming modern workplaces. It examines the impact on employee productivity, job satisfaction, work-life balance, and organizational performance. The topic also addresses challenges such as communication gaps, maintaining company culture, and ensuring accountability. Additionally, it highlights how flexible work arrangements can attract top talent, promote inclusivity, and adapt businesses to an evolving global workforce. Ultimately, it reflects the shift in how and where work gets done in the 21st century.


Data Visualisation in data science for students

confidenceascend

Data visualisation is explained in a simple manner.