Sixth Sense Technology - Dhruv Patel


A world-famous technology created by Pranav Mistry, an engineer. I am also a student of electronics engineering.


Recommended

Sixth Sence Technology - Beat Boyz

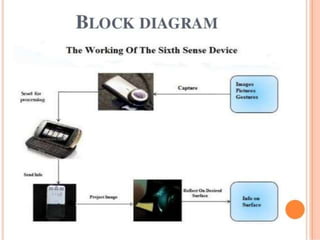

The document introduces Pranav Mistry, the inventor of Sixth Sense, a wearable gestural interface that blends the physical world with digital information. It describes the device's components and how it works: it uses hand gestures for interaction and connects to a smartphone for processing. Sixth Sense can project various applications, such as virtual keypads, maps, and additional information about objects and people, making it a versatile interface for interacting with data in real time.

Active Filter (Low Pass) - Saravanan Sukumaran

1. Low-pass filters allow low frequencies to pass through but attenuate frequencies higher than the cutoff frequency. They are implemented using a resistor and capacitor in conjunction with an op-amp amplifier.

2. A first-order low-pass filter has a single RC pair and rolls off at -20 dB per decade above the cutoff frequency. Higher-order filters use multiple RC stages to achieve steeper roll-offs, such as -40 dB per decade for a second-order filter.

3. The cutoff frequency is the frequency at which the gain is 3 dB below its maximum; it is inversely proportional to the product of the resistor and capacitor values in each stage (for a single RC stage, f_c = 1/(2πRC)).
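The three points above can be checked numerically. This is a minimal sketch assuming identical, op-amp-buffered first-order RC stages with unity passband gain, so the stage responses simply multiply; the 10 kΩ / 15.9 nF component values are hypothetical. Note that such a plain cascade is about -6 dB at f_c, so a true second-order design (for example, a Butterworth) places its -3 dB point differently, even though its asymptotic slope is also -40 dB per decade.

```python
import math


def cutoff_frequency(r_ohms: float, c_farads: float) -> float:
    """Cutoff (-3 dB) frequency of a single RC stage: f_c = 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r_ohms * c_farads)


def gain_db(f_hz: float, f_c: float, order: int = 1) -> float:
    """Gain in dB of `order` identical, buffered first-order stages.

    Each stage has |H| = 1 / sqrt(1 + (f/f_c)^2); buffering keeps the stages
    from loading each other, so the magnitudes multiply.
    """
    per_stage = 1.0 / math.sqrt(1.0 + (f_hz / f_c) ** 2)
    return 20.0 * math.log10(per_stage ** order)


def rolloff_db_per_decade(f_c: float, order: int) -> float:
    """Slope measured between 100*f_c and 1000*f_c, where the asymptote dominates."""
    return gain_db(1000.0 * f_c, f_c, order) - gain_db(100.0 * f_c, f_c, order)


if __name__ == "__main__":
    fc = cutoff_frequency(10e3, 15.9e-9)  # hypothetical 10 kOhm and 15.9 nF
    print(f"f_c = {fc:.0f} Hz")
    print(f"gain at f_c (1 stage): {gain_db(fc, fc):.2f} dB")
    print(f"1st order: {rolloff_db_per_decade(fc, 1):.1f} dB/decade")
    print(f"2nd order: {rolloff_db_per_decade(fc, 2):.1f} dB/decade")
```

Measuring the slope two decades above f_c avoids the rounded knee near the cutoff, where the response has not yet reached its asymptote.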

The sixth sense technology complete ppt - atinav242

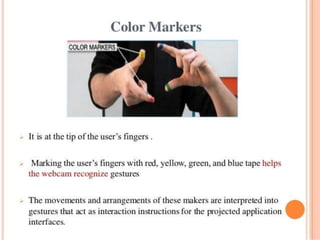

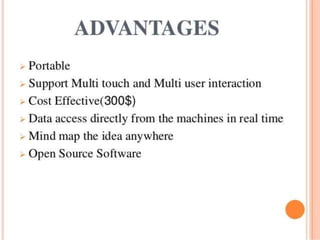

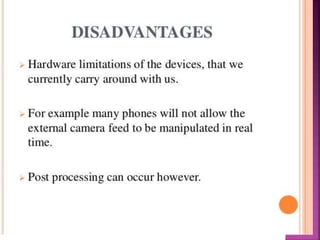

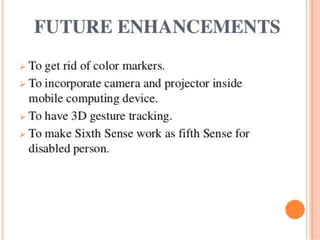

The document discusses Sixth Sense technology, a wearable gestural interface developed by Pranav Mistry that augments the physical world with digital information. It can project information onto surfaces using a camera and projector mounted on a necklace. Users interact with this information using natural hand gestures recognized by the camera. The technology enables applications such as making calls and accessing maps and photos through gestures. While it is portable and low cost, it is limited by the hardware of mobile devices and needs color markers for gesture recognition.

6th sence final - AsTrObOy12345

Sixth Sense is a wearable gestural interface developed by Pranav Mistry that augments physical objects with digital information. It consists of a camera, projector, and mirror worn as a pendant, plus colored markers on the user's fingertips; the camera captures gestures and the projector displays related information onto surfaces. The system processes images and gestures on a mobile device to access and display digital content. Some applications include getting product information by pointing at objects, viewing maps or photos by gesturing onto surfaces, and making calls through projected keypads. The Sixth Sense prototype demonstrates how such a system could serve as a transparent interface for accessing information about the physical world through natural hand motions.

Virtual Mouse - Vivek Khutale

This document presents a virtual mouse system that uses computer vision and hand gesture recognition to control the mouse cursor and perform mouse tasks. The system aims to provide a more natural and convenient way to control the computer without requiring physical mouse hardware. It uses a webcam to detect colored fingertips and track hand movements in real time. Image processing algorithms handle segmentation, denoising, finding the hand's center and size, and detecting individual fingertips. Detected gestures are then mapped to mouse functions such as cursor movement, left/right clicks, and scrolling. The document outlines the goals, design approach, and implementation details of the system, as well as its advantages, limitations, and directions for future work.
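The last step described, mapping a detected fingertip to cursor actions, can be sketched as below. This is not the author's implementation: mirroring the webcam image, the exponential smoothing factor, and the fingertip-count-to-action table are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

# Hypothetical gesture table: number of detected fingertips -> mouse action.
GESTURE_ACTIONS = {1: "move", 2: "left_click", 3: "right_click", 4: "scroll"}


def mouse_action(num_fingertips: int) -> str:
    """Look up the mouse action for a fingertip count ("none" if unmapped)."""
    return GESTURE_ACTIONS.get(num_fingertips, "none")


@dataclass
class CursorMapper:
    """Maps a fingertip position in camera pixels to screen pixels."""
    cam_w: int
    cam_h: int
    screen_w: int
    screen_h: int
    alpha: float = 0.5   # weight of the newest sample in the moving average (0..1]
    mirror: bool = True  # flip x so moving the hand right moves the cursor right
    _x: Optional[float] = field(default=None, init=False, repr=False)
    _y: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, cx: float, cy: float) -> Tuple[int, int]:
        if self.mirror:
            cx = (self.cam_w - 1) - cx
        # Linear scaling from camera coordinates to screen coordinates.
        sx = cx * (self.screen_w - 1) / (self.cam_w - 1)
        sy = cy * (self.screen_h - 1) / (self.cam_h - 1)
        if self._x is None or self._y is None:
            self._x, self._y = sx, sy
        else:
            # Exponential moving average damps frame-to-frame jitter.
            self._x = self.alpha * sx + (1 - self.alpha) * self._x
            self._y = self.alpha * sy + (1 - self.alpha) * self._y
        return round(self._x), round(self._y)
```

A lower `alpha` gives a steadier cursor at the cost of lag; a value near 1 follows the fingertip closely but passes through detection noise.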

Filter dengan-op-amp (Filters with Op-Amps) - herdwihascaryo

The document discusses different types of filters and their frequency responses. It explains that filters can be either analog, processing continuous signals, or digital, processing discrete signals. There are four main types of filters: lowpass, highpass, bandstop, and bandpass. The frequency response of these filters can be modeled using concepts such as poles, zeros, break (corner) frequencies, and Bode plots. Bode plots use logarithmic scales to show how the magnitude and phase of a filter's transfer function change across frequency.
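For filters built from real poles and zeros, the Bode magnitude and phase can be computed directly from the factored transfer function H(jω) = K · ∏(1 + jω/ω_z) / ∏(1 + jω/ω_p). This minimal sketch assumes real, left-half-plane break frequencies given in rad/s; at a single pole's break frequency it yields about -3 dB and -45 degrees, and the magnitude falls about 20 dB per decade beyond it.

```python
import cmath
import math
from typing import Sequence, Tuple


def bode_point(omega: float, k: float = 1.0, zeros: Sequence[float] = (),
               poles: Sequence[float] = ()) -> Tuple[float, float]:
    """Return (magnitude in dB, phase in degrees) of
    H(jw) = k * prod(1 + jw/wz) / prod(1 + jw/wp) at angular frequency `omega`."""
    h = complex(k)
    for wz in zeros:
        h *= 1 + 1j * omega / wz   # each zero adds +20 dB/decade above wz
    for wp in poles:
        h /= 1 + 1j * omega / wp   # each pole adds -20 dB/decade above wp
    return 20 * math.log10(abs(h)), math.degrees(cmath.phase(h))


if __name__ == "__main__":
    # One point per decade (a log-spaced sweep) for a lowpass pole at 1000 rad/s.
    for w in (10.0, 100.0, 1e3, 1e4, 1e5):
        mag, ph = bode_point(w, poles=[1e3])
        print(f"{w:>8.0f} rad/s  {mag:7.2f} dB  {ph:7.2f} deg")
```

Sampling one point per decade mirrors the logarithmic frequency axis of a Bode plot; a finer log-spaced sweep would show the smooth curve around each break frequency.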

sixth sense technology by pranav mistery - AmitGajera

Sixth Sense technology, developed by Steve Mann and enhanced by Pranav Mistry at MIT Media Lab, integrates digital information into the physical world through a wearable device that combines a camera, projector, and mobile computing. It allows users to interact with their environment using hand gestures, turning any surface into a touch-screen for accessing information and applications. Despite its potential for various uses, the technology has limitations like dependency on specific operating systems and visibility issues in bright environments.

Sixth sense technology ppt - Mohammad Adil

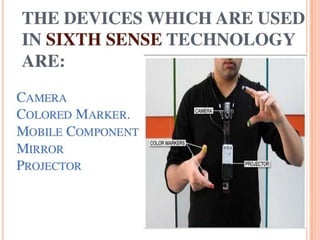

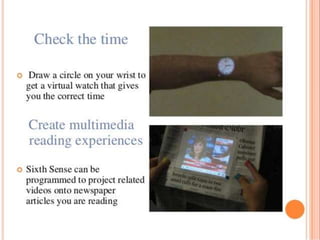

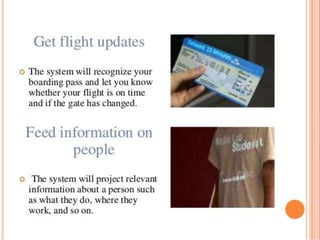

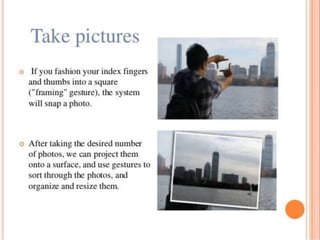

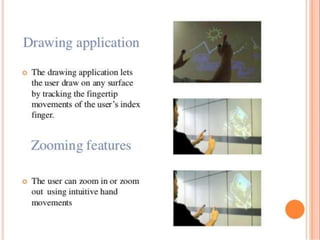

- Sixth Sense technology lets users interact with digital information through hand gestures instead of conventional input hardware. It was first developed in 1990 as a wearable computer and camera system.

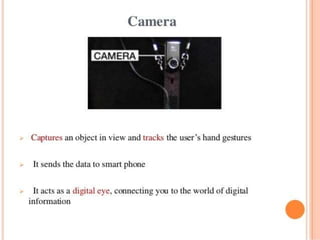

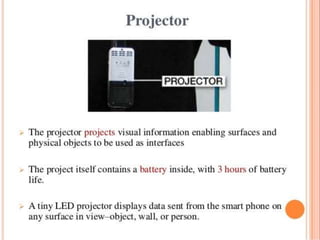

- The key components are a camera to track hand gestures, a projector to display information onto surfaces, and a mobile device to handle internet connectivity. The camera sends gesture data to the mobile device for processing using computer vision techniques.

- Applications include drawing on surfaces with hand gestures, getting flight information by making circular gestures, and making calls by typing on a projected keypad. The technology aims to seamlessly connect the physical and digital worlds.
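The summary above mentions triggering actions with circular gestures. Below is a minimal sketch of how a tracked fingertip path might be classified as a circle, assuming the tracker already supplies (x, y) points. It is a generic heuristic rather than the SixthSense code, and the thresholds are illustrative guesses.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def is_circle_gesture(points: List[Point], max_radius_spread: float = 0.2,
                      max_gap_ratio: float = 0.3, min_points: int = 8) -> bool:
    """Heuristic test that a fingertip path traces a closed circle:
    roughly constant distance from the centroid, ends near where it started,
    and sweeps close to a full turn around the centroid."""
    if len(points) < min_points:
        return False
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    radii = [math.hypot(x - cx, y - cy) for x, y in points]
    mean_r = sum(radii) / len(radii)
    if mean_r == 0:
        return False
    # Relative standard deviation of the radius (0 for a perfect circle).
    spread = math.sqrt(sum((r - mean_r) ** 2 for r in radii) / len(radii)) / mean_r
    # Distance between start and end, relative to the circle's size.
    gap = math.hypot(points[-1][0] - points[0][0],
                     points[-1][1] - points[0][1]) / mean_r
    # Total angle swept around the centroid, unwrapping jumps across +/-pi.
    swept = 0.0
    prev = math.atan2(points[0][1] - cy, points[0][0] - cx)
    for x, y in points[1:]:
        ang = math.atan2(y - cy, x - cx)
        d = ang - prev
        if d > math.pi:
            d -= 2 * math.pi
        elif d < -math.pi:
            d += 2 * math.pi
        swept += d
        prev = ang
    # Require most of a full turn (1.75*pi of 2*pi) in either direction.
    return (spread < max_radius_spread and gap < max_gap_ratio
            and abs(swept) > 1.75 * math.pi)
```

Checking the swept angle in addition to the radius spread rejects a short arc that happens to lie on a circle, and using `abs(swept)` accepts both clockwise and counter-clockwise gestures.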

Sixth sense by kuntal ppt - Krishh Patel

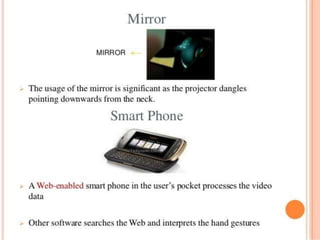

Sixth Sense is a wearable technology developed by Pranav Mistry that uses natural hand gestures to allow the user to interact with digital information that is projected onto physical surfaces around them. It consists of a camera, projector, mirror, and smartphone connected via Bluetooth. The camera tracks colored markers on the user's fingers to interpret gestures which are processed by the smartphone to project related images and information onto surrounding surfaces via the projector and mirror. Some proposed applications include making calls, getting directions, viewing photos or videos, and accessing information about products or books by pointing at them.
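The color-marker tracking described above can be sketched as thresholding each frame for one marker color and taking the centroid of the matching pixels. This sketch assumes frames arrive as nested lists of RGB tuples and uses a simple per-channel tolerance; the marker names and colors are hypothetical. A practical implementation would more likely use a vision library such as OpenCV (for example, `cv2.inRange` on an HSV image) for speed and robustness to lighting changes.

```python
from typing import Dict, List, Optional, Tuple

RGB = Tuple[int, int, int]
Centroid = Optional[Tuple[float, float]]


def marker_centroid(image: List[List[RGB]], target: RGB, tolerance: int = 40,
                    min_pixels: int = 5) -> Centroid:
    """Return the (x, y) centroid of pixels within `tolerance` of `target` on
    every channel, or None when fewer than `min_pixels` pixels match."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            if (abs(r - target[0]) <= tolerance
                    and abs(g - target[1]) <= tolerance
                    and abs(b - target[2]) <= tolerance):
                sum_x += x
                sum_y += y
                count += 1
    if count < min_pixels:
        return None  # marker not visible (or too small to trust) in this frame
    return sum_x / count, sum_y / count


def track_markers(image: List[List[RGB]], markers: Dict[str, RGB],
                  **kwargs) -> Dict[str, Centroid]:
    """Locate several colored finger markers in one frame."""
    return {name: marker_centroid(image, color, **kwargs)
            for name, color in markers.items()}
```

Calling `track_markers(frame, {"index": (255, 0, 0), "thumb": (0, 0, 255)})` once per frame yields one position per fingertip, which a gesture recognizer could then follow over time.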

Sixth Sense Technology - Saugat Bhattacharjee

The document discusses the SixthSense wearable gestural interface, which integrates digital information into the physical world using hand gestures. It outlines the device's components, including a pocket projector, camera, mirror, and mobile computing device, and details its software components and applications. Additionally, it highlights the advantages and accolades of SixthSense, emphasizing its open-source nature and innovative design that allows for intuitive interaction with digital content.

Sixth sense presentation - Muhammad_Hassan142

Pranav Mistry, a PhD student at MIT Media Lab, developed Sixth Sense technology. It is a wearable gestural interface that augments the physical world with digital information using hand gestures detected by a camera. The system includes a camera, projector, mirror, and smartphone, allowing interactions such as making calls, viewing maps, taking photos, and accessing information by pointing at physical objects or surfaces. While it promises improved access to information, challenges include privacy and the visibility of projections under different lighting conditions.

Sixth sense-final-ppt - Thedarkangel1

The document discusses Sixth Sense technology, a wearable gestural interface that enhances the physical world with digital information using natural hand gestures. It outlines the components of the technology, including a camera, projector, and smartphone, and showcases various applications such as making calls, navigating maps, and accessing product information. The system allows for real-time interaction and aims to provide a seamless user interface with intuitive control over digital content.

SIXTH SENSE TECHNOLOGY (PRANAV MISTRY) -WEAR YOUR WORLD!!! - Fathima Mizna Kalathingal

Sixth Sense Technology, developed by Pranav Mistry, integrates digital information into the physical environment, allowing users to interact with it through natural hand gestures using a portable device consisting of a camera, projector, mirror, smartphone, and color markers. The technology enables various applications, such as making calls, checking maps, and accessing product information, but faces challenges regarding market release, privacy, and health concerns. Despite its advantages in portability and multi-user interaction, further engineering and modifications are necessary before it can become commercially available.

Sixth Sense Technology - Navin Kumar

The document summarizes Sixth Sense technology, a wearable gestural interface that augments physical reality. It consists of a camera, projector, and mirror coupled in a pendant, along with colored markers. The camera tracks hand gestures to interact with projected information on surfaces. Applications include making calls, getting maps and product information, and more, all through intuitive hand gestures. Sixth Sense bridges the physical and digital worlds through natural interactions.

sixth sense technology 2014, by Richard Des Nieves, Bengaluru, kar, India - Richard Des Nieves M

The document presents an overview of Sixth Sense technology, a wearable gestural interface developed by Pranav Mistry that enhances interaction with digital information in the physical world using hand gestures. It details the components, working prototype, applications, advantages, and future enhancements of the technology. Sixth Sense aims to make digital interaction more intuitive and accessible by projecting information onto various surfaces and recognizing gestures.

A Constitutional Quagmire - Ethical Minefields of AI, Cyber, and Privacy.pdf - Priyanka Aash

A Constitutional Quagmire - Ethical Minefields of AI, Cyber, and Privacy

Enhance GitHub Copilot using MCP - Enterprise version.pdf - Nilesh Gule

A deck related to the GitHub Copilot Bootcamp in Melbourne on 17 June 2025.

Curietech AI in action - Accelerate MuleSoft development - shyamraj55

CurieTech AI in Action - Accelerate MuleSoft Development

Overview:

This presentation demonstrates how CurieTech AI's purpose-built agents empower MuleSoft developers to create integration workflows faster, more accurately, and with less manual effort.

Key Highlights:

Dedicated AI agents for every stage: Coding, Testing (MUnit), Documentation, Code Review, and Migration

DataWeave automation: Generate mappings from tables or samples, 95%+ complete within minutes

Integration flow generation: Auto-create Mule flows based on specifications, speeding up boilerplate development

Efficient code reviews: Gain intelligent feedback on flows, patterns, and error handling

Test & documentation automation: Auto-generate MUnit test cases, sample data, and detailed docs from code

Why Now?

Achieve 10× productivity gains, slashing development time from hours to minutes

Maintain high accuracy with code quality matching or exceeding manual efforts

Ideal for developers, architects, and teams wanting to scale MuleSoft projects with AI efficiency

Conclusion:

CurieTech AI transforms MuleSoft development into an AI-accelerated workflow, letting you focus on innovation, not repetition.

UserCon Belgium: Honey, VMware increased my bill - stijn40

VMware's pricing changes have forced organizations to rethink their datacenter cost management strategies. While FinOps is commonly associated with cloud environments, the FinOps Foundation has recently expanded its framework to include Scopes, and Datacenter is now officially part of the equation. In this session, we'll map the FinOps Framework to a VMware-based datacenter, focusing on cost visibility, optimization, and automation. You'll learn how to track costs more effectively, rightsize workloads, optimize licensing, and drive efficiency, all without migrating to the cloud. We'll also explore how to align IT teams, finance, and leadership around cost-aware decision-making for on-prem environments. If your VMware bill keeps increasing and you need a new approach to cost management, this session is for you!
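The abstract mentions rightsizing workloads. As a hedged illustration (not a method prescribed by the FinOps Framework and not taken from the talk), one common heuristic sizes a VM so that a high percentile of its observed CPU demand lands at a target utilization. The 95th percentile, the 70% target, and the function names below are assumptions.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty sample list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_vcpus(cpu_used_vcpus: Sequence[float], target_util: float = 0.7,
                    pct: float = 95, min_vcpus: int = 1) -> int:
    """Suggest a vCPU count so that the pct-th percentile of observed demand
    (measured in vCPUs actually busy) sits at `target_util` of the allocation."""
    demand = percentile(cpu_used_vcpus, pct)
    return max(min_vcpus, math.ceil(demand / target_util))


if __name__ == "__main__":
    # Hypothetical utilization samples for one VM, in busy vCPUs.
    samples = [1.2, 1.5, 1.1, 3.8, 1.4, 1.3, 1.6, 1.2, 1.5, 1.4]
    print("recommended vCPUs:", recommend_vcpus(samples))
```

Using a high percentile instead of the peak ignores rare spikes, while the target utilization leaves headroom; both knobs are policy choices that would need tuning per workload.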

The Future of Product Management in AI ERA.pdf - Alyona Owens

Hi, I'm Aly Owens. It is a special pleasure to stand here, because over a decade ago I graduated from CityU's MBA program as an international student. I enjoyed the diversity of the school, the ability to work and study at the same time, the network that came with being here, and of course the price tag, which has always been more affordable for students here than at most schools around.

Since then I have worked for major corporations like T-Mobile and Microsoft, among many others, and I have founded a startup. I've also been teaching product management so that my students can save time and money and reach my level faster by avoiding common mistakes. Now that I've transitioned to teaching and focusing on the startup, I hear everybody worrying about AI stealing their jobs... We'll talk about that shortly.

But before that, I want to take you back to 1997. One of my favorite movies is "The Fifth Element." It wowed me with futuristic predictions when I was a kid, and I'm impressed by how many of those predictions have already come true: self-driving cars, video calls and smart TVs, personalized ads, and identity scanning. Sci-fi movies and books have given us many ideas, and some are being implemented as we speak. But we often get ahead of ourselves:

Flying cars, colonized planets, human-like AI, time travel: not yet. Mind-machine neural interfaces for everyone: only in experimental stages (e.g., Neuralink).

Cyberpunk dystopias: some of the vibes (neon signs + inequality + surveillance), but not a total dystopia (thankfully).

On the bright side, we predict that working hours should drop as AI becomes our helper, so there shouldn't be a need to work 8 hours a day. Nobody knows for sure, but we can demand it through legislation. Instead of waiting to see what the government and billionaires come up with, I say we should design our own future.

So, when we as humans don't know something, fear takes over. The same thing happened during the Industrial Revolution. In the Industrial Era, machines didn't steal jobs; they transformed them, but people were still scared for their jobs. The AI era is bringing similar changes, except it feels like robots will take center stage instead of humans. First off, even in the hottest space in the military, drones, AI does only a fraction of the work. AI algorithms enable real-time decision-making, obstacle avoidance, and mission optimization, making drones far more autonomous and capable than traditional remote-controlled aircraft. Key technologies include computer vision for object detection, GPS-enhanced navigation, and neural networks for learning and adaptation. But guess what? There are only 2 companies right now that use AI in drones to make autonomous decisions: Skydio and DJI.

Lessons Learned from Developing Secure AI Workflows.pdf - Priyanka Aash

Lessons Learned from Developing Secure AI Workflows

Securing Account Lifecycles in the Age of Deepfakes.pptx - FIDO Alliance

Securing Account Lifecycles in the Age of Deepfakes

Quantum AI: Where Impossible Becomes Probable - Saikat Basu

Imagine combining the "brains" of Artificial Intelligence (AI) with the "super muscles" of Quantum Computing. That's Quantum AI!

It's a new field that uses the mind-bending rules of quantum physics to make AI even more powerful.

Smarter Aviation Data Management: Lessons from Swedavia Airports and Sweco - Safe Software

Managing airport and airspace data is no small task, especially when you're expected to deliver it in AIXM format without spending a fortune on specialized tools. But what if there were a smarter, more affordable way?

Join us for a behind-the-scenes look at how Sweco partnered with Swedavia, the Swedish airport operator, to solve this challenge using FME and Esri.

Learn how they built automated workflows to manage periodic updates, merge airspace data, and support data extracts, all while meeting strict government reporting requirements to the Civil Aviation Administration of Sweden.

Even better? Swedavia built custom services and applications that use the FME Flow REST API to trigger jobs and retrieve results, streamlining tasks like securing the quality of new surveyor data, creating permdelta and baseline representations in the AIS schema, and generating AIXM extracts from their AIS data.

To conclude, FME expert Dean Hintz will walk through a GeoBorders reading workflow and highlight recent enhancements to FME's AIXM (Aeronautical Information Exchange Model) processing and interpretation capabilities.

Discover how airports like Swedavia are harnessing the power of FME to simplify aviation data management, and how you can too.

"MPU+: A Transformative Solution for Next-Gen AI at the Edge," a Presentation... - Edge AI and Vision Alliance

For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/06/mpu-a-transformative-solution-for-next-gen-ai-at-the-edge-a-presentation-from-fotonation/

Petronel Bigioi, CEO of FotoNation, presents the "MPU+: A Transformative Solution for Next-Gen AI at the Edge" tutorial at the May 2025 Embedded Vision Summit.

In this talk, Bigioi introduces MPU+, a novel programmable, customizable low-power platform for real-time, localized intelligence at the edge. The platform includes an AI-augmented image signal processor that enables leading image and video quality.

In addition, it integrates ultra-low-power object and motion detection capabilities to enable always-on computer vision. A programmable neural processor provides flexibility to efficiently implement new neural networks, and additional specialized engines facilitate image stabilization and audio enhancement.
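The talk does not describe how MPU+ implements its motion detection. As a generic illustration of the kind of always-on check involved, the simplest technique is frame differencing: flag motion when enough pixels change by more than a threshold between consecutive frames. The grayscale nested-list frames and both thresholds below are assumptions; low-power hardware would likely do this on downscaled frames in dedicated logic rather than in Python.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixel values, 0..255


def motion_detected(prev: Frame, curr: Frame, pixel_threshold: int = 25,
                    area_fraction: float = 0.01) -> bool:
    """Frame differencing: report motion when at least `area_fraction` of the
    pixels differ by more than `pixel_threshold` grey levels between frames."""
    changed = 0
    total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= area_fraction
```

The per-pixel threshold suppresses sensor noise and small brightness drift, while the area fraction ignores isolated flickering pixels; a real detector would typically also adapt its reference frame to gradual lighting changes.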

9-1-1 Addressing: End-to-End Automation Using FME - Safe Software

This session will cover a common use case for local and state/provincial governments that create and/or maintain 9-1-1 addressing data, particularly address points and road centerlines. You'll learn how FME has helped Shelby County 9-1-1 (TN) automate the 9-1-1 addressing process, including automatically assigning attributes from disparate sources, on-the-fly QA/QC of that data, and reporting. The FME logic covered includes table joins using attributes and geometry, looping in custom transformers, working with lists, and change detection.
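The session lists change detection among its FME techniques. FME has its own transformers for this; as a tool-agnostic sketch (plain Python, not FME's API), change detection between two snapshots of address points keyed by a stable ID reduces to set differences plus a per-record comparison. The IDs and field names in the usage note are hypothetical.

```python
from typing import Any, Dict, List, Mapping, Tuple

Record = Mapping[str, Any]


def detect_changes(old: Mapping[str, Record],
                   new: Mapping[str, Record]) -> Dict[str, List[str]]:
    """Classify record IDs as added, removed, or modified between two snapshots."""
    old_ids, new_ids = set(old), set(new)
    return {
        "added": sorted(new_ids - old_ids),
        "removed": sorted(old_ids - new_ids),
        "modified": sorted(i for i in old_ids & new_ids if old[i] != new[i]),
    }


def changed_fields(before: Record, after: Record) -> Dict[str, Tuple[Any, Any]]:
    """Field-level diff for one modified record: {field: (old value, new value)}."""
    keys = set(before) | set(after)
    return {k: (before.get(k), after.get(k))
            for k in sorted(keys) if before.get(k) != after.get(k)}
```

For example, if address point `"A2"` changes from `{"addr": "5 Oak Ave"}` to `{"addr": "7 Oak Ave"}`, `detect_changes` lists it under `"modified"` and `changed_fields` reports only the `addr` field, which is the kind of output a QA/QC report could be built from.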

Wenn alles versagt - IBM Tape schützt, was zählt! Und besonders mit dem neust... (When everything fails, IBM Tape protects what matters! And especially with the newest...) - Josef Weingand

IBM LTO10

WebdriverIO & JavaScript: The Perfect Duo for Web Automation - digitaljignect

In today's dynamic digital landscape, ensuring the quality and dependability of web applications is essential. While Selenium has long been a standard solution for automating browser tasks, integrating WebdriverIO (WDIO) with Selenium and JavaScript marks a significant advancement in automation testing. WDIO enhances the testing process with a robust interface that improves test creation, execution, and management. This combination capitalizes on the strengths of both tools, leveraging Selenium's broad browser support and WDIO's modern, efficient approach to test automation. As automation testing becomes increasingly vital for faster development cycles and better software releases, WDIO emerges as a versatile framework, particularly potent when paired with JavaScript, making it a preferred choice for contemporary testing teams.

Viewers also liked (7)

Sixth sense by kuntal ppt

Sixth sense by kuntal pptKrishh Patel

╠²

Sixth Sense is a wearable technology developed by Pranav Mistry that uses natural hand gestures to allow the user to interact with digital information that is projected onto physical surfaces around them. It consists of a camera, projector, mirror, and smartphone connected via Bluetooth. The camera tracks colored markers on the user's fingers to interpret gestures which are processed by the smartphone to project related images and information onto surrounding surfaces via the projector and mirror. Some proposed applications include making calls, getting directions, viewing photos or videos, and accessing information about products or books by pointing at them.Sixth Sense Technology

Sixth Sense TechnologySaugat Bhattacharjee

╠²

The document discusses the SixthSense wearable gestural interface, which integrates digital information into the physical world using hand gestures. It outlines the device's components, including a pocket projector, camera, mirror, and mobile computing device, and details its software components and applications. Additionally, it highlights the advantages and accolades of SixthSense, emphasizing its open-source nature and innovative design that allows for intuitive interaction with digital content.Sixth sense presentation

Sixth sense presentationMuhammad_Hassan142

╠²

Pranav Mistry, a PhD student at MIT Media Lab, developed the sixth sense technology. It is a wearable gestural interface that augments the physical world with digital information using hand gestures detected by a camera. The system includes a camera, projector, mirror, and smart phone to allow interactions like making calls, viewing maps, taking photos, and accessing information by pointing at physical objects or surfaces. While promising improved access to information, challenges include privacy and visibility of projections depending on lighting conditions.Sixth sense-final-ppt

Sixth sense-final-pptThedarkangel1

╠²

The document discusses Sixth Sense technology, a wearable gestural interface that enhances the physical world with digital information using natural hand gestures. It outlines the components of the technology, including a camera, projector, and smartphone, and showcases various applications such as making calls, navigating maps, and accessing product information. The system allows for real-time interaction and aims to provide a seamless user interface with intuitive control over digital content.SIXTH SENSE TECHNOLOGY (PRANAV MISTRY) -WEAR YOUR WORLD!!!

SIXTH SENSE TECHNOLOGY (PRANAV MISTRY) -WEAR YOUR WORLD!!!Fathima Mizna Kalathingal

╠²

Sixth Sense Technology, developed by Pranav Mistry, integrates digital information into the physical environment, allowing users to interact with it through natural hand gestures using a portable device comprised of a camera, projector, mirror, smartphone, and color markers. The technology enables various applications, such as making calls, checking maps, and accessing product information, but faces challenges regarding market release, privacy, and health concerns. Despite its advantages in portability and multi-user interaction, further engineering and modifications are necessary before it can be commercially available.Sixth Sense Technology

Sixth Sense TechnologyNavin Kumar

╠²

The document summarizes Sixth Sense technology, a wearable gestural interface that augments physical reality. It consists of a camera, projector, and mirror coupled in a pendant, along with colored markers. The camera tracks hand gestures to interact with projected information on surfaces. Applications include making calls, getting maps/product info, and more, using intuitive hand gestures. Sixth Sense bridges the physical and digital world through natural interactions.sixth sense technology 2014 ,by Richard Des Nieves,Bengaluru,kar,India.

sixth sense technology 2014 ,by Richard Des Nieves,Bengaluru,kar,India.Richard Des Nieves M

╠²

The document presents an overview of Sixth Sense technology, a wearable gestural interface developed by Pranav Mistry that enhances interaction with digital information in the physical world using hand gestures. It details the components, working prototype, applications, advantages, and future enhancements of the technology. Sixth Sense aims to make digital interaction more intuitive and accessible by projecting information onto various surfaces and recognizing gestures.Recently uploaded (20)

A Constitutional Quagmire - Ethical Minefields of AI, Cyber, and Privacy.pdf

A Constitutional Quagmire - Ethical Minefields of AI, Cyber, and Privacy.pdfPriyanka Aash

╠²

A Constitutional Quagmire - Ethical Minefields of AI, Cyber, and PrivacyEnhance GitHub Copilot using MCP - Enterprise version.pdf

Enhance GitHub Copilot using MCP - Enterprise version.pdfNilesh Gule

╠²

║▌║▌▀Ż deck related to the GitHub Copilot Bootcamp in Melbourne on 17 June 2025Curietech AI in action - Accelerate MuleSoft development

Curietech AI in action - Accelerate MuleSoft developmentshyamraj55

╠²

CurieTech AI in Action ŌĆō Accelerate MuleSoft Development

Overview:

This presentation demonstrates how CurieTech AIŌĆÖs purpose-built agents empower MuleSoft developers to create integration workflows faster, more accurately, and with less manual effort

linkedin.com

+12

curietech.ai

+12

meetups.mulesoft.com

+12

.

Key Highlights:

Dedicated AI agents for every stage: Coding, Testing (MUnit), Documentation, Code Review, and Migration

curietech.ai

+7

curietech.ai

+7

medium.com

+7

DataWeave automation: Generate mappings from tables or samplesŌĆö95%+ complete within minutes

linkedin.com

+7

curietech.ai

+7

medium.com

+7

Integration flow generation: Auto-create Mule flows based on specificationsŌĆöspeeds up boilerplate development

curietech.ai

+1

medium.com

+1

Efficient code reviews: Gain intelligent feedback on flows, patterns, and error handling

youtube.com

+8

curietech.ai

+8

curietech.ai

+8

Test & documentation automation: Auto-generate MUnit test cases, sample data, and detailed docs from code

curietech.ai

+5

curietech.ai

+5

medium.com

+5

Why Now?

Achieve 10├Ś productivity gains, slashing development time from hours to minutes

curietech.ai

+3

curietech.ai

+3

medium.com

+3

Maintain high accuracy with code quality matching or exceeding manual efforts

curietech.ai

+2

curietech.ai

+2

curietech.ai

+2

Ideal for developers, architects, and teams wanting to scale MuleSoft projects with AI efficiency

Conclusion:

CurieTech AI transforms MuleSoft development into an AI-accelerated workflowŌĆöletting you focus on innovation, not repetition.UserCon Belgium: Honey, VMware increased my bill

UserCon Belgium: Honey, VMware increased my billstijn40

╠²

VMwareŌĆÖs pricing changes have forced organizations to rethink their datacenter cost management strategies. While FinOps is commonly associated with cloud environments, the FinOps Foundation has recently expanded its framework to include ScopesŌĆöand Datacenter is now officially part of the equation. In this session, weŌĆÖll map the FinOps Framework to a VMware-based datacenter, focusing on cost visibility, optimization, and automation. YouŌĆÖll learn how to track costs more effectively, rightsize workloads, optimize licensing, and drive efficiencyŌĆöall without migrating to the cloud. WeŌĆÖll also explore how to align IT teams, finance, and leadership around cost-aware decision-making for on-prem environments. If your VMware bill keeps increasing and you need a new approach to cost management, this session is for you!The Future of Product Management in AI ERA.pdf

The Future of Product Management in AI ERA.pdfAlyona Owens

╠²

Hi, IŌĆÖm Aly Owens, I have a special pleasure to stand here as over a decade ago I graduated from CityU as an international student with an MBA program. I enjoyed the diversity of the school, ability to work and study, the network that came with being here, and of course the price tag for students here has always been more affordable than most around.

Since then I have worked for major corporations like T-Mobile and Microsoft and many more, and I have founded a startup. I've also been teaching product management to ensure my students save time and money to get to the same level as me faster avoiding popular mistakes. Today as IŌĆÖve transitioned to teaching and focusing on the startup, I hear everybody being concerned about Ai stealing their jobsŌĆ” WeŌĆÖll talk about it shortly.

But before that, I want to take you back to 1997. One of my favorite movies is ŌĆ£Fifth ElementŌĆØ. It wowed me with futuristic predictions when I was a kid and IŌĆÖm impressed by the number of these predictions that have already come true. Self-driving cars, video calls and smart TV, personalized ads and identity scanning. Sci-fi movies and books gave us many ideas and some are being implemented as we speak. But we often get ahead of ourselves:

Flying cars,Colonized planets, Human-like AI: not yet, Time travel, Mind-machine neural interfaces for everyone: Only in experimental stages (e.g. Neuralink).

Cyberpunk dystopias: Some vibes (neon signs + inequality + surveillance), but not total dystopia (thankfully).

On the bright side, we predict that the working hours should drop as Ai becomes our helper and there shouldnŌĆÖt be a need to work 8 hours/day. Nobody knows for sure but we can require that from legislation. Instead of waiting to see what the government and billionaires come up with, I say we should design our own future.

So, we as humans, when we donŌĆÖt know something - fear takes over. The same thing happened during the industrial revolution. In the Industrial Era, machines didnŌĆÖt steal jobsŌĆöthey transformed them but people were scared about their jobs. The AI era is making similar changes except it feels like robots will take the center stage instead of a human. First off, even when it comes to the hottest space in the military - drones, Ai does a fraction of work. AI algorithms enable real-time decision-making, obstacle avoidance, and mission optimization making drones far more autonomous and capable than traditional remote-controlled aircraft. Key technologies include computer vision for object detection, GPS-enhanced navigation, and neural networks for learning and adaptation. But guess what? There are only 2 companies right now that utilize Ai in drones to make autonomous decisions - Skydio and DJI.

Lessons Learned from Developing Secure AI Workflows.pdf

By Priyanka Aash

Securing Account Lifecycles in the Age of Deepfakes.pptx

By FIDO Alliance

Quantum AI: Where Impossible Becomes Probable

By Saikat Basu

Imagine combining the "brains" of Artificial Intelligence (AI) with the "super muscles" of Quantum Computing. That's Quantum AI!

It's a new field that uses the mind-bending rules of quantum physics to make AI even more powerful.

Smarter Aviation Data Management: Lessons from Swedavia Airports and Sweco

By Safe Software

Managing airport and airspace data is no small task, especially when you're expected to deliver it in AIXM format without spending a fortune on specialized tools. But what if there was a smarter, more affordable way?

Join us for a behind-the-scenes look at how Sweco partnered with Swedavia, the Swedish airport operator, to solve this challenge using FME and Esri.

Learn how they built automated workflows to manage periodic updates, merge airspace data, and support data extracts, all while meeting strict government reporting requirements to the Civil Aviation Administration of Sweden.

Even better? Swedavia built custom services and applications that use the FME Flow REST API to trigger jobs and retrieve results, streamlining tasks like securing the quality of new surveyor data, creating permdelta and baseline representations in the AIS schema, and generating AIXM extracts from their AIS data.

To conclude, FME expert Dean Hintz will walk through a GeoBorders reading workflow and highlight recent enhancements to FME's AIXM (Aeronautical Information Exchange Model) processing and interpretation capabilities.

Discover how airports like Swedavia are harnessing the power of FME to simplify aviation data management, and how you can too.

"MPU+: A Transformative Solution for Next-Gen AI at the Edge," a Presentation...

By Edge AI and Vision Alliance

For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/06/mpu-a-transformative-solution-for-next-gen-ai-at-the-edge-a-presentation-from-fotonation/

Petronel Bigioi, CEO of FotoNation, presents the "MPU+: A Transformative Solution for Next-Gen AI at the Edge" tutorial at the May 2025 Embedded Vision Summit.

In this talk, Bigioi introduces MPU+, a novel programmable, customizable low-power platform for real-time, localized intelligence at the edge. The platform includes an AI-augmented image signal processor that enables leading image and video quality.

In addition, it integrates ultra-low-power object and motion detection capabilities to enable always-on computer vision. A programmable neural processor provides the flexibility to efficiently implement new neural networks, and additional specialized engines facilitate image stabilization and audio enhancements.

9-1-1 Addressing: End-to-End Automation Using FME

By Safe Software

This session will cover a common use case for local and state/provincial governments that create and/or maintain their 9-1-1 addressing data, particularly address points and road centerlines. In this session, you'll learn how FME has helped Shelby County 9-1-1 (TN) automate the 9-1-1 addressing process, including automatically assigning attributes from disparate sources, on-the-fly QA/QC of that data, and reporting. The FME logic covered in this presentation includes table joins using attributes and geometry, looping in custom transformers, working with lists, and change detection.

Wenn alles versagt - IBM Tape schützt, was zählt! Und besonders mit dem neust... (When all else fails, IBM Tape protects what counts! And especially with the newest...)

By Josef Weingand

IBM LTO10

WebdriverIO & JavaScript: The Perfect Duo for Web Automation

By digitaljignect

In today's dynamic digital landscape, ensuring the quality and dependability of web applications is essential. While Selenium has been a longstanding solution for automating browser tasks, the integration of WebdriverIO (WDIO) with Selenium and JavaScript marks a significant advancement in automation testing. WDIO enhances the testing process by offering a robust interface that improves test creation, execution, and management. This amalgamation capitalizes on the strengths of both tools, leveraging Selenium's broad browser support and WDIO's modern, efficient approach to test automation. As automation testing becomes increasingly vital for faster development cycles and superior software releases, WDIO emerges as a versatile framework, particularly potent when paired with JavaScript, making it a preferred choice for contemporary testing teams.

OWASP Barcelona 2025 Threat Model Library

By PetraVukmirovic

Threat Model Library Launch at OWASP Barcelona 2025

https://owasp.org/www-project-threat-model-library/

Cracking the Code - Unveiling Synergies Between Open Source Security and AI.pdf

By Priyanka Aash

Coordinated Disclosure for ML - What's Different and What's the Same.pdf

By Priyanka Aash

AI Agents and FME: A How-to Guide on Generating Synthetic Metadata

By Safe Software

In the world of AI agents, semantics is king. Good metadata is thus essential in an organization's AI readiness checklist. But how do we keep up with the massive influx of new data? In this talk, we go over tips and tricks for generating synthetic metadata for consumption by human users and AI agents alike.
