Deep Learning in Python with Tensorflow for Finance

- 1. Learning to Trade with Q-Reinforcement Learning (a TensorFlow and Python focus). Ben Ball & David Samuel, www.prediction-machines.com

- 2. Special thanks to -

- 3. Algorithmic Trading (e.g., HFT) vs Human Systematic Trading. Algorithmic trading: often looking at opportunities existing in the microsecond time horizon; typically using statistical microstructure models and techniques from machine learning; automated, but usually with hand-crafted signals, exploits, and algorithms; predicting the next tick. Human systematic trading: systematic strategies learned from experience of "reading market behavior"; strategies operating over tens of seconds or minutes; predicting complex plays.

- 4. Take inspiration from DeepMind: learning to play Atari video games

- 5. Could we do something similar for trading markets? [Network diagram: Input, FC, ReLU, FC, ReLU, functional pass-through, Output.] *Network images from http://www.asimovinstitute.org/neural-network-zoo/

- 6. Introduction to Reinforcement Learning

- 7. How does a child learn to ride a bike? Lots of this leading to this rather than this . . .

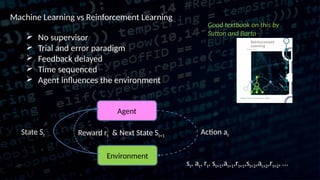

- 8. Machine Learning vs Reinforcement Learning: no supervisor; trial-and-error paradigm; feedback delayed; time sequenced; agent influences the environment. Agent-environment loop: in state s_t the agent takes action a_t, and the environment returns reward r_t and next state s_{t+1}, giving the sequence s_t, a_t, r_t, s_{t+1}, a_{t+1}, r_{t+1}, s_{t+2}, a_{t+2}, r_{t+2}, ... Good textbook on this by Sutton and Barto.

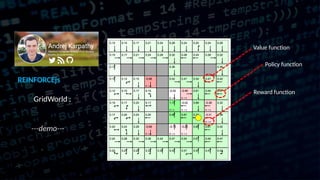
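The agent-environment loop on this slide can be sketched in a few lines of plain Python. This is only an illustration: `CoinFlipEnv`, `run_episode`, and the constant policy are hypothetical names invented here, and the toy environment is far simpler than a market (the agent's actions do not even influence its state).

```python
import random


class CoinFlipEnv:
    """Hypothetical toy environment: the state is a step counter."""

    def __init__(self, horizon=5, seed=0):
        self.horizon = horizon
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t

    def step(self, action):
        # Reward is 1 if the action matches a hidden coin flip, else 0.
        coin = self.rng.randint(0, 1)
        reward = 1.0 if action == coin else 0.0
        self.t += 1
        done = self.t >= self.horizon
        return self.t, reward, done


def run_episode(env, policy):
    """Collect the sequence (s_t, a_t, r_t, s_{t+1}) for one episode."""
    trajectory = []
    state = env.reset()
    done = False
    while not done:
        action = policy(state)
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward, next_state))
        state = next_state
    return trajectory


env = CoinFlipEnv()
trajectory = run_episode(env, policy=lambda state: 0)  # always choose action 0
for s, a, r, s_next in trajectory:
    print(s, a, r, s_next)
```

There is no supervisor telling the agent the correct action; the only feedback is the reward returned after each step.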

- 9. REINFORCEjs GridWorld (demo): value function, policy function, reward function

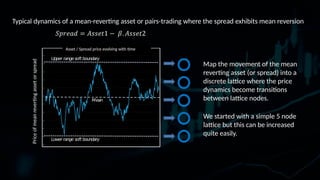
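To make the three functions named on the GridWorld slide concrete, here is a minimal sketch using value iteration on a one-dimensional corridor. It is not the REINFORCEjs demo: the corridor layout, the discount factor, and all names are assumptions chosen for illustration.

```python
# Hypothetical 1-D GridWorld: cells 0..4, with cell 4 as the terminal goal.
N_CELLS = 5
GOAL = 4
ACTIONS = (-1, +1)  # move left / move right
GAMMA = 0.9         # assumed discount factor


def move(state, action):
    """Deterministic transition; walls clamp the agent inside the corridor."""
    return min(max(state + action, 0), N_CELLS - 1)


def reward(state, next_state):
    """Reward function: +1 for stepping into the goal, 0 otherwise."""
    return 1.0 if next_state == GOAL and state != GOAL else 0.0


def action_value(state, action, values):
    next_state = move(state, action)
    return reward(state, next_state) + GAMMA * values[next_state]


# Value function: repeated Bellman backups until the values settle.
values = [0.0] * N_CELLS
for _ in range(50):
    values = [
        0.0 if s == GOAL else max(action_value(s, a, values) for a in ACTIONS)
        for s in range(N_CELLS)
    ]

# Policy function: act greedily with respect to the value function.
policy = [
    None if s == GOAL else max(ACTIONS, key=lambda a: action_value(s, a, values))
    for s in range(N_CELLS)
]

print(values)
print(policy)
```

The values decay geometrically with distance from the goal, and the greedy policy moves right from every non-terminal cell.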

- 11. Typical dynamics of a mean-reverting asset, or of pairs trading where the spread exhibits mean reversion. [Chart: price of the mean-reverting asset or spread evolving with time, with the mean, an upper range soft boundary, and a lower range soft boundary.] Map the movement of the mean-reverting asset (or spread) onto a discrete lattice where the price dynamics become transitions between lattice nodes. We started with a simple 5-node lattice, but this can be increased quite easily.

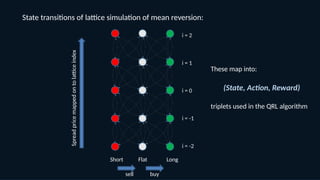
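The mapping from a mean-reverting price onto a 5-node lattice might look like the sketch below. The discrete Ornstein-Uhlenbeck-style dynamics, the parameter values, and the function names are all assumptions made for illustration; the slides do not specify how the lattice nodes were spaced.

```python
import random


def simulate_mean_reverting(n_steps=500, mean=0.0, kappa=0.1, sigma=0.3, seed=42):
    """Discrete-time mean-reverting path (assumed dynamics, not from the slides)."""
    rng = random.Random(seed)
    x = mean
    path = []
    for _ in range(n_steps):
        # Pull back towards the mean plus Gaussian noise.
        x += kappa * (mean - x) + sigma * rng.gauss(0.0, 1.0)
        path.append(x)
    return path


def to_lattice_index(price, mean=0.0, node_width=0.5, max_index=2):
    """Map a price to an integer node in [-max_index, max_index].

    With max_index=2 this gives the 5-node lattice i = -2, -1, 0, 1, 2.
    """
    index = round((price - mean) / node_width)
    return max(-max_index, min(max_index, index))


prices = simulate_mean_reverting()
lattice = [to_lattice_index(p) for p in prices]
print(lattice[:20])
```

Increasing `max_index` (and shrinking `node_width`) gives a finer lattice, which corresponds to the remark that the node count can be increased easily.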

- 12. State transitions of the lattice simulation of mean reversion. Positions: Short, Flat, Long. Spread price mapped onto lattice index: i = -2, -1, 0, 1, 2. Actions: sell, buy. These map into the (State, Action, Reward) triplets used in the QRL algorithm.

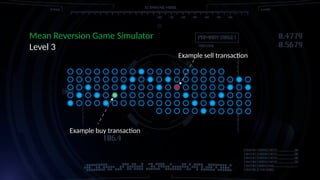
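One way to turn lattice moves into (State, Action, Reward) triplets is sketched below. The reward definition (mark-to-market profit in lattice units on the position held after acting) and the one-step position changes are assumptions; the slides do not state the exact reward used in the simulator.

```python
SHORT, FLAT, LONG = -1, 0, 1
BUY, SELL, HOLD = "buy", "sell", "hold"


def apply_action(position, action):
    """Buy moves one step towards long; sell moves one step towards short."""
    if action == BUY:
        return min(position + 1, LONG)
    if action == SELL:
        return max(position - 1, SHORT)
    return position


def transition(index, position, action, next_index):
    """Return a (state, action, reward) triplet and the next state.

    Assumed reward: position held after acting times the lattice move.
    """
    new_position = apply_action(position, action)
    reward = new_position * (next_index - index)
    return ((index, position), action, reward), (next_index, new_position)


# Hand-written lattice path and actions, purely for illustration.
lattice_path = [0, -1, -2, -1, 0, 1, 2, 1, 0]
actions = [HOLD, HOLD, BUY, HOLD, SELL, HOLD, SELL, HOLD]

position = FLAT
triplets = []
for index, next_index, action in zip(lattice_path, lattice_path[1:], actions):
    triplet, (_, position) = transition(index, position, action, next_index)
    triplets.append(triplet)

total_reward = sum(reward for _, _, reward in triplets)
print(triplets)
print(total_reward)
```

Here the hand-picked actions buy at the lowest node and sell at the highest, which is the behavior a learned policy would be expected to discover on a mean-reverting lattice.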

- 13. Mean Reversion Game Simulator, Level 3. Example buy transaction; example sell transaction.

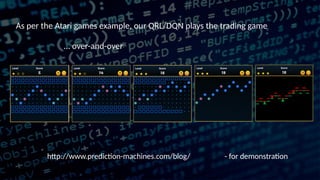

- 14. As per the Atari games example, our QRL/DQN plays the trading game ... over and over. See http://www.prediction-machines.com/blog/ for a demonstration.

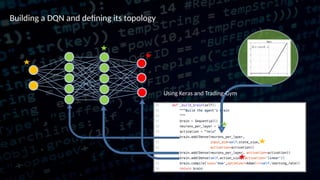

- 15. Building a DQN and defining its topology, using Keras and Trading-Gym

- 16. Double Dueling DQN (vanilla DQN does not converge well, but this method works much better). [Diagram: a training network and a target network, each with layers Input, FC, ReLU, FC, ReLU, functional pass-through, Output.] Inputs: lattice position; (long, short, flat) state. Outputs: value of Buy, value of Sell, value of Do Nothing.

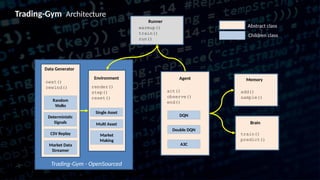
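The dueling part of the network combines a scalar state value with per-action advantages. The sketch below shows only that final aggregation step, using the mean-subtracted form from the dueling-network paper; the numbers are made up, and in the real network both inputs would come from separate FC streams rather than being passed in by hand.

```python
ACTIONS = ("buy", "sell", "do_nothing")


def dueling_q_values(state_value, advantages):
    """Combine V(s) and A(s, a) into Q(s, a) = V(s) + (A(s, a) - mean(A))."""
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + (a - mean_advantage) for a in advantages]


# Illustrative numbers only.
q_values = dueling_q_values(state_value=1.0, advantages=[0.5, -0.5, 0.0])
best_action = ACTIONS[q_values.index(max(q_values))]

print(dict(zip(ACTIONS, q_values)))
print(best_action)
```

Subtracting the mean advantage keeps the split between value and advantage identifiable, since otherwise a constant could be shifted freely between the two streams.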

- 17. Trading-Gym Architecture (open-sourced). Runner: warmup(), train(), run(). Agent (abstract class): act(), observe(), end(); children classes: DQN, Double DQN, A3C. Memory: add(), sample(). Brain: train(), predict(). Data Generator: Random Walks, Deterministic Signals, CSV Replay, Market Data Streamer. Environment: Single Asset, Multi Asset, Market Making. Methods shown for the environment and data generator: render(), step(), reset(), next(), rewind().
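As a rough illustration of the Memory component, here is a minimal replay buffer exposing the `add()` and `sample()` methods named on the slide. Only those two method names come from the slide; the constructor arguments, the tuple layout, and the implementation are assumptions, and this is not the Trading-Gym code.

```python
import random
from collections import deque


class Memory:
    """Minimal fixed-capacity replay buffer (illustrative sketch)."""

    def __init__(self, capacity, seed=None):
        # A deque with maxlen silently drops the oldest experience when full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement, capped at the buffer size.
        batch_size = min(batch_size, len(self.buffer))
        return self.rng.sample(list(self.buffer), batch_size)


memory = Memory(capacity=3, seed=0)
for t in range(5):
    memory.add(t, "hold", 0.0, t + 1, False)

batch = memory.sample(2)
print(batch)
```

An agent's `observe()` would typically call `add()`, and the brain's `train()` would consume batches returned by `sample()`.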

- 18. Prediction Machines release of the Trading-Gym environment into open source (demo)

- 19. Prediction Machines release of the Trading-Gym environment into open source. TensorFlow TradingGym: available now with Brain and DQN example. TensorFlow TradingBrain: released soon.

- 20. References:
  - Insights in Reinforcement Learning (PhD thesis), Hado van Hasselt.
  - Human-level control through deep reinforcement learning. V Mnih, K Kavukcuoglu, D Silver, AA Rusu, J Veness, MG Bellemare, ... Nature 518 (7540), 529-533.
  - Deep Reinforcement Learning with Double Q-Learning. H Van Hasselt, A Guez, D Silver. AAAI, 2094-2100.
  - Prioritized experience replay. T Schaul, J Quan, I Antonoglou, D Silver. arXiv preprint arXiv:1511.05952.
  - Dueling Network Architectures for Deep Reinforcement Learning. Z Wang, T Schaul, M Hessel, H van Hasselt, M Lanctot, N de Freitas. The 33rd International Conference on Machine Learning, 1995-2003.

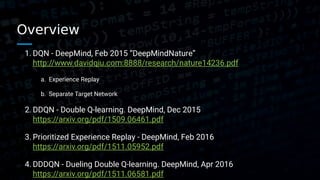

- 22. Overview
  1. DQN - DeepMind, Feb 2015, "DeepMindNature": http://www.davidqiu.com:8888/research/nature14236.pdf
     a. Experience Replay
     b. Separate Target Network
  2. DDQN - Double Q-learning. DeepMind, Dec 2015: https://arxiv.org/pdf/1509.06461.pdf
  3. Prioritized Experience Replay - DeepMind, Feb 2016: https://arxiv.org/pdf/1511.05952.pdf
  4. DDDQN - Dueling Double Q-learning. DeepMind, Apr 2016: https://arxiv.org/pdf/1511.06581.pdf

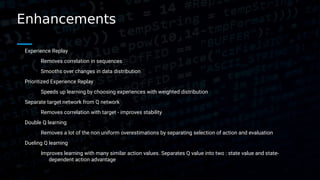

- 23. Enhancements
  - Experience Replay: removes correlation in sequences; smooths over changes in data distribution.
  - Prioritized Experience Replay: speeds up learning by choosing experiences with a weighted distribution.
  - Separate target network from Q network: removes correlation with the target, which improves stability.
  - Double Q-learning: removes a lot of the non-uniform overestimations by separating selection of the action from its evaluation.
  - Dueling Q-learning: improves learning when many action values are similar. Separates the Q value into two parts: state value and state-dependent action advantage.

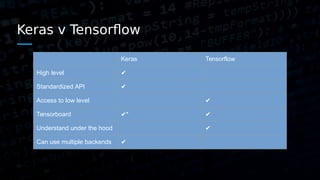
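The difference between the vanilla DQN target and the Double Q-learning target can be shown with plain lists of Q-values. This is a minimal sketch: the discount factor and the numbers are made up, and in practice the two Q-value lists would be the outputs of the training network and the target network for the next state.

```python
GAMMA = 0.99  # assumed discount factor


def vanilla_target(reward, next_q_target, done):
    """Vanilla DQN: the target network both selects and evaluates the action."""
    if done:
        return reward
    return reward + GAMMA * max(next_q_target)


def double_q_target(reward, next_q_online, next_q_target, done):
    """Double DQN: the online network selects, the target network evaluates."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + GAMMA * next_q_target[best_action]


# Illustrative next-state Q-values for (buy, sell, do nothing).
next_q_online = [1.0, 2.0, 0.5]
next_q_target = [1.2, 0.8, 3.0]

vanilla = vanilla_target(1.0, next_q_target, done=False)
double = double_q_target(1.0, next_q_online, next_q_target, done=False)
print(vanilla, double)
```

In this example the target network's largest value (3.0) belongs to an action the online network rates lowest, so the vanilla target is much larger than the double target. Decoupling selection from evaluation is what damps that kind of overestimation.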

- 24. Keras v TensorFlow. Columns: Keras, TensorFlow. Rows: High level ✓; Standardized API ✓; Access to low level ✓; TensorBoard ✓* ✓; Understand under the hood ✓; Can use multiple backends ✓.

- 25. Install TensorFlow. My installation was on CentOS in Docker with GPU*, but I also installed it locally on Ubuntu 16 for this demo. *Built from source for maximum speed. CentOS instructions were adapted from: https://blog.abysm.org/2016/06/building-tensorflow-centos-6/ Ubuntu install was from: https://www.tensorflow.org/install/install_sources

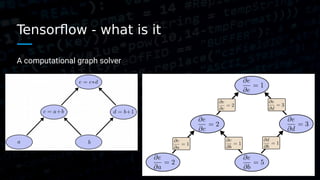

- 26. TensorFlow: what is it? A computational graph solver.

- 27. TensorFlow key API. Namespaces for organizing the graph and showing it in TensorBoard: `with tf.variable_scope('prediction'):`. Sessions: `with tf.Session() as sess:`. Create variables and placeholders: `var = tf.placeholder('int32', [None, 2, 3], name='varname')` and `self.global_step = tf.Variable(0, trainable=False)`. Use `Session.run` or `variable.eval` to run parts of the graph and retrieve values: `pred_action = self.q_action.eval({self.s_t['p']: s_t_plus_1})` and `q_t, loss = self.sess.run([q['p'], loss], {target_q_t: target_q_t, action: action})`.

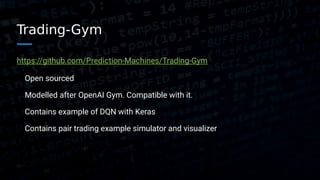

- 28. Trading-Gym: https://github.com/Prediction-Machines/Trading-Gym. Open sourced. Modelled after OpenAI Gym and compatible with it. Contains an example of DQN with Keras. Contains a pair trading example simulator and visualizer.

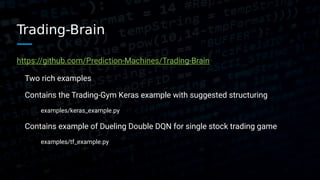

- 29. Trading-Brain: https://github.com/Prediction-Machines/Trading-Brain. Two rich examples. examples/keras_example.py contains the Trading-Gym Keras example with suggested structuring. examples/tf_example.py contains an example of Dueling Double DQN for a single stock trading game.

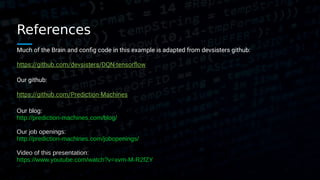

- 30. References. Much of the Brain and config code in this example is adapted from the devsisters GitHub: https://github.com/devsisters/DQN-tensorflow. Our GitHub: https://github.com/Prediction-Machines. Our blog: http://prediction-machines.com/blog/. Our job openings: http://prediction-machines.com/jobopenings/. Video of this presentation: https://www.youtube.com/watch?v=xvm-M-R2fZY
