Graph Neural Network (???)


Graph Neural Network? ???? ??? ??? ?? ???????.



- 2. ???? ?...
  - GCN(Graph Convolutional Network)? ?? ???? ????
    - ??? ? ??…
  - GNN? ?? ??? ???? ????

- 3. Graph Neural Network??
  - Graph ??? ??? ?? Neural Network
    - ??: Graph
    - ??: Label
      - ??(0.95, 0.81, 0.4, …)
      - ??? (Protein, Carbon-dioxide, etc.)
      - ?? (Drug/Not Drug, etc.)

- 4. ? Graph Neural Network?
  - ??? ??? ?? ??? ?? ??
    - RNN? ?? Cell?? Recursive? ??? ??
  - ????? ???? ??
    - ?? ??? ?? Connection
    - ????? ??? ?? ???? ?? ??? ??

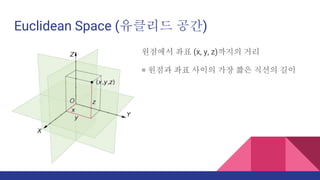

- 5. Euclidean Space (???? ??) ???? ?? (x, y, z)??? ?? = ??? ?? ??? ?? ?? ??? ??

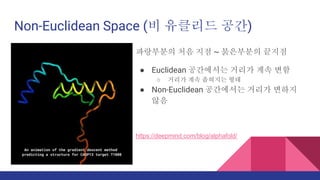

- 6. Non-Euclidean Space (? ???? ??) ????? ?? ?? ~ ????? ???
  - Euclidean ????? ??? ?? ??
    - ??? ?? ???? ??
  - Non-Euclidean ????? ??? ??? ?? https://deepmind.com/blog/alphafold/

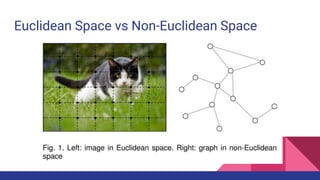

- 7. Euclidean Space vs Non-Euclidean Space

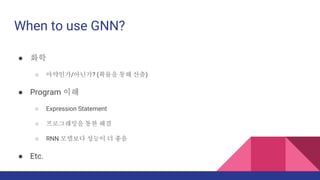

- 8. When to use GNN?
  - ??
    - ????/???? (??? ?? ??)
  - Program ??
    - Expression Statement
    - ?????? ?? ??
    - RNN ???? ??? ? ??
  - Etc.

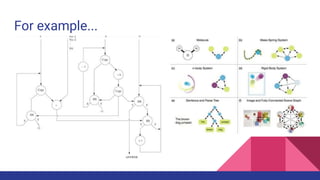

- 10. For example...

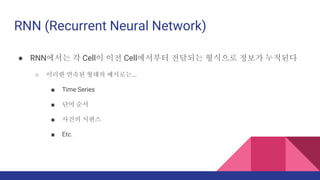

- 11. RNN (Recurrent Neural Network)
  - RNN??? ? Cell? ?? Cell???? ???? ???? ??? ????
    - ??? ??? ??? ????...
      - Time Series
      - ?? ??
      - ??? ???
      - Etc.

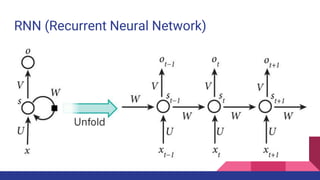

- 12. RNN (Recurrent Neural Network)

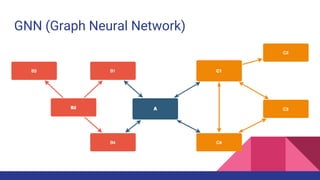

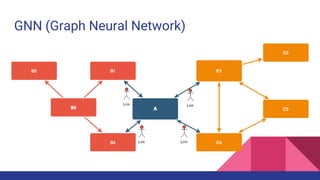

- 13. GNN (Graph Neural Network)

- 14. GNN (Graph Neural Network)

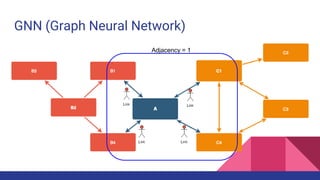

- 15. GNN (Graph Neural Network) Adjacency = 1

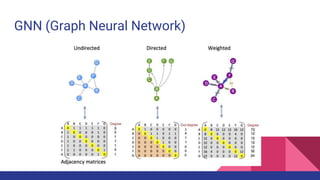

- 16. GNN (Graph Neural Network)

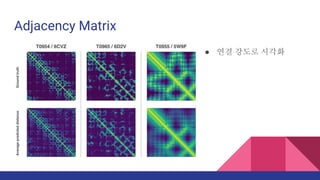

- 17. Adjacency Matrix
  - ?? ??? ???

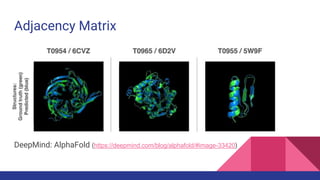

- 18. Adjacency Matrix
  - DeepMind: AlphaFold (https://deepmind.com/blog/alphafold/#image-33420)

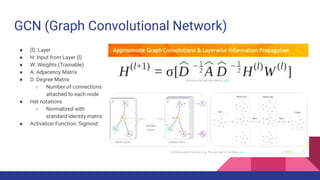
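The adjacency matrix on slides 17 and 18 can be made concrete with a small sketch. It uses plain Python lists; the function names and the 4-node example graph are hypothetical and not taken from the slides. Entry `A[i][j]` is 1 when nodes `i` and `j` share an edge and 0 otherwise, so an undirected graph gives a symmetric matrix.

```python
def adjacency_matrix(num_nodes, edges):
    """Build a symmetric 0/1 adjacency matrix for an undirected graph."""
    A = [[0] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        A[u][v] = 1
        A[v][u] = 1  # undirected graph: mirror the entry
    return A


def degrees(A):
    """Degree of each node = number of edges attached to it (row sum)."""
    return [sum(row) for row in A]


# Hypothetical example graph: the path 0-1-2-3 plus the extra edge 1-3.
EDGES = [(0, 1), (1, 2), (2, 3), (1, 3)]
A = adjacency_matrix(4, EDGES)

for row in A:
    print(row)
print("degrees:", degrees(A))
```

The row sums give the diagonal of the degree matrix D that appears on the GCN slide.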

- 19. GCN (Graph Convolutional Network)
  - (l): Layer index
  - H: Input to layer (l), i.e. the node features produced by the previous layer
  - W: Weights (trainable)
  - A: Adjacency matrix
  - D: Degree matrix
    - Number of connections attached to each node
  - Hat notation
    - The matrix with the identity matrix added (a self-loop on every node), then used for normalization
  - Activation function: Sigmoid

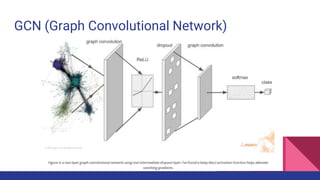
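The symbols on slide 19 fit together as one layer update. The sketch below assumes the formula in the slide image is the standard propagation rule from the GCN paper (Kipf and Welling, 2017), with the sigmoid activation named on the slide. The function names, the example graph, and the random weights are illustrative assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gcn_layer(A, H, W):
    """One GCN layer: H_next = sigmoid(D_hat^-1/2 @ A_hat @ D_hat^-1/2 @ H @ W).

    A: (n, n) adjacency matrix, H: (n, f_in) node features,
    W: (f_in, f_out) trainable weights.
    """
    A_hat = A + np.eye(A.shape[0])         # hat notation: add self-loops
    d_hat = A_hat.sum(axis=1)              # degrees of A_hat (always >= 1)
    D_inv_sqrt = np.diag(d_hat ** -0.5)    # D_hat^-1/2
    A_norm = D_inv_sqrt @ A_hat @ D_inv_sqrt
    return sigmoid(A_norm @ H @ W)


# Hypothetical 4-node graph with one-hot node features.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 1],
              [0, 1, 0, 1],
              [0, 1, 1, 0]], dtype=float)
H0 = np.eye(4)
rng = np.random.default_rng(0)
W0 = rng.normal(size=(4, 2))               # illustrative random weights

H1 = gcn_layer(A, H0, W0)
print(H1.shape)                            # one 2-dimensional vector per node
```

Each output row is a degree-normalized weighted sum over the node itself and its direct neighbours, which is why adding the identity matters: without it a node's own features would drop out of its update.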

- 20. GCN (Graph Convolutional Network)

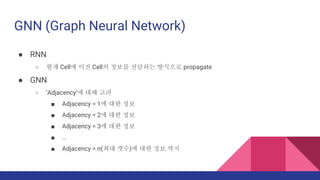

- 21. GNN (Graph Neural Network)
  - RNN
    - ?? Cell? ?? Cell? ??? ???? ???? propagate
  - GNN
    - 'Adjacency'? ?? ??
      - Adjacency = 1? ?? ??
      - Adjacency = 2? ?? ??
      - Adjacency = 3? ?? ??
      - …
      - Adjacency = n(?? ??)? ?? ?? ??

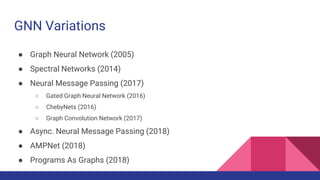
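Slide 21 lists nodes by "Adjacency = 1, 2, 3, …, n". Assuming this means the number of hops between a node and its neighbours, the grouping can be sketched with a breadth-first search over the adjacency matrix. The function names and the example graph are hypothetical.

```python
from collections import deque


def hop_distances(A, source):
    """Breadth-first search; returns {node: hop count} for reachable nodes."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v, connected in enumerate(A[u]):
            if connected and v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def nodes_by_hop(A, source):
    """Group reachable nodes by their hop distance from `source`."""
    groups = {}
    for node, d in hop_distances(A, source).items():
        groups.setdefault(d, []).append(node)
    return {d: sorted(nodes) for d, nodes in sorted(groups.items())}


# Hypothetical 4-node graph: the path 0-1-2-3 plus the extra edge 1-3.
A = [[0, 1, 0, 0],
     [1, 0, 1, 1],
     [0, 1, 0, 1],
     [0, 1, 1, 0]]

print(nodes_by_hop(A, 0))  # {0: [0], 1: [1], 2: [2, 3]}
```

In a GCN-style model, one layer mixes information from direct neighbours only, so stacking k layers lets a node receive information from nodes up to k hops away.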

- 22. GNN Variations
  - Graph Neural Network (2005)
  - Spectral Networks (2014)
  - Neural Message Passing (2017)
    - Gated Graph Neural Network (2016)
    - ChebyNets (2016)
    - Graph Convolutional Network (2017)
  - Async. Neural Message Passing (2018)
  - AMPNet (2018)
  - Programs As Graphs (2018)

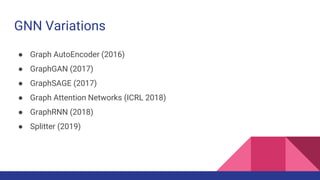

- 23. GNN Variations
  - Graph AutoEncoder (2016)
  - GraphGAN (2017)
  - GraphSAGE (2017)
  - Graph Attention Networks (ICLR 2018)
  - GraphRNN (2018)
  - Splitter (2019)

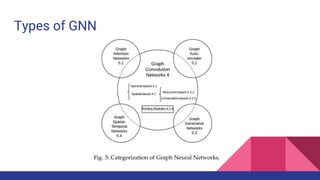

- 24. Types of GNN

- 25. Datasets for GNN
  - https://github.com/shiruipan/graph_datasets
  - Not listed in the link above, but Zachary's karate club is a commonly used social network dataset. (https://towardsdatascience.com/how-to-do-deep-learning-on-graphs-with-graph-convolutional-networks-7d2250723780)

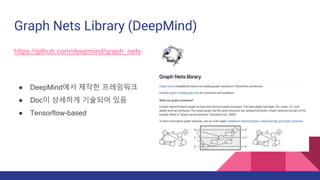

- 26. Graph Nets Library (DeepMind)
  - https://github.com/deepmind/graph_nets
  - DeepMind?? ??? ?????
  - Doc? ???? ???? ??
  - TensorFlow-based

- 27. DGL (Deep Graph Library)
  - https://www.dgl.ai/
  - ?? ??? (2018)
  - NYU, NYU-???, Amazon ?? ??

- 28. PyTorch Geometric
  - https://github.com/rusty1s/pytorch_geometric
  - PyTorch Extension
    - ?? ?? X
  - DGL?? ?? 15? ?? ? ??? ??? ??

- 29. Geometric Deep Learning
  - http://geometricdeeplearning.com/
  - Not a framework
  - Many references for GNN (not a lot of descriptions)

- 30. References
  - Microsoft - Graph Neural Networks: Variations and Applications (https://www.youtube.com/watch?v=cWIeTMklzNg)
  - Graph Theory - Adjacency Matrices (https://www.ebi.ac.uk/training/online/course/network-analysis-protein-interaction-data-introduction/introduction-graph-theory/graph-0)
  - DeepMind - AlphaFold (https://deepmind.com/blog/alphafold/)