Introduction to Fundamentals of RNNs

- 1. RNNs: Under the Hood / On the Surface. Elvis Saravia

- 2. Icebreaker: Can we predict the future based on our current decisions? "Which research direction should I take?"

- 3. Outline
  - Part 1: Review Neural Network Essentials
  - Part 2: Sequential Modeling
  - Part 3: Introduction to Recurrent Neural Networks
  - Part 4: RNNs with TensorFlow

- 4. Part 1: The Neural Network

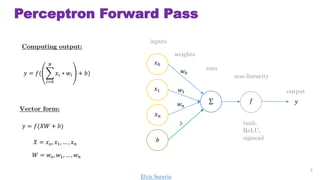

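- 5. Perceptron Forward Pass: the inputs $x_0, x_1, \dots, x_N$ are multiplied by the weights $w_0, w_1, \dots, w_N$, summed together with a bias $b$, and passed through a non-linearity $\phi$ (tanh, ReLU, sigmoid) to produce the output $y$.
  - Computing output: $y = \phi\left(\sum_{i=0}^{N} x_i w_i + b\right)$
  - Vector form: $y = \phi(X \cdot W + b)$, with $X = [x_0, x_1, \dots, x_N]$ and $W = [w_0, w_1, \dots, w_N]$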

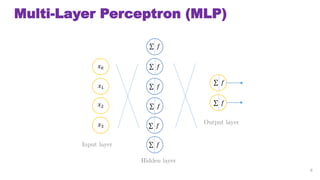
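
The forward pass above can be sketched in a few lines of NumPy. This is only an illustration: the function name `perceptron_forward` and the input, weight, and bias values are made up for the example and do not come from the slides.

```python
import numpy as np

def perceptron_forward(x, w, b, activation=np.tanh):
    """Return activation(x . w + b) for a single input vector x."""
    z = np.dot(x, w) + b  # weighted sum of the inputs plus the bias
    return activation(z)  # non-linearity (tanh by default)

# Hypothetical values, chosen so that the weighted sum is exactly 0.
x = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -0.25, 0.0])
b = 0.0

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

y_tanh = perceptron_forward(x, w, b)                         # tanh(0)
y_sigmoid = perceptron_forward(x, w, b, activation=sigmoid)  # sigmoid(0)
print(y_tanh, y_sigmoid)
```

Swapping the `activation` argument is all it takes to move between tanh, sigmoid, or a ReLU such as `lambda z: np.maximum(z, 0.0)`.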

- 6. Multi-Layer Perceptron (MLP). Figure: an input layer with four inputs, one hidden layer, and an output layer; every neuron computes a weighted sum followed by a non-linearity.

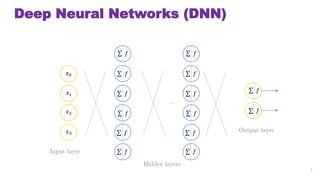

- 7. Deep Neural Networks (DNN). Figure: an input layer with four inputs, several hidden layers, and an output layer; every neuron computes a weighted sum followed by a non-linearity.

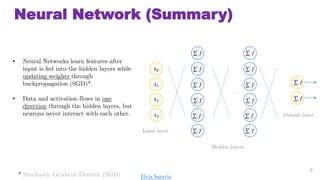

- 8. Neural Network (Summary)
  - Neural networks learn features as the input is fed through the hidden layers, while the weights are updated through backpropagation with Stochastic Gradient Descent (SGD).
  - Data and activations flow in one direction through the hidden layers, and neurons in the same layer never interact with each other.

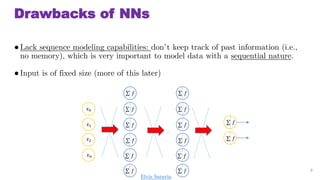

- 9. Drawbacks of NNs
  - They lack sequence modeling capabilities: they don't keep track of past information (i.e., no memory), which is very important for modeling data with a sequential nature.
  - The input is of fixed size (more on this later).

- 10. Part 2: Sequential Modeling

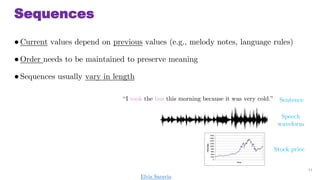

- 11. Sequences. Examples: a stock price, a speech waveform, a sentence ("I took the bus this morning because it was very cold.").
  - Current values depend on previous values (e.g., melody notes, language rules).
  - Order needs to be maintained to preserve meaning.
  - Sequences usually vary in length.

- 12. Modeling Sequences: How to represent a sequence? With a Bag of Words (BOW), "I love the coldness" becomes [ 1 1 0 1 0 0 1 ], which is then fed to the network. What's the problem with the BOW representation?

- 13. Problem with BOW: Bag of words does not preserve order, so meaning that depends on word order cannot be captured. "The food was good, not bad at all" vs. "The food was bad, not good at all": both sentences map to the same vector, [ 1 1 0 1 1 0 1 0 1 0 0 1 1 ]. How can we differentiate the meaning of the two sentences?
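
A small sketch makes the collision concrete. The tokenizer and the vocabulary below are assumptions made for the example (the slide's 13-entry vector comes from a larger vocabulary that is not listed), so the vectors here are shorter, but the effect is the same.

```python
import re
from collections import Counter

def tokenize(sentence):
    """Lowercase the sentence and keep alphabetic tokens only."""
    return re.findall(r"[a-z]+", sentence.lower())

def bag_of_words(sentence, vocabulary):
    """Count how often each vocabulary word occurs; word order is discarded."""
    counts = Counter(tokenize(sentence))
    return [counts[word] for word in vocabulary]

s1 = "The food was good, not bad at all"
s2 = "The food was bad, not good at all"

# Assumed vocabulary: the sorted set of words seen in the two sentences.
vocabulary = sorted(set(tokenize(s1) + tokenize(s2)))

v1 = bag_of_words(s1, vocabulary)
v2 = bag_of_words(s2, vocabulary)
print(v1 == v2)  # the two opposite reviews are indistinguishable
```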

- 14. One-Hot Encoding: Preserve order by maintaining order within the feature vector. "On Monday it was raining" becomes [ 0 0 0 1 0 0 0 1 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 1 ]. We preserved order, but what is the problem here?

- 15. Problem with One-Hot Encoding: One-hot encoding cannot deal with variations of the same sequence.
  - "On Monday it was raining": [ 0 0 0 1 0 0 0 1 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 1 ]
  - "It was raining on Monday": [ 1 0 0 0 0 0 1 0 0 0 0 0 0 0 1 0 0 0 1 0 0 0 1 0 0 ]

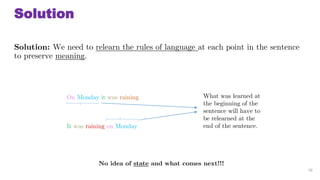
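
The two vectors on the one-hot slides can be reproduced with a short sketch. The vocabulary order used here (`it, was, monday, on, raining`) is inferred from the vectors shown on the slides and is otherwise an assumption; the function name is made up for the example.

```python
def one_hot_sequence(sentence, vocabulary):
    """Concatenate one one-hot vector per word, in sentence order."""
    index = {word: i for i, word in enumerate(vocabulary)}
    encoded = []
    for token in sentence.lower().split():
        vector = [0] * len(vocabulary)
        vector[index[token]] = 1  # mark the position of this word
        encoded.extend(vector)
    return encoded

# Vocabulary order inferred from the slide's vectors (an assumption).
vocabulary = ["it", "was", "monday", "on", "raining"]

v1 = one_hot_sequence("On Monday it was raining", vocabulary)
v2 = one_hot_sequence("It was raining on Monday", vocabulary)

# Same words, same meaning, but the two encodings disagree position by position.
print(v1)
print(v2)
print(v1 == v2)
```

Because each word's block sits at a position tied to where the word appears, a model reading these vectors sees the two paraphrases as unrelated inputs.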

- 16. Solution: We need to relearn the rules of language at each point in the sentence to preserve meaning. Compare "On Monday it was raining" with "It was raining on Monday": there is no idea of state or of what comes next! What was learned at the beginning of the sentence has to be relearned at the end of the sentence.

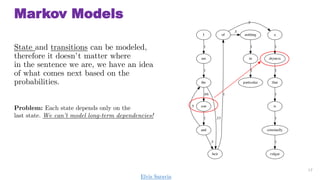

- 17. Markov Models: States and transitions can be modeled, so no matter where we are in the sentence, we have an idea of what comes next based on the transition probabilities. Problem: each state depends only on the previous state, so we can't model long-term dependencies!
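
As a rough sketch of the idea, a first-order (bigram) Markov model over words can be estimated by counting transitions. The tiny corpus below is hypothetical (its third sentence is invented for the example), and `fit_bigram_model` is an illustrative name, not something from the slides.

```python
from collections import Counter, defaultdict

def fit_bigram_model(sentences):
    """Estimate P(next word | current word) from transition counts."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    model = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        model[current] = {w: c / total for w, c in followers.items()}
    return model

# Hypothetical toy corpus.
corpus = [
    "on monday it was raining",
    "it was raining on monday",
    "it was cold on monday",
]
model = fit_bigram_model(corpus)

# The prediction after "was" is the same wherever "was" occurs in a sentence,
# but it can only look one word back.
print(model["was"])
```

The limitation named on the slide is visible in the data structure itself: the model is keyed on a single previous word, so nothing earlier in the sentence can influence the prediction.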

- 18. Long-term dependencies: We need information from the far past and future to accurately model sequences. Example: "In Italy, I had a great time and I learnt some of the _____ language"

- 19. It's time for Recurrent Neural Networks (RNNs)!

- 20. Part 3: Recurrent Neural Networks (RNNs)

- 21. Recurrent Neural Networks (RNNs)
  - RNNs model sequential information by assuming long-term dependencies between elements of a sequence.
  - RNNs maintain word order and share parameters across the sequence (i.e., no need to relearn rules).
  - RNNs are recurrent because they perform the same task for every element of a sequence, with the output depending on the previous computations.
  - RNNs memorize information that has been computed so far, so they can, in principle, handle long-term dependencies.

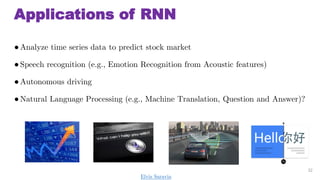

- 22. Applications of RNNs
  - Analyzing time series data to predict the stock market
  - Speech recognition (e.g., emotion recognition from acoustic features)
  - Autonomous driving
  - Natural Language Processing (e.g., machine translation, question answering)

- 23. Some examples
  - Google Magenta Project (melody composer): https://magenta.tensorflow.org
  - Sentence Generator: http://goo.gl/onkPNd
  - Image Captioning: http://goo.gl/Nwx7Kh

- 24. RNNs Main Components
  - Recurrent neurons
  - Unrolling recurrent neurons
  - Layer of recurrent neurons
  - Memory cell containing hidden state

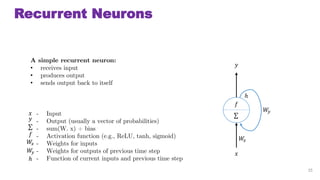

- 25. Recurrent Neurons. A simple recurrent neuron receives input, produces output, and sends its output back to itself. Legend:
  - $x$: input
  - $y$: output (usually a vector of probabilities)
  - $\Sigma$: sum(W · x) + bias
  - $\phi$: activation function (e.g., ReLU, tanh, sigmoid)
  - $W_x$: weights for inputs
  - $W_y$: weights for outputs of the previous time step
  - $y_t$: function of the current inputs and the previous time step

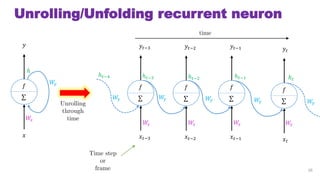

- 26. Unrolling/Unfolding a recurrent neuron. Figure: the neuron unrolled through time, one copy per time step (or frame) from $t-3$ to $t$; each copy receives the input $x_t$ and the previous output $y_{t-1}$ and produces $y_t$.

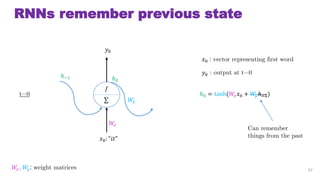

- 27. RNNs remember previous state (t=0): $y_0 = \tanh(W_x x_0 + W_y y_{-1})$, where $x_0$ is the vector representing the first word, $W_x, W_y$ are weight matrices, and $y_0$ is the output at t=0. Through $y_{-1}$ the network can remember things from the past.

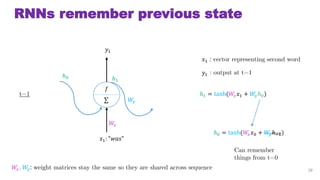

- 28. RNNs remember previous state (t=1): $y_1 = \tanh(W_x x_1 + W_y y_0)$, with $y_0 = \tanh(W_x x_0 + W_y y_{-1})$, where $x_1$ is the vector representing the second word and $y_1$ is the output at t=1. The weight matrices $W_x, W_y$ stay the same, so they are shared across the sequence. Through $y_0$ the network can remember things from t=0.
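
The two time steps above can be traced with a minimal NumPy sketch. Everything concrete here is an assumption made for illustration: the sizes, the random weights, the one-hot word vectors, and the choice of zeros for the initial state $y_{-1}$.

```python
import numpy as np

rng = np.random.default_rng(0)
n_inputs, n_units = 3, 4

# Shared weight matrices (random stand-ins for learned weights).
W_x = rng.normal(scale=0.5, size=(n_units, n_inputs))
W_y = rng.normal(scale=0.5, size=(n_units, n_units))

def rnn_step(x_t, y_prev):
    """One step: y_t = tanh(W_x x_t + W_y y_{t-1})."""
    return np.tanh(W_x @ x_t + W_y @ y_prev)

x_0 = np.array([1.0, 0.0, 0.0])  # hypothetical vector for the first word
x_1 = np.array([0.0, 1.0, 0.0])  # hypothetical vector for the second word
y_init = np.zeros(n_units)       # y_{-1}, assumed to be all zeros

y_0 = rnn_step(x_0, y_init)
y_1 = rnn_step(x_1, y_0)         # the same W_x and W_y are reused

# Feeding x_1 without the memory of y_0 gives a different output,
# which is what "remembering t=0" means here.
y_1_no_memory = rnn_step(x_1, y_init)
print(np.allclose(y_1, y_1_no_memory))
```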

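- 29. Overview. Figure: a recurrent neuron unrolled through time from $t-3$ to $t$. For a single neuron the output at each time step is a scalar: $y_t = \phi(x_t \cdot w_x + y_{t-1} w_y + b)$. Example batch of four input instances at two time steps: [0,1,2] [3,4,5] [6,7,8] [9,0,1] and [9,8,7] [0,0,0] [6,5,4] [3,2,1].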

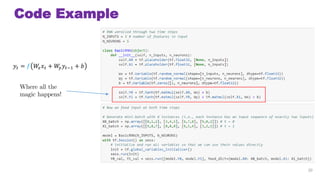

- 30. Code Example. Where all the magic happens: $y_t = \phi(x_t \cdot w_x + y_{t-1} w_y + b)$

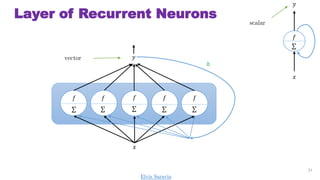

- 31. Layer of Recurrent Neurons. Figure: several recurrent neurons side by side; a single neuron outputs a scalar, while the layer outputs a vector.

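- 32. Unrolling Layer. Figure: a layer of recurrent neurons unrolled through time, with inputs $X_0, X_1, X_2$ and outputs $Y_0, Y_1, Y_2$; example values shown are [1,2,3] [1,2,3] [1,2,3] and [4,5,6] [7,8,9] [0,0,0].
  - Vector form: $Y_t = \phi(X_t \cdot W_x + Y_{t-1} \cdot W_y + b)$
  - Equivalent concatenated form: $Y_t = \phi([X_t \; Y_{t-1}] \cdot W + b)$, with $W = \begin{bmatrix} W_x \\ W_y \end{bmatrix}$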
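
A NumPy sketch of the vector form, using the batch values from the Overview slide. Treating the first group of four instances as time step 0 and the second as time step 1 is an assumption, as are the layer size, the random weights, tanh as $\phi$, and zeros for the state before the first step.

```python
import numpy as np

rng = np.random.default_rng(42)
n_inputs, n_neurons = 3, 5

# Random stand-ins for learned parameters.
W_x = rng.normal(size=(n_inputs, n_neurons))
W_y = rng.normal(size=(n_neurons, n_neurons))
b = np.zeros(n_neurons)

# Mini-batch of 4 instances, 3 features each, at two time steps.
X0 = np.array([[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 0, 1]], dtype=float)
X1 = np.array([[9, 8, 7], [0, 0, 0], [6, 5, 4], [3, 2, 1]], dtype=float)

# Y_t = phi(X_t . W_x + Y_{t-1} . W_y + b); the state before t=0 is zero.
Y0 = np.tanh(X0 @ W_x + b)
Y1 = np.tanh(X1 @ W_x + Y0 @ W_y + b)

# Concatenated form: Y_t = phi([X_t Y_{t-1}] . W + b), W = [W_x; W_y].
W = np.vstack([W_x, W_y])
Y1_concat = np.tanh(np.hstack([X1, Y0]) @ W + b)

print(Y1.shape)                    # one row per instance, one column per neuron
print(np.allclose(Y1, Y1_concat))  # both forms agree
```

Stacking `W_x` on top of `W_y` turns the two matrix products into one, which is why the two equations on the slide describe the same computation.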

- 33. Variations of RNNs: Input / Output
  - Sequence to sequence: stock price, other time series
  - Sequence to vector: sentiment analysis ([-1,+1]), other classification tasks; the output is a vector of probabilities over classes (a.k.a. softmax)
  - Vector to sequence: image captioning
  - Encoder-Decoder: translation; the encoder (E) is sequence to vector, the decoder (D) is vector to sequence

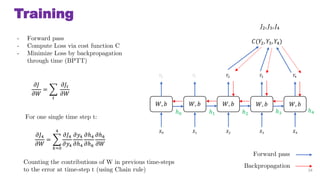

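- 34. Training. Figure: the network unrolled over inputs $x_0, \dots, x_4$ with outputs $y_0, \dots, y_4$; the same parameters $W, b$ are used at every time step, and the cost $C(y_2, y_3, y_4)$ is computed on the last three outputs.
  - Forward pass
  - Compute the loss via the cost function $C$
  - Minimize the loss by backpropagation through time (BPTT): $\frac{\partial C}{\partial W} = \sum_t \frac{\partial C_t}{\partial W}$
  - For one single time step (here t=4): $\frac{\partial C_4}{\partial W} = \sum_{k=0}^{4} \frac{\partial C_4}{\partial y_4} \frac{\partial y_4}{\partial h_4} \frac{\partial h_4}{\partial h_k} \frac{\partial h_k}{\partial W}$, where $h_k$ is the hidden state at step $k$. This counts the contributions of $W$ in previous time steps to the error at time step t (using the chain rule).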

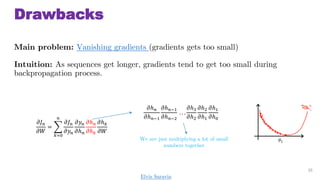

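- 35. Drawbacks. Main problem: vanishing gradients (gradients get too small). Intuition: as sequences get longer, gradients tend to get too small during the backpropagation process.
  - $\frac{\partial C_t}{\partial W} = \sum_{k=0}^{t} \frac{\partial C_t}{\partial y_t} \frac{\partial y_t}{\partial h_t} \frac{\partial h_t}{\partial h_k} \frac{\partial h_k}{\partial W}$
  - For $k = 0$: $\frac{\partial h_t}{\partial h_0} = \frac{\partial h_t}{\partial h_{t-1}} \frac{\partial h_{t-1}}{\partial h_{t-2}} \cdots \frac{\partial h_3}{\partial h_2} \frac{\partial h_2}{\partial h_1} \frac{\partial h_1}{\partial h_0}$
  - We are just multiplying a lot of small numbers together.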
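
The "multiplying a lot of small numbers" intuition can be checked numerically on a toy case. This sketch assumes a scalar RNN $h_t = \tanh(w\,h_{t-1} + u\,x_t)$, for which each factor of the chain is $\partial h_t / \partial h_{t-1} = w\,(1 - h_t^2)$; the weights, the random inputs, and the function name are all made up for the example.

```python
import numpy as np

def state_gradient_magnitudes(w, u, inputs):
    """Track |d h_t / d h_init| for a scalar tanh RNN, step by step."""
    h = 0.0      # initial hidden state
    grad = 1.0   # running product of the per-step factors
    magnitudes = []
    for x_t in inputs:
        h = np.tanh(w * h + u * x_t)
        grad *= w * (1.0 - h ** 2)  # chain rule factor d h_t / d h_{t-1}
        magnitudes.append(abs(grad))
    return magnitudes

rng = np.random.default_rng(1)
inputs = rng.normal(size=50)  # hypothetical sequence of 50 scalar inputs

# With |w| < 1 every factor is smaller than 1 in magnitude,
# so the product shrinks at least geometrically.
magnitudes = state_gradient_magnitudes(w=0.5, u=1.0, inputs=inputs)
print(magnitudes[0], magnitudes[9], magnitudes[-1])
```

After 50 steps the influence of the initial state on the current state is numerically negligible, which is why the earliest time steps contribute almost nothing to the weight update.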

- 36. Solutions: Long Short-Term Memory (LSTM) networks deal with the vanishing gradient problem and are therefore more reliable for modeling long-term dependencies, especially in very long sequences.

- 37. RNN Extensions. Extended readings:
  - Bidirectional RNNs: passing states in both directions
  - Deep (Bidirectional) RNNs: stacking RNNs
  - LSTM networks: an adaptation of RNNs

- 38. In general
  - RNNs are great for analyzing sequences of arbitrary length.
  - RNNs are considered "anticipatory" models.
  - RNNs are also considered creative learning models, as they can, for example, predict a set of musical notes to play next in a melody and select an appropriate one.

- 39. Part 4: RNNs in TensorFlow

- 40. Demo
  - Building RNNs in TensorFlow
  - Training RNNs in TensorFlow
  - Image Classification
  - Text Classification

- 41. References
  - Introduction to RNNs: http://www.wildml.com/2015/09/recurrent-neural-networks-tutorial-part-1-introduction-to-rnns/
  - NTHU Machine Learning Course: https://goo.gl/B4EqMi
  - Hands-On Machine Learning with Scikit-Learn and TensorFlow (book)
