Applied AI - 2017-07-11 - Learning From Sets

- 1. LEARNING FROM SETS ANDREW CLEGG

- 2. IN A NUTSHELL: ABOUT ME
  - Yelp (starting next week!)
  - Etsy, Pearson, Last.fm, AstraZeneca, consulting
  - Bioinformatics, information retrieval, natural language processing (UCL/Birkbeck)
  - Main interests: search, recommendations, personalization
  - @andrew_clegg
  - http://andrewclegg.org/

- 3. LEARNING DEEP REPRESENTATIONS FOR UNORDERED ITEM SETS

- 4. LEARNING FROM ITEM COLLECTIONS: PROBLEM STATEMENT
  - A lot of real-world data consists of collections of objects
  - User's session on a website (list of events)
  - Products in a shopping cart (bag of items)
  - Product titles (list of words)
  - Songs played in a user's history (list of items)
  - Movies liked in a user's signup flow (set of items)

- 5. LEARNING FROM ITEM COLLECTIONS: PROBLEM STATEMENT
  - A lot of real-world data consists of collections of objects
  - User's session on a website (list of events): ORDERED
  - Products in a shopping cart (bag of items): ORDERED OR NOT
  - Product titles (list of words): ORDERED... OR NOT?
  - Songs played in a user's history (list of items): ORDERED
  - Movies liked in a user's signup flow (set of items): UNORDERED

- 6. LEARNING FROM ITEM COLLECTIONS: PROBLEM STATEMENT
  - Learning representations for variable-length sequences is "easy"
  - RNNs, LSTMs, GRUs
  - Input = sequence of embeddings
  - Output = embedding for whole sequence
  - Very effective but not always the cheapest or easiest to train
  - But what if the data is unordered?
  - What if it's ordered, but that ordering is uninformative?

- 7. HOW CAN WE LEARN A SINGLE EMBEDDING FROM A BAG OR SET OF ITEM EMBEDDINGS?

- 8. REALLY SIMPLE APPROACH (WHICH MIGHT NOT WORK VERY WELL)
  - Learn item embeddings in an unsupervised manner
  - e.g. "Item2Vec", Barkan & Koenigstein 2016
  - word2vec (skip-gram with negative sampling) on item IDs
  - Average them together to get an embedding for the set/bag
  - Often used in text mining / IR as a baseline or lower bound
  - e.g. "word centroid distance" from Kusner et al 2015

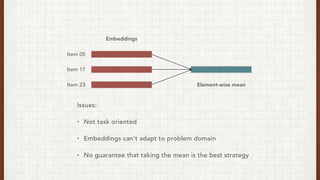

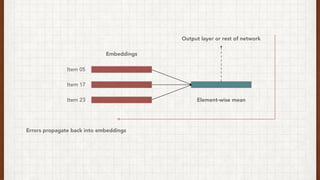

- 9. [Diagram: embeddings for Item 05, Item 17 and Item 23 combined by an element-wise mean] Issues:
  - Not task oriented
  - Embeddings can't adapt to problem domain
  - No guarantee that taking the mean is the best strategy
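The averaging baseline on this slide can be sketched in a few lines of NumPy. This is only an illustration: the embedding table is random here, whereas in the approach described it would come from unsupervised pre-training, and the names `item_embeddings` and `embed_set` are made up for the example.

```python
import numpy as np

# Hypothetical embedding table: 100 items, 8 dimensions each. In practice the
# rows would come from unsupervised pre-training (e.g. word2vec on item IDs).
rng = np.random.default_rng(0)
item_embeddings = rng.normal(size=(100, 8))

def embed_set(item_ids, table):
    """Return the element-wise mean of the embeddings for the given item IDs."""
    return table[np.asarray(item_ids)].mean(axis=0)

set_a = embed_set([5, 17, 23], item_embeddings)
set_b = embed_set([23, 5, 17], item_embeddings)  # same items, different order
```

Because the mean ignores order, `set_a` and `set_b` agree up to floating-point rounding, which is exactly the property wanted for unordered sets.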

- 10. LEARN EMBEDDINGS WHILE TRAINING ON A TASK: NEURAL BAG-OF-ITEMS
  - Common baseline in NLP tasks: neural bag-of-words
  - Initialize embeddings randomly
  - Or from unsupervised pre-training, or third-party data
  - Take mean (or sometimes sum)
  - Feed into network, update embeddings via backprop

- 11. [Diagram: embeddings for Item 05, Item 17 and Item 23, then element-wise mean, then output layer or rest of network] Errors propagate back into embeddings
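To make "errors propagate back into embeddings" concrete, here is a hand-rolled sketch of one gradient step for a bag-of-items model with a fixed linear output layer and squared-error loss. All values are toy numbers chosen for the example, and a real model would rely on a framework's autodiff and update the output layer as well.

```python
import numpy as np

# Toy setup (all values hypothetical): 6 items, 4-dim embeddings, a fixed
# linear output layer, and a single training example with squared-error loss.
E = np.linspace(-1.0, 1.0, 24).reshape(6, 4)   # embedding table
w = np.array([0.5, -0.5, 0.5, -0.5])           # output weights (held fixed here)
b = 0.0
item_ids = np.array([0, 2, 5])
target = 1.0

def predict(table):
    return float(w @ table[item_ids].mean(axis=0) + b)

def loss(table):
    return (predict(table) - target) ** 2

loss_before = loss(E)

# Backprop by hand: d(loss)/d(mean) = 2 * (pred - target) * w, and the mean
# passes an equal 1/n share of that gradient to each item in the bag.
grad_mean = 2.0 * (predict(E) - target) * w
grad_E = np.zeros_like(E)
np.add.at(grad_E, item_ids, grad_mean / len(item_ids))  # also correct for repeated IDs

E_updated = E - 0.1 * grad_E   # one SGD step on the embeddings only
loss_after = loss(E_updated)
```

Only the rows for items in the bag receive a gradient; every other embedding is left untouched by this example.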

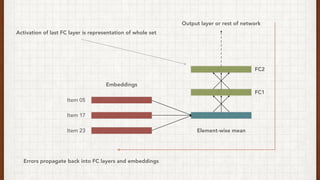

- 12. COMPOSE EMBEDDINGS VIA NON-LINEAR TRANSFORMATIONS: DEEP AVERAGING NETWORKS
  - "Deep Unordered Composition Rivals Syntactic Methods for Text Classification" (Iyyer et al 2015)
  - Developed for sentiment classification & question answering
  - Proposed as a cheap alternative to recursive neural networks
  - In a nutshell:
  - Don't use mean of embeddings directly
  - Take mean and pass it through some fully-connected layers
  - Probably prior art somewhere?

- 13. [Diagram: embeddings for Item 05, Item 17 and Item 23, then element-wise mean, then FC1, then FC2, then output layer or rest of network]
  - Errors propagate back into FC layers and embeddings
  - Activation of last FC layer is representation of whole set
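The forward pass in this diagram might look roughly like the NumPy sketch below. It assumes two ReLU fully-connected layers and a single linear output; the vocabulary size, dimensions and random parameters are placeholders rather than anything taken from the talk's code, and training is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
n_items, dim = 1000, 50   # hypothetical vocabulary size and embedding width

# Randomly initialized parameters; a real model would learn these by backprop.
params = {
    "emb": rng.normal(scale=0.1, size=(n_items, dim)),
    "W1": rng.normal(scale=0.1, size=(dim, dim)), "b1": np.zeros(dim),
    "W2": rng.normal(scale=0.1, size=(dim, dim)), "b2": np.zeros(dim),
    "w_out": rng.normal(scale=0.1, size=dim), "b_out": 0.0,
}

def relu(x):
    return np.maximum(x, 0.0)

def dan_forward(item_ids, p):
    """Return (set_representation, prediction) for one unordered bag of item IDs."""
    mean = p["emb"][np.asarray(item_ids)].mean(axis=0)   # order-invariant pooling
    h1 = relu(mean @ p["W1"] + p["b1"])                  # FC1
    h2 = relu(h1 @ p["W2"] + p["b2"])                    # FC2: representation of the whole set
    y = float(h2 @ p["w_out"] + p["b_out"])              # single linear output
    return h2, y

rep, pred = dan_forward([5, 17, 23], params)
rep_shuffled, pred_shuffled = dan_forward([23, 5, 17], params)
```

Shuffling the input leaves both the set representation and the prediction unchanged (up to rounding), since everything downstream depends only on the mean.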

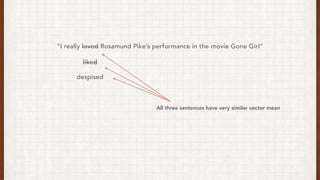

- 14. "THE DEEP LAYERS OF THE DAN AMPLIFY TINY DIFFERENCES IN THE VECTOR AVERAGE THAT ARE PREDICTIVE OF THE OUTPUT LABELS." (Iyyer et al)

- 15. "I really loved Rosamund Pike's performance in the movie Gone Girl"

- 16. "I really loved Rosamund Pike's performance in the movie Gone Girl" [word variant shown: liked]

- 17. "I really loved Rosamund Pike's performance in the movie Gone Girl" [word variants shown: liked, despised]

- 18. "I really loved Rosamund Pike's performance in the movie Gone Girl" [word variants shown: liked, despised] All three sentences have very similar vector means

- 19. REMOVING ENTIRE EMBEDDINGS FROM THE MEAN: WORD DROPOUT
  - Additional contribution: alternative dropout scheme
  - Don't add dropout after fully-connected layers
  - Instead, randomly drop words from the input sentences
  - Maybe somewhat specific to sentiment and question answering?
  - Most words in a sentence don't affect the sentiment
  - Most words in a sentence don't describe the actual answer
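A minimal sketch of word (or item) dropout follows, assuming each input token is dropped independently with a fixed probability at training time. The function name `drop_items` and the fallback of keeping one token when everything is dropped are choices made for this example, not details from the slides.

```python
import random

def drop_items(item_ids, drop_prob, rng):
    """Independently drop each item with probability drop_prob (training time only)."""
    kept = [i for i in item_ids if rng.random() >= drop_prob]
    # Assumption: if everything was dropped, keep one random item so the mean is defined.
    return kept if kept else [rng.choice(item_ids)]

rng = random.Random(0)
sentence = ["i", "really", "loved", "the", "movie"]
thinned = drop_items(sentence, drop_prob=0.3, rng=rng)
unchanged = drop_items(sentence, drop_prob=0.0, rng=rng)
```

The surviving tokens are then embedded and averaged as usual; at inference time the full input is used.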

- 21. PREDICTING GROCERY RE-ORDERS: INSTACART KAGGLE CONTEST
  - Simplified version of task, for trying out DANs:
  - Given previous order (n of ~50K products)...
  - Predict what % of items in it will be re-ordered in next order
  - Use only the items in the previous order (not user, metadata etc.)
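One plausible way to compute the regression target for this simplified task is sketched below, assuming it is expressed as a fraction between 0 and 1 and that repeated items within an order count once. The product IDs and the function name `reorder_fraction` are invented for the example.

```python
def reorder_fraction(previous_order, next_order):
    """Fraction of distinct items in the previous order that appear in the next one."""
    previous = set(previous_order)
    if not previous:
        raise ValueError("previous order must contain at least one item")
    return len(previous & set(next_order)) / len(previous)

previous_order = [101, 205, 309, 412]   # hypothetical product IDs
next_order = [205, 412, 999]
target = reorder_fraction(previous_order, next_order)  # 2 of 4 items re-ordered
```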

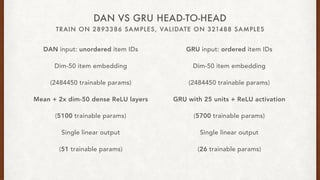

- 22. TRAIN ON 2893386 SAMPLES, VALIDATE ON 321488 SAMPLES: DAN VS GRU HEAD-TO-HEAD
  - DAN
    - Input: unordered item IDs
    - Dim-50 item embedding (2484450 trainable params)
    - Mean + 2x dim-50 dense ReLU layers (5100 trainable params)
    - Single linear output (51 trainable params)
  - GRU
    - Input: ordered item IDs
    - Dim-50 item embedding (2484450 trainable params)
    - GRU with 25 units + ReLU activation (5700 trainable params)
    - Single linear output (26 trainable params)
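The parameter counts on this slide can be reproduced with some quick arithmetic. The sketch assumes standard dense layers (weights plus bias) and a GRU with one bias vector per gate; the vocabulary size is inferred from the embedding parameter count rather than stated in the slides.

```python
def dense_params(n_in, n_out):
    """Weights plus biases for a fully-connected layer."""
    return n_in * n_out + n_out

def gru_params(n_in, n_units):
    """Two gates plus the candidate state, each with input weights,
    recurrent weights and a single bias vector (an assumption about the variant)."""
    return 3 * (n_units * (n_in + n_units) + n_units)

dim = 50
n_products = 2484450 // dim   # vocabulary size implied by the embedding parameter count

dan_hidden = 2 * dense_params(dim, dim)   # two dim-50 dense ReLU layers
dan_output = dense_params(dim, 1)         # single linear output
gru_layer = gru_params(dim, 25)           # GRU with 25 units
gru_output = dense_params(25, 1)          # single linear output
```

The implied vocabulary of 49689 items is consistent with the "~50K products" mentioned for the task, and in both models the embedding table accounts for nearly all of the trainable parameters.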

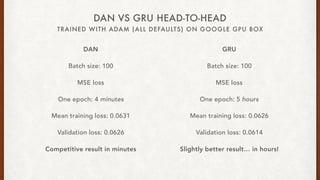

- 23. TRAINED WITH ADAM (ALL DEFAULTS) ON GOOGLE GPU BOX: DAN VS GRU HEAD-TO-HEAD
  - DAN
    - Batch size: 100
    - MSE loss
    - One epoch: 4 minutes
    - Mean training loss: 0.0631
    - Validation loss: 0.0626
    - Competitive result in minutes
  - GRU
    - Batch size: 100
    - MSE loss
    - One epoch: 5 hours
    - Mean training loss: 0.0626
    - Validation loss: 0.0614
    - Slightly better result... in hours!

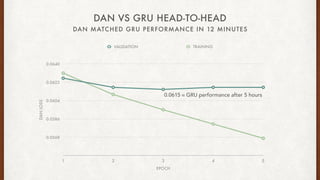

- 24. DAN MATCHED GRU PERFORMANCE IN 12 MINUTES: DAN VS GRU HEAD-TO-HEAD
  - [Chart: DAN training and validation loss by epoch, epochs 1 to 5, loss axis from 0.0568 to 0.0640; annotation at 0.0615 marking GRU performance after 5 hours]

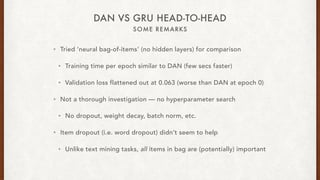

- 25. SOME REMARKS: DAN VS GRU HEAD-TO-HEAD
  - Tried 'neural bag-of-items' (no hidden layers) for comparison
  - Training time per epoch similar to DAN (few secs faster)
  - Validation loss flattened out at 0.063 (worse than DAN at epoch 0)
  - Not a thorough investigation: no hyperparameter search
  - No dropout, weight decay, batch norm, etc.
  - Item dropout (i.e. word dropout) didn't seem to help
  - Unlike text mining tasks, all items in bag are (potentially) important

- 26. ANY QUESTIONS? THANKS!
  - Code available on GitHub: andrewclegg/insta-keras
  - Feel free to grab me afterwards to chat about anything
  - Or ping me on Twitter: @andrew_clegg