20181221 q-trader

- 1. Reinforcement learning for trading with Python December 21, 2018 ĪÏĪóĪĘĪęPython Taku Yoshioka

- 2. Outline
  - Previous work on GitHub: q-trader
  - Q-learning
  - Trading model
  - State, action and reward
  - Implementation
  - Results

- 4. edwardhdlu/q-trader: an implementation of Q-learning applied to (short-term) stock trading

- 5. Action value function (Q-value)
  - The expected discounted cumulative future reward, given the current state and action and then following the optimal policy (a mapping from states to actions)
  - $Q(s_t, a_t) = \mathbb{E}\left[\sum_{k=0}^{\infty} \gamma^k r_{t+k} \mid s = s_t,\ a = a_t\right]$
  - $\gamma$: discount factor, $t$: time step, $s_t$: state, $a_t$: action, $r_t$: (immediate) reward
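
For a single sampled episode, the quantity inside the expectation is just a discounted sum of rewards. A minimal sketch that computes it for a finite reward list (the function name `discounted_return` is hypothetical, not part of q-trader):

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * rewards[k] over a finite reward sequence."""
    total = 0.0
    # Accumulate backwards: G_k = r_k + gamma * G_{k+1}
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Three rewards with gamma = 0.5: 1 + 0.5 * 2 + 0.25 * 4
print(discounted_return([1.0, 2.0, 4.0], 0.5))  # 3.0
```

Accumulating from the end avoids computing powers of $\gamma$ explicitly.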

- 6. Q-learning
  - A reinforcement learning algorithm for learning the optimal Q-value
  - $Q_{\text{new}}(s_t, a_t) = (1 - \alpha)\, Q_{\text{old}}(s_t, a_t) + \alpha \left(r_t + \gamma \max_a Q(s_{t+1}, a)\right)$
  - $\alpha \in (0, 1)$: learning rate
  - To apply Q-learning, collect samples in episodes, represented as tuples $(s_t, a_t, r_t, s_{t+1})$
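
A tabular sketch of the update rule above, assuming states are hashable keys and the Q-table is a plain dictionary (`q_learning_update` is a hypothetical helper; q-trader itself replaces the table with a network, as the next slide describes):

```python
from collections import defaultdict


def q_learning_update(q, s, a, r, s_next, actions, alpha, gamma):
    """Q_new(s, a) = (1 - alpha) * Q_old(s, a) + alpha * (r + gamma * max_a' Q(s', a'))."""
    best_next = max(q[(s_next, a2)] for a2 in actions)
    q[(s, a)] = (1 - alpha) * q[(s, a)] + alpha * (r + gamma * best_next)
    return q[(s, a)]


# Q-table keyed by (state, action); unseen entries start at 0.0
q = defaultdict(float)
actions = (0, 1, 2)  # sit, buy, sell
q[("s1", 2)] = 10.0

# One sample (s_t, a_t, r_t, s_{t+1}) = ("s0", 1, -1.0, "s1")
print(q_learning_update(q, "s0", 1, -1.0, "s1", actions, alpha=0.5, gamma=0.9))  # 4.0
```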

- 7. Deep Q-learning
  - Represent the action value function with a deep network and minimize the loss function
  - $L = \sum_{t \in D} \left(Q(s_t, a_t) - y_t\right)^2$, $\quad y_t = r_t + \gamma \max_a Q(s_{t+1}, a)$, where $D$ is a minibatch
  - Note: in contrast to supervised learning, the target value involves the current network outputs, so the network parameters should be updated gradually
  - The order of samples in minibatches can be randomized to decorrelate sequential samples (replay buffer)
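
To make $y_t$ and $L$ concrete, here is a NumPy sketch for a minibatch given precomputed Q-values. It assumes a `dones` flag per sample and drops the bootstrap term for terminal transitions, as `expReplay` does with `done`; the helper names are hypothetical.

```python
import numpy as np


def dqn_targets(rewards, q_next, dones, gamma):
    """y_t = r_t + gamma * max_a Q(s_{t+1}, a); y_t = r_t for terminal transitions."""
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=bool)
    # The bootstrap term is multiplied by 0 where the episode ended
    return rewards + gamma * np.max(q_next, axis=1) * (~dones)


def dqn_loss(q_pred, actions, targets):
    """Sum over the minibatch of (Q(s_t, a_t) - y_t)^2."""
    q_taken = q_pred[np.arange(len(actions)), actions]
    return float(np.sum((q_taken - targets) ** 2))


# Minibatch of two transitions, three actions (sit, buy, sell)
q_next = np.array([[1.0, 2.0, 0.5], [3.0, 0.0, 1.0]])  # Q(s_{t+1}, .)
q_pred = np.array([[0.0, 1.0, 0.0], [2.0, 0.0, 0.0]])  # Q(s_t, .)
y = dqn_targets([1.0, -1.0], q_next, [False, True], gamma=0.5)  # targets 2.0 and -1.0
print(dqn_loss(q_pred, np.array([1, 0]), y))  # (1 - 2)^2 + (2 + 1)^2 = 10.0
```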

- 8. Trading model
  - Based on closing prices
  - Three actions: buy (1 unit), sell (1 unit), sit
  - Transaction fees are ignored
  - Transactions are executed immediately
  - The number of units the agent can hold is limited

- 9. State
  - State: a time window of the 1-step differences of past stock prices, $\tau$ steps long
  - A sigmoid function is applied to deal with input scaling
  - $s_t = (d_{t-\tau+1}, \ldots, d_{t-1}, d_t)$, where $d_t = \mathrm{sigmoid}(p_t - p_{t-1})$
  - $p_t$: price at (discrete) time $t$; $\tau$: time window size (steps)
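
A pure-Python sketch of this state definition (`window_state` is a hypothetical name). It assumes, as `getState` does, that the window is padded with the first price when it would start before $t = 0$; the holding flag that `modify_state` appends is left out here.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def window_state(prices, t, tau):
    """s_t = (d_{t-tau+1}, ..., d_t) with d_t = sigmoid(p_t - p_{t-1})."""
    # tau + 1 prices ending at t; indices before 0 are padded with prices[0]
    block = [prices[max(i, 0)] for i in range(t - tau, t + 1)]
    return [sigmoid(block[i + 1] - block[i]) for i in range(tau)]


prices = [100.0, 101.0, 99.0, 99.0]
print(window_state(prices, 3, 3))  # sigmoid of the differences 1, -2, 0
print(window_state(prices, 1, 3))  # padded: sigmoid of 0, 0, 1
```

The sigmoid maps any price difference into (0, 1), so large moves of an index such as ^GSPC do not dominate the network input.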

- 10. Reward
  - Depends on the action
    - Sit: 0
    - Buy: a negative constant (configurable)
    - Sell: profit (sell price - bought price)
  - The reward needs to be designed carefully
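
A sketch of these reward rules as a single function, combining this slide with the buy/sell branches of `environment.py`: the inventory is a FIFO list of bought prices, a full inventory makes buying a no-op, and `clip_reward` turns losses into zero reward. `trade_reward` is hypothetical, and its defaults are taken from the example config.

```python
def trade_reward(action, price, inventory, reward_for_buy=-10.0,
                 inventory_max=10, clip_reward=True):
    """Reward for one action (0: sit, 1: buy, 2: sell); mutates `inventory`."""
    if action == 0:
        return 0.0
    if action == 1:
        if len(inventory) < inventory_max:
            inventory.append(price)
            return reward_for_buy  # negative constant
        return 0.0  # inventory full: buying is not possible
    if inventory:
        profit = price - inventory.pop(0)  # sell the oldest unit first
        return max(profit, 0.0) if clip_reward else profit
    return 0.0  # nothing to sell


inv = []
print(trade_reward(1, 100.0, inv))  # -10.0 (buy)
print(trade_reward(2, 95.0, inv))   # 0.0 (a loss of 5 is clipped to 0)
```

With clipping on and a negative buy reward, a profitable sell is the only way to collect a positive reward.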

- 11. Implementation
  - Training (train.py): initialization, sample collection, training loop
  - Environment (environment.py): loading stock data, making state transitions with rewards
  - Agent (agent.py): feature extraction, Q-learning, ε-greedy exploration, saving the model
  - Results examination (learning_curve.py, plot_learning_curve.py, evaluate.py): computing the learning curve for given data, plotting

- 12. Configuration
  - A config file makes it easy to compare various experimental settings

```yaml
stock_name: ^GSPC
window_size: 10
episode_count: 500
result_dir: ./models/config
batch_size: 32
clip_reward: True
reward_for_buy: -10
gamma: 0.995
learning_rate: 0.001
optimizer: Adam
inventory_max: 10
```

- 13. Parsing the config file
  - Get the parameters as a dict

```python
import sys

from ruamel.yaml import YAML

# Load the YAML config file given as the first command-line argument
with open(sys.argv[1]) as f:
    yaml = YAML()
    config = yaml.load(f)

stock_name = config["stock_name"]
window_size = config["window_size"]
episode_count = config["episode_count"]
result_dir = config["result_dir"]
batch_size = config["batch_size"]
```

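- 14. train.py
  - Instantiate the RL agent and the environment for trading

```python
import sys

from agent import Agent
from environment import SimpleTradeEnv

# Command-line arguments: stock name, window size, number of episodes
stock_name, window_size, episode_count = sys.argv[1], int(sys.argv[2]), int(sys.argv[3])
batch_size = 32
inventory_max = 10  # maximum number of units held (value from the example config)

# The state holds window_size price differences plus a holding flag (see modify_state)
agent = Agent(window_size + 1)

# Environment (inventory_max is a required argument of SimpleTradeEnv)
env = SimpleTradeEnv(stock_name, window_size, agent, inventory_max)
```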

- 15. Loop over episodes
  - Collect samples and train the model (expReplay)
  - Save the model every 10 episodes

```python
# Loop over episodes
for e in range(episode_count + 1):
    # Initialization before starting an episode
    state = env.reset()
    agent.inventory = []
    done = False

    # Loop in an episode
    while not done:
        action = agent.act(state)
        next_state, reward, done, _ = env.step(action)
        # Store the transition in the replay memory
        agent.memory.append((state, action, reward, next_state, done))
        state = next_state
        # Train once the memory holds more than one minibatch
        if len(agent.memory) > batch_size:
            agent.expReplay(batch_size)

    # Save a snapshot of the model every 10 episodes
    if e % 10 == 0:
        agent.model.save("models/model_ep" + str(e))
```

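- 16. environment.py
  - Loading stock data

```python
class SimpleTradeEnv(object):
    def __init__(self, stock_name, window_size, agent, inventory_max,
                 clip_reward=True, reward_for_buy=-20, print_trade=True):
        # Closing prices of the stock (getStockDataVec is a helper not shown here)
        self.data = getStockDataVec(stock_name)
        self.window_size = window_size
        self.agent = agent  # the agent holds the inventory
        self.print_trade = print_trade
        self.reward_for_buy = reward_for_buy
        self.clip_reward = clip_reward
        self.inventory_max = inventory_max
        # self.t and self.total_profit are used by step(); presumably set in reset() (not shown)
```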

- 17. Computing the reward for an action and making the state transition

```python
def step(self, action):
    # 0: Sit, 1: Buy, 2: Sell
    assert action in (0, 1, 2)

    # Reward
    if action == 0:
        reward = 0
    elif action == 1:
        ...  # Buy: see the following slide
    else:
        ...  # Sell: see the following slide

    # State transition (uses the inventory after this action)
    next_state = getState(self.data, self.t + 1, self.window_size + 1, self.agent)
    # The episode ends when the next state is at the last price
    done = self.t == len(self.data) - 2
    self.t += 1

    return next_state, reward, done, {}
```
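
The slides do not show `reset()`. The self-contained toy below sketches how `reset()` and `step()` could fit together, under stated assumptions: `ToyTradeEnv` is hypothetical, takes a list of prices instead of a stock name, keeps the inventory in the environment rather than on the agent, and always clips the sell reward.

```python
import math


class ToyTradeEnv:
    """Hypothetical stand-in for SimpleTradeEnv: prices only, no agent or printing."""

    def __init__(self, prices, window_size, reward_for_buy=-10.0, inventory_max=10):
        self.prices = prices
        self.window_size = window_size
        self.reward_for_buy = reward_for_buy
        self.inventory_max = inventory_max

    def _state(self, t):
        # window_size sigmoid-scaled differences ending at t (padded at the start) + holding flag
        idx = [max(i, 0) for i in range(t - self.window_size, t + 1)]
        diffs = [self.prices[j] - self.prices[i] for i, j in zip(idx, idx[1:])]
        flag = 1.0 if self.inventory else 0.0
        return [1.0 / (1.0 + math.exp(-x)) for x in diffs] + [flag]

    def reset(self):
        self.t = 0
        self.inventory = []
        self.total_profit = 0.0
        return self._state(0)

    def step(self, action):
        assert action in (0, 1, 2)  # 0: sit, 1: buy, 2: sell
        price = self.prices[self.t]
        reward = 0.0
        if action == 1 and len(self.inventory) < self.inventory_max:
            self.inventory.append(price)
            reward = self.reward_for_buy
        elif action == 2 and self.inventory:
            profit = price - self.inventory.pop(0)
            self.total_profit += profit
            reward = max(profit, 0.0)  # clipped reward
        # Same termination rule as step() above
        done = self.t == len(self.prices) - 2
        self.t += 1
        return self._state(self.t), reward, done, {}


env = ToyTradeEnv([100.0, 102.0, 101.0, 105.0], window_size=2)
state = env.reset()
for action in (1, 0, 2):  # buy, sit, sell
    state, reward, done, _ = env.step(action)
print(reward, done, env.total_profit)  # 1.0 True 1.0
```

As in the slide, the returned state is computed after the action, so the holding flag already reflects the trade just made.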

- 18. Reward for buy

```python
elif action == 1:  # Buy (part of step())
    # Buy one unit only if the inventory limit has not been reached
    if len(self.agent.inventory) < self.inventory_max:
        reward = self.reward_for_buy
        self.agent.inventory.append(self.data[self.t])
        if self.print_trade:
            print("Buy: " + formatPrice(self.data[self.t]))
    else:
        reward = 0
        if self.print_trade:
            print("Buy: not possible")
```

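- 19. Reward for sell

```python
else:  # Sell (part of step())
    if len(self.agent.inventory) > 0:
        # Sell the oldest unit first (FIFO)
        bought_price = self.agent.inventory.pop(0)
        profit = self.data[self.t] - bought_price
        # With clip_reward, selling at a loss gives zero reward instead of a negative one
        reward = max(profit, 0) if self.clip_reward else profit
        self.total_profit += profit
        if self.print_trade:
            print("Sell: " + formatPrice(self.data[self.t]) +
                  " | Profit: " + formatPrice(profit))
    else:
        # Nothing to sell; without this branch `reward` would be undefined
        reward = 0
```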

- 20. Computing 1-step differences of the time series of stock prices

```python
import numpy as np


# Returns an n-day state representation ending at time t (n prices -> n - 1 differences)
def getState(data, t, n, agent):
    d = t - n + 1
    # Pad with data[0] when the window starts before t = 0
    block = data[d:t + 1] if d >= 0 else -d * [data[0]] + data[0:t + 1]
    res = []
    for i in range(n - 1):
        # sigmoid(x) = 1 / (1 + exp(-x)), a helper defined elsewhere (not shown)
        res.append(sigmoid(block[i + 1] - block[i]))
    return agent.modify_state(np.array([res]))
```

Adding information on whether the agent has bought (`modify_state`, in agent.py):

```python
def modify_state(self, state):
    # Append a flag: 1 if the agent holds at least one unit, otherwise 0
    if len(self.inventory) > 0:
        state = np.hstack((state, [[1]]))
    else:
        state = np.hstack((state, [[0]]))
    return state
```

- 21. agent.py
  - Implements the RL agent
  - Loads a pre-trained model for evaluation (is_eval)

```python
from collections import deque

from keras.models import load_model


class Agent:
    def __init__(self, state_size, is_eval=False, model_name="", result_dir="",
                 gamma=0.95, learning_rate=0.001, optimizer="Adam"):
        self.state_size = state_size  # input size: normalized price differences + holding flag
        self.action_size = 3  # sit, buy, sell
        self.memory = deque(maxlen=1000)  # replay memory; the oldest samples are dropped
        self.inventory = []
        self.model_name = model_name
        self.is_eval = is_eval
        self.gamma = gamma
        # epsilon-greedy exploration schedule
        self.epsilon = 1.0
        self.epsilon_min = 0.01
        self.epsilon_decay = 0.995
        self.learning_rate = learning_rate
        self.optimizer = optimizer
        # Load a trained model for evaluation, otherwise build a new one
        self.model = load_model(result_dir + "/" + model_name) if is_eval else self._model()
```

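- 22. Implements the action value function with a neural network
  - Accepts a state vector as input and outputs a value for each action
  - Specifies the squared-error loss and the Adam optimizer
  - Takes the argmax action, or an ε-greedy action for exploration

```python
import random

import numpy as np
from keras.layers import Dense
from keras.models import Sequential
from keras.optimizers import Adam


def _model(self):
    model = Sequential()
    model.add(Dense(units=64, input_dim=self.state_size, activation="relu"))
    model.add(Dense(units=32, activation="relu"))
    model.add(Dense(units=8, activation="relu"))
    # Linear output layer: one Q-value per action
    model.add(Dense(self.action_size, activation="linear"))
    # Use the configured learning rate
    model.compile(loss="mse", optimizer=Adam(learning_rate=self.learning_rate))
    return model

def act(self, state):
    # During training, explore with probability epsilon
    if not self.is_eval and np.random.rand() <= self.epsilon:
        return random.randrange(self.action_size)
    # Otherwise pick the action with the highest Q-value
    options = self.model.predict(state)
    return np.argmax(options[0])
```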
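
`act()` explores with probability `self.epsilon`, which `expReplay()` multiplies by `epsilon_decay = 0.995` after each call until it falls to `epsilon_min = 0.01`. A quick check of how many calls that takes (`calls_until_min` is a hypothetical helper):

```python
import math


def calls_until_min(epsilon=1.0, epsilon_min=0.01, decay=0.995):
    """Count decay steps, mirroring `if epsilon > epsilon_min: epsilon *= decay`."""
    n = 0
    while epsilon > epsilon_min:
        epsilon *= decay
        n += 1
    return n


print(calls_until_min())                            # 919
print(math.ceil(math.log(0.01) / math.log(0.995)))  # 919 (closed form)
```

If `expReplay()` runs at every step once the memory holds more than `batch_size` samples, exploration is almost switched off after roughly a thousand steps, which may be well inside the first episode for a long price series.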

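- 23. Compute a target value with the current network output
  - Update the parameters for one epoch over the shuffled replay memory (epochs=1)

```python
import random

import numpy as np


def expReplay(self, batch_size):
    # Shuffle the whole replay memory (not only one minibatch)
    subsamples = random.sample(list(self.memory), len(self.memory))
    states, targets = [], []
    for state, action, reward, next_state, done in subsamples:
        # y = r for a terminal transition, r + gamma * max_a Q(s', a) otherwise
        target = reward
        if not done:
            target = reward + self.gamma * np.amax(self.model.predict(next_state)[0])
        # Only the taken action gets the new target; the others keep their current outputs
        target_f = self.model.predict(state)
        target_f[0][action] = target
        states.append(state)
        targets.append(target_f)
    self.model.fit(np.vstack(states), np.vstack(targets), epochs=1, verbose=0,
                   batch_size=batch_size)
    # Decay the exploration rate
    if self.epsilon > self.epsilon_min:
        self.epsilon *= self.epsilon_decay
```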
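
The loop above calls `model.predict` twice per stored transition and fits on the whole shuffled memory. A common alternative, sketched here as an assumption rather than what q-trader does, samples one minibatch of `batch_size` transitions and computes all targets with two batched predictions. `build_minibatch` and the linear stand-in `q_stub` for `model.predict` are hypothetical.

```python
import random
from collections import deque

import numpy as np


def build_minibatch(memory, batch_size, predict, gamma):
    """Sample up to batch_size transitions and build (inputs, targets) for one fit call.

    `predict` maps an (N, state_dim) array to (N, n_actions) Q-values, like model.predict.
    """
    batch = random.sample(list(memory), min(batch_size, len(memory)))
    # Each stored state has shape (1, state_dim), as produced by getState
    states = np.vstack([b[0] for b in batch])
    actions = np.array([b[1] for b in batch])
    rewards = np.array([b[2] for b in batch], dtype=float)
    next_states = np.vstack([b[3] for b in batch])
    dones = np.array([b[4] for b in batch], dtype=bool)

    # Start from the current outputs so non-taken actions contribute zero error
    targets = np.array(predict(states), dtype=float)
    # y = r + gamma * max_a Q(s', a), without the bootstrap term at episode end
    y = rewards + gamma * predict(next_states).max(axis=1) * (~dones)
    targets[np.arange(len(batch)), actions] = y
    return states, targets


# Hypothetical stand-in for model.predict: a fixed linear Q-function
W = np.array([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]])


def q_stub(x):
    return x @ W


memory = deque(maxlen=1000)
for i in range(50):
    s = np.array([[i / 50, 1.0]])
    memory.append((s, i % 3, 1.0, s + 0.02, i == 49))

states, targets = build_minibatch(memory, 32, q_stub, gamma=0.95)
print(states.shape, targets.shape)  # (32, 2) (32, 3)
```

With a Keras model, `predict` would be `model.predict`, and the returned arrays would go to `model.fit(states, targets, epochs=1, verbose=0)`, giving one gradient step per call instead of one pass over the whole memory.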

- 24. Running scripts
  - Training
  - Learning curve

```shell
# Training with Q-learning
$ python train.py config/config2.yaml

# Learning curve on training data
$ python learning_curve.py config/config2.yaml ^GSPC
```

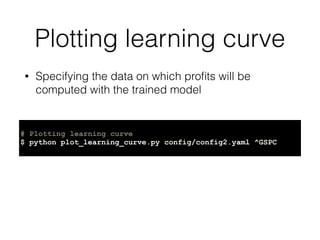

- 25. Plotting the learning curve
  - Specify the data on which profits will be computed with the trained model

```shell
# Plotting learning curve
$ python plot_learning_curve.py config/config2.yaml ^GSPC
```

- 26. Learning curves on training data (^GSPC) and test data (^GSPC_2011)
  - Not working well: profit increases on the test data but decreases on the training data
  - This is the opposite of what we usually see, namely overfitting to the training data

- 27. Plotting trading behavior
  - Specify the data and the model (filename)

```shell
# Plotting trading on a model
$ python evaluate.py config/config2.yaml ^GSPC_2011 model_ep500
```
