A Case Study on the Use of Developmental Evaluation for Navigating Uncertainty during Social Innovation

- 1. A Case Study on the Use of Developmental Evaluation for Navigating Uncertainty during Social Innovation. Chi Yan Lam, MEd. AEA 2012, @chiyanlam, October 25, 2012. Assessment and Evaluation Group, Queen’s University. Slides available now at www.chiyanlam.com

- 2. “The significant problems we have cannot be solved at the same level of thinking with which we created them.” (Albert Einstein; image: http://yareah.com/wp-content/uploads/2012/04/einstein.jpg)

- 3. Developmental Evaluation in 1994 • collaborative, long-term partnership • purpose: program development • observation: clients who eschew clear, specific, measurable goals

- 4. Developmental Evaluation in 2011 • takes on a responsive, collaborative, adaptive orientation to evaluation • complexity concepts • systems thinking • social innovation

- 5. Developmental Evaluation (Patton, 1994, 2011) • DE supports innovation development to guide adaptation to emergent and dynamic realities in complex environments. • DE brings to innovation and adaptation the processes of: • asking evaluative questions • applying evaluation logic • gathering and reporting evaluation data to inform and support project/program/product and/or organizational development in real time. Thus, feedback is rapid. • The evaluator works collaboratively with social innovators to conceptualize, design, and test new approaches in a long-term, ongoing process of adaptation, intentional change, and development. • Primary functions of the evaluator: • elucidate the innovation and adaptation processes • track their implications and results • facilitate ongoing, real-time, data-based decision-making in the developmental process.

- 7. Developmental Evaluation (Patton, 1994, 2011): Improvement vs. Development (the definition from slide 5, annotated to contrast improvement with development)

- 8. Developmental Evaluation is reality testing.

- 10. • DE: novel; its empirical and practical basis is yet to be developed • Research on evaluation: • scope and limitations • utility and suitability in different contexts • legitimize DE

- 11. Overview • Theoretical Overview (only briefly) • Case Context • Case Study

- 12. Research Purpose: to learn about the capacity of developmental evaluation to support innovation development (from nothing to something).

- 13. Research Questions 1. To what extent does the Assessment Pilot Initiative qualify as a developmental evaluation? 2. What contribution does developmental evaluation make to enable and promote program development? 3. To what extent does developmental evaluation address the needs of the developers in ways that inform program development? 4. What insights, if any, can be drawn from this development about the roles and the responsibilities of the developmental evaluator?

- 14. Social Innovation • SI aspires to change and transform social realities (Westley, Zimmerman, & Patton, 2006) • generating “novel solutions to social problems that are more effective, efficient, sustainable, or just than existing solutions and for which the value created accrues primarily to society as a whole rather than private individuals” (Phills, Deiglmeier, & Miller, 2008)

- 15. Complexity Thinking • Situational Analysis • Complexity Concepts • “sensemaking” frameworks that attune the evaluator to certain things

- 16. Simple vs. Complicated vs. Complex (Westley, Zimmerman, & Patton, 2008) • Simple: predictable; replicable; known; causal if-then models • Complicated: predictable; replicable; known; many variables/parts working in tandem in sequence; requires expertise/training; causal if-then models • Complex: unpredictable; difficult to replicate; unknown; many interacting variables/parts; systems thinking?; complex dynamics? • Chaos (label at the far edge of the continuum)

- 18. Complexity Concepts • understanding dynamical behaviour of systems • description of behaviour over time • metaphors for describing change • how things change • NOT predictive, not explanatory • (existence of some underlying principles; rules-driven behaviour)

- 19. Complexity Concepts • Nonlinearity: small causes can have large effects (a butterfly flaps its wings; black swans) • Emergence: new behaviour emerges from interaction, so indicators can’t really be predetermined • Adaptation: systems respond and adapt to each other and to their environments • Uncertainty: processes and outcomes are unpredictable, uncontrollable, and unknowable in advance • Dynamical: interactions within, between, and among subsystems change in unpredictable ways • Co-evolution: systems change in response to each other’s adaptation (growing old together)

- 20. Systems Thinking • Pays attention to the influences and relationships between systems in reference to the whole • a system is a dynamic, complex, structured functional unit • there are flows and exchanges between systems • systems are situated within a particular context

- 21. Complex Adaptive Dynamic Systems

- 22. Practicing DE • Adaptive to context, agile in methods, responsive to needs • evaluative thinking: critical thinking • bricoleur • “purpose-and-relationship-driven not [research] method driven” (Patton, 2011, p. 288)

- 23. Five Purposes and Uses of DE (Patton, 2011, p. 194) 1. Ongoing development in adapting a program, strategy, policy, etc. 2. Adapting effective principles to a local context 3. Developing a rapid response 4. Preformative development of a potentially broad-impact, scalable innovation 5. Major systems change and cross-scale developmental evaluation

- 24. Method & Methodology • Questions drive method (Greene, 2007; Teddlie & Tashakkori, 2009) • Qualitative case study • understanding the intricacies of the phenomenon and the context • Case is a “specific, unique, bounded system” (Stake, 2005, p. 436) • Understanding the system’s activity, and its function and interactions • Qualitative research to describe, understand, and infer meaning

- 25. Data Sources • Three pillars of data: 1. Program development records 2. Development artifacts 3. Interviews with clients on the significance of various DE episodes

- 26. Data Analysis 1. Reconstructing the evidentiary base 2. Identifying developmental episodes 3. Coding for developmental moments 4. Time-series analysis

- 28. Assessment Pilot Initiative • Describes the innovative efforts of a team of three teacher educators promoting contemporary notions of classroom assessment • Teaching and learning constraints ($, time, space) • Interested in integrating social media into teacher education (classroom assessment) • The thinking was that assessment learning requires learners to actively engage with peers and challenge their own experiences and conceptions of assessment.

- 30. Book-ending: Concluding Conditions • 22 teacher candidates participated in a hybrid, blended learning pilot. They tweeted about their own experiences of trying to put contemporary notions of assessment into practice • Guided by the script “Think Tweet Share”, grounded in e-learning and learning theories • Developmental evaluation guided this exploration among the instructors, the evaluator, and the teacher candidates, who acted as a collective in this participatory learning experience • DE became integrated; the program became agile and responsive by design

- 32. How the innovation came to be...

- 33. Key Developmental Episodes • Ep 1: Evolving understanding in using social media for professional learning • Ep 2: Explicating values through Appreciative Inquiry for program development • Ep 3: Enhancing collaboration through structured communication • Ep 4: Program development through the use of evaluative data. “Again, you can’t connect the dots looking forward; you can only connect them looking backwards.” (Steve Jobs)

- 34. (Wicked) Uncertainty • uncertain about how to proceed • uncertain about which direction to proceed in (given many choices) • uncertain about how teacher candidates would respond to the intervention • the more questions we answered, the more questions we raised • Typical of DE: • Clear, measurable, and specific outcomes • Use of planning frameworks • Traditional evaluation cycles wouldn’t work

- 35. How the innovation came to be... • Reframing what constituted “data” • not intentional, but an adaptive response • informational needs concerning development were collected, analyzed, and interpreted • relevant theories, concepts, and ideas were introduced to catalyze thinking, which led to learning and un-learning

- 36. Major Findings RQ1: To what extent does API qualify as a developmental evaluation? 1. Preformative development of a potentially broad-impact, scalable innovation 2. Patton: Did something get developed? (Improvement ✗ vs. development ✔ vs. innovation ✔)

- 37. RQ2: What contribution does DE make to enable and promote program development? 1. Lent a data-informed process to innovation 2. Implication: responsiveness • the program-in-action became adaptive to the emergent needs of users 3. Consequence: resolving uncertainty

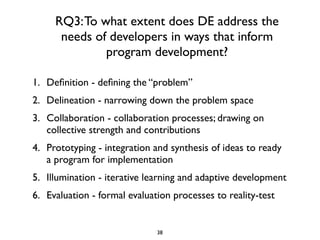

- 38. RQ3: To what extent does DE address the needs of developers in ways that inform program development? 1. Definition: defining the “problem” 2. Delineation: narrowing down the problem space 3. Collaboration: collaboration processes; drawing on collective strength and contributions 4. Prototyping: integration and synthesis of ideas to ready a program for implementation 5. Illumination: iterative learning and adaptive development 6. Evaluation: formal evaluation processes to reality-test

- 39. Implications for Evaluation • One of the first documented case studies of developmental evaluation • Contributions to understanding, analyzing, and reporting development as a process • Delineating the kinds of roles and responsibilities that promote development • The notion of design emerges from this study

- 41. ŌĆó Program as co-created ŌĆó Attending to the ŌĆ£theoryŌĆØ of the program ŌĆó DE as a way to drive the innovating process ŌĆó Six foci of development ŌĆó Designing programs?

- 42. Design and Design Thinking

- 43. Design + Design Thinking “Design is the systematic exploration into the complexity of options (in program values, assumptions, output, impact, and technologies) and decision-making processes that results in purposeful decisions about the features and components of a program-in-development that is informed by the best conception of the complexity surrounding a social need. Design is dependent on the existence and validity of highly situated and contextualized knowledge about the realities of stakeholders at a site of innovation. The design process fits potential technologies, ideas, and concepts to reconfigure the social realities. This results in the emergence of a program that is adaptive and responsive to the needs of program users.” (Lam, 2011, pp. 137-138)

- 44. Implications for Evaluation Practice 1. Manager 2. Facilitator of learning 3. Evaluator 4. Innovation thinker

- 45. Limitations • Contextually bound, so not generalizable • but it does add knowledge to the field • The study’s data are only as good as the data collected in the evaluation • it would have been better to capture the program-in-action • Analysis of API’s outcomes could help strengthen the case study • but is not necessary for achieving the research foci • Cross-case analysis would be a better method for generating understanding

- 46. Thank You! Let’s Connect! @chiyanlam chi.lam@QueensU.ca

Editor's Notes

- Welcome! Thanks for coming today. It is truly exciting to speak on the topic of developmental evaluation, and many thanks to the Evaluation Use TIG for making this possible. Today, I want to share the results of a project I’ve been working on for some time and, in doing so, challenge our collective thinking about the space of possibility created by developmental evaluation. For this paper, I want to focus on the innovation space and on the process of innovating. The slides are already available at www.chiyanlam.com and will be available shortly in the Eval Use TIG eLibrary.

- Let me frame this presentation with a quote by Albert Einstein.

- In 1994, Patton observed that some clients resisted typical formative/summative evaluation approaches because of the nature of their work. They didn’t see the point of freezing a program in time in order to have it assessed and evaluated. He described an approach in which he worked collaboratively, as part of the team, to render evaluative data that would help program staff adapt and evolve their programs. So the process of engaging in “developmental evaluation” becomes paramount.

- Fast forward to 2011, ... These are concepts that I will only touch on briefly.

- Developmental evaluation is positioned as a response for evaluators who work in complex spaces. First described by Patton in 1994, and further elaborated in 2011, developmental evaluation proposes a collaborative and participatory approach to involving the evaluator in the development process.

- So what underlies DE is a commitment to reality testing. Patton positions it as one of the approaches one could take within a utilization-focused framework: formative, summative, or developmental.

- You only need to attend conferences like this one to hear the buzz and curiosity around developmental evaluation. In February, a webinar was offered by UNICEF, and over 500...

- If we move beyond the excitement and buzz around DE, we see that DE is still very new. There is not much of an empirical or practical basis for its arguments. If we are serious about the utilization of DE, ...

- So, in the remaining time, I want us to dig deep into a case of developmental evaluation.

- The observation Patton made back in 1994 was very astute: social innovations don’t rest, programs don’t stand still, and addressing social problems means aiming at a moving target that shifts as society changes...

- Let’s put it in a learning context. Simple would be teaching you CPR: I’ll keep the steps simple and rehearse them many times so that you can do it when needed. Complicated would be teaching teacher candidates how to plan a lesson while taking into consideration curriculum expectations, learning objectives, instructional methods/strategies, and assessment methods. Complex would be preparing teacher candidates to become professionals: we have many different parts (practicum, foci, professional classes), and we want them to think, behave, and participate like contributing members of the profession.

- Document analysis of 10 design meetings over a 10-month period reveals the developmental “footprint”: it tracks and identifies the specific developmental concerns being unpacked at particular points in the project. Interviews with the core design team members (2 instructors, 1 lead TA) illuminate which aspects of the DE the program designers found helpful.

- The instructors in the case were responsible for teaching classroom assessment to teacher candidates enrolled in a teacher education program. The field of teacher education, particularly classroom assessment, faces a tricky situation: teachers are not assessing in the ways that we know help student learning. Much of what teachers currently do focuses on traditional notions of testing. At the level of teacher education, the problem is felt more acutely, because teacher candidates are not necessarily exhibiting the kinds of practice we would like to see from them. At my institution, two instructors are responsible for delivering a module on classroom assessment in 7 hours total. In brief, there are many constraints that we have to work around, many of which we have little control over. What we do have control over is how we deliver that instruction. After surveying different options, we became interested in integrating social media into teacher education as a way of building a community of learners. Our thinking was that assessment learning requires learners to actively engage with peers and to challenge their own experiences and conceptions of assessment. That was the vision that guided our work.

- So those were the beginning conditions. Let me fast forward and describe for you how far we got in the evaluation. Then we’ll look at how the innovation came to be.

- The development was marked by several key representative episodes: (1) creating a learning environment; (2) the use of Appreciative Inquiry (AI) to help explicate values and so gain clarity into the QUALITY of the program; (3) an example of how DE promotes collaboration; (4) the use of DE findings to engage clients in sense-making in order to formulate next steps.

- When I look back at the data, uncertainty was evident throughout the evaluation. The team was uncertain about...

- ... typically, data in an evaluation are made at the program level, describing quality...

- So let’s return now and unpack the case.
