A popular clustering algorithm is K-means, which follows an iterative approach to update the parameters of each cluster.


About research methodology


Recommended

Machine learning (10), by NYversity

This document summarizes part of a lecture on factor analysis from a machine learning course. It introduces the factor analysis model, which posits that observed data are generated by an underlying latent variable that is mapped to the observed space with noise. It describes the factor analysis model mathematically as a joint Gaussian distribution over the latent and observed variables. It also derives the E-step and M-step updates for maximum likelihood estimation of the factor analysis model parameters using the EM algorithm.

PRML Chapter 9, by Sunwoo Kim

1. The document discusses mixture models and the Expectation-Maximization (EM) algorithm. It covers K-means clustering, Gaussian mixture models, and applying EM to estimate parameters for these models.

2. EM is a general technique for finding maximum likelihood solutions for probabilistic models with latent variables. It works by iteratively computing expectations of the latent variables given current parameter estimates (E-step) and maximizing the likelihood function with respect to the parameters (M-step).

3. Each iteration is guaranteed not to decrease the likelihood, and the process is repeated until convergence. EM can be applied to problems like Gaussian mixtures, Bernoulli mixtures, and Bayesian linear regression by treating certain variables as latent.

8.clustering algorithm.k means.em algorithm, by Laura Petrosanu

The document discusses different clustering algorithms, including k-means and EM clustering. K-means aims to partition items into k clusters such that each item belongs to the cluster with the nearest mean. It works iteratively, assigning items to centroids and recomputing the centroids until the clusters no longer change. EM clustering generalizes k-means by computing probabilities of cluster membership based on probability distributions, with the goal of maximizing the overall probability of the items given the clusters. Both algorithms are used to group similar items in applications like market segmentation.

Neural nw k means, by Eng. Dr. Dennis N. Mwighusa

k-means is a simple but well-known algorithm for grouping objects (clustering). Again, all objects need to be represented as a set of numerical features. In addition, the user has to specify the number of groups (referred to as k) to identify. Each object can be thought of as a feature vector in an n-dimensional space, n being the number of features used to describe the objects to cluster. The algorithm then randomly chooses k points in that vector space; these points serve as the initial cluster centers. Next, each object is assigned to the center it is closest to. The distance measure is usually chosen by the user and determined by the learning task. After that, a new center is computed for each cluster by averaging the feature vectors of all objects assigned to it. The process of assigning objects and recomputing centers is repeated until it converges. With squared Euclidean distance, the algorithm can be proven to converge (to a local optimum) after a finite number of iterations. Several tweaks concerning the distance measure, the choice of initial centers, and the computation of new centers have been explored, as well as ways to estimate the number of clusters k. Yet the main principle always remains the same. In this project we discuss the K-means clustering algorithm, its implementation, and its application to unsupervised learning.
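The assignment/update loop described above can be sketched in plain Python. This is a minimal sketch, not the project's implementation: it assumes each object is a tuple of numbers, uses Euclidean distance (`math.dist`), draws the k initial centers at random from the data points themselves, and leaves a center where it is if its cluster ever becomes empty. The name `kmeans` and its parameters are illustrative.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd-style k-means on a list of equal-length numeric tuples (Euclidean distance).

    Returns (centers, labels), where labels[i] is the cluster index of points[i].
    """
    rng = random.Random(seed)
    # Initial centers: k distinct data points chosen at random.
    centers = [tuple(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest center.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points
        ]
        if new_labels == labels:
            break  # no point changed cluster, so the centers are stable
        labels = new_labels
        # Update step: each center moves to the mean of its assigned points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centers, labels
```

On `[(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]` with `k=2`, the three points near the origin end up in one cluster and the three near (10, 10) in the other; the loop stops as soon as an assignment pass changes no labels.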

Application of Graphic LASSO in Portfolio Optimization_Yixuan Chen & Mengxi J..., by Mengxi Jiang

- The document describes using graphical lasso to estimate the precision matrix of stock returns and apply portfolio optimization.

- Graphical lasso estimates the precision matrix instead of the covariance matrix to allow for sparsity. This makes the estimation more efficient for large datasets.

- The study uses 8 different models to simulate stock return data and compares the performance of graphical lasso, sample covariance, and shrinkage estimators on portfolio optimization of in-sample and out-of-sample test data. Graphical lasso performed best on out-of-sample test data, showing it can generate portfolios that generalize well.
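As a hedged illustration of the portfolio step, the sketch below turns a precision matrix Θ (the inverse covariance) into global minimum-variance weights, w = Θ1 / (1ᵀΘ1), where 1 is a vector of ones. The document does not state which portfolio objective the study optimizes; minimum variance is an assumption made here because it depends on the covariance only through Θ. In practice Θ could come from a graphical lasso fit, for example the `precision_` attribute of scikit-learn's `GraphicalLasso`, but the example uses a hand-written matrix, and `min_variance_weights` is a hypothetical name.

```python
import numpy as np


def min_variance_weights(precision):
    """Global minimum-variance portfolio weights from a precision matrix Theta.

    w = Theta @ 1 / (1^T Theta 1); the weights sum to 1 and short positions are allowed.
    """
    ones = np.ones(precision.shape[0])
    raw = precision @ ones        # Theta 1
    return raw / (ones @ raw)     # normalize by 1^T Theta 1
```

With uncorrelated assets of variance 1 and 4 (Θ = diag(1, 0.25)), the weights come out as 0.8 and 0.2, favoring the lower-variance asset.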

Lecture 18: Gaussian Mixture Models and Expectation Maximization, by butest

This document discusses Gaussian mixture models (GMMs) and the expectation-maximization (EM) algorithm. GMMs model data as coming from a mixture of Gaussian distributions, with each data point assigned soft responsibilities to the different components. EM is used to estimate the parameters of GMMs and other latent variable models. It iterates between an E-step, where responsibilities are computed based on current parameters, and an M-step, where new parameters are estimated to maximize the expected complete-data log-likelihood given the responsibilities. EM converges to a local optimum for fitting GMMs to data.
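The E-step/M-step cycle can be illustrated with a one-dimensional mixture. The sketch below is a minimal version under stated assumptions (1-D data, caller-supplied starting values, a fixed number of iterations, and a small variance floor that is not part of the textbook algorithm); `em_gmm_1d` is a hypothetical name. It also records the log-likelihood at each E-step, so the non-decreasing behavior of EM can be checked directly.

```python
import math


def normal_pdf(x, mean, var):
    """Density of N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gmm_1d(xs, means, variances, weights, n_iter=50):
    """Fit a 1-D Gaussian mixture by EM, starting from the given parameters.

    Returns (means, variances, weights, log_likelihood_trace).
    """
    means, variances, weights = list(means), list(variances), list(weights)
    k, n = len(means), len(xs)
    trace = []
    for _ in range(n_iter):
        # E-step: resp[i][j] = p(component j | x_i) under the current parameters.
        resp, log_lik = [], 0.0
        for x in xs:
            dens = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(dens)
            log_lik += math.log(total)
            resp.append([d / total for d in dens])
        trace.append(log_lik)  # log-likelihood of the parameters used in this E-step
        # M-step: re-estimate each component from its soft counts.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var_j, 1e-6)  # floor to avoid a collapsed component
    return means, variances, weights, trace
```

Started from means -1 and 1 on two well-separated groups centered at -2 and 3, the means move to the group averages, and the recorded log-likelihood never decreases from one iteration to the next.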

03 Data Mining Techniques, by Valerii Klymchuk

This document provides an overview of data mining techniques discussed in Chapter 3, including parametric and nonparametric models, statistical perspectives on point estimation and error measurement, Bayes' theorem, decision trees, neural networks, genetic algorithms, and similarity measures. Nonparametric techniques like neural networks, decision trees, and genetic algorithms are particularly suitable for data mining applications involving large, dynamically changing datasets.

Introduction to Support Vector Machines, by Silicon Mentor

For more info visit us at: http://www.siliconmentor.com/

Support vector machines (SVMs) are widely used binary classifiers, known for their ability to handle high-dimensional data. An SVM classifies data by separating the classes with a hyperplane that maximizes the margin between them. The data points closest to the hyperplane are known as support vectors. The selected decision boundary is therefore the one with the largest margin between classes, which is intended to minimize the generalization error.
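Training an SVM means solving an optimization problem for the hyperplane w·x + b = 0; the short sketch below does not do that. It only evaluates a given hyperplane, assuming labels in {-1, +1}: it computes the geometric margin (the smallest value of y(w·x + b)/‖w‖ over the data) and returns the points that attain it, which for the max-margin hyperplane would be the support vectors. `margin_and_support_vectors` is a hypothetical helper.

```python
import math


def margin_and_support_vectors(w, b, points, labels, tol=1e-9):
    """Evaluate a separating hyperplane w.x + b = 0 on labeled data (labels in {-1, +1}).

    Returns (margin, closest_points): the smallest signed distance y * (w.x + b) / ||w||
    and the points that attain it. A negative margin means some point is misclassified.
    """
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    dists = [
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm_w
        for x, y in zip(points, labels)
    ]
    margin = min(dists)
    closest = [x for x, d in zip(points, dists) if d - margin <= tol]
    return margin, closest
```

For w = (1, 1), b = -3, with (0, 0) and (1, 1) labeled -1 and (2, 2) and (3, 3) labeled +1, the margin is 1/√2 and the closest points are (1, 1) and (2, 2).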

Optimising Data Using K-Means Clustering Algorithm, by IJERA Editor

K-means is one of the simplest unsupervised learning algorithms that solve the well-known clustering problem. The procedure follows a simple way to classify a given data set into a certain number of clusters (assume k clusters) fixed a priori. The main idea is to define k centroids, one for each cluster. These centroids should be placed carefully, because different initial locations lead to different results. So the better choice is to place them as far away from each other as possible.
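One concrete way to place initial centroids "as far away from each other as possible" is a greedy farthest-first traversal, sketched below under the assumption of Euclidean distance and a caller-chosen first point. This is one possible reading of the advice, not necessarily the method in the paper; the widely used k-means++ initialization is a randomized variant of the same idea that samples points with probability proportional to their squared distance from the nearest chosen center. `farthest_point_init` is a hypothetical name.

```python
import math


def farthest_point_init(points, k, first=0):
    """Greedy farthest-first traversal for choosing k initial centroids.

    Starts from points[first], then repeatedly adds the point whose distance
    to its nearest already-chosen centroid is largest.
    """
    centers = [points[first]]
    # Distance from every point to its nearest chosen centroid so far.
    nearest = [math.dist(p, centers[0]) for p in points]
    while len(centers) < k:
        idx = max(range(len(points)), key=lambda i: nearest[i])
        centers.append(points[idx])
        # Update each point's nearest-centroid distance with the new centroid.
        nearest = [min(d, math.dist(p, points[idx])) for d, p in zip(nearest, points)]
    return centers
```

Starting from (0, 0) in `[(0, 0), (1, 0), (10, 0), (5, 5)]`, the traversal picks (10, 0) next and then (5, 5), skipping (1, 0), which sits close to an existing centroid.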

Parameter Optimisation for Automated Feature Point Detection, by Dario Panada

Parameter optimization for an automated feature point detection model was explored. Increasing the number of random displacements up to 20 improved performance, but additional increases did not. Larger patch sizes consistently improved performance. Increasing the number of decision trees did not affect performance for this single-stage model, unlike previous findings for a two-stage model. Overall, some parameter tuning was found to enhance the model's accuracy, but not all parameters significantly impacted results.

Cs229 notes9, by VuTran231

This document summarizes part of a lecture on factor analysis from Andrew Ng's CS229 course. It begins by reviewing maximum likelihood estimation of Gaussian distributions and its issues when the number of data points n is smaller than the dimension d. It then introduces the factor analysis model, which models data x as coming from a latent lower-dimensional variable z through x = μ + Λz + ε, where ε is Gaussian noise. The EM algorithm is derived for estimating the parameters of this model.
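To make the generative model x = μ + Λz + ε concrete, the sketch below draws samples from it, assuming z ~ N(0, I) and ε ~ N(0, Ψ) with diagonal Ψ, as in the standard factor analysis model. Under those assumptions the marginal distribution of x is Gaussian with mean μ and covariance ΛΛᵀ + Ψ, which the helper `fa_marginal_cov` computes. Both function names are hypothetical, and the EM fitting step is not shown.

```python
import numpy as np


def sample_factor_analysis(mu, Lam, psi_diag, n, rng):
    """Draw n samples of x = mu + Lam z + eps, with z ~ N(0, I_k), eps ~ N(0, diag(psi_diag)).

    mu: shape (d,), Lam: shape (d, k), psi_diag: shape (d,). Returns an (n, d) array.
    """
    d, k = Lam.shape
    z = rng.standard_normal((n, k))                          # latent factors
    eps = rng.standard_normal((n, d)) * np.sqrt(psi_diag)    # independent per-feature noise
    return mu + z @ Lam.T + eps


def fa_marginal_cov(Lam, psi_diag):
    """Covariance of x implied by the model: Lam Lam^T + Psi."""
    return Lam @ Lam.T + np.diag(psi_diag)
```

Comparing `np.cov(X, rowvar=False)` on a large sample with `fa_marginal_cov(Lam, psi)` is a quick sanity check that the sampler matches the model.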

Enhance The K Means Algorithm On Spatial Dataset, by AlaaZ

The document describes an enhancement to the standard k-means clustering algorithm. The enhancement aims to improve computational speed by storing additional information from each iteration, such as the closest cluster and the distance to it for each data point. This avoids recomputing distances to all cluster centers in subsequent iterations if a point does not change clusters. The document reports that this reduces the complexity from O(nkl) to O(nk), where n is the number of points, k the number of clusters, and l the number of iterations.
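The caching idea can be sketched as a single assignment pass. Assuming each point keeps its current cluster label and the distance to that cluster's center, the pass first measures the distance to the (possibly moved) own center; if it has not grown, the point stays put and the other k - 1 distances are skipped, otherwise all k distances are recomputed. This is a heuristic: a point that stays could, in principle, now be closer to some other center. The names below are hypothetical, and the function returns the number of distance evaluations so the savings are visible.

```python
import math


def cached_assign(points, centers, labels, dists):
    """One k-means assignment pass that reuses stored labels and distances.

    labels[i] is the current cluster of points[i] (None if not yet assigned) and
    dists[i] its stored distance to that cluster's center; both lists are updated
    in place. Returns the number of distance computations performed.
    """
    calls = 0
    for i, p in enumerate(points):
        if labels[i] is not None:
            d_own = math.dist(p, centers[labels[i]])
            calls += 1
            if d_own <= dists[i]:
                dists[i] = d_own  # not farther than before: keep the cluster
                continue
        # First pass, or the own center moved away: full search over all centers.
        all_d = [math.dist(p, c) for c in centers]
        calls += len(centers)
        labels[i] = min(range(len(centers)), key=all_d.__getitem__)
        dists[i] = all_d[labels[i]]
    return calls
```

On four points and two centers, the first pass costs 8 distance evaluations; a second pass with unchanged centers costs only 4, one per point.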

Data Science - Part VII - Cluster Analysis, by Derek Kane

This lecture provides an overview of clustering techniques, including K-Means, Hierarchical Clustering, and Gaussian Mixture Models. We will go through some methods of calibration and diagnostics and then apply the techniques to a recognizable dataset.

Image Processing, by Tuyen Pham

This document compares three image restoration techniques (Iterated Geometric Harmonics, Markov Random Fields, and Wavelet Decomposition) for removing noise from images. It describes each technique and the process used to test them. Noise was artificially added to images using different noise generation functions. Wavelet Decomposition and Markov Random Fields were then used to detect the noise locations. These noise locations were then used to create versions of the noisy images suitable for reconstruction via Iterated Geometric Harmonics. The reconstructed images were then compared to the original to evaluate the performance of each technique.

01.02 linear equations, by Andres Mendez-Vazquez

This document provides an introduction to systems of linear equations and matrix operations. It defines key concepts such as matrices, matrix addition and multiplication, and transitions between different bases. It presents an example of multiplying two matrices using NumPy. The document outlines how systems of linear equations can be represented using matrices and discusses solving systems using techniques like Gauss-Jordan elimination and elementary row operations. It also introduces the concepts of homogeneous and inhomogeneous systems.
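Gauss-Jordan elimination uses the three elementary row operations (swap, scale, replace) to reduce the augmented matrix [A | b] to reduced row echelon form, at which point the last column holds the solution. The sketch below assumes a square, nonsingular system and uses Python's `fractions.Fraction` for exact arithmetic, which keeps small hand-worked examples free of rounding; `gauss_jordan_solve` is a hypothetical name, and for floating-point work a library routine such as `numpy.linalg.solve` would be the usual choice.

```python
from fractions import Fraction


def gauss_jordan_solve(A, b):
    """Solve A x = b for square, nonsingular A by Gauss-Jordan elimination (exact arithmetic)."""
    n = len(A)
    # Augmented matrix [A | b] with exact rational entries.
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # Swap: bring the row with the largest-magnitude entry in this column up.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Scale: make the pivot entry equal to 1.
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        # Replace: eliminate this column from every other row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [row[n] for row in M]
```

For the system 2x + y - z = 8, -3x - y + 2z = -11, -2x + y + 2z = -3 it returns x = 2, y = 3, z = -1.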

A Condensation-Projection Method For The Generalized Eigenvalue Problem, by Scott Donald

This document describes a condensation-projection method for solving large generalized eigenvalue problems. The method works by selecting a small number of "master" variables to represent the full problem. The remaining "slave" variables are eliminated, resulting in a much smaller eigenvalue problem involving just the master variables. Good approximations of selected eigenvalues and eigenvectors of the original large problem can be obtained from the condensed problem if the master variables approximate the desired eigenvectors well. The method is well-suited for parallel computing.

Icitam2019 2020 book_chapter, by Ban Bang

1. The document describes a heuristic approach for solving the cluster traveling salesman problem (CTSP) using genetic algorithms.

2. The proposed algorithm divides nodes into pre-specified clusters, uses GA to find a Hamiltonian path for each cluster, then combines the optimized cluster paths to form a full tour.

3. The algorithm was tested on symmetric TSPLIB instances and was shown to find high-quality solutions faster than two other metaheuristic approaches for CTSP.

Data analysis of weather forecasting, by Trupti Shingala, WAS, CPACC, CPWA, JAWS, CSM

Performed analysis on temperature, wind speed, humidity, and pressure datasets and implemented decision tree and clustering models to predict the possibility of rain.

Created graphs and plots using algorithms such as k-nearest neighbors, naïve Bayes, decision trees, and k-means clustering.

overviewPCA, by Edwin Heredia

Principal component analysis (PCA) is a dimensionality reduction technique that identifies important contributing components in big data. It works by transforming the data to a new coordinate system such that the greatest variance by some projection of the data lies on the first coordinate (called the first principal component), the second greatest variance on the second coordinate, and so on. Specifically, PCA analyzes the covariance matrix of the data to obtain its eigenvectors and eigenvalues. It then chooses principal components corresponding to the largest eigenvalues, which identify the directions with the most variance in the data.
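The covariance-eigenvector procedure described above fits in a few lines of NumPy. This is a minimal sketch, assuming rows of `X` are observations and columns are features; it uses `numpy.linalg.eigh`, which is meant for symmetric matrices and returns eigenvalues in ascending order, so the indices are reversed to put the largest-variance direction first. `pca` is a hypothetical helper name.

```python
import numpy as np


def pca(X, n_components):
    """PCA via eigendecomposition of the sample covariance matrix.

    Returns (components, variances): components has shape (n_components, d), one unit
    vector per row, ordered from largest to smallest explained variance.
    """
    Xc = X - X.mean(axis=0)                  # center each feature
    cov = np.cov(Xc, rowvar=False)           # d x d sample covariance
    eigvals, eigvecs = np.linalg.eigh(cov)   # ascending eigenvalues, eigenvectors as columns
    order = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, order].T, eigvals[order]
```

Projecting the centered data onto the components, `(X - X.mean(axis=0)) @ comps.T`, gives the coordinates in the new system. For points lying on the line y = x, the first component is ±(1, 1)/√2 (the sign of an eigenvector is arbitrary).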

2008 spie gmm, by Pioneer Natural Resources

This document describes a new method for training Gaussian mixture classifiers for hyperspectral image classification. The method uses dynamic pruning, splitting, and merging of Gaussian mixture kernels to automatically determine the appropriate number of components during training. This "structural learning" approach is employed to model and classify hyperspectral imagery data. Experimental results on AVIRIS hyperspectral data sets suggest this approach is a potential alternative to traditional Gaussian mixture modeling and classification using expectation-maximization.

1607.01152.pdf, by AnkitBiswas31

This document proposes methods for evaluating the quality of unsupervised anomaly detection algorithms when labeled data is unavailable. It introduces two label-free performance criteria called Excess-Mass (EM) and Mass-Volume (MV) curves, which are based on existing concepts but adapted here. To address issues with high-dimensional data, a feature subsampling methodology is described. An experiment evaluates three anomaly detection algorithms on various datasets using the proposed EM and MV criteria, finding they accurately discriminate algorithm performance compared to labeled ROC and PR criteria.

Clustering：k-means, expect-maximization and gaussian mixture model, by jins0618

This document discusses K-means clustering, Expectation Maximization (EM), and Gaussian mixture models (GMM). It begins with an overview of unsupervised learning and introduces K-means as a simple clustering algorithm. It then describes EM as a general algorithm for maximum likelihood estimation that can be applied to problems like GMM. GMM is presented as a density estimation technique that models data using a weighted sum of Gaussian distributions. EM is described as a method for estimating the parameters of a GMM from data.

Machine learning (5), by NYversity

This document discusses regularization and model selection techniques for machine learning models. It describes cross-validation methods like hold-out validation and k-fold cross-validation that evaluate models on held-out data to select models that generalize well. Feature selection is discussed as an important application of model selection. Bayesian statistics, with prior distributions placed on the parameters, is introduced as a regularization technique that favors models with smaller parameter values.
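k-fold cross-validation partitions the data into k folds and, in turn, holds out each fold for validation while training on the rest; hold-out validation corresponds to a single train/validation split. The sketch below only generates the index splits (the model-fitting step is left out), assuming a shuffled, seeded assignment of indices to folds; `k_fold_indices` is a hypothetical name.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds over range(n)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)          # shuffle once, reproducibly
    folds = [idx[i::k] for i in range(k)]     # k roughly equal-sized folds
    for i in range(k):
        # Train on every fold except fold i, validate on fold i.
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]
```

Each index appears in exactly one validation fold, so averaging a model's validation score over the k splits uses every data point for validation once.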

Cis435 week03, by ashish bansal

This document discusses techniques for bounding summations, which are important for analyzing algorithms. It covers four main methods: using induction, bounding the terms, splitting the summations, and approximation by integrals. It also provides examples and explanations of each technique. Counting theory, probability distributions, random variables, and randomized algorithms are briefly introduced. Randomized Quicksort is presented as an example of a randomized algorithm that avoids worst-case behavior by introducing randomness into the partitioning step.
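Randomized quicksort picks its pivot uniformly at random, so no fixed input reliably triggers the quadratic worst case and the expected number of comparisons is O(n log n) for every input. The sketch below is a simplified, out-of-place version (a three-way split into smaller, equal, and larger elements) rather than the in-place partitioning used in textbook presentations; `randomized_quicksort` is a hypothetical name.

```python
import random


def randomized_quicksort(a, rng=None):
    """Return a sorted copy of list a, choosing each pivot uniformly at random."""
    rng = rng or random.Random()
    if len(a) <= 1:
        return list(a)
    pivot = a[rng.randrange(len(a))]  # random pivot: the source of randomness
    less = [x for x in a if x < pivot]
    equal = [x for x in a if x == pivot]
    greater = [x for x in a if x > pivot]
    return randomized_quicksort(less, rng) + equal + randomized_quicksort(greater, rng)
```

An already reversed input such as `list(range(50, 0, -1))`, which makes a deterministic last-element pivot quadratic, is sorted in expected O(n log n) time here.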

Numeros complejos y_azar, by Rodrigo Bulnes Aguilar

This document summarizes a new stochastic optimization method called Complex Simultaneous Perturbation Stochastic Approximation (CSPSA) that can directly optimize real-valued functions of complex variables. CSPSA estimates the complex gradient of the target function within the field of complex numbers and generates a sequence of complex estimates that converges to the optimal solution. The method has advantages over existing approaches that optimize in the real domain, as calculations are simpler using complex variables and the complex structure can improve performance. Numerical tests on quantum state tomography demonstrate CSPSA achieves solutions orders of magnitude closer to the true minimum compared to other methods using the same resources.

How to manage Customer Tips with Odoo 17 Point Of Sale, by Celine George

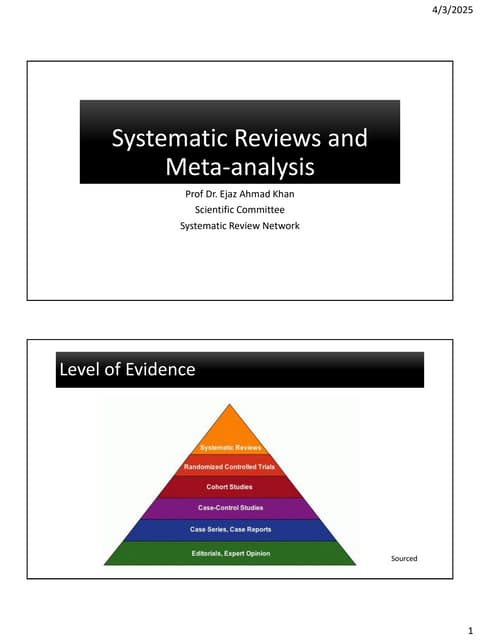

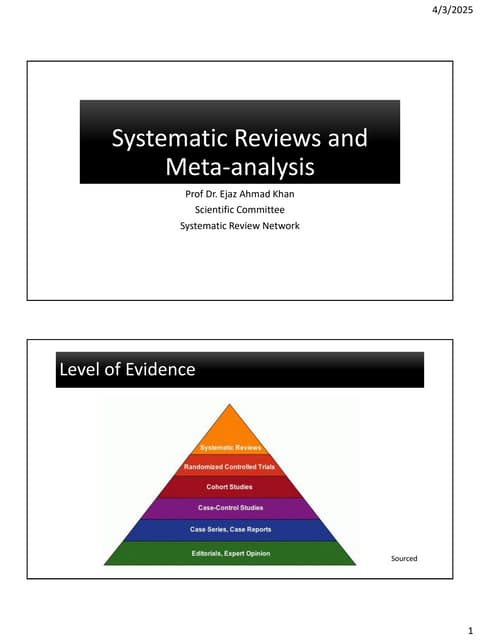

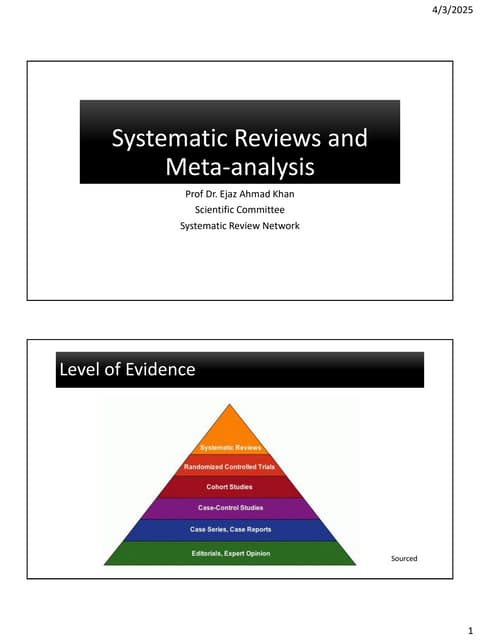

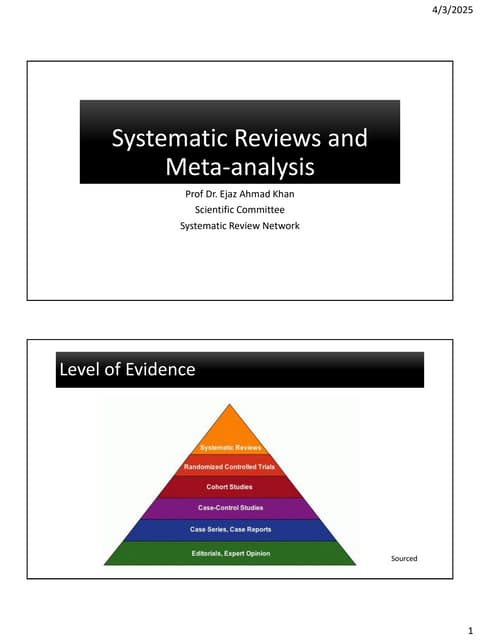

In the context of point-of-sale (POS) systems, a tip refers to the optional amount of money a customer leaves for the service they received. It's a way to show appreciation to the cashier, server, or whoever provided the service.

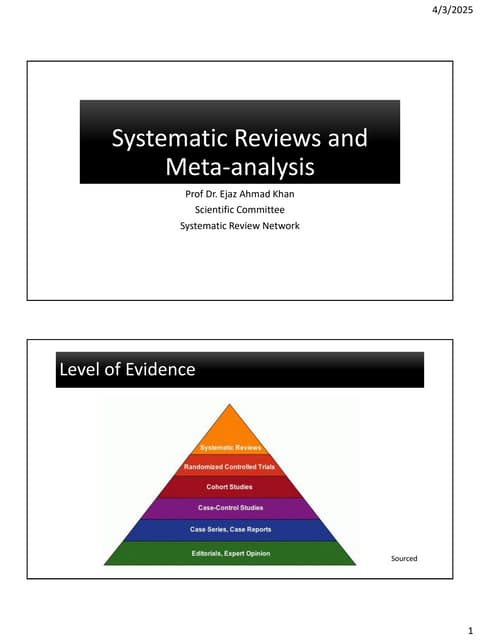

Introduction to Systematic Reviews - Prof Ejaz KhanSystematic Reviews Network (SRN)

╠²

A Systematic Review:

• Provides a clear and transparent process
• Facilitates efficient integration of information for rational decision making
• Demonstrates where the effects of health care are consistent and where they vary
• Minimizes bias (systematic errors) and reduces chance effects
• Can be readily updated, as needed
• Meta-analysis can provide more precise estimates than individual studies
• Allows decisions based on the whole of the evidence, not just part of it


How to manage Customer Tips with Odoo 17 Point Of Sale

How to manage Customer Tips with Odoo 17 Point Of SaleCeline George

╠²

In the context of point-of-sale (POS) systems, a tip refers to the optional amount of money a customer leaves for the service they received. It's a way to show appreciation to the cashier, server, or whoever provided the service.Introduction to Systematic Reviews - Prof Ejaz Khan

Introduction to Systematic Reviews - Prof Ejaz KhanSystematic Reviews Network (SRN)

╠²

A Systematic Review:

Provides a clear and transparent process

ŌĆó Facilitates efficient integration of information for rational decision

making

ŌĆó Demonstrates where the effects of health care are consistent and

where they do vary

ŌĆó Minimizes bias (systematic errors) and reduce chance effects

ŌĆó Can be readily updated, as needed.

ŌĆó Meta-analysis can provide more precise estimates than individual

studies

ŌĆó Allows decisions based on evidence , whole of it and not partialU.S. Department of Education certification

U.S. Department of Education certificationMebane Rash

╠²


- 3. Here, μ1 and μ2 are the centroids of the two clusters; they are the parameters that identify each cluster.
  - A popular clustering algorithm, K-means, follows an iterative approach to update the parameters of each cluster.
  - More specifically, it computes the mean (or centroid) of each cluster and then calculates the distance from each centroid to every data point.
  - Each data point is then labeled as belonging to the cluster identified by its closest centroid.
  - This process is repeated until some convergence criterion is met.
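The K-means loop described on this slide can be sketched in Python with NumPy. This is a minimal illustration rather than the presenter's code: the function names `kmeans` and `nearest_centroid`, the random initialisation from data points, the handling of empty clusters, and the convergence test (centroids stop moving) are all assumptions, and the two-blob data set is hypothetical.

```python
import numpy as np


def nearest_centroid(X, centroids):
    """Index of the closest centroid for every row of X (a hard assignment)."""
    # Pairwise Euclidean distances between points and centroids, shape (N, k).
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)


def kmeans(X, k, max_iter=100, seed=0):
    """Minimal K-means: alternate nearest-centroid labelling and mean updates."""
    rng = np.random.default_rng(seed)
    # Start from k distinct data points chosen at random (one common choice).
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(max_iter):
        labels = nearest_centroid(X, centroids)
        # New centroid = mean of the points labelled with it;
        # an empty cluster keeps its previous centroid.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Convergence criterion: the centroids have stopped moving.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return centroids, nearest_centroid(X, centroids)


# Hypothetical toy data: two blobs centred at (0, 0) and (5, 5).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)), rng.normal(5.0, 0.5, size=(50, 2))])
centroids, labels = kmeans(X, k=2)
print(np.round(centroids, 2))
```

Note that `labels` contains exactly one integer per point, which is the "hard clustering" property discussed on the next slide.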

- 4. One important characteristic of K-means is that it is a hard clustering method: it associates each point with one and only one cluster.
  - A limitation of this approach is that it gives no uncertainty measure or probability telling us how strongly a data point is associated with a specific cluster.
  - Gaussian Mixture Models, or simply GMMs, are designed to provide exactly this.
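To make the hard/soft contrast concrete, here is a small 1-D illustration in plain Python. The two components (means 0 and 4, unit standard deviation, equal weights) and the helper names `soft_assign` and `hard_assign` are hypothetical choices for this sketch. A point exactly halfway between the means receives an arbitrary hard label, whereas the mixture reports a 50/50 membership probability.

```python
import math


def normal_pdf(x, mean, std):
    """Density of a 1-D Gaussian N(mean, std^2) at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def soft_assign(x, means, stds, weights):
    """Posterior probability that x was generated by each component."""
    joint = [w * normal_pdf(x, m, s) for m, s, w in zip(means, stds, weights)]
    total = sum(joint)
    return [j / total for j in joint]


def hard_assign(x, means):
    """K-means-style label: index of the nearest mean, with no uncertainty attached."""
    return min(range(len(means)), key=lambda k: abs(x - means[k]))


means, stds, weights = [0.0, 4.0], [1.0, 1.0], [0.5, 0.5]
for x in (0.5, 2.0):
    probs = soft_assign(x, means, stds, weights)
    print(x, hard_assign(x, means), [round(p, 3) for p in probs])
```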

- 5. A Gaussian mixture is a function composed of several Gaussians, each identified by k ∈ {1, …, K}, where K is the number of clusters in the dataset.
  - Each Gaussian k in the mixture has the following parameters:
    - A mean μ that defines its centre.
    - A covariance Σ that defines its width. In a multivariate setting, this corresponds to the dimensions of an ellipsoid.
    - A mixing probability π that defines the weight of the Gaussian in the mixture, i.e. how big or small it will be.

- 7. There are three Gaussian functions, hence K = 3.
  - Each Gaussian explains the data contained in one of the three clusters.
  - The mixing coefficients are themselves probabilities and must meet this condition: $\sum_{k=1}^{K} \pi_k = 1$, with $0 \le \pi_k \le 1$.
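A Gaussian mixture with the parameters listed on slides 5 and 7 can be evaluated as $p(x) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \sigma_k^2)$. The sketch below assumes a 1-D mixture with K = 3 and hypothetical parameter values; the function names are illustrative. It also rejects mixing coefficients that violate the probability condition above.

```python
import numpy as np


def normal_pdf(x, mean, var):
    """1-D Gaussian density with the given mean and variance."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def mixture_pdf(x, weights, means, variances):
    """p(x) = sum_k pi_k * N(x | mu_k, sigma_k^2) for a 1-D Gaussian mixture."""
    weights = np.asarray(weights, dtype=float)
    # Mixing coefficients are probabilities: non-negative and summing to 1.
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("mixing coefficients must be non-negative and sum to 1")
    x = np.asarray(x, dtype=float)
    return sum(w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances))


# Hypothetical K = 3 mixture: weights pi_k, centres mu_k and variances sigma_k^2.
weights = [0.5, 0.3, 0.2]
means = [-4.0, 0.0, 5.0]
variances = [1.0, 0.5, 2.0]
grid = np.linspace(-15.0, 15.0, 3001)
density = mixture_pdf(grid, weights, means, variances)
```

Because the weights sum to one and each component integrates to one, the mixture is itself a valid probability density.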

- 8. How do we determine the optimal values for these parameters?
  - We must ensure that each Gaussian fits the data points belonging to its cluster.
  - This is exactly what maximum likelihood estimation does.
  - In general, the Gaussian density function is given by $\mathcal{N}(x \mid \mu, \Sigma) = \frac{1}{(2\pi)^{D/2} |\Sigma|^{1/2}} \exp\left(-\frac{1}{2} (x - \mu)^{\top} \Sigma^{-1} (x - \mu)\right)$.

- 9. Here, x represents our data points and D is the number of dimensions of each data point.
  - μ and Σ are the mean and covariance, respectively.
  - If we have a dataset of N = 1000 three-dimensional points (D = 3), then x is a 1000 × 3 matrix, μ is a 1 × 3 vector, and Σ is a 3 × 3 matrix.
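The density formula and the shapes from slide 9 can be checked with a vectorised NumPy sketch. The function name `gaussian_density`, the random data and the particular covariance matrix are assumptions made for illustration; the quadratic form is computed with a linear solve instead of an explicit inverse, a common numerical choice.

```python
import numpy as np


def gaussian_density(x, mu, sigma):
    """Evaluate N(x | mu, Sigma) for every row of x.

    x: (N, D) data matrix, mu: (1, D) mean row vector, sigma: (D, D) covariance.
    Returns an array of N densities.
    """
    D = x.shape[1]
    diff = x - mu  # broadcasting: (N, D) - (1, D) -> (N, D)
    # Quadratic form (x - mu)^T Sigma^{-1} (x - mu) for each row, via a linear solve.
    quad = np.einsum("nd,nd->n", diff, np.linalg.solve(sigma, diff.T).T)
    norm_const = 1.0 / np.sqrt((2.0 * np.pi) ** D * np.linalg.det(sigma))
    return norm_const * np.exp(-0.5 * quad)


rng = np.random.default_rng(0)
x = rng.normal(size=(1000, 3))          # N = 1000 points, D = 3
mu = np.zeros((1, 3))                   # 1 x 3 mean vector
sigma = np.array([[2.0, 0.3, 0.0],
                  [0.3, 1.0, 0.2],
                  [0.0, 0.2, 0.5]])     # 3 x 3 symmetric positive-definite covariance
p = gaussian_density(x, mu, sigma)
print(x.shape, mu.shape, sigma.shape, p.shape)
```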

- 10. We will also find it useful to take the log of this equation, which is given by $\ln \mathcal{N}(x \mid \mu, \Sigma) = -\frac{D}{2}\ln(2\pi) - \frac{1}{2}\ln|\Sigma| - \frac{1}{2}(x - \mu)^{\top}\Sigma^{-1}(x - \mu)$.
  - If we sum this over all data points, differentiate with respect to the mean and covariance, and set the derivatives to zero, we obtain the optimal values for these parameters; these solutions are the Maximum Likelihood Estimates (MLE).
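For a single Gaussian, setting those derivatives to zero gives the well-known closed-form estimates: the sample mean and the sample covariance normalised by N (not N − 1). The sketch below, with hypothetical function names and synthetic data, implements the log-density above and checks that moving away from the MLE lowers the total log-likelihood.

```python
import numpy as np


def gaussian_log_density(x, mu, sigma):
    """ln N(x | mu, Sigma) = -D/2 ln(2 pi) - 1/2 ln|Sigma| - 1/2 (x-mu)^T Sigma^{-1} (x-mu)."""
    D = x.shape[1]
    diff = x - mu
    quad = np.einsum("nd,nd->n", diff, np.linalg.solve(sigma, diff.T).T)
    # slogdet is numerically safer than log(det(...)).
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * D * np.log(2.0 * np.pi) - 0.5 * logdet - 0.5 * quad


def gaussian_mle(x):
    """Closed-form MLE obtained by setting the log-likelihood derivatives to zero."""
    mu_hat = x.mean(axis=0, keepdims=True)      # sample mean, shape (1, D)
    diff = x - mu_hat
    sigma_hat = diff.T @ diff / x.shape[0]       # divides by N, not N - 1
    return mu_hat, sigma_hat


rng = np.random.default_rng(42)
x = rng.multivariate_normal(mean=[1.0, -2.0, 0.5], cov=np.diag([1.0, 2.0, 0.5]), size=1000)
mu_hat, sigma_hat = gaussian_mle(x)
best = gaussian_log_density(x, mu_hat, sigma_hat).sum()
# Shifting the mean away from the MLE can only lower the total log-likelihood.
worse = gaussian_log_density(x, mu_hat + 0.1, sigma_hat).sum()
print(best, worse)
```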

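- 11. Expectation-Maximization algorithm
  - For the full mixture, finding the parameters this way would prove to be very hard: the log-likelihood $\ln p(X \mid \theta) = \sum_{n=1}^{N} \ln \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x_n \mid \mu_k, \Sigma_k)$ contains the logarithm of a sum, so setting its derivatives to zero gives no closed-form solution.
  - Fortunately, there is an iterative method we can use to achieve this purpose.
  - It is called Expectation-Maximization, or simply the EM algorithm.
  - It is widely used for optimization problems where the objective function has complexities such as the one just encountered for the GMM case.
  - Let the parameters of our model be $\theta = \{\pi, \mu, \Sigma\}$.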
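The objective that EM maximises, the GMM log-likelihood for θ = {π, μ, Σ}, can be computed as follows. This sketch assumes NumPy, uses hypothetical function names and toy data, and applies the log-sum-exp trick (subtracting the row maximum) so that very small component densities do not underflow. The inner sum over k is visible in the code, which is why no closed-form solution exists.

```python
import numpy as np


def log_gaussian(x, mu, sigma):
    """Row-wise ln N(x | mu, Sigma) for an (N, D) array x."""
    D = x.shape[1]
    diff = x - mu
    quad = np.einsum("nd,nd->n", diff, np.linalg.solve(sigma, diff.T).T)
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * (D * np.log(2.0 * np.pi) + logdet + quad)


def gmm_log_likelihood(x, pis, mus, sigmas):
    """ln p(X | theta) = sum_n ln sum_k pi_k N(x_n | mu_k, Sigma_k)."""
    # (N, K) matrix of ln pi_k + ln N(x_n | mu_k, Sigma_k).
    log_terms = np.column_stack([
        np.log(pi) + log_gaussian(x, mu, sigma) for pi, mu, sigma in zip(pis, mus, sigmas)
    ])
    # Log-sum-exp over k, shifted by the row maximum to avoid underflow.
    m = log_terms.max(axis=1, keepdims=True)
    return float(np.sum(m[:, 0] + np.log(np.exp(log_terms - m).sum(axis=1))))


# Hypothetical data and parameters theta = (pi, mu, Sigma) for K = 2 in 2-D.
rng = np.random.default_rng(0)
x = np.vstack([rng.normal(-2.0, 1.0, size=(100, 2)), rng.normal(3.0, 1.0, size=(100, 2))])
theta = ([0.5, 0.5], [np.full(2, -2.0), np.full(2, 3.0)], [np.eye(2), np.eye(2)])
print(gmm_log_likelihood(x, *theta))
```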

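- 12. Step 1: Initialise θ. For instance, we can use the results of a previous K-means run as a good starting point for the algorithm.
  - Step 2 (Expectation step): Evaluate $Q(\theta', \theta) = \mathbb{E}_{Z \mid X, \theta}[\ln p(X, Z \mid \theta')] = \sum_{Z} p(Z \mid X, \theta) \ln p(X, Z \mid \theta')$, where Z denotes the latent cluster assignments.
  - Step 3 (Maximization step): Find the revised parameters θ* using $\theta^* = \arg\max_{\theta'} Q(\theta', \theta)$.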
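For a GMM, the E-step reduces to computing the responsibilities $\gamma_{nk} = p(z_n = k \mid x_n, \theta)$, and maximising Q has closed-form updates for π, μ and Σ. The following NumPy sketch is one possible implementation under stated assumptions, not the presenter's code: `em_gmm` is a hypothetical name, means are initialised by farthest-point selection as a simple stand-in for the K-means initialisation suggested in Step 1, a small `reg` term is added to each covariance for numerical stability, and iteration stops when the log-likelihood improves by less than `tol`.

```python
import numpy as np


def log_gaussian(x, mu, sigma):
    """Row-wise ln N(x | mu, Sigma) for an (N, D) array x."""
    D = x.shape[1]
    diff = x - mu
    quad = np.einsum("nd,nd->n", diff, np.linalg.solve(sigma, diff.T).T)
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * (D * np.log(2.0 * np.pi) + logdet + quad)


def em_gmm(x, K, n_iter=200, tol=1e-6, seed=0, reg=1e-6):
    """Fit a K-component Gaussian mixture to x of shape (N, D) with EM."""
    N, D = x.shape
    rng = np.random.default_rng(seed)
    # Step 1: initialise theta = {pi, mu, Sigma}. Means come from farthest-point
    # selection (a stand-in for K-means); covariances start as the data covariance.
    idx = [int(rng.integers(N))]
    for _ in range(1, K):
        d2 = np.min([np.sum((x - x[i]) ** 2, axis=1) for i in idx], axis=0)
        idx.append(int(d2.argmax()))
    mus = x[idx].astype(float)
    pis = np.full(K, 1.0 / K)
    base_cov = np.atleast_2d(np.cov(x, rowvar=False)) + reg * np.eye(D)
    sigmas = np.array([base_cov.copy() for _ in range(K)])
    prev_ll = -np.inf
    for _ in range(n_iter):
        # Step 2 (E-step): responsibilities gamma[n, k] = p(z_n = k | x_n, theta).
        log_terms = np.column_stack(
            [np.log(pis[k]) + log_gaussian(x, mus[k], sigmas[k]) for k in range(K)]
        )
        m = log_terms.max(axis=1, keepdims=True)
        log_norm = m + np.log(np.exp(log_terms - m).sum(axis=1, keepdims=True))
        gamma = np.exp(log_terms - log_norm)
        ll = float(log_norm.sum())  # log-likelihood under the current theta
        # Step 3 (M-step): closed-form maximiser of Q for a Gaussian mixture.
        Nk = gamma.sum(axis=0)
        pis = Nk / N
        mus = (gamma.T @ x) / Nk[:, None]
        for k in range(K):
            diff = x - mus[k]
            sigmas[k] = (gamma[:, k, None] * diff).T @ diff / Nk[k] + reg * np.eye(D)
        # Stop once the log-likelihood no longer improves meaningfully.
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return pis, mus, sigmas, gamma


# Hypothetical data: 300 correlated points around (0, 0) and 200 around (6, 6).
rng = np.random.default_rng(1)
x = np.vstack([
    rng.multivariate_normal([0.0, 0.0], [[1.0, 0.5], [0.5, 1.0]], size=300),
    rng.multivariate_normal([6.0, 6.0], [[0.5, 0.0], [0.0, 0.5]], size=200),
])
pis, mus, sigmas, gamma = em_gmm(x, K=2)
print(np.round(pis, 2), np.round(mus, 2))
```

The returned `gamma` holds soft memberships from the last E-step, so each row is a probability distribution over clusters rather than a single hard label.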