analyzing hdfs files using apace spark and mapreduce FixedLengthInputformat

- 1. Analyzing HDFS Files using Apace Spark and Mapreduce FixedLengthInputFormat leoricklin@gmail.com the source code is here

- 2. MAPREDUCE-1176: FixedLengthInputFormat and FixedLengthRecordReader (fixed in 2.3.0) by Mariappan Asokan, BitsOfInfo Addition of FixedLengthInputFormat and FixedLengthRecordReader in the org. apache.hadoop.mapreduce.lib.input package. These two classes can be used when you need to read data from files containing fixed length (fixed width) records. Such files have no CR/LF (or any combination thereof), no delimiters etc, but each record is a fixed length, and extra data is padded with spaces.

- 3. One 2GB gigantic line within a file issue [stackoverflow] Considering the String class' length method returns an int, the maximum length that would be returned by the method would be Integer. MAX_VALUE, which is 2^31 - 1 (or approximately 2 billion.) In terms of lengths and indexing of arrays, (such as char[], which is probably the way the internal data representation is implemented for Strings),... val rdd = sc.textFile("hdfs:///user/leo/test.txt/nolr2G-1.txt") rdd.count ... ERROR Executor: Exception in task 0.0 in stage 14.0 (TID 236) java.lang.OutOfMemoryError: Java heap space at java.util.Arrays.copyOf(Arrays.java:2271)

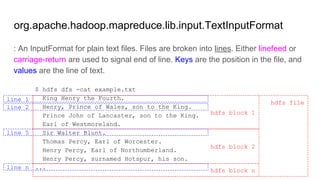

- 4. hdfs file org.apache.hadoop.mapreduce.lib.input.TextInputFormat : An InputFormat for plain text files. Files are broken into lines. Either linefeed or carriage-return are used to signal end of line. Keys are the position in the file, and values are the line of text. $ hdfs dfs -cat example.txt King Henry the Fourth. Henry, Prince of Wales, son to the King. Prince John of Lancaster, son to the King. Earl of Westmoreland. Sir Walter Blunt. Thomas Percy, Earl of Worcester. Henry Percy, Earl of Northumberland. Henry Percy, surnamed Hotspur, his son. ... line 1 line 2 line n hdfs block 1 hdfs block 2 line 5 hdfs block n

- 5. Split & Record ŌØÅ An input split is a chunk of the input that is processed by a single map. ŌØÅ Each split is divided into records, and the map processes each recordŌĆöa key- value pairŌĆöin turn. ŌØÅ By default the split size is dfs.block.size.[1] HDFS Text File Split 1 (block 1) Split 2 (block 2) Record 1 (line 1) Record 2 (line 2) Record 3 (line 3) Record 4 (line 4) Record 5 (line 5) Record 6 (line 6) HDFS Text File Split Split Split Split Split Split Split Record > 2GB CR/LF Normal text file One 2GB gigantic line within a file [1] Tom White, ŌĆ£Hadoop:The Definitive Guide, 3rd EdtionŌĆØ, p.234, 2012

- 6. File Ōæócombine last part of previous block with first part of current block Split Check length of records with FixedLengthInputFormat (1) fixed blk fixed blk Record Record Record len len len len len len len ŌæĀRead blocks of fixed length except the last one ŌæĪsplit blocks by n and compute length of each record HDFS File Split Split Record Record Record Record Record Record CR/LF blk blk blk blk blk blk blk blk blk blk blk blk blk blk blk Split-1 Split-2 len len len Record Record Record len len len Ōæżsort splits by reading position ŌæŻgroup splits by each file len Ōæźcombine last part of previous block with first part of next block

- 7. Split Check length of records with FixedLengthInputFormat (2) HDFS File Split Split Split Split Split Split Split Record > 2GB blk blk blk blk blk blk blk blk blk blk blk blk blk blk blk Fixed Length Record len len len ŌæĀRead blocks of fixed length except the last one ŌæĪsplit blocks by n and compute length of each record Ō×écombine last part of previous block with first part of next block File Record ŌæŻgroup splits by file Ōæżsort splits by reading position Ōæźcombine last part of previous block with first part of next block len len fixed blk fixed blk Split-1 Split-2 len

- 8. Validation (1) $ hdfs dfs -ls /user/leo/test/ -rw-r--r-- 1 leo leo 2147483669 2015-10-06 07:40 /user/leo/test/nolr2G-1.txt -rw-r--r-- 1 leo leo 2147483669 2015-10-06 09:19 /user/leo/test/nolr2G-2.txt -rw-r--r-- 1 leo leo 2147483669 2015-10-07 00:53 /user/leo/test/nolr2G-3.txt $ hdfs dfs -cat /user/leo/test/nolr2G-1.txt 01234567890123456789...........0123456789 0123456789 scala> recordLenOfFile.map{ case (path, stat) => f"[${path}][${stat.toString()}]"}.collect(). foreach(println) ... INFO TaskSetManager: Finished task 47.0 in stage 9.0 (TID 223) in 16 ms on localhost (48/48) ... [hdfs://sandbox.hortonworks.com:8020/user/leo/test/nolr2G-1.txt][stats: (count: 1, mean: 2147483648.000000, stdev: 0.000000, max: 2147483648.000000, min: 2147483648.000000), NaN: 0] [hdfs://sandbox.hortonworks.com:8020/user/leo/test/nolr2G-2.txt][stats: (count: 1, mean: 2147483648.000000, stdev: 0.000000, max: 2147483648.000000, min: 2147483648.000000), NaN: 0] [hdfs://sandbox.hortonworks.com:8020/user/leo/test/nolr2G-3.txt][stats: (count: 1, mean: 2147483648.000000, stdev: 0.000000, max: 2147483648.000000, min: 2147483648.000000), NaN: 0] 2GB + 10 bytes + ŌĆ£nŌĆØ + 10 bytes The output shows how many lines and the statistics for the length of lines in each file. Here we found there exists one line of 2147483648 chars.

- 9. Validation (2) $ hdfs dfs -ls /user/leo/test.2/ -rw-r--r-- 1 leo leo 5258688 2015-10-07 06:56 test.2/all-bible-1.txt -rw-r--r-- 1 leo leo 5258688 2015-10-07 06:56 test.2/all-bible-2.txt -rwxr-xr-x 1 leo leo 5258688 2015-10-06 02:12 test.2/all-bible-3.txt $ hdfs dfs -cat test.2/all-bible-1.txt|wc -l 117154 scala> recordLenOfFile.map{ case (path, stat) => f"[${path}][${stat.toString()}]"}.collect(). foreach(println) ... INFO TaskSetManager: Finished task 0.0 in stage 13.0 (TID 233) in 115 ms on localhost (3/3) ... [hdfs://sandbox.hortonworks.com:8020/user/leo/test.2/all-bible-2.txt][stats: (count: 116854, mean: 43.866937, stdev: 30.647162, max: 88.000000, min: 1.000000), NaN: 0] [hdfs://sandbox.hortonworks.com:8020/user/leo/test.2/all-bible-3.txt][stats: (count: 116854, mean: 43.866937, stdev: 30.647162, max: 88.000000, min: 1.000000), NaN: 0] [hdfs://sandbox.hortonworks.com:8020/user/leo/test.2/all-bible-1.txt][stats: (count: 116854, mean: 43.866937, stdev: 30.647162, max: 88.000000, min: 1.000000), NaN: 0]

![One 2GB gigantic line within a file issue

[stackoverflow] Considering the String class' length method returns an int, the

maximum length that would be returned by the method would be Integer.

MAX_VALUE, which is 2^31

- 1 (or approximately 2 billion.)

In terms of lengths and indexing of arrays, (such as char[], which is probably the

way the internal data representation is implemented for Strings),...

val rdd = sc.textFile("hdfs:///user/leo/test.txt/nolr2G-1.txt")

rdd.count

...

ERROR Executor: Exception in task 0.0 in stage 14.0 (TID 236)

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:2271)](https://image.slidesharecdn.com/121-151113033528-lva1-app6892/85/analyzing-hdfs-files-using-apace-spark-and-mapreduce-FixedLengthInputformat-3-320.jpg)

![Split & Record

ŌØÅ An input split is a chunk of the input that is processed by a single map.

ŌØÅ Each split is divided into records, and the map processes each recordŌĆöa key-

value pairŌĆöin turn.

ŌØÅ By default the split size is dfs.block.size.[1]

HDFS Text File

Split 1 (block 1) Split 2 (block 2)

Record 1

(line 1)

Record 2

(line 2)

Record 3

(line 3)

Record 4

(line 4)

Record 5

(line 5)

Record 6

(line 6)

HDFS Text File

Split Split Split Split Split Split Split

Record > 2GB

CR/LF

Normal

text file

One 2GB

gigantic

line

within a

file

[1] Tom White, ŌĆ£Hadoop:The Definitive Guide, 3rd EdtionŌĆØ, p.234, 2012](https://image.slidesharecdn.com/121-151113033528-lva1-app6892/85/analyzing-hdfs-files-using-apace-spark-and-mapreduce-FixedLengthInputformat-5-320.jpg)

![Validation (1)

$ hdfs dfs -ls /user/leo/test/

-rw-r--r-- 1 leo leo 2147483669 2015-10-06 07:40 /user/leo/test/nolr2G-1.txt

-rw-r--r-- 1 leo leo 2147483669 2015-10-06 09:19 /user/leo/test/nolr2G-2.txt

-rw-r--r-- 1 leo leo 2147483669 2015-10-07 00:53 /user/leo/test/nolr2G-3.txt

$ hdfs dfs -cat /user/leo/test/nolr2G-1.txt

01234567890123456789...........0123456789

0123456789

scala> recordLenOfFile.map{ case (path, stat) => f"[${path}][${stat.toString()}]"}.collect().

foreach(println)

...

INFO TaskSetManager: Finished task 47.0 in stage 9.0 (TID 223) in 16 ms on localhost (48/48)

...

[hdfs://sandbox.hortonworks.com:8020/user/leo/test/nolr2G-1.txt][stats: (count: 1, mean:

2147483648.000000, stdev: 0.000000, max: 2147483648.000000, min: 2147483648.000000), NaN: 0]

[hdfs://sandbox.hortonworks.com:8020/user/leo/test/nolr2G-2.txt][stats: (count: 1, mean:

2147483648.000000, stdev: 0.000000, max: 2147483648.000000, min: 2147483648.000000), NaN: 0]

[hdfs://sandbox.hortonworks.com:8020/user/leo/test/nolr2G-3.txt][stats: (count: 1, mean:

2147483648.000000, stdev: 0.000000, max: 2147483648.000000, min: 2147483648.000000), NaN: 0]

2GB + 10 bytes + ŌĆ£nŌĆØ + 10 bytes

The output shows how many lines and

the statistics for the length of lines in each

file.

Here we found there exists one line of

2147483648 chars.](https://image.slidesharecdn.com/121-151113033528-lva1-app6892/85/analyzing-hdfs-files-using-apace-spark-and-mapreduce-FixedLengthInputformat-8-320.jpg)

![Validation (2)

$ hdfs dfs -ls /user/leo/test.2/

-rw-r--r-- 1 leo leo 5258688 2015-10-07 06:56 test.2/all-bible-1.txt

-rw-r--r-- 1 leo leo 5258688 2015-10-07 06:56 test.2/all-bible-2.txt

-rwxr-xr-x 1 leo leo 5258688 2015-10-06 02:12 test.2/all-bible-3.txt

$ hdfs dfs -cat test.2/all-bible-1.txt|wc -l

117154

scala> recordLenOfFile.map{ case (path, stat) => f"[${path}][${stat.toString()}]"}.collect().

foreach(println)

...

INFO TaskSetManager: Finished task 0.0 in stage 13.0 (TID 233) in 115 ms on localhost (3/3)

...

[hdfs://sandbox.hortonworks.com:8020/user/leo/test.2/all-bible-2.txt][stats: (count: 116854,

mean: 43.866937, stdev: 30.647162, max: 88.000000, min: 1.000000), NaN: 0]

[hdfs://sandbox.hortonworks.com:8020/user/leo/test.2/all-bible-3.txt][stats: (count: 116854,

mean: 43.866937, stdev: 30.647162, max: 88.000000, min: 1.000000), NaN: 0]

[hdfs://sandbox.hortonworks.com:8020/user/leo/test.2/all-bible-1.txt][stats: (count: 116854,

mean: 43.866937, stdev: 30.647162, max: 88.000000, min: 1.000000), NaN: 0]](https://image.slidesharecdn.com/121-151113033528-lva1-app6892/85/analyzing-hdfs-files-using-apace-spark-and-mapreduce-FixedLengthInputformat-9-320.jpg)