Artificial drone intelligence technology

PROVISIONAL PATENT APPLICATION

Title of Invention: Artificial Drone Intelligence for Interior Navigation, 3D Mapping, Fly-Through-Window Delivery, and Crash Avoidance & Mitigation

ABSTRACT

The present invention consists of a drone platform that flies indoors, avoids obstacles, keeps its distance from walls, builds a 3D map of its environment, and enters buildings through windows, doors, or other openings in order to deliver packages, make films, identify intruders, or serve other use cases. The drone is designed for home and personal use as well as business use, and allows the user to install and run many different applications (e.g., indoor or outdoor filming; delivery of food or products such as medicine; private home security). Because the drone is designed to run multiple programs, it is a versatile drone platform.

SUMMARY

In accordance with the principles of the invention, an autonomous airborne drone is provided that is capable of indoor obstacle avoidance, 3D mapping, and general computer vision. Furthermore, the invention can perform three functions: filming indoors or outdoors, delivering food or medicine, and providing home security by sending alerts if someone breaks into the house. Prior technologies, by contrast, could perform only a single function and did not include obstacle avoidance, 3D mapping, or computer vision, especially in combination. The invention uses multiple cameras and sensors to understand its environment. It also includes Artificial Intelligence algorithms to perform sensor fusion, map environments with SLAM, and recognize and track people by their faces and body movements. In addition, this new drone platform promises a much simpler device than those found on the market, with the ability to run drone applications and games. Adaptability is the key feature and technology of this drone.
TECHNOLOGY The drone has a CUDA-enabled GPU and mobile CPU on board, to perform deep learning and artificial intelligence. The drone performs sensor fusion, using ultrasonic sensors, multiple RGB cameras, some depth cameras, stereoscopy, IMUs (accelerometers, gyroscopes, compass, barometer) and also GPS. All this data is processed in order to calculate the distance from the drone to the walls and other objects as well as the drone's absolute and relative location. A computer vision algorithm called SLAM uses structure from motion, SIFT features, optical flow, and linear algebra, in

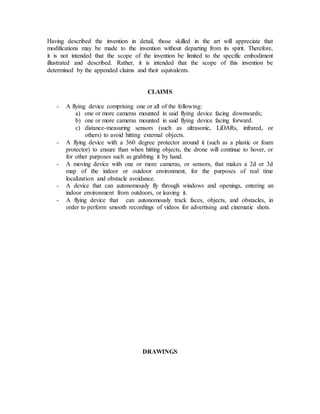

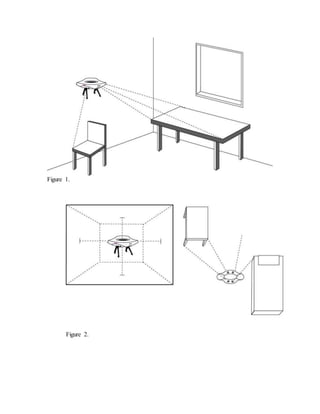

- 2. order to make a 3D map of the indoor environment and reconstruct it in point clouds. It also uses this map and data to localize the drone in real time while flying indoors. Other novel features include the exterior bumper protection surrounding the drone, which allows for the drone to lightly bump into the walls or other objects without stopping its propellers and making it fall to the ground. The drone can hit the wall, bounce back, and continue flying. This protector also contains ultrasonic sensors pointing in 4 or more directions, allowing the drone to detect when it is about to hit a wall, and therefore tilting backwards to avoid hitting it. Besides having the ultrasonic distance-measuring sensors (which can also be infrared or LiDARs in the future), it also has bumper sensors and pressure sensors that detect when the drone is literally hitting an object and it detects with what force. Therefore, the drone can calculate whether the object is a wall which doesn't move, or whether it is an object that the drone is pushing. BRIEF DESCRIPTION OF THE DRAWING FIG. 1 illustrates a drone which uses downward-facing camera and forward-looking camera to detect, identify and track objects. Algorithms used include deep-learning, optical flow, boosted classifiers, stereo-matching, and 3d reconstruction. FIG. 2 illustrates how the drone avoids hitting the walls by situation itself at least a few centimeters away from the wall (seen on the left part of the drawing). It uses a combination of ultrasonic sensors and stereo/depth camera vision algorithms, using sensor fusion. The drone makes a 3D model to map the indoor environment using multiple cameras, such as two (stereo) cameras facing forward or a downward-looking camera (seen on the right part of the drawing). The drone also has a 360 degree guard around all propellers, to absorb the impact when hitting an object, bouncing back and continuing to fly unharmed (also seen on the right part of the drawing). FIG. 
3 illustrates drone delivery through window with Artificial Intelligence. Step 1: The drone observes window key features and aligns with it. It uses the SIFT algorithm and deep learning approaches. Step 2: The drone enters window through an equidistant center point, using vision and ultrasonic sensors, as well as LiDARs, to keep its distance from the frame. Step 3: The drone finds a landing-spot marker, using its downward-facing cameras and QR code recognition, as well as image texture tracking. Step 4: The user can then retrieve the payload, letting the drone return to the warehouse.
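As a rough illustration of how several range estimates might be combined into one wall distance and turned into the tilt-back behavior described above, the sketch below fuses readings (for example an ultrasonic sensor and a depth camera) by inverse-variance weighting, then applies a proportional push-away command when the fused distance falls inside a standoff margin. The application does not specify its fusion method; the weighting scheme, the noise variances, the 0.10 m standoff, the gain, and all function names here are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class RangeReading:
    distance_m: float  # measured distance to the nearest surface, meters
    variance: float    # assumed noise variance of this sensor, m^2


def fuse_ranges(readings: List[RangeReading]) -> float:
    """Inverse-variance weighted average: less noisy sensors count more."""
    weights = [1.0 / r.variance for r in readings]
    return sum(w * r.distance_m for w, r in zip(weights, readings)) / sum(weights)


def push_away(distance_m: float, standoff_m: float = 0.10,
              gain: float = 2.0, max_cmd: float = 1.0) -> float:
    """Normalized command in [0, max_cmd] to move away from one surface.

    Zero outside the standoff margin; grows linearly as the drone gets closer.
    """
    intrusion = standoff_m - distance_m
    if intrusion <= 0.0:
        return 0.0
    return min(max_cmd, gain * intrusion / standoff_m)


def avoidance_command(distances: Dict[str, float]) -> Tuple[float, float]:
    """Combine four fused distances into a (forward, right) command.

    A wall in front yields a negative forward command (tilt backwards);
    a wall on the left yields a positive right command, and so on.
    """
    forward = push_away(distances["back"]) - push_away(distances["front"])
    right = push_away(distances["left"]) - push_away(distances["right"])
    return forward, right
```

Inverse-variance weighting is the single-step, static special case of a Kalman filter update; a flying system would more likely run such a filter over time so that the fused distance stays smooth between sensor readings.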
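Step 2 of FIG. 3 (entering through the center point, equidistant from the frame) can be expressed as a simple centering rule. The sketch below assumes the drone can measure its clearance to the left, right, top, and bottom edges of the window frame, for example from ultrasonic sensors, LiDAR, or the detected window corners; the class and function names, the 0.05 m tolerance, and the 0.10 m minimum clearance are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class FrameClearance:
    left_m: float    # distance to the left edge of the window frame
    right_m: float   # distance to the right edge
    top_m: float     # distance to the top edge
    bottom_m: float  # distance to the bottom edge


def centering_offsets(c: FrameClearance) -> Tuple[float, float]:
    """Lateral and vertical offsets (m) that would place the drone at the frame center.

    Positive lateral means move right; positive vertical means move up.
    """
    lateral = (c.right_m - c.left_m) / 2.0
    vertical = (c.top_m - c.bottom_m) / 2.0
    return lateral, vertical


def ready_to_enter(c: FrameClearance, tolerance_m: float = 0.05,
                   min_clearance_m: float = 0.10) -> bool:
    """True when the drone is centered within tolerance and every side has clearance."""
    lateral, vertical = centering_offsets(c)
    centered = abs(lateral) <= tolerance_m and abs(vertical) <= tolerance_m
    clear = min(c.left_m, c.right_m, c.top_m, c.bottom_m) >= min_clearance_m
    return centered and clear
```

Halving the difference between opposite clearances gives the exact distance to the midpoint; in practice the offset would be fed to a position controller rather than applied in a single jump.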

DETAILED DESCRIPTION

The present invention is a drone with multiple sensors, cameras, and advanced on-board computers. The drone also uses Artificial Intelligence and SLAM 3D mapping algorithms. The parts that make up the drone are: the flying vehicle (including a flight controller, ESCs, servos, motors, propellers, and frame), the on-board computer (such as a CPU or GPU), the protection around the vehicle, the color and depth cameras, the distance-measuring sensors (such as ultrasonic, LiDAR, infrared, or radar), the IMUs and positioning sensors (such as accelerometers, gyroscopes, barometer, compass, and GPS), the optical flow sensor, a mobile internet module, the bump or pressure sensors, and the package holder with sensors that detect whether a package is present.

All sensors connect to the on-board computer and/or the flight controller (such as a microcontroller). The flight controller is connected to the computer via USB or another communication channel. The cameras and the mobile internet module are also connected to the computer. The flight controller can perform some sensor fusion on its own sensors (accelerometers, gyroscopes, compass, barometer, optical flow, and GPS) and use the results in its control systems (such as PID loops). The computer can also have its own such sensors, as well as color or depth cameras, and it can receive data from the other sensors through its USB connection to the flight controller.

The drone first determines whether it should film or deliver packages, based on whether a package is present in its package holder. If no package is present, the drone starts in film mode; if a package is present, it starts in delivery mode. In film mode, the drone takes off vertically and flies around the room or house filming, while building a 3D map, avoiding obstacles, and pointing the camera at people or interesting objects.
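The PID loops mentioned above can be illustrated with a textbook discrete PID controller, here holding altitude. This is a generic sketch, not the drone's actual flight-controller firmware; the gains, the output limit, the 0.02 s time step, the 1.5 m target, and the toy double-integrator altitude model (PID output treated as vertical acceleration relative to hover thrust) are all assumptions.

```python
from typing import Optional


class PID:
    """Textbook discrete PID controller with output clamping and anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, out_limit: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        # Derivative of the error; skipped on the first call to avoid a spike.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        candidate = self.integral + error * dt
        out = self.kp * error + self.ki * candidate + self.kd * derivative
        # Anti-windup: keep the new integral only while the output is unsaturated.
        if abs(out) <= self.out_limit:
            self.integral = candidate
        return max(-self.out_limit, min(self.out_limit, out))


def simulate_altitude_hold(target_m: float = 1.5, seconds: float = 20.0,
                           dt: float = 0.02) -> float:
    """Run the PID against a toy double-integrator model and return the final altitude."""
    pid = PID(kp=4.0, ki=0.5, kd=4.0, out_limit=5.0)
    altitude, velocity = 0.0, 0.0
    for _ in range(int(seconds / dt)):
        accel = pid.update(target_m, altitude, dt)  # m/s^2 relative to hover
        velocity += accel * dt
        altitude += velocity * dt
    return altitude
```

With kp = kd = 4 the toy loop is roughly critically damped, so the simulated altitude settles near the target without large overshoot; real gains would have to be tuned on the actual vehicle.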
When the drone is in delivery mode, it finds a window, leaves the indoor environment, flies outdoors using GPS and SLAM, finds the delivery house and an open window, enters through it, and lands on a table. After the package is removed, it finds a window, exits, flies back to the store, enters through the store's window, and lands at a designated area or table.

Referring now to the drawings, FIG. 1 describes how the drone uses its downward-facing and forward-facing cameras to detect and identify objects. The algorithms used are deep learning, optical flow, boosted classifiers, and 3D reconstruction.

FIG. 2 illustrates how the drone avoids hitting a wall using a combination of ultrasonic sensors and stereo/depth camera vision algorithms, combined through sensor fusion. The drone builds a 3D model of the indoor environment using multiple cameras. The drone also has a 360-degree guard around all its propellers to absorb the impact when it hits an object, so that it bounces back and flies away unharmed.

FIG. 3 illustrates drone delivery through a window using Artificial Intelligence. The drone observes key features in the window frame and aligns itself with the center of the opening, using the SIFT (Scale-Invariant Feature Transform) algorithm and deep learning approaches. The drone enters the window through its center point, equidistant from the frame, using vision and ultrasonic sensors to keep its distance. The drone identifies a landing spot using its downward-facing camera, QR code recognition, and image texture tracking. The user can then retrieve the payload, and the drone returns to the warehouse.

For indoor filming, the user places the drone on a table or the floor, or holds it in the air by hand, and turns it on. When the drone turns on, it takes off, hovers at a set height (usually between 1 and 2 meters above the ground), and starts flying around the indoor environment, filming different parts of it smoothly while constructing a 3D point-cloud map of the interior. It then uses that map to better determine where it is located, which lets it fly around the environment more smoothly. At first, the drone relies on its distance sensors (such as infrared, LiDAR, or ultrasonic sensors) to avoid hitting walls and obstacles, staying a few centimeters away from every wall and object. Once it has created an accurate map of the place, however, it relies more on the cameras and the map than on the distance sensors. At that point, the drone navigates the place more fluidly and takes smooth shots with its camera. It can also automatically point the camera at people's faces or other interesting objects while panning and moving through the place.
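Automatically pointing the camera at a person's face while filming can be reduced to steering the camera so that the detected face stays near the image center. The sketch assumes a face detector (the text mentions deep learning and boosted classifiers; neither is shown) already returns a bounding box in pixels, and converts the box's offset from the image center into pan and tilt angles with a pinhole camera model. The field-of-view defaults, the dead band, the gain, and the function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Box:
    x: float  # left edge, pixels
    y: float  # top edge, pixels
    w: float  # width, pixels
    h: float  # height, pixels


def pan_tilt_error_deg(box: Box, img_w: int, img_h: int,
                       hfov_deg: float = 90.0,
                       vfov_deg: float = 60.0) -> Tuple[float, float]:
    """Angles (deg) to pan right / tilt up so the box center reaches the image center.

    Pinhole model: focal length in pixels f = (size / 2) / tan(fov / 2).
    """
    fx = (img_w / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (img_h / 2) / math.tan(math.radians(vfov_deg) / 2)
    u = box.x + box.w / 2 - img_w / 2  # positive: face is right of center
    v = box.y + box.h / 2 - img_h / 2  # positive: face is below center (y grows down)
    pan = math.degrees(math.atan2(u, fx))
    tilt = -math.degrees(math.atan2(v, fy))  # positive: tilt up
    return pan, tilt


def smooth_step(error_deg: float, gain: float = 0.2,
                dead_band_deg: float = 2.0) -> float:
    """Proportional step with a dead band so small detector jitter does not move the camera."""
    if abs(error_deg) < dead_band_deg:
        return 0.0
    return gain * error_deg
```

Applying only a fraction of the error each frame (the gain) and ignoring tiny errors (the dead band) is one simple way to obtain the smooth, non-jittery camera motion the filming mode aims for.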
For window delivery, the seller places an object, such as a box or package, in the drone's bottom compartment. The package can be take-out Chinese food, a new iPhone, or medicine such as the morning-after pill. The seller turns the drone on by pressing a button, and the drone recognizes that it has a package loaded. The seller then connects to the drone through a phone app or a website on a computer and enters the specific address or GPS coordinates for the delivery. The drone then takes off, goes through an available window, and flies outdoors at a height of more than 25 meters until it reaches the destination. There, it looks for an open window in the house and flies through the center of the window to avoid potential collisions. It then flies indoors, hovers over a table, lands vertically, and announces through its speakers that the delivery is ready. The buyer can then remove the package. The drone detects that the package has been removed, flies back out through the window and back to the seller, enters through the window of the shop, and lands on a table.

While a specific embodiment has been shown and described, many variations are possible. With time, additional features may be employed. The particular shape or configuration of the platform or its interior configuration may be changed to suit the system or equipment with which it is used.
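The delivery procedure above is essentially a fixed sequence of mission phases, so it can be sketched as a small state machine driven by sensor events. In the sketch below, the phase names, the `Events` fields, and the transition table are illustrative assumptions; each boolean stands in for perception and flight routines (window detection, GPS navigation above 25 meters, landing, package sensing) that are not shown. The `startup_mode` helper mirrors the film-or-deliver decision from the detailed description.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Dict, Tuple


class Phase(Enum):
    IDLE = auto()
    EXIT_WINDOW = auto()
    CRUISE = auto()               # fly above 25 m using GPS and SLAM (not shown)
    ENTER_WINDOW = auto()
    LAND = auto()
    WAIT_FOR_REMOVAL = auto()
    RETURN_EXIT_WINDOW = auto()
    RETURN_CRUISE = auto()
    RETURN_ENTER_WINDOW = auto()
    RETURN_LAND = auto()
    DONE = auto()


@dataclass
class Events:
    """Hypothetical boolean outputs of the perception and flight stack."""
    package_present: bool = False
    destination_set: bool = False
    outside: bool = False    # has passed through a window to the outdoors
    at_target: bool = False  # GPS position is over the current target building
    inside: bool = False     # has passed through a window to the indoors
    landed: bool = False


def startup_mode(package_present: bool) -> str:
    """Film if the package holder is empty, deliver if a package is loaded."""
    return "deliver" if package_present else "film"


# Each phase maps to (condition that ends it, phase that follows).
TRANSITIONS: Dict[Phase, Tuple[Callable[[Events], bool], Phase]] = {
    Phase.IDLE: (lambda e: e.package_present and e.destination_set, Phase.EXIT_WINDOW),
    Phase.EXIT_WINDOW: (lambda e: e.outside, Phase.CRUISE),
    Phase.CRUISE: (lambda e: e.at_target, Phase.ENTER_WINDOW),
    Phase.ENTER_WINDOW: (lambda e: e.inside, Phase.LAND),
    Phase.LAND: (lambda e: e.landed, Phase.WAIT_FOR_REMOVAL),  # announce "delivery ready"
    Phase.WAIT_FOR_REMOVAL: (lambda e: not e.package_present, Phase.RETURN_EXIT_WINDOW),
    Phase.RETURN_EXIT_WINDOW: (lambda e: e.outside, Phase.RETURN_CRUISE),
    Phase.RETURN_CRUISE: (lambda e: e.at_target, Phase.RETURN_ENTER_WINDOW),
    Phase.RETURN_ENTER_WINDOW: (lambda e: e.inside, Phase.RETURN_LAND),
    Phase.RETURN_LAND: (lambda e: e.landed, Phase.DONE),
}


def next_phase(phase: Phase, ev: Events) -> Phase:
    """Advance one step if the current phase's exit condition holds."""
    if phase not in TRANSITIONS:
        return phase  # DONE is terminal
    condition, following = TRANSITIONS[phase]
    return following if condition(ev) else phase
```

Keeping the transitions in a table rather than in nested if-statements makes the mission easy to audit against the written procedure and easy to extend, for example with an abort phase if no open window is found.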

Having described the invention in detail, those skilled in the art will appreciate that modifications may be made to the invention without departing from its spirit. Therefore, it is not intended that the scope of the invention be limited to the specific embodiment illustrated and described. Rather, it is intended that the scope of this invention be determined by the appended claims and their equivalents.

CLAIMS

- A flying device comprising one or more of the following: a) one or more cameras mounted on said flying device facing downwards; b) one or more cameras mounted on said flying device facing forward; c) distance-measuring sensors (such as ultrasonic sensors, LiDARs, infrared sensors, or others) to avoid hitting external objects.
- A flying device with a 360-degree protector around it (such as a plastic or foam protector) to ensure that, when it hits objects, the drone continues to hover, or for other purposes such as allowing it to be grabbed by hand.
- A moving device with one or more cameras or sensors that makes a 2D or 3D map of the indoor or outdoor environment for the purposes of real-time localization and obstacle avoidance.
- A device that can autonomously fly through windows and openings, entering an indoor environment from outdoors or leaving it.
- A flying device that can autonomously track faces, objects, and obstacles in order to record smooth videos for advertising and cinematic shots.

DRAWINGS

Figure 3.