Artificial Neural Networks: seminar slides for final-semester CSE

- 1. www.studymafia.org Submitted To: Submitted By: Seminar On Artificial Neural Networks

- 2. • INTRODUCTION • HISTORY • BIOLOGICAL NEURON MODEL • ARTIFICIAL NEURON MODEL • ARTIFICIAL NEURAL NETWORK • NEURAL NETWORK ARCHITECTURE • LEARNING • BACKPROPAGATION ALGORITHM • APPLICATIONS • ADVANTAGES • CONCLUSION

- 3. • "Neural" is an adjective for neuron, and "network" denotes a graph-like structure. • Artificial Neural Networks are also referred to as "neural nets", "artificial neural systems", "parallel distributed processing systems" and "connectionist systems". • For a computing system to be called by these names, it must have a labeled directed graph structure in which the nodes perform some simple computations. • A "directed graph" consists of a set of "nodes" (vertices) and a set of "connections" (edges/links/arcs) connecting pairs of nodes. • A graph is said to be a "labeled graph" if each connection is associated with a label that identifies some property of the connection.

- 4. Fig 1: AND gate graph (inputs $x_1, x_2 \in \{0,1\}$, output $o = x_1 \text{ AND } x_2$). This graph cannot be considered a neural network, since the connections between the nodes are fixed and appear to play no role other than carrying the inputs to the node that computes their conjunction. Fig 2: AND gate network (inputs $x_1, x_2$ with weights $w_1, w_2$ feeding a multiplier node that computes $(x_1 w_1)(x_2 w_2)$, output $o = x_1 \text{ AND } x_2$). A graph structure whose connection weights are modifiable by a learning algorithm qualifies the computing system to be called an artificial neural network. • Bernard Widrow of Stanford University was one of the early pioneers of the neural network field, starting in the late 1950s.
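The role of modifiable weights can be sketched in code. Fig 2 uses a multiplier node; the sketch below swaps in a different and more common unit, a threshold unit on the weighted sum $w_1x_1 + w_2x_2 + b$ (a perceptron), and lets the perceptron learning rule find weights that realize AND. The bias `b`, the learning rate and the epoch count are illustrative assumptions, not values from the slides.

```python
from typing import List, Tuple

AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(w: List[float], b: float, x: Tuple[int, int]) -> int:
    """Threshold unit: output 1 when w1*x1 + w2*x2 + b > 0, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_and_gate(epochs: int = 20, lr: float = 1.0) -> Tuple[List[float], float]:
    w = [0.0, 0.0]  # modifiable connection weights w1, w2
    b = 0.0         # bias, i.e. a weight on a constant input of 1
    for _ in range(epochs):
        for x, target in AND_DATA:
            error = target - predict(w, b, x)  # 0 when correct, +1 or -1 when wrong
            # Perceptron rule: move each weight by lr * error * (its input).
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b


if __name__ == "__main__":
    w, b = train_and_gate()
    for x, _ in AND_DATA:
        print(x, predict(w, b, x))  # reproduces the AND truth table
```

Because AND is linearly separable, the perceptron rule is guaranteed to converge on it; with these defaults the weights stop changing after a few epochs.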

- 5. • Late 1800s: neural networks appear as an analogy to biological systems. • 1960s and 70s: simple neural networks appear, then fall out of favor because the perceptron is not effective by itself and there were no good algorithms for multilayer nets. • 1986: the backpropagation algorithm appears, and neural networks have a resurgence in popularity.

- 6. • Records (examples) need to be represented as a (possibly large) set of tuples of <attribute, value>. • The output values can be represented as a discrete value, a real value, or a vector of values. • ANNs are tolerant to noise in the input data. • Time factor: training takes a long time, but once trained, an ANN produces output values (predictions) fast. • It is hard for humans to interpret how an ANN arrives at its predictions.

- 7. Four parts of a typical nerve cell: • DENDRITES: accept the inputs. • SOMA: processes the inputs. • AXON: turns the processed inputs into outputs. • SYNAPSES: the electrochemical contacts between neurons.

- 8. • Inputs to the network are represented by the mathematical symbols $x_1, \dots, x_n$. • Each of these inputs is multiplied by a connection weight $w_1, \dots, w_n$: $\text{sum} = w_1 x_1 + \dots + w_n x_n$. • These products are simply summed and fed through the transfer function $f(\cdot)$ to generate a result, which is then output: $f(w_1 x_1 + \dots + w_n x_n)$. (Fig: inputs $x_1, \dots, x_n$ with weights $w_1, \dots, w_n$ feeding a node $f$.)
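A minimal sketch of this neuron model: weighted sum, then transfer function. The slide leaves $f$ unspecified, so a step function and a sigmoid are assumed here as two example choices; the function names are hypothetical.

```python
import math
from typing import Callable, Sequence


def neuron(x: Sequence[float], w: Sequence[float],
           f: Callable[[float], float]) -> float:
    """Return f(w1*x1 + ... + wn*xn) for a single artificial neuron."""
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input")
    s = sum(wi * xi for wi, xi in zip(w, x))  # weighted sum of the inputs
    return f(s)                                 # transfer function


def step(s: float) -> float:
    return 1.0 if s >= 0 else 0.0


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


if __name__ == "__main__":
    x = [1.0, 0.5, -2.0]
    w = [0.4, 1.0, 0.3]
    # sum = 0.4*1.0 + 1.0*0.5 + 0.3*(-2.0) = 0.3 (up to rounding)
    print(neuron(x, w, step))     # 1.0
    print(neuron(x, w, sigmoid))  # about 0.574
```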

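- 9. Biological vs. artificial neural network terminology:

| Biological Terminology | Artificial Neural Network Terminology |
| --- | --- |
| Neuron | Node / Unit / Cell / Neurode |
| Synapse | Connection / Edge / Link |
| Synaptic Efficiency | Connection Strength / Weight |
| Firing Frequency | Node Output |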

- 10. • Artificial Neural Networks (ANNs) are programs designed to solve problems by trying to mimic the structure and function of our nervous system. • Neural networks are based on simulated neurons, which are joined together in a variety of ways to form networks. • A neural network resembles the human brain in two ways: a neural network acquires knowledge through learning, and a neural network's knowledge is stored within the interconnection strengths known as synaptic weights.

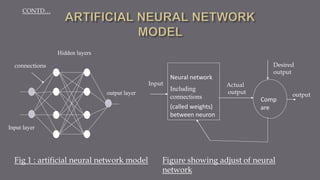

- 11. Fig 1: Artificial neural network model. The neural network consists of an input layer, hidden layers and an output layer, including connections (called weights) between neurons; the actual output produced for an input is compared with the desired output, and the network is adjusted accordingly.

- 12. • Fully connected network: a neural network in which every node is connected to every other node; these connections may be excitatory (positive weights), inhibitory (negative weights), or irrelevant (almost-zero weights). • Layered network: a network in which the nodes are partitioned into subsets called layers, with no connections from layer j to layer k if j > k. (Fig: fully connected network of input, hidden and output nodes; fig: layered network with Layer 0 (input layer), hidden Layers 1 and 2, and Layer 3 (output layer).)

- 13. • Acyclic network: the subclass of layered networks in which there are no intra-layer connections. In other words, a connection may exist between any node in layer i and any node in layer j for i < j, but a connection is not allowed for i = j. • Feedforward network: a subclass of acyclic networks in which a connection is allowed from a node in layer i only to nodes in layer i+1. (Figs: acyclic network and feedforward network, each with Layer 0 (input layer), hidden Layers 1 and 2, and Layer 3 (output layer).)
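One way to make the layered, acyclic and feedforward definitions concrete is to check them against a list of connections. This sketch assumes a hypothetical representation in which each connection is recorded only as a `(source_layer, target_layer)` pair, with layer 0 as the input layer; each predicate is a direct transcription of the corresponding definition.

```python
from typing import List, Tuple

# Hypothetical representation: each connection is (source_layer, target_layer),
# with layer 0 as the input layer.
Connection = Tuple[int, int]


def is_layered(conns: List[Connection]) -> bool:
    """Layered: no connection from layer j to layer k when j > k."""
    return all(src <= dst for src, dst in conns)


def is_acyclic(conns: List[Connection]) -> bool:
    """Acyclic: layered with no intra-layer connections, so only i -> j with i < j."""
    return all(src < dst for src, dst in conns)


def is_feedforward(conns: List[Connection]) -> bool:
    """Feedforward: acyclic, and layer i connects only to layer i + 1."""
    return all(dst == src + 1 for src, dst in conns)


def classify(conns: List[Connection]) -> str:
    # Check the most specific class first: every feedforward network is acyclic,
    # and every acyclic network is layered.
    if is_feedforward(conns):
        return "feedforward"
    if is_acyclic(conns):
        return "acyclic"
    if is_layered(conns):
        return "layered"
    return "not layered"


if __name__ == "__main__":
    print(classify([(0, 1), (1, 2), (2, 3)]))          # feedforward
    print(classify([(0, 1), (0, 3), (1, 2), (2, 3)]))  # acyclic (skips layers)
    print(classify([(0, 1), (1, 1), (1, 2)]))          # layered (intra-layer link)
    print(classify([(0, 1), (2, 1)]))                  # not layered (backward link)
```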

- 14. Many problems are best solved using neural networks whose architecture consists of several modules, with sparse interconnections between them. Modules can be organized in several different ways: hierarchical organization, successive refinement, and input modularity. (Fig: Modular neural network.)

- 15. • Neurons in an animal's brain are "hard-wired". Yet it is equally obvious that animals, especially higher-order animals, learn as they grow. • How does this learning occur? • What are possible mathematical models of learning? • In artificial neural networks, learning refers to the method of modifying the weights of the connections between the nodes of a specified network. • The learning ability of a neural network is determined by its architecture and by the algorithmic method chosen for training.

- 16. UNSUPERVISED LEARNING • This is learning by doing. • In this approach, no sample outputs are provided to the network against which it can measure its predictive performance for a given vector of inputs. • One common form of unsupervised learning is clustering, where we try to categorize data into different clusters by their similarity. SUPERVISED LEARNING • A teacher is available to indicate whether the system is performing correctly, or to indicate the amount of error in system performance. Here the teacher is a set of training data. • The training data consist of pairs of input and desired output values, traditionally represented as data vectors. • Classification is a common form of supervised learning, with a wide range of classifiers available (multilayer perceptron, k-nearest neighbor, etc.).
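To contrast the two settings, here is a small sketch on hypothetical 2-D points. A 1-nearest-neighbor classifier (one of the classifiers named above) needs labeled training pairs, while k-means clustering, a common clustering method that the slide does not specifically name, groups the same kind of points without any desired outputs. The data, the initial centers and the iteration count are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def nearest_neighbor(train: Sequence[Tuple[Point, str]], query: Point) -> str:
    """Supervised: return the label of the closest labeled training example (1-NN)."""
    _, label = min(train, key=lambda pair: math.dist(pair[0], query))
    return label


def kmeans(points: Sequence[Point], centers: Sequence[Point], iters: int = 10) -> List[int]:
    """Unsupervised: cluster points by similarity; no desired outputs are given."""
    centers = list(centers)  # work on a copy of the initial centers
    assign: List[int] = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest center.
        assign = [min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
                  for p in points]
        # Update step: each center moves to the mean of its members.
        for c in range(len(centers)):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centers[c] = (sum(p[0] for p in members) / len(members),
                              sum(p[1] for p in members) / len(members))
    return assign


if __name__ == "__main__":
    points = [(0, 0), (0, 1), (1, 0), (9, 9), (9, 10), (10, 9)]
    print(kmeans(points, [(0, 0), (10, 10)]))     # [0, 0, 0, 1, 1, 1]
    labeled = [((0, 0), "small"), ((10, 10), "large")]
    print(nearest_neighbor(labeled, (1, 2)))      # small
```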

- 17. • The backpropagation algorithm (Rumelhart and McClelland, 1986) is used in layered feed-forward artificial neural networks. • Backpropagation is a supervised learning method for multilayer feed-forward networks, based on the gradient descent learning rule. • We provide the algorithm with examples of the inputs and outputs we want the network to compute, and then the error (the difference between the actual and expected results) is calculated. • The idea of the backpropagation algorithm is to reduce this error until the artificial neural network learns the training data.

- 18. • The activation function of the artificial neurons in ANNs implementing the backpropagation algorithm is a weighted sum (the sum of the inputs $x_i$ multiplied by their respective weights $w_{ji}$): $A_j = \sum_i x_i w_{ji}$. • The most common output function is the sigmoidal function: $O_j = \dfrac{1}{1 + e^{-A_j}}$. • Since the error is the difference between the actual and the desired output, the error depends on the weights, and we need to adjust the weights in order to minimize the error. We can define the error function for the output of each neuron as $E_j = (O_j - d_j)^2$, where $d_j$ is the desired output, and the error of the network as $E = \sum_j (O_j - d_j)^2$. (Fig: Basic block of a backpropagation neural network: inputs $x$, weights $v$, weights $w$, output.)

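- 19. • The backpropagation algorithm now calculates how the error depends on the output, inputs, and weights. The adjustment of each weight ($\Delta w_{ji}$) is the negative of a constant eta ($\eta$) multiplied by the dependence of the network's error on that weight: $\Delta w_{ji} = -\eta \dfrac{\partial E}{\partial w_{ji}}$, with $E = \sum_j (O_j - d_j)^2$, sigmoid outputs $O_j$, activations $A_j = \sum_i x_i w_{ji}$ and desired outputs $d_j$. • First, we need to calculate how much the error depends on the output: $\dfrac{\partial E}{\partial O_j} = 2(O_j - d_j)$. • Next, how much the output depends on the activation, which in turn depends on the weights: $\dfrac{\partial O_j}{\partial w_{ji}} = \dfrac{\partial O_j}{\partial A_j}\dfrac{\partial A_j}{\partial w_{ji}} = O_j(1 - O_j)\,x_i$. • And so, the adjustment to each weight will be $\Delta w_{ji} = -2\eta\,(O_j - d_j)\,O_j(1 - O_j)\,x_i$.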
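The output-layer rule can be checked numerically. The sketch below assumes sigmoid outputs $O_j = 1/(1 + e^{-A_j})$ with $A_j = \sum_i x_i w_{ji}$ and the squared error $E = \sum_j (O_j - d_j)^2$; it computes $\Delta w_{ji} = -2\eta(O_j - d_j)O_j(1 - O_j)x_i$ analytically and compares it with $-\eta\,\partial E/\partial w_{ji}$ estimated by central finite differences. The helper names, the step size `h` and the random test values are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def outputs(w: Matrix, x: List[float]) -> List[float]:
    # O_j = sigmoid(A_j), A_j = sum_i x_i * w_ji (row j of w holds the weights into neuron j)
    return [sigmoid(sum(xi * wji for xi, wji in zip(x, row))) for row in w]


def error(w: Matrix, x: List[float], d: List[float]) -> float:
    # E = sum_j (O_j - d_j)^2
    return sum((o - dj) ** 2 for o, dj in zip(outputs(w, x), d))


def delta_w(w: Matrix, x: List[float], d: List[float], eta: float) -> Matrix:
    # Analytic rule: delta w_ji = -2 * eta * (O_j - d_j) * O_j * (1 - O_j) * x_i
    return [[-2 * eta * (o - dj) * o * (1 - o) * xi for xi in x]
            for o, dj in zip(outputs(w, x), d)]


def numeric_delta_w(w: Matrix, x: List[float], d: List[float],
                    eta: float, h: float = 1e-6) -> Matrix:
    # -eta * dE/dw_ji estimated with a central finite difference
    result = []
    for j in range(len(w)):
        row = []
        for i in range(len(x)):
            plus = [r[:] for r in w]
            minus = [r[:] for r in w]
            plus[j][i] += h
            minus[j][i] -= h
            row.append(-eta * (error(plus, x, d) - error(minus, x, d)) / (2 * h))
        result.append(row)
    return result


if __name__ == "__main__":
    random.seed(0)
    w = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
    x, d = [0.5, -1.0, 1.0], [1.0, 0.0]
    analytic = delta_w(w, x, d, eta=0.1)
    numeric = numeric_delta_w(w, x, d, eta=0.1)
    gap = max(abs(a - n) for ra, rn in zip(analytic, numeric) for a, n in zip(ra, rn))
    print(f"largest difference: {gap:.2e}")  # tiny, so the rule matches -eta dE/dw
```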

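- 20. • If we want to adjust the weights of a previous layer (let's call them $v_{ik}$), we first need to calculate how the error depends not on the weight, but on the input from the previous layer, i.e. replacing $w$ by $x$ in the weight-update equation: $\Delta v_{ik} = -\eta \dfrac{\partial E}{\partial v_{ik}} = -\eta \dfrac{\partial E}{\partial x_i}\dfrac{\partial x_i}{\partial v_{ik}}$, where • $\dfrac{\partial E}{\partial x_i} = \sum_j 2(O_j - d_j)\,O_j(1 - O_j)\,w_{ji}$ (with $O_j$ the sigmoid outputs, $d_j$ the desired outputs and $w_{ji}$ the output-layer weights) and • $\dfrac{\partial x_i}{\partial v_{ik}} = x_i(1 - x_i)\,u_k$, writing $x_i = \dfrac{1}{1 + e^{-\sum_k v_{ik} u_k}}$ for the sigmoid output of previous-layer neuron $i$ and $u_k$ for that layer's $k$-th input.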
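Putting both update rules together gives a complete, if minimal, training loop. This sketch assumes a two-layer network trained by full-batch gradient descent on XOR, with weights `V` ($v_{ik}$) into a sigmoid hidden layer and `W` ($w_{ji}$) into sigmoid outputs, the squared error $E = \sum (O_j - d_j)^2$ summed over the training examples, and an extra constant input of 1 in each layer acting as a bias. The hidden-layer size, $\eta$, the epoch count and the seed are illustrative choices, and whether XOR is fully learned can depend on the random initialization.

```python
import numpy as np


def sigmoid(a: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-a))


def add_bias(z: np.ndarray) -> np.ndarray:
    # Assumption: an extra constant input of 1 per layer acts as a bias weight.
    return np.hstack([z, np.ones((z.shape[0], 1))])


def predict(U: np.ndarray, V: np.ndarray, W: np.ndarray) -> np.ndarray:
    X = sigmoid(add_bias(U) @ V.T)      # hidden outputs x_i
    return sigmoid(add_bias(X) @ W.T)   # network outputs O_j


def train(U, D, hidden=4, eta=0.5, epochs=5000, seed=0):
    rng = np.random.default_rng(seed)
    V = rng.normal(size=(hidden, U.shape[1] + 1))   # v_ik: input k -> hidden i
    W = rng.normal(size=(D.shape[1], hidden + 1))   # w_ji: hidden i -> output j
    Ub = add_bias(U)                                # inputs u_k plus the bias input
    losses = []
    for _ in range(epochs):
        X = sigmoid(Ub @ V.T)
        Xb = add_bias(X)
        O = sigmoid(Xb @ W.T)
        losses.append(float(np.sum((O - D) ** 2)))  # E, summed over all examples
        # Output layer: dE/dA_j = 2 (O_j - d_j) O_j (1 - O_j)
        delta_out = 2 * (O - D) * O * (1 - O)
        # dE/dx_i = sum_j delta_out_j * w_ji (the bias column feeds no v weights)
        dE_dx = delta_out @ W[:, :-1]
        # Hidden layer: dE/dx_i * x_i (1 - x_i); multiplied by u_k this gives dE/dv_ik
        delta_hid = dE_dx * X * (1 - X)
        # Gradient-descent updates; both deltas were computed before W changes
        W -= eta * delta_out.T @ Xb   # delta w_ji = -eta dE/dw_ji
        V -= eta * delta_hid.T @ Ub   # delta v_ik = -eta dE/dv_ik
    return V, W, losses


if __name__ == "__main__":
    U = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    D = np.array([[0], [1], [1], [0]], dtype=float)  # XOR targets
    V, W, losses = train(U, D)
    print(f"E before: {losses[0]:.3f}, E after: {losses[-1]:.3f}")
    print(np.round(predict(U, V, W), 2))
```

Vectorizing over all examples keeps the code close to the equations: each matrix product is just the sum over $j$ or over the training examples that the formulas write out.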

- 21. • Neural Networks in Practice • Neural networks in medicine: Modelling and Diagnosing the Cardiovascular System; Electronic noses; Instant Physician • Neural networks in business: Marketing; Credit Evaluation

- 22. • They loosely imitate human-like thinking. • They handle noisy or missing data. • They can work with a large number of variables or parameters. • They provide general solutions with good predictive accuracy. • The system has the property of continuous learning. • They deal with the non-linearity of the world in which we live.

- 23. • Artificial neural networks are inspired by the learning processes that take place in biological systems. • Artificial neurons and neural networks try to imitate the working mechanisms of their biological counterparts. • Learning can be perceived as an optimisation process. • Biological neural learning happens by the modification of synaptic strength; artificial neural networks learn in an analogous way, by modifying their connection weights. • The synapse-strength modification rules for artificial neural networks can be derived by applying mathematical optimisation methods.

- 24. • Learning tasks of artificial neural networks can be reformulated as function approximation tasks. • Neural networks can be considered nonlinear function approximation tools (i.e., linear combinations of nonlinear basis functions), whose parameters are found by applying optimisation methods. • The optimisation is done with respect to an approximation error measure. • In principle, a single-hidden-layer neural network (MLP, RBF or other) with enough hidden units is sufficient to approximate a continuous nonlinear function on a bounded domain to any desired accuracy. In such cases, general optimisation methods can be applied to derive the update rules for the synaptic weights.
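As a sketch of "linear combinations of nonlinear basis functions", the example below approximates $\sin x$ on $[0, 2\pi]$ with a single hidden layer of Gaussian (RBF) units. It assumes fixed, evenly spaced centers with the spacing used as the width, so only the output weights remain to be found, and they enter linearly; the optimisation method swapped in here is therefore plain linear least squares. The number of centers and the width heuristic are assumptions, and an MLP trained by gradient descent would be an equally valid choice.

```python
import numpy as np


def rbf_features(x: np.ndarray, centers: np.ndarray, width: float) -> np.ndarray:
    # Hidden layer: phi_m(x) = exp(-(x - c_m)^2 / (2 * width^2)), one column per unit
    return np.exp(-((x[:, None] - centers[None, :]) ** 2) / (2 * width ** 2))


def fit_rbf(x: np.ndarray, y: np.ndarray, n_centers: int = 10):
    centers = np.linspace(x.min(), x.max(), n_centers)  # fixed, evenly spaced
    width = float(centers[1] - centers[0])               # heuristic: width = spacing
    phi = rbf_features(x, centers, width)
    # Output weights a minimise the squared approximation error ||phi @ a - y||^2
    a, *_ = np.linalg.lstsq(phi, y, rcond=None)
    return centers, width, a


def rbf_predict(x: np.ndarray, centers: np.ndarray, width: float, a: np.ndarray) -> np.ndarray:
    # Network output: a linear combination of the nonlinear basis functions
    return rbf_features(x, centers, width) @ a


if __name__ == "__main__":
    x = np.linspace(0.0, 2 * np.pi, 200)
    y = np.sin(x)
    centers, width, a = fit_rbf(x, y)
    max_err = np.max(np.abs(rbf_predict(x, centers, width, a) - y))
    print(f"max |error| on the training grid: {max_err:.2e}")
```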


- 26. Thanks