Ashutosh pycon


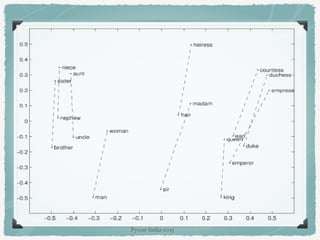

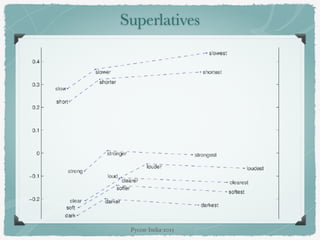

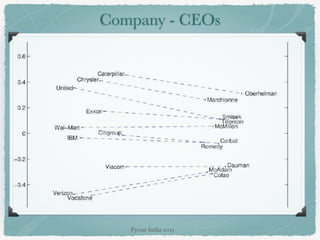

The document discusses the use of natural language processing (NLP) for solving logical puzzles, highlighting various methods and models including co-occurrence matrices and word embeddings like Word2Vec. It emphasizes the importance of representing words programmatically and the challenges of scalability and dimensionality in NLP. The presentation also covers the skip-gram model and demonstrates how word similarity and semantic relationships can be analyzed in low-dimensional vector spaces.
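As a rough illustration of the co-occurrence matrices mentioned above, the following sketch counts how often two words appear within a fixed window of each other. The function name `cooccurrence_counts`, the two-sentence corpus, and the window size are hypothetical choices made for this example, not taken from the slides.

```python
from collections import Counter


def cooccurrence_counts(sentences, window=1):
    """Count (word, neighbour) pairs that appear within `window` tokens of each other."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not its own neighbour
                    counts[(word, tokens[j])] += 1
    return counts


# Toy corpus, assumed purely for illustration.
corpus = ["I like NLP", "I like puzzles"]
counts = cooccurrence_counts(corpus, window=1)
```

Each row of the full matrix has one entry per vocabulary word, so the table grows with the square of the vocabulary size. This is the scalability and dimensionality problem the summary refers to.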


![How do we represent a word?

Index in vocab:

30th word: [0, 0, …, 1, 0, 0, 0, …, 0] (1 at index 30)

45th word: [0, 0, …, 0, 0, 0, …, 0, 1, …, 0] (1 at index 45)

How would a processor know that 'good' at index 30 is a synonym for 'nice' at index 45?

Pycon-India-2015](https://image.slidesharecdn.com/ashutosh-pycon-151005185853-lva1-app6892/85/Ashutosh-pycon-9-320.jpg)
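The question on this slide can be made concrete with a small sketch. It builds one-hot vectors for 'good' (index 30) and 'nice' (index 45) and compares them with cosine similarity. The vocabulary size of 100, the zero-based indexing, and the helper names `one_hot` and `cosine` are assumptions made for illustration.

```python
import math


def one_hot(index, vocab_size):
    """Return a vector of zeros with a single 1.0 at position `index`."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


VOCAB_SIZE = 100  # hypothetical vocabulary size

good = one_hot(30, VOCAB_SIZE)
nice = one_hot(45, VOCAB_SIZE)

sim_good_nice = cosine(good, nice)  # different words share no non-zero position
sim_good_good = cosine(good, good)  # a word compared with itself
```

Any two distinct one-hot vectors are orthogonal, so their similarity is zero however close the words are in meaning. The index alone carries no information about synonymy.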

![Dimension of similarity

Test for linear relationships, examined by Mikolov et al.

a : b :: c : ?

man : woman :: king : ?

(2-D plot of the points man, king, woman, and queen; both axes run from 0 to 1)

king [0.5, 0.2] - man [0.25, 0.5] + woman [0.6, 1] = queen [0.85, 0.7]

Pycon-India-2015](https://image.slidesharecdn.com/ashutosh-pycon-151005185853-lva1-app6892/85/Ashutosh-pycon-28-320.jpg)
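The arithmetic on this slide can be reproduced with a short sketch that uses the 2-D toy vectors shown there. The helper names `analogy` and `nearest`, and the use of Euclidean distance to pick the closest word, are assumptions for illustration; real word vectors have many more dimensions and are usually compared by cosine similarity.

```python
import math

# Toy 2-D vectors copied from the slide.
vectors = {
    "king": [0.5, 0.2],
    "man": [0.25, 0.5],
    "woman": [0.6, 1.0],
    "queen": [0.85, 0.7],
}


def analogy(a, b, c):
    """Solve a : b :: c : ? by computing vec(c) - vec(a) + vec(b)."""
    return [vc - va + vb for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]


def nearest(target):
    """Return the vocabulary word whose vector is closest to `target`."""
    return min(vectors, key=lambda word: math.dist(vectors[word], target))


target = analogy("man", "woman", "king")  # king - man + woman
answer = nearest(target)
```

With these numbers the computed point coincides with the slide's vector for queen, up to floating-point rounding.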




Viral>Wondershare Filmora 14.5.18.12900 Crack Free Download

Viral>Wondershare Filmora 14.5.18.12900 Crack Free DownloadPuppy jhon

╠²

Ō×Ī ¤īŹ¤ō▒¤æēCOPY & PASTE LINK¤æē¤æē¤æē Ō׿ Ō׿Ō׿ https://drfiles.net/

Wondershare Filmora Crack is a user-friendly video editing software designed for both beginners and experienced users.

Reducing Conflicts and Increasing Safety Along the Cycling Networks of East-F...

Reducing Conflicts and Increasing Safety Along the Cycling Networks of East-F...Safe Software

╠²

In partnership with the Belgian Province of East-Flanders this project aimed to reduce conflicts and increase safety along a cycling route between the cities of Oudenaarde and Ghent. To achieve this goal, the current cycling network data needed some extra key information, including: Speed limits for segments, Access restrictions for different users (pedestrians, cyclists, motor vehicles, etc.), Priority rules at intersections. Using a 360┬░ camera and GPS mounted on a measuring bicycle, we collected images of traffic signs and ground markings along the cycling lanes building up mobile mapping data. Image recognition technologies identified the road signs, creating a dataset with their locations and codes. The data processing entailed three FME workspaces. These included identifying valid intersections with other networks (e.g., roads, railways), creating a topological network between segments and intersections and linking road signs to segments and intersections based on proximity and orientation. Additional features, such as speed zones, inheritance of speed and access to neighbouring segments were also implemented to further enhance the data. The final results were visualized in ArcGIS, enabling analysis for the end users. The project provided them with key insights, including statistics on accessible road segments, speed limits, and intersection priorities. These will make the cycling paths more safe and uniform, by reducing conflicts between users.Providing an OGC API Processes REST Interface for FME Flow

Providing an OGC API Processes REST Interface for FME FlowSafe Software

╠²

This presentation will showcase an adapter for FME Flow that provides REST endpoints for FME Workspaces following the OGC API Processes specification. The implementation delivers robust, user-friendly API endpoints, including standardized methods for parameter provision. Additionally, it enhances security and user management by supporting OAuth2 authentication. Join us to discover how these advancements can elevate your enterprise integration workflows and ensure seamless, secure interactions with FME Flow.War_And_Cyber_3_Years_Of_Struggle_And_Lessons_For_Global_Security.pdf

War_And_Cyber_3_Years_Of_Struggle_And_Lessons_For_Global_Security.pdfbiswajitbanerjee38

╠²

Russia is one of the most aggressive nations when it comes to state coordinated cyberattacksŌĆŖŌĆöŌĆŖand Ukraine has been at the center of their crosshairs for 3 years. This report, provided the State Service of Special Communications and Information Protection of Ukraine contains an incredible amount of cybersecurity insights, showcasing the coordinated aggressive cyberwarfare campaigns of Russia against Ukraine.

It brings to the forefront that understanding your adversary, especially an aggressive nation state, is important for cyber defense. Knowing their motivations, capabilities, and tactics becomes an advantage when allocating resources for maximum impact.

Intelligence shows Russia is on a cyber rampage, leveraging FSB, SVR, and GRU resources to professionally target UkraineŌĆÖs critical infrastructures, military, and international diplomacy support efforts.

The number of total incidents against Ukraine, originating from Russia, has steadily increased from 1350 in 2021 to 4315 in 2024, but the number of actual critical incidents has been managed down from a high of 1048 in 2022 to a mere 59 in 2024ŌĆŖŌĆöŌĆŖshowcasing how the rapid detection and response to cyberattacks has been impacted by UkraineŌĆÖs improved cyber resilience.

Even against a much larger adversary, Ukraine is showcasing outstanding cybersecurity, enabled by strong strategies and sound tactics. There are lessons to learn for any enterprise that could potentially be targeted by aggressive nation states.

Definitely worth the read!Kubernetes Security Act Now Before ItŌĆÖs Too Late

Kubernetes Security Act Now Before ItŌĆÖs Too LateMichael Furman

╠²

In today's cloud-native landscape, Kubernetes has become the de facto standard for orchestrating containerized applications, but its inherent complexity introduces unique security challenges. Are you one YAML away from disaster?

This presentation, "Kubernetes Security: Act Now Before ItŌĆÖs Too Late," is your essential guide to understanding and mitigating the critical security risks within your Kubernetes environments. This presentation dives deep into the OWASP Kubernetes Top Ten, providing actionable insights to harden your clusters.

We will cover:

The fundamental architecture of Kubernetes and why its security is paramount.

In-depth strategies for protecting your Kubernetes Control Plane, including kube-apiserver and etcd.

Crucial best practices for securing your workloads and nodes, covering topics like privileged containers, root filesystem security, and the essential role of Pod Security Admission.

Don't wait for a breach. Learn how to identify, prevent, and respond to Kubernetes security threats effectively.

It's time to act now before it's too late!Floods in Valencia: Two FME-Powered Stories of Data Resilience

Floods in Valencia: Two FME-Powered Stories of Data ResilienceSafe Software

╠²

In October 2024, the Spanish region of Valencia faced severe flooding that underscored the critical need for accessible and actionable data. This presentation will explore two innovative use cases where FME facilitated data integration and availability during the crisis. The first case demonstrates how FME was used to process and convert satellite imagery and other geospatial data into formats tailored for rapid analysis by emergency teams. The second case delves into making human mobility dataŌĆöcollected from mobile phone signalsŌĆöaccessible as source-destination matrices, offering key insights into population movements during and after the flooding. These stories highlight how FME's powerful capabilities can bridge the gap between raw data and decision-making, fostering resilience and preparedness in the face of natural disasters. Attendees will gain practical insights into how FME can support crisis management and urban planning in a changing climate.Crypto Super 500 - 14th Report - June2025.pdf

Crypto Super 500 - 14th Report - June2025.pdfStephen Perrenod

╠²

This OrionX's 14th semi-annual report on the state of the cryptocurrency mining market. The report focuses on Proof-of-Work cryptocurrencies since those use substantial supercomputer power to mint new coins and encode transactions on their blockchains. Only two make the cut this time, Bitcoin with $18 billion of annual economic value produced and Dogecoin with $1 billion. Bitcoin has now reached the Zettascale with typical hash rates of 0.9 Zettahashes per second. Bitcoin is powered by the world's largest decentralized supercomputer in a continuous winner take all lottery incentive network.The State of Web3 Industry- Industry Report

The State of Web3 Industry- Industry ReportLiveplex

╠²

Web3 is poised for mainstream integration by 2030, with decentralized applications potentially reaching billions of users through improved scalability, user-friendly wallets, and regulatory clarity. Many forecasts project trillions of dollars in tokenized assets by 2030 , integration of AI, IoT, and Web3 (e.g. autonomous agents and decentralized physical infrastructure), and the possible emergence of global interoperability standards. Key challenges going forward include ensuring security at scale, preserving decentralization principles under regulatory oversight, and demonstrating tangible consumer value to sustain adoption beyond speculative cycles.vertical-cnc-processing-centers-drillteq-v-200-en.pdf

vertical-cnc-processing-centers-drillteq-v-200-en.pdfAmirStern2

╠²

ū×ūøūĢūĀūĢū¬ CNC ū¦ūÖūōūĢūŚ ūÉūĀūøūÖūĢū¬ ūöū¤ ūöūæūŚūÖū©ūö ūöūĀūøūĢūĀūö ūĢūöūśūĢūæūö ūæūÖūĢū¬ū© ū£ū¦ūÖūōūĢūŚ ūÉū©ūĢūĀūĢū¬ ūĢūÉū©ūÆū¢ūÖūØ ū£ūÖūÖū”ūĢū© ū©ūöūÖūśūÖūØ. ūöūŚū£ū¦ ūĀūĢūĪūó ū£ūÉūĢū©ūÜ ū”ūÖū© ūö-x ūæūÉū×ū”ūóūĢū¬ ū”ūÖū© ūōūÖūÆūÖūśū£ūÖ ū×ūōūĢūÖū¦, ūĢū¬ūżūĢūĪ ūó"ūÖ ū”ūæū¬ ū×ūøūĀūÖū¬, ūøūÜ ū®ūÉūÖū¤ ū”ūĢū©ūÜ ū£ūæū”ūó setup (ūöū¬ūÉū×ūĢū¬) ū£ūÆūōū£ūÖūØ ū®ūĢūĀūÖūØ ū®ū£ ūŚū£ū¦ūÖūØ. FME for Good: Integrating Multiple Data Sources with APIs to Support Local Ch...

FME for Good: Integrating Multiple Data Sources with APIs to Support Local Ch...Safe Software

╠²

Have-a-skate-with-Bob (HASB-KC) is a local charity that holds two Hockey Tournaments every year to raise money in the fight against Pancreatic Cancer. The FME Form software is used to integrate and exchange data via API, between Google Forms, Google Sheets, Stripe payments, SmartWaiver, and the GoDaddy email marketing tools to build a grass-roots Customer Relationship Management (CRM) system for the charity. The CRM is used to communicate effectively and readily with the participants of the hockey events and most importantly the local area sponsors of the event. Communication consists of a BLOG used to inform participants of event details including, the ever-important team rosters. Funds raised by these events are used to support families in the local area to fight cancer and support PanCan research efforts to find a cure against this insidious disease. FME Form removes the tedium and error-prone manual ETL processes against these systems into 1 or 2 workbenches that put the data needed at the fingertips of the event organizers daily freeing them to work on outreach and marketing of the events in the community.ŌĆ£From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge,...

ŌĆ£From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge,...Edge AI and Vision Alliance

╠²

For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/06/from-enterprise-to-makers-driving-vision-ai-innovation-at-the-extreme-edge-a-presentation-from-sony-semiconductor-solutions/

Amir Servi, Edge Deep Learning Product Manager at Sony Semiconductor Solutions, presents the ŌĆ£From Enterprise to Makers: Driving Vision AI Innovation at the Extreme EdgeŌĆØ tutorial at the May 2025 Embedded Vision Summit.

SonyŌĆÖs unique integrated sensor-processor technology is enabling ultra-efficient intelligence directly at the image source, transforming vision AI for enterprises and developers alike. In this presentation, Servi showcases how the AITRIOS platform simplifies vision AI for enterprises with tools for large-scale deployments and model management.

Servi also highlights his companyŌĆÖs collaboration with Ultralytics and Raspberry Pi, which brings YOLO models to the developer community, empowering grassroots innovation. Whether youŌĆÖre scaling vision AI for industry or experimenting with cutting-edge tools, this presentation will demonstrate how Sony is accelerating high-performance, energy-efficient vision AI for all.FIDO Seminar: Perspectives on Passkeys & Consumer Adoption.pptx

FIDO Seminar: Perspectives on Passkeys & Consumer Adoption.pptxFIDO Alliance

╠²

FIDO Seminar: Perspectives on Passkeys & Consumer AdoptionFME for Distribution & Transmission Integrity Management Program (DIMP & TIMP)

FME for Distribution & Transmission Integrity Management Program (DIMP & TIMP)Safe Software

╠²

Peoples Gas in Chicago, IL has changed to a new Distribution & Transmission Integrity Management Program (DIMP & TIMP) software provider in recent years. In order to successfully deploy the new software we have created a series of ETL processes using FME Form to transform our gas facility data to meet the required DIMP & TIMP data specifications. This presentation will provide an overview of how we used FME to transform data from ESRIŌĆÖs Utility Network and several other internal and external sources to meet the strict data specifications for the DIMP and TIMP software solutions.Down the Rabbit Hole ŌĆō Solving 5 Training Roadblocks

Down the Rabbit Hole ŌĆō Solving 5 Training RoadblocksRustici Software

╠²

Feeling stuck in the Matrix of your training technologies? YouŌĆÖre not alone. Managing your training catalog, wrangling LMSs and delivering content across different tools and audiences can feel like dodging digital bullets. At some point, you hit a fork in the road: Keep patching things up as issues pop upŌĆ” or follow the rabbit hole to the root of the problems.

Good news, weŌĆÖve already been down that rabbit hole. Peter Overton and Cameron Gray of Rustici Software are here to share what we found. In this webinar, weŌĆÖll break down 5 training roadblocks in delivery and management and show you how theyŌĆÖre easier to fix than you might think.FIDO Seminar: Authentication for a Billion Consumers - Amazon.pptx

FIDO Seminar: Authentication for a Billion Consumers - Amazon.pptxFIDO Alliance

╠²

FIDO Seminar: Authentication for a Billion Consumers - AmazonTrustArc Webinar - 2025 Global Privacy Survey

TrustArc Webinar - 2025 Global Privacy SurveyTrustArc

╠²

How does your privacy program compare to your peers? What challenges are privacy teams tackling and prioritizing in 2025?

In the sixth annual Global Privacy Benchmarks Survey, we asked global privacy professionals and business executives to share their perspectives on privacy inside and outside their organizations. The annual report provides a 360-degree view of various industries' priorities, attitudes, and trends. See how organizational priorities and strategic approaches to data security and privacy are evolving around the globe.

This webinar features an expert panel discussion and data-driven insights to help you navigate the shifting privacy landscape. Whether you are a privacy officer, legal professional, compliance specialist, or security expert, this session will provide actionable takeaways to strengthen your privacy strategy.

This webinar will review:

- The emerging trends in data protection, compliance, and risk

- The top challenges for privacy leaders, practitioners, and organizations in 2025

- The impact of evolving regulations and the crossroads with new technology, like AI

Predictions for the future of privacy in 2025 and beyondCan We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...

Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...NTT DATA Technology & Innovation

╠²

Can We Use Rust to Develop Extensions for PostgreSQL?

(POSETTE: An Event for Postgres 2025)

June 11, 2025

Shinya Kato

NTT DATA Japan CorporationFIDO Seminar: Evolving Landscape of Post-Quantum Cryptography.pptx

FIDO Seminar: Evolving Landscape of Post-Quantum Cryptography.pptxFIDO Alliance

╠²

FIDO Seminar: Evolving Landscape of Post-Quantum CryptographyAI VIDEO MAGAZINE - June 2025 - r/aivideo

AI VIDEO MAGAZINE - June 2025 - r/aivideo1pcity Studios, Inc

╠²

AI VIDEO MAGAZINE - r/aivideo community newsletter ŌĆō Exclusive Tutorials: How to make an AI VIDEO from scratch, PLUS: How to make AI MUSIC, Hottest ai videos of 2025, Exclusive Interviews, New Tools, Previews, and MORE - JUNE 2025 ISSUE -ŌĆ£From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge,...

ŌĆ£From Enterprise to Makers: Driving Vision AI Innovation at the Extreme Edge,...Edge AI and Vision Alliance

╠²

Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...

Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...NTT DATA Technology & Innovation

╠²

Ad

Ashutosh pycon

- 1. Solving Logical Puzzles with Natural Language Processing Pycon-India 2015 by Ashutosh Trivedi Founder, bracketPy www.bracketpy.com Pycon-India-2015

- 2. The NLP Story: I know Natural Language. I know Processing.

- 3. The NLP Story: To understand natural language programmatically. But how? Part of speech, vocabulary, n-gram, word style, noun detection.

- 4. The NLP Story: "Hey, I am a sentence. Can you process me?" Information: current word, previous word, next word, current word n-gram, POS tag, surrounding POS tag sequence, word shape (all caps?), surrounding word shape, presence of word in left/right window.

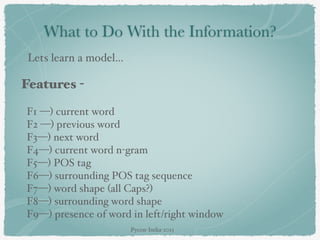

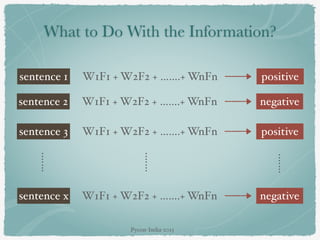

- 5. What to Do With the Information? Let's learn a model. Features: F1) current word; F2) previous word; F3) next word; F4) current word n-gram; F5) POS tag; F6) surrounding POS tag sequence; F7) word shape (all caps?); F8) surrounding word shape; F9) presence of word in left/right window.

- 6. What to Do With the Information? Sentence 1: W1F1 + W2F2 + … + WnFn, positive. Sentence 2: W1F1 + W2F2 + … + WnFn, negative. Sentence 3: W1F1 + W2F2 + … + WnFn, positive. … Sentence x: W1F1 + W2F2 + … + WnFn, negative.
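The weighted sum on this slide can be sketched as a tiny linear classifier. This is only an illustration: the feature values, the weights, and the rule "score above zero means positive" are all made-up assumptions, not taken from the talk.

```python
# Hypothetical numeric feature values F1..F4 for three sentences (made-up).
sentences = [
    [1.0, 0.0, 2.0, 1.0],  # sentence 1
    [0.0, 1.0, 0.0, 3.0],  # sentence 2
    [2.0, 0.0, 1.0, 0.0],  # sentence 3
]

# Hypothetical weights W1..W4; a learning algorithm would normally fit these.
weights = [0.8, -1.2, 0.5, -0.6]


def score(features, weights):
    """Weighted sum W1*F1 + W2*F2 + ... + Wn*Fn."""
    return sum(w * f for w, f in zip(weights, features))


def classify(features, weights):
    """Assumed decision rule: a score above zero is labelled positive."""
    return "positive" if score(features, weights) > 0 else "negative"


labels = [classify(features, weights) for features in sentences]
print(labels)
```

Learning the model then means adjusting `weights` until the predicted labels agree with the known labels on the training sentences.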

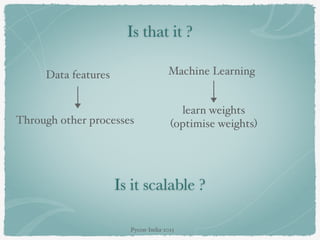

- 7. Is that it? Data, features (through other processes), Machine Learning: learn weights (optimise weights). Is it scalable?

- 8. So what is wrong? We are talking about AI.

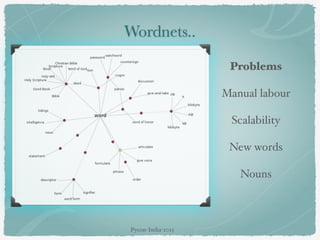

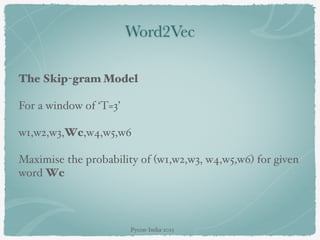

- 9. How do we represent a word? Index in vocab: the 30th word is [0, 0, …, 1, 0, 0, 0, …, 0] (1 at position 30); the 45th word is [0, 0, …, 0, 0, 0, …, 0, 1, …, 0] (1 at position 45). How would a processor know that 'good' at index 30 is a synonym for 'nice' at index 45?
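A minimal sketch of why index-based (one-hot) vectors carry no notion of similarity. The vocabulary size of 100 is an assumption for illustration, and the indices are treated as 0-based here.

```python
def one_hot(index, vocab_size):
    """Vector of zeros with a single 1 at the word's vocabulary index."""
    vec = [0] * vocab_size
    vec[index] = 1
    return vec


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


VOCAB_SIZE = 100  # assumed vocabulary size, for illustration only

good = one_hot(30, VOCAB_SIZE)
nice = one_hot(45, VOCAB_SIZE)

# Two different words never share a non-zero position, so their dot product is 0.
similarity_good_nice = dot(good, nice)
# A word only ever matches itself.
similarity_good_good = dot(good, good)
```

Every pair of distinct words scores exactly the same, so the representation cannot tell a synonym from an unrelated word.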

- 12. So how do we remember words? Our human hooks: associated word, person, context, taste, smell, time, visual, feelings.

- 13. How to represent words programmatically? You, as an individual, are the average of the 5 people you spend time with every day. "You shall know a word by the company it keeps" (J. R. Firth, 1957).

- 14. How to make neighbours represent the word? One of the most successful ideas of statistical NLP: the co-occurrence matrix. It captures both syntactic and semantic information.

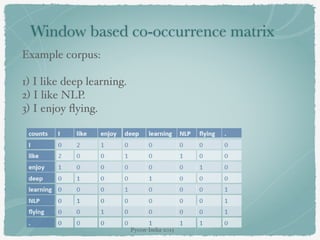

- 15. Window based co-occurrence matrix. Example corpus: 1) I like deep learning. 2) I like NLP. 3) I enjoy flying.
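A rough sketch of how such a matrix could be counted for the example corpus. The window size of 1 (one word to the left and one to the right) and the choice to drop the full stops are assumptions; the slide does not state them.

```python
from collections import defaultdict

# Example corpus from the slide, with the full stops dropped (assumption).
corpus = ["I like deep learning", "I like NLP", "I enjoy flying"]
WINDOW = 1  # assumed window size


def cooccurrence(sentences, window):
    """Count how often each ordered pair of words appears within the window."""
    counts = defaultdict(int)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts


counts = cooccurrence(corpus, WINDOW)
vocab = sorted({word for sentence in corpus for word in sentence.split()})

# Dense matrix: one row and one column per vocabulary word.
matrix = [[counts[(row, col)] for col in vocab] for row in vocab]

for word, row in zip(vocab, matrix):
    print(f"{word:>8} {row}")
```

With a symmetric window the matrix is symmetric, and its size grows with the square of the vocabulary.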

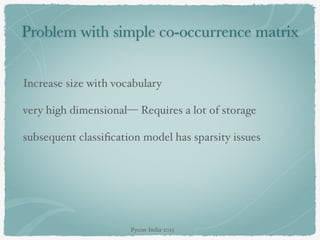

- 16. Problem with simple co-occurrence matrix: its size increases with the vocabulary; it is very high dimensional and requires a lot of storage; the subsequent classification model has sparsity issues.

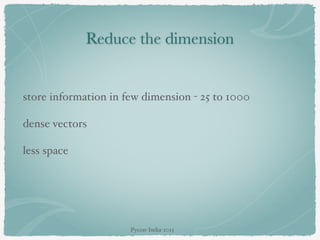

- 17. Reduce the dimension: store the information in a few dimensions (25 to 1000), as dense vectors that take less space.

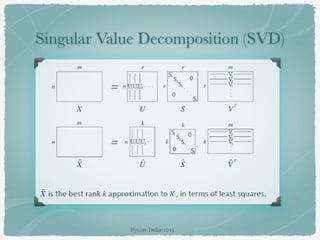

- 18. Singular Value Decomposition (SVD)
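As an illustration, a truncated SVD of a small co-occurrence matrix can be sketched with NumPy. The matrix below is an assumed window-1 count matrix for the three-sentence example corpus (full stops dropped), and keeping k = 2 dimensions is a toy choice, far below the 25 to 1000 range mentioned earlier.

```python
import numpy as np

words = ["I", "like", "enjoy", "deep", "learning", "NLP", "flying"]

# Assumed window-1 co-occurrence counts; rows and columns follow `words`.
X = np.array([
    [0, 2, 1, 0, 0, 0, 0],  # I
    [2, 0, 0, 1, 0, 1, 0],  # like
    [1, 0, 0, 0, 0, 0, 1],  # enjoy
    [0, 1, 0, 0, 1, 0, 0],  # deep
    [0, 0, 0, 1, 0, 0, 0],  # learning
    [0, 1, 0, 0, 0, 0, 0],  # NLP
    [0, 0, 1, 0, 0, 0, 0],  # flying
], dtype=float)

# Full decomposition: X = U @ diag(S) @ Vt, singular values in descending order.
U, S, Vt = np.linalg.svd(X, full_matrices=False)

# Keep only the k strongest directions as a dense, low-dimensional word vector.
k = 2
embeddings = U[:, :k] * S[:k]

for word, vec in zip(words, embeddings):
    print(f"{word:>8} {np.round(vec, 2)}")
```

Each row of `embeddings` is a dense 2-dimensional vector for one word, replacing its sparse 7-dimensional row of counts.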

- 20. Problem with SVD: computational cost scales quadratically for an N x M matrix; bad for millions of words or documents; hard to incorporate new words.

- 21. Word2Vec: Directly learn low dimensional vectors. Instead of capturing co-occurrence counts directly, predict the surrounding words of every word. "Glove: Global Vectors for Word Representation" by Pennington et al. (2014). Faster, and can easily incorporate a new sentence/document or add a word to the vocabulary.

- 22. Word2Vec, the Skip-gram Model: The objective of the Skip-gram model is to find word representations that are useful for predicting the surrounding words in a sentence or a document. W1, W2, W3, …, Wt is the sentence/document. Maximise the log probability of any context word given the current centre word.
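To make "the probability of a context word given the centre word" concrete, here is a small sketch using a full softmax over dot products, which is the simplest form of the skip-gram probability. The words and 2-dimensional vectors are made up for illustration, and real implementations usually replace the full softmax with cheaper approximations.

```python
import math

# Made-up vectors; training would normally learn these values.
centre_vectors = {"like": [0.9, 0.1]}
context_vectors = {
    "I": [0.8, 0.2],
    "deep": [0.7, 0.0],
    "flying": [-0.5, 0.9],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def context_probability(centre, context):
    """Softmax over the dot products between the centre word and every candidate."""
    scores = {w: dot(centre_vectors[centre], v) for w, v in context_vectors.items()}
    top = max(scores.values())  # subtract the maximum for numerical stability
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    return exps[context] / sum(exps.values())


probs = {w: context_probability("like", w) for w in context_vectors}

# Log probability of the words assumed to be observed next to "like".
log_likelihood = sum(math.log(probs[w]) for w in ["I", "deep"])
print(probs, log_likelihood)
```

Training nudges the vectors so that `log_likelihood`, summed over every centre word in the corpus, becomes as large as possible.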

- 23. Word2Vec, the Skip-gram Model: For a window of 'T=3': w1, w2, w3, Wc, w4, w5, w6. Maximise the probability of (w1, w2, w3, w4, w5, w6) for the given word Wc.
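The windowing step can be sketched as generating (centre, context) training pairs. The function name `skipgram_pairs` is a hypothetical helper for illustration, not part of any library.

```python
def skipgram_pairs(tokens, window):
    """Pair every word with each neighbour at most `window` positions away."""
    pairs = []
    for c, centre in enumerate(tokens):
        lo = max(0, c - window)
        hi = min(len(tokens), c + window + 1)
        for j in range(lo, hi):
            if j != c:
                pairs.append((centre, tokens[j]))
    return pairs


tokens = ["w1", "w2", "w3", "Wc", "w4", "w5", "w6"]
pairs = skipgram_pairs(tokens, window=3)

# The context words the model should predict when Wc is the centre word.
context_of_wc = [context for centre, context in pairs if centre == "Wc"]
print(context_of_wc)
```

Words near the edges of the sentence simply get fewer context words, because the window is clipped at the boundaries.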

- 24. Word2Vec: Unsupervised method. We are just optimising the probability of words with respect to their neighbours, creating a low dimensional (probabilistic) space.

- 25. Lower Dimensions: Dimension of similarity. Dimension of sentiment? Dimension of POS? Dimension of all words having 5 vowels? It can be anything: word embeddings.

- 26. Dimension of similarity: Analogies testing dimensions of similarity can be solved quite well just by doing vector subtraction in the embedding space. Syntactically (singular, plural): $X_{apple} - X_{apples} \approx X_{car} - X_{cars} \approx X_{family} - X_{families}$.

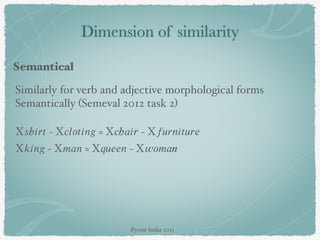

- 27. Dimension of similarity (semantic): Similarly for verb and adjective morphological forms. Semantically (Semeval 2012 task 2): $X_{shirt} - X_{clothing} \approx X_{chair} - X_{furniture}$ and $X_{king} - X_{man} \approx X_{queen} - X_{woman}$.

- 28. Dimension of similarity: Test for linear relationships, examined by Mikolov et al. a : b :: c : ? For example, man : woman :: king : ? (Plot: man, king, woman and queen as points in a 2D space, both axes running from 0 to 1.) + king [0.5, 0.2] - man [0.25, 0.5] + woman [0.6, 1] = queen [0.85, 0.7].
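The arithmetic on this slide can be checked with a few lines of code. The four 2-dimensional vectors are the ones shown on the slide; the extra word "apple" and its vector are made up so that the nearest-neighbour search has something to reject, and cosine similarity is an assumed choice of measure.

```python
import math

# Vectors from the slide, plus one made-up distractor ("apple").
vectors = {
    "king": [0.5, 0.2],
    "man": [0.25, 0.5],
    "woman": [0.6, 1.0],
    "queen": [0.85, 0.7],
    "apple": [0.1, 0.1],  # hypothetical, not from the slide
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c):
    """Solve a : b :: c : ? by finding the word nearest to c - a + b."""
    target = [vc - va + vb for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    best = max(candidates, key=lambda w: cosine(vectors[w], target))
    return best, target


answer, target = analogy("man", "woman", "king")
print(answer, target)
```

The input words are excluded from the candidates, which is the usual convention when answering analogy queries this way.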


- 31. Superlatives: Pycon India, Ashutosh Trivedi.

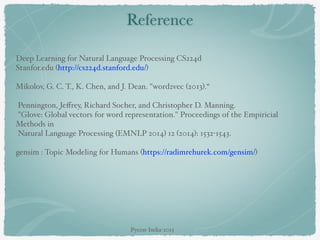

- 33. References
  - Deep Learning for Natural Language Processing, CS224d, Stanford.edu (http://cs224d.stanford.edu/)
  - Mikolov, G. C. T., K. Chen, and J. Dean. "word2vec" (2013).
  - Pennington, Jeffrey, Richard Socher, and Christopher D. Manning. "Glove: Global vectors for word representation." Proceedings of the Empirical Methods in Natural Language Processing (EMNLP 2014) 12 (2014): 1532-1543.
  - gensim: Topic Modeling for Humans (https://radimrehurek.com/gensim/)