Assignment 2 Practical

- 1. Practical Assignment 2 Saúl Gausin Ramón González

- 2. FIFO

- 3. After execution We compared our results with Rinard's notes. In this case we found an error in Rinard's notes at the point where page 4 enters memory.

- 4. LRU This is the code of our implementation of the LRU algorithm. The main difference from FIFO is that we move the requested page to the end of the array of elements, so that we know which page is the most recently used.
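
The move-to-the-end idea described in this slide can be sketched as follows. This is a minimal illustration, not the original implementation from the slides: the function name `lru_page_faults`, the parameter names, and the sample reference string are all assumptions made for the example.

```python
def lru_page_faults(reference_string, num_frames):
    """Simulate LRU replacement (hypothetical helper, not the slides' code).

    `frames` is kept ordered by recency: index 0 is the least recently
    used page, and the last element is the most recently used one.
    Returns the number of page faults and the final frame contents.
    """
    frames = []
    faults = 0
    for page in reference_string:
        if page in frames:
            # Hit: take the page out so it can be re-appended at the end.
            frames.remove(page)
        else:
            # Miss: count a page fault and, if memory is full,
            # evict the least recently used page (front of the list).
            faults += 1
            if len(frames) == num_frames:
                frames.pop(0)
        # In both cases the page becomes the most recently used.
        frames.append(page)
    return faults, frames


# Sample reference string chosen for illustration only.
faults, frames = lru_page_faults([1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5], 3)
print(faults, frames)
```

Keeping the list ordered by recency means the eviction victim is always at index 0, at the cost of a linear scan on every reference. That is acceptable for the handful of frames used in an exercise like this one.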

- 5. After execution Again we compared our results with Rinard's notes. In the program's output you see a zero in the first 3 lines; it means that there is no page in that position in memory.

- 6. FIFO vs LRU The difference lies in how well each algorithm avoids page faults. In our comparison, FIFO incurs more page faults than LRU.
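
A comparison of this kind can be reproduced with a small sketch that counts page faults for both policies on the same input. The function names and the reference string below are assumptions for illustration (the string is a common textbook example, not necessarily the one used in this assignment), and the result depends on the input: some reference strings give FIFO fewer faults than LRU.

```python
from collections import deque


def fifo_faults(reference_string, num_frames):
    """Count page faults under FIFO (hypothetical helper)."""
    frames = deque()  # leftmost element is the oldest loaded page
    faults = 0
    for page in reference_string:
        if page not in frames:
            faults += 1
            if len(frames) == num_frames:
                frames.popleft()  # evict the page loaded first
            frames.append(page)
        # On a hit FIFO does nothing: load order is unchanged.
    return faults


def lru_faults(reference_string, num_frames):
    """Count page faults under LRU (hypothetical helper)."""
    frames = []  # last element is the most recently used page
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.remove(page)  # will be re-appended as most recent
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.pop(0)  # evict the least recently used page
        frames.append(page)
    return faults


# Illustrative reference string, 3 frames.
refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print("FIFO:", fifo_faults(refs, 3))
print("LRU: ", lru_faults(refs, 3))
```

For this particular string and 3 frames the sketch reports 15 faults for FIFO and 12 for LRU.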

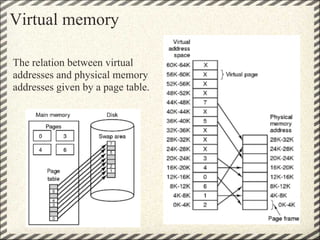

- 8. Virtual memory The relation between virtual addresses and physical memory addresses is given by a page table.
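
As a rough illustration of how a page table relates the two kinds of address, the sketch below splits a virtual address into a page number and an offset, then looks the page number up in a table. The 4096-byte page size, the dictionary-based table, and the name `translate` are assumptions for the example; real page tables are hardware-defined structures.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def translate(virtual_address, page_table):
    """Map a virtual address to a physical one (hypothetical helper).

    `page_table` maps virtual page numbers to physical frame numbers.
    """
    # The high part of the address selects the page, the low part is
    # the offset inside that page and is carried over unchanged.
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # An unmapped page is what triggers a page fault.
        raise LookupError(f"page fault: virtual page {page_number} is not mapped")
    return page_table[page_number] * PAGE_SIZE + offset


# Illustrative mapping: virtual page 0 -> frame 5, virtual page 1 -> frame 2.
page_table = {0: 5, 1: 2}
print(translate(4100, page_table))  # virtual page 1, offset 4
```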

- 9. TLB A translation lookaside buffer (TLB) is a CPU cache that memory management hardware uses to speed up virtual address translation. All current desktop and server processors use a TLB to map between virtual and physical address spaces, and it is ubiquitous in hardware that uses virtual memory.
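
The caching role of a TLB can be sketched in software as a small table of recent translations consulted before the page table. Everything here is an assumption for illustration: the class name `TLB`, the 4096-byte page size, the dictionary page table, and the LRU eviction of TLB entries (real hardware uses a variety of replacement policies).

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes


class TLB:
    """Toy model of a translation lookaside buffer (hypothetical)."""

    def __init__(self, capacity, page_table):
        self.capacity = capacity
        self.page_table = page_table  # virtual page number -> frame number
        self.entries = OrderedDict()  # cached translations, oldest use first
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_address):
        page_number, offset = divmod(virtual_address, PAGE_SIZE)
        if page_number in self.entries:
            # Fast path: the translation is already cached.
            self.hits += 1
            self.entries.move_to_end(page_number)
        else:
            # Slow path: consult the page table, then cache the result.
            self.misses += 1
            frame = self.page_table[page_number]
            if len(self.entries) == self.capacity:
                self.entries.popitem(last=False)  # drop least recently used entry
            self.entries[page_number] = frame
        return self.entries[page_number] * PAGE_SIZE + offset


tlb = TLB(capacity=2, page_table={0: 5, 1: 2, 2: 7})
for address in (0, 4, 4096, 8192, 0):
    tlb.translate(address)
print(tlb.hits, tlb.misses)
```

Only the second access (address 4, same page as address 0) hits the cache in this run. The last access misses again because page 0 was evicted when the two-entry TLB filled up.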