Benchmarking Automated Machine Learning For Clustering

- 1. Benchmarking Automated Machine Learning for Clustering
  - Candidate: Biagio Licari
  - Supervisor: Prof. Sylvio Barbon Junior
  - A.Y. 22/23

- 2. Index
  - Introduction
  - Why benchmarking
  - Benchmark Design
  - Results
  - Conclusion

- 3. Introduction: Automated Machine Learning
  - AutoML automates complex and time-consuming tasks in the machine learning pipeline
  - It makes machine learning more accessible to users with diverse levels of expertise
  - AutoML tasks include feature engineering, algorithm selection, and hyperparameter optimization; the last two combined form the CASH problem (Combined Algorithm Selection and Hyperparameter optimization)
  - AutoML frameworks mainly tackle supervised problems
  - Validating results for unsupervised problems is challenging

- 4. Introduction: AutoML for Clustering
  - Clustering is a foundational technique with broad applications in pattern recognition, image segmentation, and anomaly detection
  - Data clustering demands expertise in handling complex and diverse datasets
  - Automating the generation of unsupervised clustering solutions poses a significant challenge
  - Unsupervised clustering lacks clear targets
  - No single metric adequately describes every dataset
  - Meta-learning approaches hold potential in tackling the CASH problem

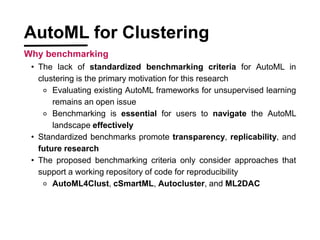

- 5. AutoML for Clustering: Why benchmarking
  - The lack of standardized benchmarking criteria for AutoML in clustering is the primary motivation for this research
  - Evaluating existing AutoML frameworks for unsupervised learning remains an open issue
  - Benchmarking is essential for users to navigate the AutoML landscape effectively
  - Standardized benchmarks promote transparency, replicability, and future research
  - For reproducibility, the proposed benchmark only considers approaches that provide a working code repository
  - Frameworks considered: AutoML4Clust, cSmartML, Autocluster, and ML2DAC

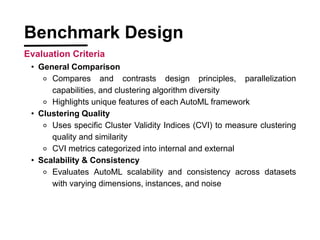

- 6. Benchmark Design: Evaluation Criteria
  - General Comparison
    - Compares and contrasts design principles, parallelization capabilities, and clustering algorithm diversity
    - Highlights unique features of each AutoML framework
  - Clustering Quality
    - Uses specific Cluster Validity Indices (CVIs) to measure clustering quality and similarity
    - CVIs are categorized as internal or external
  - Scalability & Consistency
    - Evaluates AutoML scalability and consistency across datasets with varying dimensions, instances, and noise

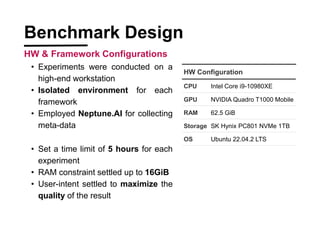

- 7. Benchmark Design: HW & Framework Configurations
  - Experiments were conducted on a high-end workstation
  - Isolated environment for each framework
  - Neptune.AI was used to collect metadata
  - Time limit of 5 hours for each experiment
  - RAM limit set to 16 GiB
  - User intent set to maximize the quality of the result

  | HW Configuration | Value |
  | --- | --- |
  | CPU | Intel Core i9-10980XE |
  | GPU | NVIDIA Quadro T1000 Mobile |
  | RAM | 62.5 GiB |
  | Storage | SK Hynix PC801 NVMe 1TB |
  | OS | Ubuntu 22.04.2 LTS |

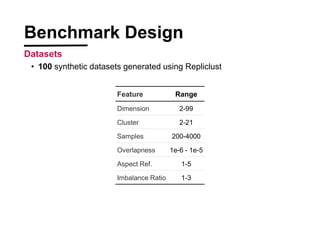

- 8. Benchmark Design: Datasets
  - 100 synthetic datasets generated using Repliclust

  | Feature | Range |
  | --- | --- |
  | Dimensions | 2-99 |
  | Clusters | 2-21 |
  | Samples | 200-4000 |
  | Overlap | 1e-6 - 1e-5 |
  | Aspect Ref. | 1-5 |
  | Imbalance Ratio | 1-3 |

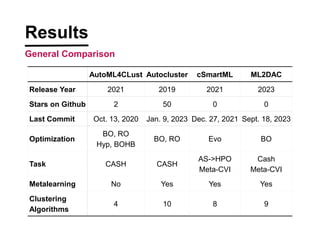
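
As a rough illustration of the dataset ranges in the table above, the following sketch draws 100 dataset configurations inside those ranges. It is not the Repliclust API: the `DatasetConfig` field names, the uniform sampling, and the seed are all assumptions made for illustration, since the slides do not state how the parameters were drawn.

```python
import random
from dataclasses import dataclass


@dataclass
class DatasetConfig:
    """Hypothetical container for one synthetic dataset's parameters."""
    n_dims: int
    n_clusters: int
    n_samples: int
    overlap: float
    aspect_ref: float
    imbalance_ratio: float


def sample_configs(n=100, seed=0):
    """Draw n configurations uniformly within the ranges listed on the slide.

    Uniform sampling is an assumption; the source only gives the ranges.
    """
    rng = random.Random(seed)
    return [
        DatasetConfig(
            n_dims=rng.randint(2, 99),             # Dimensions: 2-99
            n_clusters=rng.randint(2, 21),         # Clusters: 2-21
            n_samples=rng.randint(200, 4000),      # Samples: 200-4000
            overlap=rng.uniform(1e-6, 1e-5),       # Overlap: 1e-6 - 1e-5
            aspect_ref=rng.uniform(1, 5),          # Aspect Ref.: 1-5
            imbalance_ratio=rng.uniform(1, 3),     # Imbalance Ratio: 1-3
        )
        for _ in range(n)
    ]


configs = sample_configs()
print(len(configs), configs[0])
```

Each configuration would then be handed to the actual generator (Repliclust in the benchmark) to produce the data and ground-truth labels.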

- 9. Results: General Comparison

  | | AutoML4Clust | Autocluster | cSmartML | ML2DAC |
  | --- | --- | --- | --- | --- |
  | Release Year | 2021 | 2019 | 2021 | 2023 |
  | Stars on GitHub | 2 | 50 | 0 | 0 |
  | Last Commit | Oct. 13, 2020 | Jan. 9, 2023 | Dec. 27, 2021 | Sept. 18, 2023 |
  | Optimization | BO, RO, Hyp, BOHB | BO, RO | Evo | BO |
  | Task | CASH | CASH | AS->HPO, Meta-CVI | CASH, Meta-CVI |
  | Metalearning | No | Yes | Yes | Yes |
  | Clustering Algorithms | 4 | 10 | 8 | 9 |

- 10. Results: General Comparison
  - Parallelization features:
    - AutoML4Clust
      - Supports concurrent optimization tasks with BOHB and HyperBand
    - cSmartML
      - Excels with internal parallelization for hyperparameter optimization
    - Autocluster
      - Lacks parallelization during execution
    - ML2DAC
      - Employs Dask, an open-source Python library for parallel computing
      - Not implemented using BO

- 11. Results: General Comparison
  - The adaptability of AutoML frameworks significantly impacts clustering effectiveness
  - Search space features:
    - ML2DAC and Autocluster offer a wider search space for algorithm selection (AS)
    - AutoML4Clust's search space comprises solely k-centered clustering algorithms
    - cSmartML prioritizes hierarchical models, with some density-based and graph-theoretic algorithms

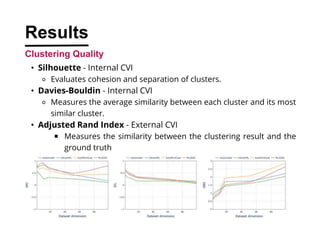

- 12. Results: Clustering Quality
  - Silhouette (SIL), internal CVI
    - Evaluates cohesion and separation of clusters
  - Davies-Bouldin (DBS), internal CVI
    - Measures the average similarity between each cluster and its most similar cluster
  - Adjusted Rand Index (ARI), external CVI
    - Measures the similarity between the clustering result and the ground truth

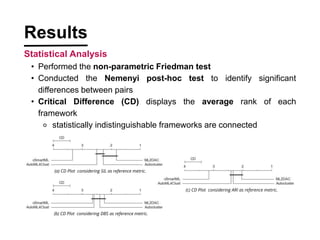
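
To make the three indices concrete, here is a minimal, dependency-free sketch of their textbook definitions on a toy 2-D dataset. It is only an illustration, not the benchmark's evaluation code; in practice one would more likely call scikit-learn's `silhouette_score`, `davies_bouldin_score`, and `adjusted_rand_score`. The toy points and labelings are made up, and the functions assume at least two clusters with distinct centroids.

```python
from collections import Counter
from math import comb, dist


def _group(points, labels):
    """Map each cluster label to the list of its points."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    return clusters


def silhouette(points, labels):
    """Mean of (b - a) / max(a, b); higher is better, range [-1, 1]."""
    clusters = _group(points, labels)
    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:          # singleton clusters score 0 by convention
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(dist(point, q) for q in own) / (len(own) - 1)
        # b: mean distance to the nearest other cluster
        b = min(sum(dist(point, q) for q in other) / len(other)
                for key, other in clusters.items() if key != label)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


def davies_bouldin(points, labels):
    """Mean over clusters of the worst (s_i + s_j) / d(c_i, c_j); lower is better."""
    clusters = _group(points, labels)
    centroids = {k: tuple(sum(axis) / len(pts) for axis in zip(*pts))
                 for k, pts in clusters.items()}
    scatter = {k: sum(dist(p, centroids[k]) for p in pts) / len(pts)
               for k, pts in clusters.items()}
    worst = [max((scatter[i] + scatter[j]) / dist(centroids[i], centroids[j])
                 for j in clusters if j != i)
             for i in clusters]
    return sum(worst) / len(worst)


def adjusted_rand_index(labels_true, labels_pred):
    """Chance-corrected pair agreement with the ground truth; 1.0 is a perfect match."""
    total_pairs = comb(len(labels_true), 2)
    same_both = sum(comb(c, 2) for c in Counter(zip(labels_true, labels_pred)).values())
    same_true = sum(comb(c, 2) for c in Counter(labels_true).values())
    same_pred = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = same_true * same_pred / total_pairs
    max_index = (same_true + same_pred) / 2
    if max_index == expected:      # degenerate case, e.g. one cluster on both sides
        return 1.0
    return (same_both - expected) / (max_index - expected)


# Toy data: two well-separated groups (illustrative values only).
points = [(0, 0), (0, 1), (10, 0), (10, 1)]
truth = [0, 0, 1, 1]
good = [1, 1, 0, 0]   # same partition, different label names
bad = [0, 1, 0, 1]    # splits each true group in half

for name, pred in (("good", good), ("bad", bad)):
    print(name, silhouette(points, pred), davies_bouldin(points, pred),
          adjusted_rand_index(truth, pred))
```

Note the opposite directions: SIL and ARI are maximized, while DBS is minimized, which matters when ranking frameworks per metric.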

- 13. Results: Statistical Analysis
  - Performed the non-parametric Friedman test
  - Conducted the Nemenyi post-hoc test to identify significant differences between pairs of frameworks
  - The Critical Difference (CD) plot displays the average rank of each framework
    - Statistically indistinguishable frameworks are connected
  - Figures: (a) CD plot with SIL as reference metric; (b) CD plot with DBS as reference metric; (c) CD plot with ARI as reference metric
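
The quantities behind a CD plot can be sketched in a few lines: per-dataset ranks averaged per framework, the Friedman chi-square statistic, and the Nemenyi critical difference CD = q_alpha * sqrt(k(k+1) / (6N)). The sketch below is an assumption-laden illustration rather than the analysis code used for the slides: the score matrix is made up, the Friedman statistic omits the tie correction, and the q_alpha values are the alpha = 0.05 constants commonly tabulated for the Nemenyi test.

```python
from math import sqrt

# Nemenyi critical values q_alpha at alpha = 0.05, indexed by the number of
# compared methods k (standard tabulated constants).
Q_ALPHA_005 = {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728}


def average_ranks(scores, higher_is_better=True):
    """scores[d][f] is the metric of framework f on dataset d.

    Rank 1 is the best framework on a dataset; ties share the average rank.
    Use higher_is_better=False for metrics such as Davies-Bouldin.
    """
    k = len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda f: row[f], reverse=higher_is_better)
        i = 0
        while i < k:
            j = i
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1                      # extend the run of tied scores
            tied_rank = (i + j) / 2 + 1     # average of positions i+1 .. j+1
            for pos in range(i, j + 1):
                totals[order[pos]] += tied_rank
            i = j + 1
    return [t / len(scores) for t in totals]


def friedman_statistic(avg_ranks, n_datasets):
    """Friedman chi-square statistic (no tie correction)."""
    k = len(avg_ranks)
    sum_sq = sum(r * r for r in avg_ranks)
    return 12 * n_datasets / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4)


def critical_difference(k, n_datasets, q_table=Q_ALPHA_005):
    """Two frameworks differ significantly if their average ranks differ by more than this."""
    return q_table[k] * sqrt(k * (k + 1) / (6 * n_datasets))


# Made-up scores for 3 hypothetical frameworks on 3 datasets.
scores = [[0.9, 0.5, 0.1],
          [0.8, 0.6, 0.2],
          [0.7, 0.7, 0.3]]
ranks = average_ranks(scores)
print(ranks, friedman_statistic(ranks, len(scores)))

# Setting of this benchmark: 4 frameworks, 100 datasets.
print(critical_difference(4, 100))
```

Under these assumptions, with 4 frameworks and 100 datasets the critical difference is roughly 0.47 rank units, so frameworks whose average ranks are closer than that would be drawn as connected.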

- 14. Results: Bayesian Bradley-Terry Tree Analysis
  - Applied the Bayesian Bradley-Terry (BBT) model to compare framework performance
  - The BBT model assigns each framework a "merit number" that reflects its performance
  - The Bayesian approach yields probabilities associated with framework rankings
  - Provides confidence beyond simple ranking, enhancing decision-making
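
The idea of a "merit number" can be illustrated with the classical Bradley-Terry model, where framework i beats framework j with probability p_i / (p_i + p_j). The sketch below fits the merits by maximum likelihood with the standard minorization-maximization update. This is a deliberate simplification: it is not the Bayesian variant used in the analysis, which places a posterior distribution over the merits instead of a point estimate. The win counts are made up, and the code assumes every framework wins at least once.

```python
def bradley_terry(wins, iters=500):
    """Maximum-likelihood Bradley-Terry merits via the MM update.

    wins[i][j] = number of datasets on which framework i beat framework j.
    Returns merits normalized to sum to 1.
    """
    k = len(wins)
    total_wins = [sum(row) for row in wins]
    merits = [1.0 / k] * k
    for _ in range(iters):
        updated = []
        for i in range(k):
            # comparisons between i and j, weighted by the current merits
            denom = sum((wins[i][j] + wins[j][i]) / (merits[i] + merits[j])
                        for j in range(k) if j != i)
            updated.append(total_wins[i] / denom)
        norm = sum(updated)
        merits = [m / norm for m in updated]
    return merits


def win_probability(merits, i, j):
    """Model probability that framework i beats framework j."""
    return merits[i] / (merits[i] + merits[j])


# Hypothetical pairwise win counts for three frameworks over 10 datasets each.
wins = [[0, 8, 9],
        [2, 0, 7],
        [1, 3, 0]]
merits = bradley_terry(wins)
print(merits, win_probability(merits, 0, 1))
```

In the Bayesian version, statements such as "A is better than B" come with a posterior probability, which is what allows the slides to say that no claim can be made between two frameworks.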

- 15. Results: Bayesian Bradley-Terry Tree
  - The BBT results contrast with the previous analysis
    - Silhouette
      - ML2DAC is superior to both Autocluster and AutoML4Clust
      - Autocluster outperforms AutoML4Clust
    - Davies-Bouldin
      - ML2DAC and Autocluster are superior to the other frameworks
      - No claim can be made between ML2DAC and Autocluster
    - Adjusted Rand Index
      - ML2DAC is superior to both Autocluster and AutoML4Clust
  - cSmartML is confirmed as inferior to the other analyzed frameworks

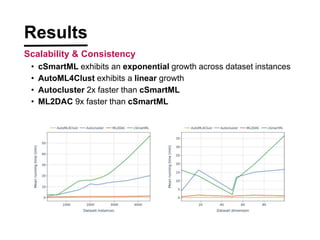

- 16. Results: Scalability & Consistency
  - cSmartML's runtime grows exponentially with the number of dataset instances
  - AutoML4Clust's runtime grows linearly
  - Autocluster is 2x faster than cSmartML
  - ML2DAC is 9x faster than cSmartML

- 17. Results: Scalability & Consistency
  - Autocluster is the most resource-efficient option
  - ML2DAC requires approximately 40.51% more memory
    - with higher clustering quality
  - cSmartML requires approximately 50.4% more memory
    - with lower clustering quality

- 18. Conclusion
  - ML2DAC emerged slightly ahead
    - ML2DAC excelled in multiple tasks but was not consistently the top performer
  - Rapid development within the AutoML community
    - Significant progress in unsupervised learning, especially in clustering
  - Room for enhancement
    - Meta-learning approaches may offer solutions to improve performance
    - Balancing automation with transparency is crucial to keep clustering algorithm principles accessible

- 19. Thank you for your attention!

- 20. References
  - Zöller, M., & Huber, M. F. (2019). Benchmark and Survey of Automated Machine Learning Frameworks. J. Artif. Intell. Res., 70, 409-472.
  - Gijsbers, P., Bueno, M. L., Coors, S., LeDell, E., Poirier, S., Thomas, J., Bischl, B., & Vanschoren, J. (2022). AMLB: an AutoML Benchmark. ArXiv, abs/2207.12560.
  - Luxburg, U. V., Williamson, R. C., & Guyon, I. (2009). Clustering: Science or Art? ICML Unsupervised and Transfer Learning.
  - Wainer, J. (2022). A Bayesian Bradley-Terry model to compare multiple ML algorithms on multiple data sets. ArXiv, abs/2208.04935.

- 21. References
  - Tschechlov, D., Fritz, M., & Schwarz, H. (2021). AutoML4Clust: Efficient AutoML for Clustering Analyses. International Conference on Extending Database Technology.
  - Shawi, R. E., & Sakr, S. (2022). cSmartML-Glassbox: Increasing Transparency and Controllability in Automated Clustering. 2022 IEEE International Conference on Data Mining Workshops (ICDMW), 47-54.
  - Treder-Tschechlov, D., Fritz, M., Schwarz, H., & Mitschang, B. (2023). ML2DAC: Meta-Learning to Democratize AutoML for Clustering Analysis. Proceedings of the ACM on Management of Data, 1, 1-26.
  - Wong, W. Y. (2019). Autocluster: AutoML for clustering models in sklearn.