Cache Memory, designed by Mohd Tariq

- 2. What is cache memory?
  - Cache memory is a small, high-speed RAM buffer located between the CPU and main memory.
  - Cache memory holds a copy of the instructions (instruction cache) or data (operand or data cache) currently being used by the CPU.
  - The main purpose of cache memory is to make the computer faster while keeping its price low.

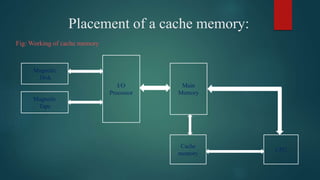

- 3. Placement of cache memory (Fig: Working of cache memory; the figure shows the CPU, cache memory, main memory, I/O processor, magnetic disk, and magnetic tape).

- 4. Hit Ratio:
  - The hit ratio is the total number of hits divided by the total number of CPU accesses to memory (i.e., hits plus misses).
  - $\text{Hit ratio} = \dfrac{\text{Total number of hits}}{\text{Total number of hits} + \text{Total number of misses}}$
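
As a quick check of the formula, the Python sketch below computes the hit ratio from hit and miss counts. The function name `hit_ratio` and the counts used are illustrative assumptions, not measurements.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Return hits / (hits + misses) for a run of CPU memory accesses."""
    total_accesses = hits + misses
    if total_accesses == 0:
        raise ValueError("hit ratio is undefined when there are no accesses")
    return hits / total_accesses


# Illustrative counts: 950 hits out of 1000 accesses.
print(hit_ratio(950, 50))  # 0.95
```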

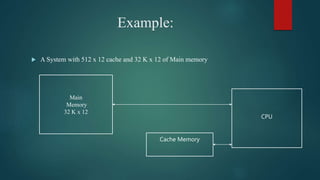

- 5. Example: A system with a 512 x 12 cache and a 32K x 12 main memory (figure: CPU, cache memory, main memory 32K x 12).
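
The sizes in this example determine the address widths. A minimal Python sketch, assuming word-addressable memory and, for the tag/index split, a direct-mapped cache with one word per line; the variable names are hypothetical.

```python
# Sizes from the example: 512 x 12 cache, 32K x 12 main memory (12-bit words).
CACHE_WORDS = 512
MAIN_MEMORY_WORDS = 32 * 1024

# Both sizes are powers of two, so (n - 1).bit_length() equals log2(n).
address_bits = (MAIN_MEMORY_WORDS - 1).bit_length()  # bits needed to address 32K words
index_bits = (CACHE_WORDS - 1).bit_length()           # bits needed to select one of 512 cache words
tag_bits = address_bits - index_bits                  # remaining high-order bits (assumed direct mapping)

print(address_bits, index_bits, tag_bits)  # 15 9 6
```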

- 6. Types of Cache Mapping:
  1. Direct Mapping
  2. Associative Mapping
  3. Set Associative Mapping

- 7. (1) Direct Mapping:
  1. Each location in RAM has exactly one place in the cache where its data can be held.
  2. Think of the cache as an array: part of the address is used as an index into the cache to identify where the data will be held.
  3. Since a block from RAM can occupy only one specific line in the cache, it always replaces the block that was already there, so no replacement algorithm is needed.
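
The direct-mapping rule can be sketched in Python. This is a minimal model under stated assumptions: one word per cache line, 512 lines (matching the example cache), and word addresses; the class name `DirectMappedCache` is hypothetical. The line index is the address modulo the number of lines (its low-order bits), and the tag is the remaining high-order part.

```python
class DirectMappedCache:
    """Direct-mapped cache model; assumes one word per line."""

    def __init__(self, num_lines: int = 512):
        self.num_lines = num_lines
        self.tags = [None] * num_lines  # tag held in each line; None means empty
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss."""
        index = address % self.num_lines  # low-order bits select the only possible line
        tag = address // self.num_lines   # high-order bits identify which block is there
        if self.tags[index] == tag:
            self.hits += 1
            return True
        # Miss: the block has exactly one possible line, so it simply replaces
        # whatever is there; no replacement algorithm is involved.
        self.tags[index] = tag
        self.misses += 1
        return False


cache = DirectMappedCache()
# 0 and 1024 both map to line 0 (1024 % 512 == 0), so they evict each other.
for address in [0, 1, 0, 1024, 0]:
    cache.access(address)
print(cache.hits, cache.misses)  # 1 4
```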

- 8. (2) Associative Mapping:
  1. In associative cache mapping, data from any location in RAM can be stored in any location in the cache.
  2. When the processor requests an address, all tag fields in the cache are checked to determine whether the data is already in the cache.
  3. Each cache line requires its own comparison circuitry to compare the desired address with its tag field.
  4. All tag fields are checked in parallel.
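
A software sketch of associative lookup in Python. Hardware compares all tags in parallel; this model checks them one by one, which gives the same hit/miss result but not the speed. It assumes one word per line (so the tag is the whole address) and, because a replacement algorithm has not been chosen at this point, it assumes FIFO replacement when the cache is full. The class name `FullyAssociativeCache` is hypothetical.

```python
from collections import deque


class FullyAssociativeCache:
    """Fully associative cache model; one word per line, FIFO replacement (assumed)."""

    def __init__(self, num_lines: int):
        self.num_lines = num_lines
        self.lines = deque()  # tags (whole addresses), oldest first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss."""
        # Hardware compares every tag in parallel; `in` scans them sequentially.
        if address in self.lines:
            self.hits += 1
            return True
        self.misses += 1
        if len(self.lines) == self.num_lines:
            self.lines.popleft()  # cache full: evict the oldest line (assumed FIFO)
        self.lines.append(address)  # the block may go into any free line
        return False


cache = FullyAssociativeCache(num_lines=2)
# Unlike a direct-mapped cache, 0 and 1024 can sit in any two lines, so they coexist.
for address in [0, 1024, 0, 1024]:
    cache.access(address)
print(cache.hits, cache.misses)  # 2 2
```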

- 9. (3) Set Associative Mapping:
  1. Set associative mapping is a mixture of direct and associative mapping.
  2. The cache lines are grouped into sets.
  3. The number of lines in a set can vary from 2 to 16.
  4. A portion of the address is used to specify which set will hold the data for that address.
  5. The data can be stored in any of the lines in that set.
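
A Python sketch of a set-associative lookup, assuming one word per line, 256 sets of 2 lines each (a hypothetical 2-way organization with the same 512 lines as the example cache), and LRU replacement within a set. The class name `SetAssociativeCache` is hypothetical. The set index comes from the low-order address bits, as in direct mapping, and the block may then go into any line of that set, as in associative mapping.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Set-associative cache model; one word per line, LRU within each set (assumed)."""

    def __init__(self, num_sets: int, ways: int):
        self.num_sets = num_sets
        self.ways = ways  # lines per set
        self.sets = [OrderedDict() for _ in range(num_sets)]  # tag -> True, LRU first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss."""
        set_index = address % self.num_sets  # part of the address picks the set
        tag = address // self.num_sets
        lines = self.sets[set_index]
        if tag in lines:
            lines.move_to_end(tag)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(lines) == self.ways:
            lines.popitem(last=False)  # set full: evict its least recently used line
        lines[tag] = True
        return False


cache = SetAssociativeCache(num_sets=256, ways=2)
# 0 and 512 fall in the same set (set 0) but can share it because it has two lines.
for address in [0, 512, 0, 512]:
    cache.access(address)
print(cache.hits, cache.misses)  # 2 2
```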

- 10. Replacement policy:
  - When a cache miss occurs, the requested data is copied into some location in the cache.
  - With set associative or fully associative mapping, the system must decide where to put the data and which existing values will be replaced.
  - Cache performance depends heavily on choosing, for replacement, data that is unlikely to be referenced again.

- 11. Replacement Algorithms of Cache Memory: Replacement algorithms are used when there is no free space in the cache in which to place new data. Four of the most common cache replacement algorithms are:
  - a) Least Recently Used (LRU): selects for replacement the item that has been least recently used by the CPU.
  - b) First-In-First-Out (FIFO): selects for replacement the item that has been in the cache for the longest time.
  - c) Least Frequently Used (LFU): selects for replacement the item that has been least frequently used by the CPU.
  - d) Random: selects an item for replacement at random.
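
To compare the four algorithms, the Python sketch below counts misses for a small fully associative cache on one short, made-up reference trace. The function `simulate`, the trace, and two details the text leaves open are assumptions: LFU breaks ties by evicting the least recently used candidate, and a block's use count restarts when it is reloaded.

```python
import random
from collections import Counter


def simulate(trace, capacity, policy, seed=0):
    """Count misses for a fully associative cache of `capacity` blocks under `policy`."""
    rng = random.Random(seed)
    cache = []              # blocks currently resident
    loaded_at = {}          # block -> time it was brought in (FIFO)
    last_used = {}          # block -> time of its most recent access (LRU)
    use_count = Counter()   # block -> accesses since it was loaded (LFU)
    misses = 0
    for t, block in enumerate(trace):
        if block in cache:  # hit: update usage information only
            last_used[block] = t
            use_count[block] += 1
            continue
        misses += 1
        if len(cache) == capacity:  # no free space: pick a victim
            if policy == "LRU":
                victim = min(cache, key=lambda b: last_used[b])
            elif policy == "FIFO":
                victim = min(cache, key=lambda b: loaded_at[b])
            elif policy == "LFU":  # ties broken by least recent use (assumption)
                victim = min(cache, key=lambda b: (use_count[b], last_used[b]))
            elif policy == "RANDOM":
                victim = rng.choice(cache)
            else:
                raise ValueError(f"unknown policy: {policy}")
            cache.remove(victim)
            del use_count[victim]
        cache.append(block)
        loaded_at[block] = t
        last_used[block] = t
        use_count[block] = 1
    return misses


trace = [1, 2, 3, 1, 4, 1, 2]
print(simulate(trace, 3, "LRU"))     # 5
print(simulate(trace, 3, "FIFO"))    # 6
print(simulate(trace, 3, "LFU"))     # 5
print(simulate(trace, 3, "RANDOM"))  # depends on the random choices
```

On this trace FIFO evicts block 1 when block 4 arrives, even though block 1 was just used, which is why it misses once more than LRU.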

- 12. Writing into Cache:
  - When memory write operations are performed, the CPU first writes into the cache memory. The modifications the CPU makes to cached data during write operations must also be written to main memory (or to auxiliary memory), either immediately or later.
  - The two popular cache write policies (schemes) are:
    - Write-Through
    - Write-Back

- 13. Write-Through:
  1. In a write-through cache, main memory is updated each time the CPU writes into the cache.
  2. The advantage of the write-through cache is that main memory always contains the same data as the cache.
  3. This characteristic is desirable in a system that uses direct memory access (DMA) for data transfer: I/O devices communicating through DMA receive the most recent data.
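
A minimal Python sketch of the write-through policy, modelling main memory as a dictionary from address to word. The class `WriteThroughCache` and its `memory_writes` counter are hypothetical; the model also assumes a written word is placed in the cache (write-allocate) and ignores cache capacity, since under write-through an evicted line never needs to be copied back.

```python
class WriteThroughCache:
    """Write-through model: every CPU write updates the cache and main memory."""

    def __init__(self, memory: dict):
        self.memory = memory  # main memory: address -> word
        self.cache = {}       # cached copies: address -> word (capacity ignored)
        self.memory_writes = 0

    def read(self, address: int) -> int:
        if address not in self.cache:  # miss: fetch the word from main memory
            self.cache[address] = self.memory.get(address, 0)
        return self.cache[address]

    def write(self, address: int, value: int) -> None:
        self.cache[address] = value
        self.memory[address] = value  # main memory is updated on every write
        self.memory_writes += 1


main_memory = {}
cache = WriteThroughCache(main_memory)
for value in range(5):
    cache.write(100, value)
print(main_memory[100], cache.memory_writes)  # 4 5
```

Main memory always matches the cache, which is what a DMA device reading main memory needs, at the cost of one memory write per CPU write.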

- 14. Write-Back:
  1. In a write-back scheme, only the cache memory is updated during a write operation.
  2. The updated locations in the cache are marked by a flag so that later, when the word is removed from the cache, it is copied into main memory.
  3. Words are removed from the cache from time to time to make room for a new block of words.
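
The write-back policy can be sketched the same way in Python, with the flag modelled as a dirty bit on each line. Assumptions: a small fully associative cache with LRU replacement, one word per line, and main memory as a dictionary; the class `WriteBackCache` and the capacity of 2 are hypothetical. Main memory is written only when a dirty line is evicted.

```python
from collections import OrderedDict


class WriteBackCache:
    """Write-back model: writes stay in the cache until a dirty line is evicted."""

    def __init__(self, memory: dict, capacity: int):
        self.memory = memory         # main memory: address -> word
        self.capacity = capacity
        self.lines = OrderedDict()   # address -> [value, dirty], LRU first
        self.memory_writes = 0

    def _load(self, address: int) -> None:
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            return
        if len(self.lines) == self.capacity:
            victim, (value, dirty) = self.lines.popitem(last=False)  # evict LRU line
            if dirty:  # flag set: copy the modified word back to main memory
                self.memory[victim] = value
                self.memory_writes += 1
        self.lines[address] = [self.memory.get(address, 0), False]

    def read(self, address: int) -> int:
        self._load(address)
        return self.lines[address][0]

    def write(self, address: int, value: int) -> None:
        self._load(address)
        self.lines[address] = [value, True]  # only the cache is updated; mark dirty


main_memory = {}
cache = WriteBackCache(main_memory, capacity=2)
for value in range(5):
    cache.write(100, value)  # five CPU writes, all absorbed by the cache
print(100 in main_memory, cache.memory_writes)  # False 0
cache.read(200)
cache.read(300)  # cache full: the dirty line for address 100 is evicted
print(main_memory[100], cache.memory_writes)    # 4 1
```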