Ceph Day Beijing - Ceph RDMA Update

- 1. CEPH RDMA UPDATE XSKY Haomai Wang 2017.06.06

- 3. Ceph Network Evolvement
  - The History of Messenger
    - SimpleMessenger
    - XioMessenger
    - AsyncMessenger

- 4. Ceph Network Evolvement
  - AsyncMessenger
    - Core library included by all components
    - Kernel TCP/IP driver
    - Epoll/Kqueue driven
    - Maintains connection lifecycle and session
    - Replaces the aging SimpleMessenger
    - Fixed-size thread pool (vs. 2 threads per socket)
    - Scales better to larger clusters
    - Healthier relationship with tcmalloc
    - Now the default!
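
The AsyncMessenger threading model described on this slide (a fixed-size pool of event-loop threads instead of two threads per socket) can be sketched roughly as follows. This is only an illustration in Python, not Ceph's C++ implementation: `Worker`, `WorkerPool` and `demo` are hypothetical names, and the echo handler stands in for real message dispatch. Python's `selectors.DefaultSelector` picks epoll or kqueue where available.

```python
import selectors
import socket
import threading


class Worker(threading.Thread):
    """One event-loop thread that multiplexes many sockets (hypothetical name)."""

    def __init__(self):
        super().__init__(daemon=True)
        self.sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS
        # Socket pair used only to wake the loop when stop() is called.
        self._wake_r, self._wake_w = socket.socketpair()
        self.sel.register(self._wake_r, selectors.EVENT_READ, None)
        self.handled = 0

    def add(self, conn):
        conn.setblocking(False)
        self.sel.register(conn, selectors.EVENT_READ, self._echo)

    def _echo(self, conn):
        data = conn.recv(4096)
        if data:
            conn.send(data)  # sketch only: ignores partial writes
            self.handled += 1
        else:
            self.sel.unregister(conn)
            conn.close()

    def run(self):
        while True:
            for key, _ in self.sel.select():
                if key.data is None:  # wake-up socket: shut down
                    return
                key.data(key.fileobj)

    def stop(self):
        self._wake_w.send(b"x")
        self.join()


class WorkerPool:
    """Fixed number of workers; connections are assigned round-robin."""

    def __init__(self, size):
        self.workers = [Worker() for _ in range(size)]
        self._next = 0

    def add(self, conn):
        worker = self.workers[self._next % len(self.workers)]
        self._next += 1
        worker.add(conn)

    def start(self):
        for worker in self.workers:
            worker.start()

    def stop(self):
        for worker in self.workers:
            worker.stop()


def demo(num_connections=8, pool_size=2):
    pool = WorkerPool(pool_size)
    clients = []
    for _ in range(num_connections):
        client, server = socket.socketpair()
        pool.add(server)  # registered before the loops start, to keep the sketch simple
        clients.append(client)
    pool.start()
    replies = []
    for i, client in enumerate(clients):
        client.sendall(f"ping {i}".encode())
        replies.append(client.recv(4096))
    pool.stop()
    for client in clients:
        client.close()
    return replies, [w.handled for w in pool.workers]
```

Calling `demo(8, 2)` serves eight connections with two threads, whereas a two-threads-per-socket design would need sixteen. The thread count stays constant as connections grow, which is the scaling property the slide points to.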

- 5. Ceph Network Evolvement
  - Performance bottlenecks:
    - Non-local processing of connections
      - RX in interrupt context
      - Application and system call in another
    - Global TCP control block management
    - VFS overhead
    - TCP protocol optimized for:
      - Throughput, not latency
      - Long-haul networks (high latency)
      - Congestion throughout
      - Modest connections/server

- 6. Ceph Network Evolvement
  - Hardware assistance
    - SolarFlare (TCP offload)
    - RDMA (InfiniBand/RoCE)
    - GAMMA (Genoa Active Message Machine)
  - Data plane
    - DPDK + userspace TCP/IP stack
  - Linux kernel improvement
  - TCP or non-TCP
  - Pros:
    - Compatible
    - Proven
  - Cons:
    - Complexity
  - Notes:
    - Aim for lower latency and better scalability, but no need to go to extremes

- 7. Ceph Network Evolvement
  - Built for high performance
    - DPDK
    - SPDK
    - Full userspace I/O path
    - Shared-nothing TCP/IP stack (Seastar as reference)

- 8. Ceph Network Evolvement
  - Problems
    - OSD design
      - Each OSD owns one disk
      - Pipeline model
      - Too much locking/waiting in legacy code
    - DPDK + SPDK
      - Must run on NVMe SSDs
      - CPU spinning
      - Limited use cases

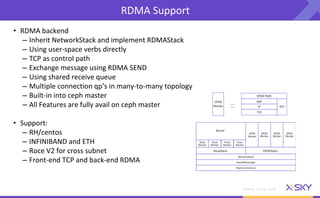

- 10. RDMA Support
  - RDMA backend
    - Inherits NetworkStack and implements RDMAStack
    - Uses user-space verbs directly
    - TCP as control path
    - Exchanges messages using RDMA SEND
    - Uses a shared receive queue
    - Multiple connection QPs in a many-to-many topology
    - Built into Ceph master
    - All features are fully available on Ceph master
  - Support:
    - RH/CentOS
    - InfiniBand and Ethernet
    - RoCE v2 for cross-subnet
    - Front-end TCP and back-end RDMA
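
The "front-end TCP and back-end RDMA" split can be expressed in `ceph.conf` by choosing a different messenger type per network. The sketch below renders such a `[global]` section with Python's `configparser`. The option names (`ms_public_type`, `ms_cluster_type`, `ms_async_rdma_device_name`) are recalled from Ceph's messenger settings rather than taken from the slides and should be checked against the release in use. The device name `mlx5_0` and the helper `render_ceph_conf` are purely illustrative.

```python
import configparser
import io


def render_ceph_conf(rdma_device):
    """Render a [global] section: TCP for clients, RDMA between OSDs.

    Assumption: option names as used by Ceph's async messenger;
    verify them against the documentation for your Ceph release.
    """
    conf = configparser.ConfigParser()
    conf["global"] = {
        # Public (front-end) network stays on kernel TCP.
        "ms_public_type": "async+posix",
        # Cluster (back-end) network uses the RDMA stack.
        "ms_cluster_type": "async+rdma",
        # RDMA device to bind to (hypothetical example value).
        "ms_async_rdma_device_name": rdma_device,
    }
    out = io.StringIO()
    conf.write(out)
    return out.getvalue()


if __name__ == "__main__":
    print(render_ceph_conf("mlx5_0"))
```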

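- 11. RDMA Support

  | Network engine | Default engine | Hardware requirement | Performance | Compatibility | OSD storage engine requirement | OSD storage media requirement |
  | --- | --- | --- | --- | --- | --- | --- |
  | Posix (Kernel) | Yes | None | Medium | Compatible with any TCP/IP network | None | None |
  | DPDK + Userspace TCP/IP | No | DPDK-capable NIC | High | Compatible with any TCP/IP network | BlueStore | Must use NVMe SSD |
  | RDMA | No | RDMA-capable NIC | High | RDMA-capable network | None | None |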

- 12. RDMA Support
  - RDMA-VERBS
    - Native RDMA support
    - Exchange connection via TCP/IP
  - RDMA-CM:
    - Provides a simpler abstraction over verbs
    - Required by iWARP
    - Functionality is carried forward

- 13. RDMA Support
  - Usages
    - QEMU/KVM
    - NBD
    - FUSE
    - S3/Swift object storage
    - All Ceph ecosystem

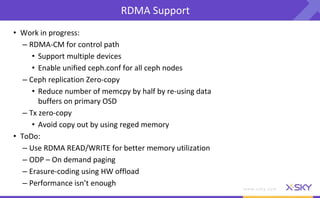

- 14. RDMA Support
  - Work in progress:
    - RDMA-CM for control path
      - Support multiple devices
      - Enable unified ceph.conf for all Ceph nodes
    - Ceph replication zero-copy
      - Reduce the number of memcpy calls by half by re-using data buffers on the primary OSD
    - Tx zero-copy
      - Avoid copy-out by using registered memory
  - ToDo:
    - Use RDMA READ/WRITE for better memory utilization
    - ODP (on-demand paging)
    - Erasure coding using HW offload
    - Performance isn't enough
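
The replication zero-copy idea (the primary OSD re-uses the buffer it received data into when sending to replicas, instead of copying it first) can be illustrated with a small analogy in Python. This is not real RDMA memory registration: `RegisteredPool` and `forward` are hypothetical names, a preallocated `bytearray` merely stands in for a registered memory region, and the kernel socket layer still copies underneath. The point is only that the same chunk is filled once and then handed to every replica by reference.

```python
import socket


class RegisteredPool:
    """Fixed-size chunks carved out of one preallocated buffer.

    The backing bytearray stands in for a memory region that would be
    registered with the NIC once, up front.
    """

    def __init__(self, chunk_size, chunks):
        self._backing = bytearray(chunk_size * chunks)
        view = memoryview(self._backing)
        self._free = [
            view[i * chunk_size:(i + 1) * chunk_size] for i in range(chunks)
        ]

    def acquire(self):
        return self._free.pop()

    def release(self, chunk):
        self._free.append(chunk)

    def available(self):
        return len(self._free)


def forward(src, replicas, pool):
    """Receive one message into a pooled chunk and send it to all replicas."""
    chunk = pool.acquire()
    try:
        n = src.recv_into(chunk)  # data lands directly in the pooled chunk
        for replica in replicas:
            replica.sendall(chunk[:n])  # slicing a memoryview does not copy
        return n
    finally:
        pool.release(chunk)
```

A quick check with local socket pairs: write `b"object-data"` into one end of a pair, call `forward` on the other end with two replica sockets, and both replicas receive the same bytes while the pool ends up with all chunks free again.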

- 16. Thank you