Chapter7 clustering types concepts algorithms.pdf


What clustering is, the different types of clustering technologies, and their algorithms, with the differences between them


Recommended

05 Clustering in Data Mining

Valerii Klymchuk

Classification of common clustering algorithms and techniques, e.g., hierarchical clustering, distance measures, K-means, squared error, SOFM, and clustering large databases.

CSA 3702 machine learning module 3

Nandhini S

This document provides an overview of clustering and k-means clustering algorithms. It begins by defining clustering as the process of grouping similar objects together and dissimilar objects separately. K-means clustering is introduced as an algorithm that partitions data points into k clusters by minimizing total intra-cluster variance, iteratively updating cluster means. The k-means algorithm and an example are described in detail. Weaknesses and applications are discussed. Finally, vector quantization and principal component analysis are briefly introduced.

Advanced database and data mining & clustering concepts

NithyananthSengottai

This document discusses various clustering methods used in data mining. It begins with an overview of clustering and its applications. It then describes five major categories of clustering methods: partitioning methods like k-means and k-medoids, hierarchical methods like agglomerative nesting and divisive analysis, density-based methods, grid-based methods, and model-based clustering methods. For each category, popular algorithms are provided as examples. The document also covers types of data for clustering and evaluating clustering results.

machine learning - Clustering in R

Sudhakar Chavan

The document provides an overview of clustering methods and algorithms. It defines clustering as the process of grouping objects that are similar to each other and dissimilar to objects in other groups. It discusses existing clustering methods like K-means, hierarchical clustering, and density-based clustering. For each method, it outlines the basic steps and provides an example application of K-means clustering to demonstrate how the algorithm works. The document also discusses evaluating clustering results and different measures used to assess cluster validity.

26-Clustering MTech-2017.ppt

vikassingh569137

The document discusses the concept of clustering, which is an unsupervised machine learning technique used to group unlabeled data points that are similar. It describes how clustering algorithms aim to identify natural groups within data based on some measure of similarity, without any labels provided. The key types of clustering are partition-based (like k-means), hierarchical, density-based, and model-based. Applications include marketing, earth science, insurance, and more. Quality measures for clustering include intra-cluster similarity and inter-cluster dissimilarity.

UNIT_V_Cluster Analysis.pptx

sandeepsandy494692

Cluster analysis, or clustering, is the process of grouping data objects into subsets called clusters so that objects within a cluster are similar to each other but dissimilar to objects in other clusters. There are several approaches to clustering, including partitioning, hierarchical, density-based, and grid-based methods. The k-means and k-medoids algorithms are popular partitioning methods that aim to partition observations into k clusters by minimizing distances between observations and cluster centroids or medoids. K-medoids is more robust to outliers as it uses actual observations as cluster representatives rather than centroids. Both methods require specifying the number of clusters k in advance.
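The robustness claim above can be illustrated with a small sketch. It is not taken from the summarized slides: the helper names `centroid` and `medoid` and the sample points are assumptions made here, and Euclidean distance is assumed as the dissimilarity measure.

```python
from math import dist


def centroid(points):
    """Coordinate-wise mean; it need not coincide with any observation."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def medoid(points):
    """The observation with the smallest total distance to all the others."""
    return min(points, key=lambda p: sum(dist(p, q) for q in points))


# Hypothetical cluster: four nearby observations plus one far outlier.
cluster = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (2.0, 2.0), (50.0, 50.0)]

print("centroid:", centroid(cluster))  # dragged toward the outlier
print("medoid:  ", medoid(cluster))    # stays on one of the nearby observations
```

Because the medoid must be one of the observations, a single extreme value cannot pull the cluster representative away from the bulk of the data the way it pulls the mean.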

Unsupervised Learning in Machine Learning

Pyingkodi Maran

The document discusses various unsupervised learning techniques including clustering algorithms like k-means, k-medoids, hierarchical clustering and density-based clustering. It explains how k-means clustering works by selecting initial random centroids and iteratively reassigning data points to the closest centroid. The elbow method is described as a way to determine the optimal number of clusters k. The document also discusses how k-medoids clustering is more robust to outliers than k-means because it uses actual data points as cluster representatives rather than centroids.

Clustering

Md. Hasnat Shoheb

K-means clustering is an algorithm used to classify objects into k groups, or clusters. It works by minimizing the sum of squared distances between data points and their assigned cluster centroids. The basic steps are to initialize k cluster centroids, assign each data point to the nearest centroid, recompute the centroids based on the new assignments, and repeat until the centroids no longer change. Some examples of its applications include machine learning, data mining, speech recognition, image segmentation, and color quantization. However, it is sensitive to initialization and may get stuck in local optima.
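The steps listed above (initialize, assign, recompute, repeat) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation from the summarized slides: the function name `k_means`, the `max_iter` cap, the `seed` parameter, and the choice to initialize from k randomly sampled observations are all introduced here.

```python
import random
from math import dist


def k_means(points, k, max_iter=100, seed=0):
    """Lloyd-style k-means on a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    # Initialization (assumed strategy): pick k observations as starting centroids.
    centroids = rng.sample(points, k)
    clusters = [[] for _ in range(k)]
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: each centroid becomes the mean of its members.
        # An empty cluster keeps its previous centroid.
        new_centroids = [
            tuple(sum(c) / len(members) for c in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:  # centroids stopped changing
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical data: two well-separated groups of four points each.
data = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centers, groups = k_means(data, k=2)
print(sorted(centers))
```

On data that is less cleanly separated, different seeds can yield different results, which is the sensitivity to initialization noted above; running several seeds and keeping the result with the lowest sum of squared distances is a common mitigation.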

k-mean-clustering.pdf

YatharthKhichar1

This is a presentation on K-means clustering, a topic taught in the machine learning course at many engineering colleges worldwide.

CLUSTER ANALYSIS ALGORITHMS.pptx

ShwetapadmaBabu1

The method of identifying similar groups of data in a data set is called clustering. Entities in each group are comparatively more similar to entities of that group than those of the other groups.

Hierachical clustering

Tilani Gunawardena PhD(UNIBAS), BSc(Pera), FHEA(UK), CEng, MIESL

K-means clustering is an unsupervised machine learning algorithm that groups unlabeled data points into a specified number of clusters (k) based on their similarity. It works by randomly assigning data points to k clusters and then iteratively updating cluster centroids and reassigning points until cluster membership stabilizes. K-means clustering aims to minimize intra-cluster variation while maximizing inter-cluster variation. There are various applications and variants of the basic k-means algorithm.

Unsupervised learning Algorithms and Assumptions

refedey275

Topics:

Introduction to unsupervised learning

Unsupervised learning Algorithms and Assumptions

K-Means algorithm – introduction

Implementation of K-means algorithm

Hierarchical Clustering – need and importance of hierarchical clustering

Agglomerative Hierarchical Clustering

Working of dendrogram

Steps for implementation of AHC using Python

Gaussian Mixture Models – Introduction, importance and need of the model

Normal, Gaussian distribution

Implementation of Gaussian mixture model

Understand the different distance metrics used in clustering

Euclidean, Manhattan, Cosine, Mahalanobis

Features of a Cluster – Labels, Centroids, Inertia, Eigenvectors and Eigenvalues

Principal component analysis

Supervised learning (classification)

Supervision: The training data (observations, measurements, etc.) are accompanied by labels indicating the class of the observations

New data is classified based on the training set

Unsupervised learning (clustering)

The class labels of the training data are unknown

Given a set of measurements, observations, etc., the aim is to establish the existence of classes or clusters in the data

Types of Hierarchical Clustering

There are mainly two types of hierarchical clustering:

Agglomerative hierarchical clustering

Divisive hierarchical clustering

A distribution in statistics is a function that shows the possible values for a variable and how often they occur.

In probability theory and statistics, the Normal Distribution, also called the Gaussian Distribution, is the most significant continuous probability distribution.

Sometimes it is also called a bell curve.
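For reference, the bell curve is the graph of the normal density. The symbols $\mu$ (mean) and $\sigma^2$ (variance) are standard notation assumed here; the summarized slides may use different symbols.

$$
f(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)
$$

The density peaks at $x = \mu$ and is symmetric about it, which gives the curve its bell shape.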

K means Clustering - algorithm to cluster n objects

VoidVampire

The k-means algorithm is an algorithm to cluster n objects based on attributes into k partitions, where k < n.

It is similar to the expectation-maximization algorithm for mixtures of Gaussians in that they both attempt to find the centers of natural clusters in the data.

It assumes that the object attributes form a vector space.

clustering in DataMining and differences in models/ clustering in data mining

RevathiSundar4

Clustering and Partitioning methods

CLUSTERING IN DATA MINING.pdf

SowmyaJyothi3

Clustering is an unsupervised machine learning technique used to group unlabeled data points. There are two main approaches: hierarchical clustering and partitioning clustering. Partitioning clustering algorithms like k-means and k-medoids attempt to partition data into k clusters by optimizing a criterion function. Hierarchical clustering creates nested clusters by merging or splitting clusters. Examples of hierarchical algorithms include agglomerative clustering, which builds clusters from bottom-up, and divisive clustering, which separates clusters from top-down. Clustering can group both numerical and categorical data.

clustering using different methods in .pdf

officialnovice7

Clustering using different methods in machine learning

clustering ppt.pptx

chmeghana1

Clustering is an unsupervised machine learning technique that groups similar objects together. There are several clustering techniques including partitioning methods like k-means, hierarchical methods like agglomerative and divisive clustering, density-based methods, graph-based methods, and model-based clustering. Clustering is used in applications such as pattern recognition, image processing, bioinformatics, and more to split data into meaningful subgroups.

DS9 - Clustering.pptx

JK970901

This document provides an overview of clustering analysis techniques, including k-means clustering, DBSCAN clustering, and self-organizing maps (SOM). It defines clustering as the process of grouping similar data points together. K-means clustering partitions data into k clusters by finding cluster centroids. DBSCAN identifies clusters based on density rather than specifying the number of clusters. SOM projects high-dimensional data onto a low-dimensional grid using a neural network approach.

Types of clustering and different types of clustering algorithms

Prashanth Guntal

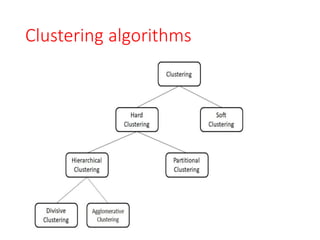

The document discusses different types of clustering algorithms:

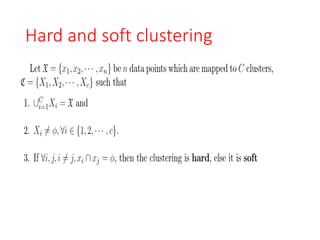

1. Hard clustering assigns each data point to one cluster, while soft clustering allows points to belong to multiple clusters.

2. Hierarchical clustering builds clusters hierarchically in a top-down or bottom-up approach, while flat clustering does not have a hierarchy.

3. Model-based clustering models data using statistical distributions to find the best fitting model.

It then provides examples of specific clustering algorithms like K-Means, Fuzzy K-Means, Streaming K-Means, Spectral clustering, and Dirichlet clustering.

3b318431-df9f-4a2c-9909-61ecb6af8444.pptx

NANDHINIS900805

Hierarchical clustering methods build groups of objects in a recursive manner through either an agglomerative or divisive approach. Agglomerative clustering starts with each object in its own cluster and merges the closest pairs of clusters until only one cluster remains. Divisive clustering starts with all objects in one cluster and splits clusters until each object is in its own cluster. DBSCAN is a density-based clustering method that identifies core, border, and noise points based on a density threshold. OPTICS improves upon DBSCAN by producing a cluster ordering that contains information about intrinsic clustering structures across different parameter settings.
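The agglomerative procedure described above can be sketched as follows. This is a naive illustration, not code from the summarized slides: the names `agglomerative` and `single_linkage`, the `n_clusters` stopping parameter, and the use of single linkage with Euclidean distance are assumptions made here.

```python
from itertools import combinations
from math import dist


def single_linkage(a, b):
    """Cluster distance = distance between the closest pair of members."""
    return min(dist(p, q) for p in a for q in b)


def agglomerative(points, n_clusters=1, linkage=single_linkage):
    """Start with singletons and merge the closest pair until n_clusters remain."""
    clusters = [[p] for p in points]
    while len(clusters) > n_clusters:
        # Find the pair of clusters (i < j) with the smallest linkage distance.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is unaffected by the deletion
    return clusters


# Hypothetical data: two tight pairs and one isolated point.
sample = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
print(agglomerative(sample, n_clusters=3))
```

With `n_clusters=1` the loop runs until a single cluster remains, matching the description; stopping earlier corresponds to cutting the hierarchy at a chosen level. Each pass recomputes every pairwise linkage, so the sketch favors clarity over efficiency.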

UNIT - 4: Data Warehousing and Data Mining

Nandakumar P

UNIT-IV

Cluster Analysis: Types of Data in Cluster Analysis – A Categorization of Major Clustering Methods – Partitioning Methods – Hierarchical Methods – Density-Based Methods – Grid-Based Methods – Model-Based Clustering Methods – Clustering High-Dimensional Data – Constraint-Based Cluster Analysis – Outlier Analysis.

Clustering in Data Mining

Archana Swaminathan

Clustering is an unsupervised learning technique used to group unlabeled data points together based on similarities. It aims to maximize similarity within clusters and minimize similarity between clusters. There are several clustering methods including partitioning, hierarchical, density-based, grid-based, and model-based. Clustering has many applications such as pattern recognition, image processing, market research, and bioinformatics. It is useful for extracting hidden patterns from large, complex datasets.

Unsupervised learning (clustering)

Pravinkumar Landge

This document discusses various unsupervised machine learning clustering algorithms. It begins with an introduction to unsupervised learning and clustering. It then explains k-means clustering, hierarchical clustering, and DBSCAN clustering. For k-means and hierarchical clustering, it covers how they work, their advantages and disadvantages, and compares the two. For DBSCAN, it defines what it is and how it identifies core points, border points, and outliers to form clusters based on density.
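The core, border, and outlier distinction can be made concrete with a short sketch. It covers only the point-labelling step of DBSCAN, not the full cluster expansion, and it is not taken from the summarized slides: the function name `classify_points` and the parameter names `eps` and `min_pts` are assumptions made here, a point is assumed to count as its own neighbour, and points are assumed to be unique tuples.

```python
from math import dist


def classify_points(points, eps, min_pts):
    """Label each point as 'core', 'border', or 'noise' in the DBSCAN sense."""
    # Neighbourhood of p: every point within eps of p, including p itself.
    neighbours = {p: [q for q in points if dist(p, q) <= eps] for p in points}
    # A core point has at least min_pts points in its neighbourhood.
    core = {p for p, nbrs in neighbours.items() if len(nbrs) >= min_pts}
    labels = {}
    for p in points:
        if p in core:
            labels[p] = "core"
        elif any(q in core for q in neighbours[p]):
            labels[p] = "border"  # not dense itself, but within reach of a core point
        else:
            labels[p] = "noise"   # an outlier: no core point nearby
    return labels


# Hypothetical data: a dense square, one point on its edge, one far away.
pts = [(0, 0), (0, 1), (1, 0), (1, 1), (2, 1), (9, 9)]
for point, label in classify_points(pts, eps=1.0, min_pts=3).items():
    print(point, label)
```

Clusters are then formed by connecting core points that lie within `eps` of each other and attaching border points to them, which is why the number of clusters does not have to be chosen in advance.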

Mean shift and Hierarchical clustering

Yan Xu

Mean shift clustering finds clusters by locating peaks in the probability density function of the data. It iteratively moves data points to the mean of nearby points until convergence. Hierarchical clustering builds clusters gradually by either merging or splitting clusters at each step. There are two types: divisive, which splits clusters, and agglomerative, which merges clusters. Agglomerative clustering starts with each point as a cluster and iteratively merges the closest pair of clusters, based on a chosen linkage method like complete or average linkage, until all are merged. The choice of distance metric and linkage method impacts the resulting clusters.

Machine Learning : Clustering - Cluster analysis.pptx

tecaviw979

Cluster analysis

Cluster analysis or simply clustering is the process of partitioning a set of data objects (or observations) into subsets.

Each subset is a cluster, such that objects in a cluster are similar to one another, yet dissimilar to objects in other clusters.

PPT s10-machine vision-s2

Binus Online Learning

This document discusses unsupervised machine learning techniques for clustering unlabeled data. It covers k-means clustering, which partitions data into k groups based on minimizing distance between points and cluster centroids. It also discusses agglomerative hierarchical clustering, which successively merges clusters based on their distance. As an example, it shows hierarchical clustering of texture images from five classes to group similar textures.

Chapter8 LINEAR DESCRIMINANT FOR MACHINE LEARNING.pdf

PRABHUCECC

Linear discriminant for machine learning concepts

Chapter1MACHINE LEARNING THEORY AND PRACTICES.pdf

PRABHUCECC

Machine learning: definition, types, concepts, and different algorithms

More from PRABHUCECC

Chapter5 ML BASED FREQUENT ITEM SETS.pdf

PRABHUCECC

Machine learning based frequent item sets: definition, types, and concepts

Chapter2 NEAREST NEIGHBOURHOOD ALGORITHMS.pdf

PRABHUCECC

Machine learning nearest neighbourhood algorithm: mathematical formulation and implementation of the algorithm

Data ware house and miningUNIT-1 DATA MINING CONCEPT.ppt

PRABHUCECC

A presentation on data warehousing, as per JNTUK


20250402 Team Science in the AI Era

These slides: TBD

Jim Twin V1 (English video - Heygen) - https://youtu.be/T4S0uZp1SHw

Jim Twin V1 (French video - Heygen) - https://youtu.be/02hCGRJnCoc

Jim Twin (Chat) Tmpt.me Platform ŌĆō https://tmpt.app/@jimtwin

Jim Twin (English video ŌĆō OpenSource) ŌĆō https://youtu.be/mwnZjTNegXE

Jim Blog Post - https://service-science.info/archives/6612

Jim EIT Article (Real Jim) - https://www.eitdigital.eu/newsroom/grow-digital-insights/personal-ai-digital-twins-the-future-of-human-interaction/

Jim EIT Talk (Real Jim) - https://youtu.be/_1X6bRfOqc4

Reid Hoffman (English video) - https://youtu.be/rgD2gmwCS10All India Council of Skills and Vocational Studies (AICSVS) PROSPECTUS 2025

All India Council of Skills and Vocational Studies (AICSVS) PROSPECTUS 2025National Council of Open Schooling Research and Training

╠²

Chapter7 clustering types concepts algorithms.pdf

- 1. CLUSTERING CHAPTER 7: MACHINE LEARNING – THEORY & PRACTICE

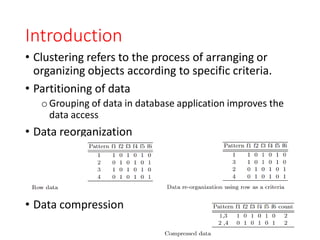

- 2. Introduction
  - Clustering refers to the process of arranging or organizing objects according to specific criteria.
  - Partitioning of data
    - Grouping data in database applications improves data access.
  - Data reorganization
  - Data compression

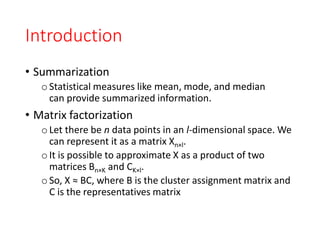

- 3. Introduction
  - Summarization
    - Statistical measures such as the mean, mode, and median can provide summarized information.
  - Matrix factorization
    - Let there be $n$ data points in an $l$-dimensional space. We can represent them as a matrix $X_{n \times l}$.
    - It is possible to approximate $X$ as a product of two matrices $B_{n \times K}$ and $C_{K \times l}$.
    - So $X \approx BC$, where $B$ is the cluster assignment matrix and $C$ is the representatives matrix.
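The factorization view can be made concrete with a toy hard clustering. The sketch below is only an illustration: the data, the label vector, and the helper `matmul` are hypothetical, and the centroid is assumed as the representative stored in each row of C.

```python
# Hard clustering viewed as the factorization X ≈ B C.
# X: n x l data, B: n x K one-hot assignments, C: K x l representatives.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

# Toy data (hypothetical): n = 4 points in l = 2 dimensions.
X = [[1.0, 1.0], [1.0, 3.0], [8.0, 8.0], [10.0, 8.0]]
labels = [0, 0, 1, 1]   # assumed hard assignment to K = 2 clusters
K = 2

# B has exactly one 1 per row: the cluster that the point belongs to.
B = [[1.0 if labels[i] == k else 0.0 for k in range(K)]
     for i in range(len(X))]

# C holds one representative per cluster; here the centroid (sample mean).
C = []
for k in range(K):
    members = [x for x, lab in zip(X, labels) if lab == k]
    C.append([sum(col) / len(members) for col in zip(*members)])

# Each row of X is approximated by the representative of its cluster.
X_approx = matmul(B, C)
```

With a soft clustering, the rows of B would hold fractional memberships instead of a single 1.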

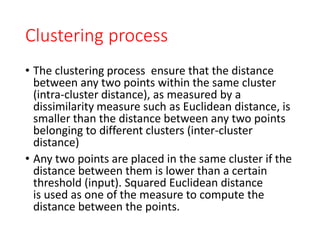

- 4. Clustering process
  - The clustering process aims to ensure that the distance between any two points within the same cluster (intra-cluster distance), as measured by a dissimilarity measure such as Euclidean distance, is smaller than the distance between any two points belonging to different clusters (inter-cluster distance).
  - Any two points are placed in the same cluster if the distance between them is lower than a certain threshold, which is supplied as input. Squared Euclidean distance is one of the measures used to compute the distance between points.
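As a small illustration of the intra-cluster versus inter-cluster criterion, the following sketch compares the largest within-cluster distance against the smallest between-cluster distance using squared Euclidean distance. The two clusters are made-up data, and the names `sq_euclidean`, `max_intra`, and `min_inter` are chosen here for illustration.

```python
from itertools import combinations

def sq_euclidean(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

# Two hypothetical clusters in the plane.
clusters = [
    [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0)],
    [(8.0, 8.0), (9.0, 8.0)],
]

# Largest distance between two points that share a cluster.
max_intra = max(sq_euclidean(p, q)
                for c in clusters for p, q in combinations(c, 2))

# Smallest distance between two points from different clusters.
min_inter = min(sq_euclidean(p, q)
                for p in clusters[0] for q in clusters[1])

# The criterion holds when every intra-cluster distance is
# below every inter-cluster distance.
well_separated = max_intra < min_inter
```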

- 5. Hard and soft clustering

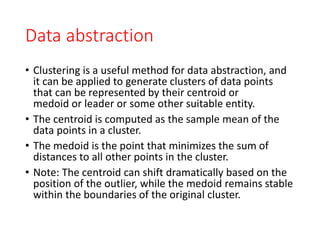

- 6. Data abstraction
  - Clustering is a useful method for data abstraction: each cluster of data points can be represented by its centroid, medoid, leader, or some other suitable entity.
  - The centroid is computed as the sample mean of the data points in a cluster.
  - The medoid is the data point in the cluster that minimizes the sum of distances to all other points in the cluster.
  - Note: The centroid can shift dramatically depending on the position of an outlier, while the medoid remains stable within the boundaries of the original cluster.
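The note about outliers can be checked on a toy cluster. This is a minimal sketch with made-up points, using Euclidean distance for the medoid (an assumption, since the slide does not fix the distance measure).

```python
import math

def centroid(points):
    """Sample mean of the points, computed coordinate by coordinate."""
    return tuple(sum(col) / len(points) for col in zip(*points))

def medoid(points):
    """Data point with the smallest total distance to all the points."""
    return min(points, key=lambda p: sum(math.dist(p, q) for q in points))

# Four points on a unit square, then the same cluster plus one far outlier.
cluster = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (2.0, 2.0)]
with_outlier = cluster + [(50.0, 50.0)]

c_before = centroid(cluster)        # centre of the square
c_after = centroid(with_outlier)    # dragged towards the outlier
m_after = medoid(with_outlier)      # still one of the original points
```

Here the centroid moves well outside the square once the outlier is added, while the medoid stays on one of its corners.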

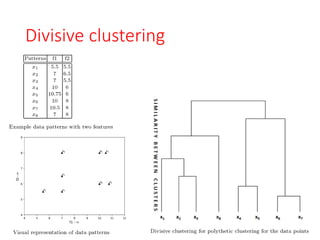

- 8. Divisive clustering
  - Divisive algorithms are either polythetic, where the division is based on more than one feature, or monothetic, where only one feature is considered at a time.
  - The polythetic scheme is based on finding all possible 2-partitions of the data and choosing the best among them. If there are $n$ patterns, the number of distinct 2-partitions is $(2^n - 2)/2 = 2^{n-1} - 1$.

- 9. Divisive clustering
  - Among all possible 2-partitions, the partition with the least sum of the sample variances of the two clusters is chosen as the best.
  - From the resulting partition, the cluster with the maximum sample variance is selected and is split into an optimal 2-partition.
  - This process is repeated till we get singleton clusters.
  - If a collection of patterns (data points) is split into two clusters with $p$ patterns $x_1, \cdots, x_p$ in one cluster and $q$ patterns $y_1, \cdots, y_q$ in the other cluster, with the centroids of the two clusters being $C_1$ and $C_2$ respectively, then the sum of the sample variances will be

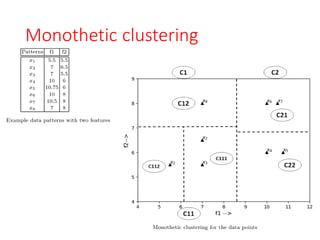
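One polythetic split can be sketched by brute force. The exact variance formula sits in the slide image and is not reproduced in this transcript, so the code below assumes the unnormalized form: the sum of squared distances of each point to its cluster centroid, added over the two clusters. The function names and the data are hypothetical.

```python
from itertools import combinations

def sse(cluster):
    """Sum of squared distances from the points to their centroid
    (assumed stand-in for the sample variance of one cluster)."""
    c = [sum(col) / len(cluster) for col in zip(*cluster)]
    return sum(sum((a - b) ** 2 for a, b in zip(p, c)) for p in cluster)

def two_partitions(points):
    """Yield every split into two non-empty clusters exactly once."""
    first, rest = points[0], points[1:]
    # Keeping the first point on the left avoids counting a split twice.
    for r in range(len(rest)):   # r = number of other points on the left
        for chosen in combinations(range(len(rest)), r):
            left = [first] + [rest[i] for i in chosen]
            right = [rest[i] for i in range(len(rest)) if i not in chosen]
            yield left, right

def best_two_partition(points):
    """Pick the 2-partition with the least total within-cluster spread."""
    return min(two_partitions(points),
               key=lambda lr: sse(lr[0]) + sse(lr[1]))

data = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0)]
n_splits = sum(1 for _ in two_partitions(data))   # 2^(n-1) - 1 candidates
left, right = best_two_partition(data)
```

The number of candidates grows exponentially with n, which is why exhaustive polythetic splitting is only practical for very small datasets.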

- 11. Monothetic clustering
  - Monothetic clustering considers each feature direction individually and divides the data into two clusters based on the gap in projected values along that feature direction.
  - Specifically, the dataset is split into two parts at the midpoint of the largest gap observed between consecutive sorted values of that feature.
  - This process is then repeated sequentially for the remaining features, further partitioning each cluster.
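A single monothetic split might look like the sketch below. It assumes that "the mean value of the maximum gap" means the midpoint between the two consecutive sorted feature values that bound the widest gap; the function `max_gap_split` and the data are hypothetical.

```python
def max_gap_split(points, feature):
    """Split points at the midpoint of the largest gap along one feature."""
    values = sorted({p[feature] for p in points})
    if len(values) < 2:
        return None, list(points), []   # nothing to split on this feature
    # (gap width, midpoint) for each pair of consecutive distinct values.
    gaps = [(b - a, (a + b) / 2) for a, b in zip(values, values[1:])]
    _, threshold = max(gaps, key=lambda g: g[0])
    left = [p for p in points if p[feature] <= threshold]
    right = [p for p in points if p[feature] > threshold]
    return threshold, left, right

data = [(1.0, 5.0), (2.0, 9.0), (3.0, 5.5), (10.0, 9.5), (11.0, 6.0)]

# First split on feature 0, then refine one resulting cluster on feature 1.
t0, left, right = max_gap_split(data, feature=0)
t1, left_a, left_b = max_gap_split(left, feature=1)
```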

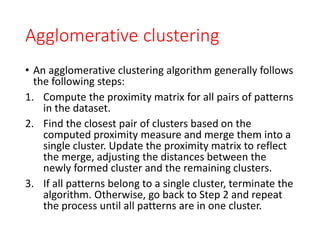

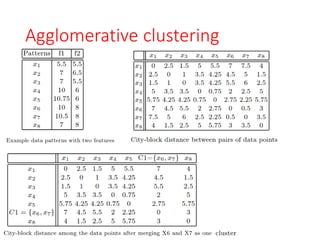

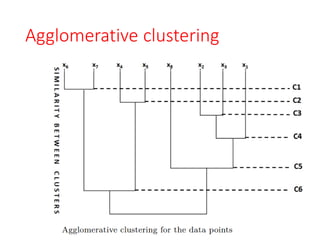

- 13. Agglomerative clustering
  - An agglomerative clustering algorithm generally proceeds as follows:
    1. Compute the proximity matrix for all pairs of patterns in the dataset.
    2. Find the closest pair of clusters based on the computed proximity measure and merge them into a single cluster. Update the proximity matrix to reflect the merge, adjusting the distances between the newly formed cluster and the remaining clusters.
    3. If all patterns belong to a single cluster, terminate the algorithm. Otherwise, go back to Step 2 and repeat the process until all patterns are in one cluster.
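The steps above can be sketched as follows. This is a deliberately simple version under two assumptions that the slide does not state: it uses single-link (minimum) distance as the proximity between clusters, and it recomputes distances on every pass instead of maintaining and updating a proximity matrix.

```python
import math

def single_link(a, b):
    """Proximity of two clusters: distance of their closest pair of points."""
    return min(math.dist(p, q) for p in a for q in b)

def agglomerative(points, linkage=single_link):
    """Merge clusters bottom-up; return the list of merges performed."""
    clusters = [[p] for p in points]   # start with singleton clusters
    merges = []
    while len(clusters) > 1:           # Step 3: stop at a single cluster
        # Step 2: find the closest pair of clusters.
        pairs = ((i, j) for i in range(len(clusters))
                 for j in range(i + 1, len(clusters)))
        i, j = min(pairs,
                   key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]))
        merges.append((clusters[i], clusters[j],
                       linkage(clusters[i], clusters[j])))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return merges

data = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.5), (20.0, 20.0)]
merges = agglomerative(data)
merge_distances = [d for _, _, d in merges]
```

The sequence of merges, read from first to last, is what a dendrogram draws; cutting it at a chosen distance gives a flat clustering.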

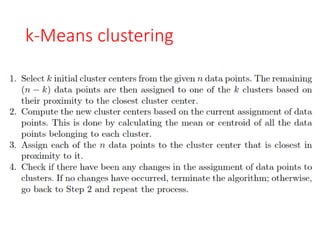

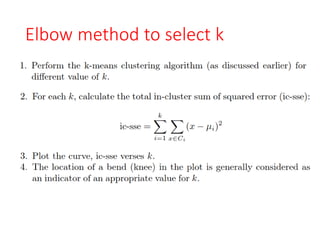

- 16. Elbow method to select k

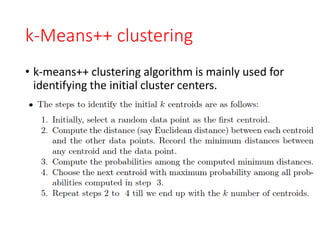

- 17. k-Means++ clustering
  - The k-means++ clustering algorithm is mainly used for identifying the initial cluster centers.
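The slide's details are in images that this transcript does not reproduce, so the sketch below follows the commonly described k-means++ seeding rule as an assumption: the first center is drawn uniformly at random, and each later center is drawn with probability proportional to the squared distance from a point to its nearest already-chosen center. The function name and data are hypothetical, and the data is assumed to contain at least k distinct points.

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans_pp_init(points, k, rng):
    """Choose k initial centers; far-away points are favoured."""
    centers = [rng.choice(points)]     # first center: uniform at random
    while len(centers) < k:
        # Squared distance of every point to its nearest chosen center.
        d2 = [min(sq_dist(p, c) for c in centers) for p in points]
        # Sample the next center with probability proportional to d2.
        # Points already chosen have weight 0 and cannot be drawn again.
        centers.append(rng.choices(points, weights=d2, k=1)[0])
    return centers

points = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5),
          (10.0, 10.0), (10.5, 10.0),
          (20.0, 0.0), (20.0, 0.5)]
seeds = kmeans_pp_init(points, k=3, rng=random.Random(0))
```

The returned seeds would then be handed to ordinary k-means as its starting centers.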

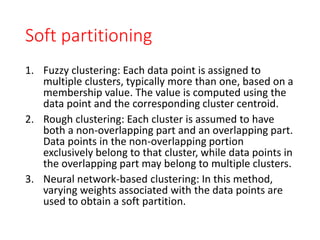

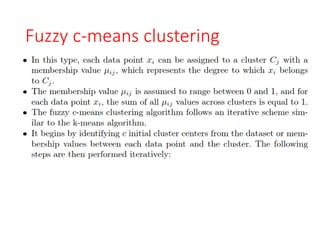

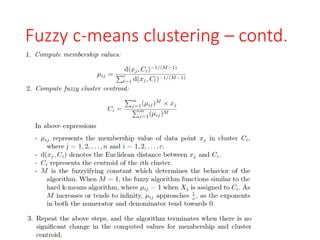

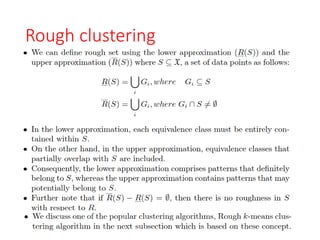

- 19. Soft partitioning
  1. Fuzzy clustering: Each data point may belong to more than one cluster, with a membership value for each cluster. The value is computed using the data point and the corresponding cluster centroid.
  2. Rough clustering: Each cluster is assumed to have both a non-overlapping part and an overlapping part. Data points in the non-overlapping portion exclusively belong to that cluster, while data points in the overlapping part may belong to multiple clusters.
  3. Neural network-based clustering: In this method, varying weights associated with the data points are used to obtain a soft partition.
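To illustrate how a membership value can be computed from a data point and the cluster centroids, the sketch below uses the usual fuzzy c-means membership formula with fuzzifier m. That formula is an assumption here, since the transcript does not include it, and the function name and data are hypothetical.

```python
import math

def fuzzy_memberships(point, centroids, m=2.0):
    """Membership of one point in every cluster; the values sum to 1.

    Assumes the standard fuzzy c-means rule, in which membership is
    proportional to distance ** (-2 / (m - 1)), with m > 1.
    """
    d = [math.dist(point, c) for c in centroids]
    if 0.0 in d:
        # The point coincides with a centroid: full membership there.
        hits = [1.0 if x == 0.0 else 0.0 for x in d]
        return [h / sum(hits) for h in hits]
    inv = [x ** (-2.0 / (m - 1.0)) for x in d]
    total = sum(inv)
    return [v / total for v in inv]

centroids = [(0.0, 0.0), (4.0, 0.0)]
u_near = fuzzy_memberships((1.0, 0.0), centroids)   # closer to cluster 0
u_mid = fuzzy_memberships((2.0, 0.0), centroids)    # equidistant
u_on = fuzzy_memberships((4.0, 0.0), centroids)     # on centroid 1
```

A larger m makes the memberships more even across clusters, while m close to 1 approaches a hard assignment.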

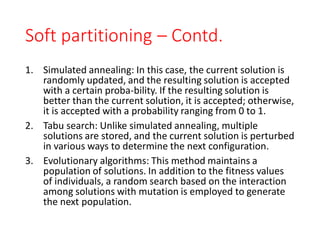

- 20. Soft partitioning – Contd.
  1. Simulated annealing: In this case, the current solution is randomly updated, and the resulting solution is accepted with a certain probability. If the resulting solution is better than the current solution, it is accepted; otherwise, it is accepted with a probability ranging from 0 to 1.
  2. Tabu search: Unlike simulated annealing, multiple solutions are stored, and the current solution is perturbed in various ways to determine the next configuration.
  3. Evolutionary algorithms: This method maintains a population of solutions. In addition to the fitness values of individuals, a random search based on the interaction among solutions with mutation is employed to generate the next population.
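The acceptance rule described for simulated annealing can be sketched as below. Several choices are assumptions not taken from the slides: the Metropolis form exp(-delta / T) for the acceptance probability, geometric cooling, the sum of squared distances to centroids as the cost, and a single-label change as the random update. For brevity the sketch searches over hard label assignments.

```python
import math
import random

def accept(delta, temperature, rng):
    """Always accept an improvement; accept a worse solution with
    probability exp(-delta / temperature), where delta = new - current."""
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)

def cost(points, labels, k):
    """Sum of squared distances of the points to their cluster centroid."""
    total = 0.0
    for c in range(k):
        members = [p for p, lab in zip(points, labels) if lab == c]
        if members:
            centre = [sum(col) / len(members) for col in zip(*members)]
            total += sum(sum((a - b) ** 2 for a, b in zip(p, centre))
                         for p in members)
    return total

def anneal(points, k, rng, steps=2000, t0=10.0, cooling=0.995):
    labels = [rng.randrange(k) for _ in points]
    current = cost(points, labels, k)
    best_labels, best = labels[:], current
    t = t0
    for _ in range(steps):
        i = rng.randrange(len(points))
        old = labels[i]
        labels[i] = rng.randrange(k)       # random update of the solution
        new = cost(points, labels, k)
        if accept(new - current, t, rng):
            current = new
            if current < best:
                best_labels, best = labels[:], current
        else:
            labels[i] = old                # reject: undo the change
        t *= cooling                       # lower the temperature
    return best_labels, best

points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (9.0, 9.0), (10.0, 9.0)]
best_labels, best_cost = anneal(points, k=2, rng=random.Random(1))
```

Early on, the high temperature lets the search accept worse solutions and escape poor local optima; as the temperature drops, it behaves more and more like a greedy search.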

- 22. Fuzzy c-means clustering – contd.

- 23. Rough clustering

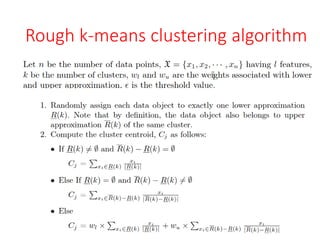

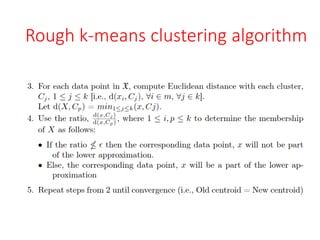

- 24. Rough k-means clustering algorithm

- 25. Rough k-means clustering algorithm

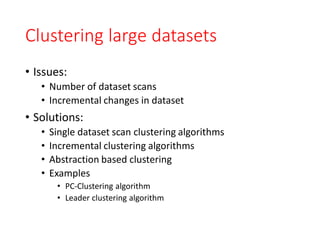

- 26. Clustering large datasets
  - Issues:
    - Number of dataset scans
    - Incremental changes in dataset
  - Solutions:
    - Single dataset scan clustering algorithms
    - Incremental clustering algorithms
    - Abstraction-based clustering
  - Examples:
    - PC-Clustering algorithm
    - Leader clustering algorithm
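As an example of a single-scan method, the leader clustering algorithm can be sketched as follows. The version below follows the commonly described form, which is assumed here because the slide only names the algorithm: the first point becomes a leader, and each later point joins the first leader within a user-supplied distance threshold or else becomes a new leader. The data and the threshold value are made up.

```python
import math

def leader_clustering(points, threshold):
    """Cluster points in one pass over the data."""
    leaders, clusters = [], []
    for p in points:                       # single scan of the dataset
        for idx, leader in enumerate(leaders):
            if math.dist(p, leader) <= threshold:
                clusters[idx].append(p)    # join the first close leader
                break
        else:
            leaders.append(p)              # no leader is close enough
            clusters.append([p])
    return leaders, clusters

data = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0),
        (0.0, 1.0), (11.0, 10.0), (30.0, 30.0)]
leaders, clusters = leader_clustering(data, threshold=2.0)
```

Because points are handled one at a time, new data can be added incrementally, but the result depends on the order in which the points arrive.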

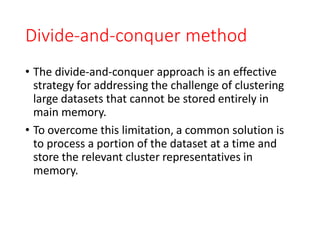

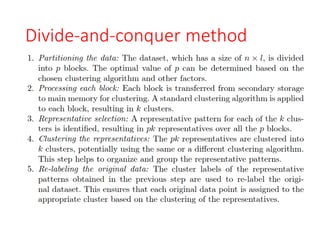

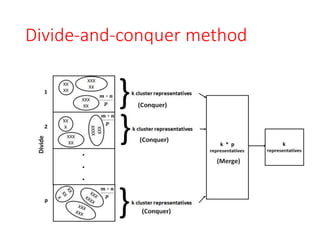

- 27. Divide-and-conquer method
  - The divide-and-conquer approach is an effective strategy for addressing the challenge of clustering large datasets that cannot be stored entirely in main memory.
  - To overcome this limitation, a common solution is to process a portion of the dataset at a time and store the relevant cluster representatives in memory.
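A two-level version of this idea is sketched below under stated assumptions: each chunk is clustered with a simple threshold-based grouping (chosen here only to keep the example short), the centroid of each group is kept as its representative, and the representatives are clustered again at the end. All names and data are hypothetical, and the final centroids are unweighted, so they only approximate the centroids of the full data.

```python
import math

def threshold_cluster(points, threshold):
    """Group each point with the first group whose first member is close."""
    groups = []
    for p in points:
        for g in groups:
            if math.dist(p, g[0]) <= threshold:
                g.append(p)
                break
        else:
            groups.append([p])
    return groups

def centroid(group):
    """Sample mean of a group of points."""
    return tuple(sum(col) / len(group) for col in zip(*group))

def divide_and_conquer(chunks, threshold):
    """Cluster chunk by chunk, then cluster the stored representatives."""
    reps = []
    for chunk in chunks:     # only one chunk needs to be in memory at a time
        reps.extend(centroid(g) for g in threshold_cluster(chunk, threshold))
    # Second level: the representatives are few enough to fit in memory.
    return [centroid(g) for g in threshold_cluster(reps, threshold)]

# Chunks stand in for blocks read from disk one after another.
chunks = [
    [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0)],
    [(0.0, 1.0), (11.0, 10.0)],
    [(1.0, 1.0), (10.0, 11.0)],
]
final = divide_and_conquer(iter(chunks), threshold=3.0)
```

Keeping a count alongside each representative and using weighted means in the second level would make the final centroids match the full-data centroids more closely.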