CIPP Model

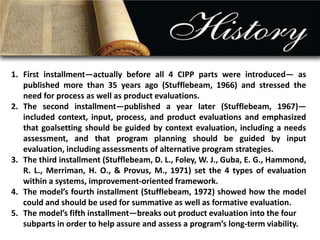

- The model was developed over five installments:
  1. The first installment, published more than 35 years ago and before all four CIPP parts had been introduced (Stufflebeam, 1966), stressed the need for process as well as product evaluations.
  2. The second installment, published a year later (Stufflebeam, 1967), included context, input, process, and product evaluations. It emphasized that goal setting should be guided by context evaluation, including a needs assessment, and that program planning should be guided by input evaluation, including assessments of alternative program strategies.
  3. The third installment (Stufflebeam, Foley, Guba, Hammond, Merriman, & Provus, 1971) placed the four types of evaluation within an improvement-oriented systems framework.
  4. The fourth installment (Stufflebeam, 1972) showed how the model could and should be used for summative as well as formative evaluation.
  5. The fifth installment broke product evaluation out into four subparts (impact, effectiveness, sustainability, and transportability) to help assure and assess a program's long-term viability.

- The current version of the CIPP Model (Stufflebeam, Gullickson, & Wingate, 2002) reflects prolonged effort, and a modicum of progress, toward the still-distant goal of developing a sound evaluation theory: a coherent set of conceptual, hypothetical, pragmatic, and ethical principles forming a general framework to guide evaluation.

- Daniel L. Stufflebeam and collaborators: W. J. Foley, E. G. Guba, R. L. Hammond, H. O. Merriman, M. Provus, A. J. Shinkfield, Gullickson, and Wingate.

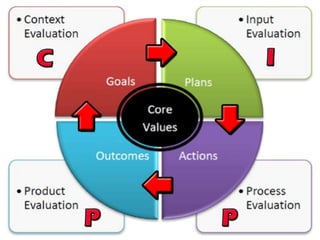

- Context evaluation:
  1. Assesses the overall environmental readiness of the project.
  2. Examines whether existing goals and priorities are attuned to the needs.
  3. Is also referred to as NEEDS ASSESSMENT.
  4. Provides the rationale for setting objectives.
  5. In its expanded focus, identifies the strengths and weaknesses of an institution or program to indicate directions for improvement.
  6. One of its basic uses is to convince funding agencies of the worth of the project or program.

- WHAT NEEDS TO BE DONE?

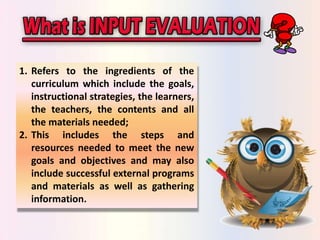

- Input evaluation:
  1. Refers to the ingredients of the curriculum: the goals, the instructional strategies, the learners, the teachers, the content, and all the materials needed.
  2. Covers the steps and resources needed to meet the new goals and objectives, and may also include gathering information on successful external programs and materials.

- HOW SHOULD IT BE DONE?

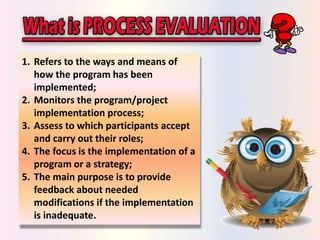

- Process evaluation:
  1. Refers to the ways and means by which the program has been implemented.
  2. Monitors the implementation of the program or project.
  3. Assesses the extent to which participants accept and carry out their roles.
  4. Focuses on the implementation of a program or strategy.
  5. Its main purpose is to provide feedback on needed modifications if the implementation is inadequate.

- IS IT BEING DONE?

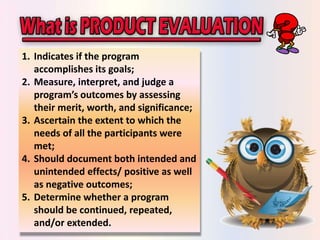

- Product evaluation:
  1. Indicates whether the program accomplishes its goals.
  2. Measures, interprets, and judges a program's outcomes by assessing their merit, worth, and significance.
  3. Ascertains the extent to which the needs of all participants were met.
  4. Documents both intended and unintended effects, and negative as well as positive outcomes.
  5. Determines whether a program should be continued, repeated, and/or extended.

- DID THE PROGRAM SUCCEED?

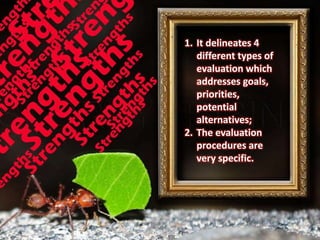

- Strengths:
  1. It delineates four different types of evaluation, which address goals, priorities, and potential alternatives.
  2. The evaluation procedures are very specific.

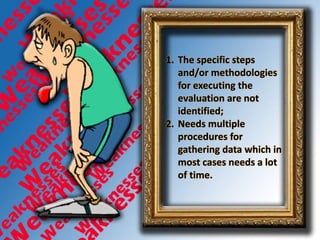

- Weaknesses:
  1. The specific steps and/or methodologies for executing the evaluation are not identified.
  2. It requires multiple data-gathering procedures, which in most cases are time-consuming.

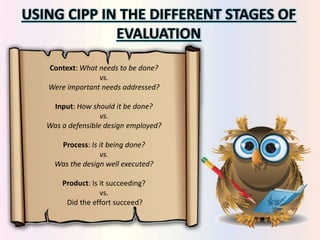

- USING CIPP IN THE DIFFERENT STAGES OF EVALUATION

| CIPP component | Formative evaluation | Summative evaluation |
|---|---|---|
| Context | What needs to be done? | Were important needs addressed? |
| Input | How should it be done? | Was a defensible design employed? |
| Process | Is it being done? | Was the design well executed? |
| Product | Is it succeeding? | Did the effort succeed? |