Classification of Parallel Computers.pptx

Recommended

22CS201 COA

Kathirvel Ayyaswamy

This document discusses parallel processors and multicore architecture. It begins with an introduction to parallel processors, including concurrent access to memory and cache coherency. It then discusses multicore architecture, where a single physical processor contains the logic of two or more cores. This allows increasing processing power while keeping clock speeds and power consumption lower than would be needed for a single high-speed core. Cache coherence methods like write-through, write-back, and directory-based approaches are also summarized for maintaining consistency across cores' caches when accessing shared memory.

unit 4.pptx

SUBHAMSHARANRA211100

This document provides an overview of parallelism, including the need for parallelism, types of parallelism, applications of parallelism, and challenges in parallelism. It discusses instruction level parallelism and data level parallelism in software. It describes Flynn's classification of computer architectures and the categories of SISD, SIMD, MISD, and MIMD. It also covers hardware multi-threading, uni-processors vs multi-processors, multi-core processors, memory in multi-processor systems, cache coherency, and the MESI protocol.

unit 4.pptx

SUBHAMSHARANRA211100

This document provides an overview of parallelism and parallel computing architectures. It discusses the need for parallelism to improve performance and throughput. The main types of parallelism covered are instruction level parallelism, data parallelism, and task parallelism. Flynn's taxonomy is introduced for classifying computer architectures based on their instruction and data streams. Common parallel architectures like SISD, SIMD, MIMD are explained. The document also covers memory architectures for multi-processor systems including shared memory, distributed memory, and cache coherency protocols.

Intro_ppt.pptx

ssuser906c831

A distributed system is a collection of independent computers that appear as a single coherent system to users. Components communicate by passing messages only. Distributed systems are motivated by the need to handle large computations that cannot be handled by a single computer, like those at companies like Google and Facebook. There are challenges in building distributed systems due to lack of shared memory, clocks, and potential failures.

Computer architecture multi processor

Mazin Alwaaly

Computer Architecture multi processor seminar

Mustansiriya University

Department of Education

Computer Science

Lecture 2

Mr SMAK

This document discusses key concepts and terminologies related to parallel computing. It defines tasks, parallel tasks, serial and parallel execution. It also describes shared memory and distributed memory architectures as well as communications and synchronization between parallel tasks. Flynn's taxonomy is introduced which classifies parallel computers based on instruction and data streams as Single Instruction Single Data (SISD), Single Instruction Multiple Data (SIMD), Multiple Instruction Single Data (MISD), and Multiple Instruction Multiple Data (MIMD). Examples are provided for each classification.

Array Processors & Architectural Classification Schemes_Computer Architecture...

Sumalatha A

This document discusses array processors and architectural classification schemes. It describes how array processors use multiple arithmetic logic units that operate in parallel to achieve spatial parallelism. They can process array elements and connect processing elements in various patterns depending on the computation. The document also introduces Flynn's taxonomy, which classifies architectures based on their instruction and data streams as SISD, SIMD, MIMD, or MISD. Feng's and Handler's classification schemes are also reviewed.

OS_MD_4.pdf

SangeethaBS4

This document discusses multiprocessor operating systems. It covers basic multiprocessor system architectures including tightly coupled, loosely coupled, UMA, NUMA, and NORMA systems. It also discusses interconnection networks like bus, crossbar switch, and multistage networks. Additionally, it summarizes the structures of basic multiprocessor operating systems including separate supervisor, master-slave, and symmetric models.

Operating System Overview.pdf

PrashantKhobragade3

The document provides an introduction to operating systems, including definitions, goals, and components. It discusses how operating systems manage computer hardware, execute user programs, and make systems convenient and efficient. It describes how operating systems act as an intermediary between users and hardware. It also summarizes the evolution of operating systems from early batch systems to modern time-sharing and networked systems. Key aspects covered include process management, memory management, multiprocessing, real-time systems, personal computers, and the role of the operating system in enabling interaction between programs and hardware.

Lecture 1 (distributed systems)

Fazli Amin

This document provides an overview of distributed systems and distributed computing. It defines a distributed system as a collection of independent computers that appears as a single coherent system. It discusses the advantages and goals of distributed systems, including connecting users and resources, transparency, openness and scalability. It also covers hardware concepts like multi-processor systems with shared or non-shared memory, and multi-computer systems that can be homogeneous or heterogeneous.

CA UNIT IV.pptx

ssuser9dbd7e

Unit IV discusses parallelism and parallel processing architectures. It introduces Flynn's classifications of parallel systems as SISD, MIMD, SIMD, and SPMD. Hardware approaches to parallelism include multicore processors, shared memory multiprocessors, and message-passing systems like clusters, GPUs, and warehouse-scale computers. The goals of parallelism are to increase computational speed and throughput by processing data concurrently across multiple processors.

Multiprocessor.pptx

Muhammad54342

Flynn's taxonomy classifies computer architectures based on the number of instruction and data streams. The main categories are:

1) SISD - Single instruction, single data stream (von Neumann architecture)

2) SIMD - Single instruction, multiple data streams (vector/MMX processors)

3) MIMD - Multiple instruction, multiple data streams (most multiprocessors including multi-core)
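The categories listed above depend only on whether there is one or more than one instruction stream and data stream, so they can be read as a two-way lookup. The following is a minimal Python sketch of that idea, not code taken from any of the listed decks. The names `FlynnClass` and `classify` are hypothetical, and MISD is included only for completeness: other summaries on this page mention it, but this list does not.

```python
from enum import Enum


class FlynnClass(Enum):
    """Flynn categories, keyed by (multiple instructions?, multiple data?)."""
    SISD = (False, False)  # single instruction, single data stream
    SIMD = (False, True)   # single instruction, multiple data streams
    MISD = (True, False)   # multiple instructions, single data stream
    MIMD = (True, True)    # multiple instructions, multiple data streams


def classify(instruction_streams: int, data_streams: int) -> FlynnClass:
    """Map stream counts to a Flynn category (illustrative helper)."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("each stream count must be at least 1")
    # Only "one" versus "more than one" matters for the classification.
    return FlynnClass((instruction_streams > 1, data_streams > 1))


if __name__ == "__main__":
    for counts in [(1, 1), (1, 8), (4, 1), (4, 4)]:
        print(counts, classify(*counts).name)
```

Under this sketch, a multi-core processor running independent threads on separate data would be passed as several instruction streams and several data streams and classified as MIMD, matching item 3 above.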

Multiprocessor architectures can be organized as shared memory (SMP/UMA) or distributed memory (message passing/DSM). Shared memory allows automatic sharing but can have memory contention issues, while distributed memory requires explicit communication but scales better. Achieving high parallel performance depends on minimizing sequential ...

chapter1.ppt

UsamaKhan987353

The document provides an overview of operating system concepts and components. It defines an operating system as a program that acts as an intermediary between the computer hardware and the user. The key components of a computer system are described as the hardware, operating system, application programs, and users. The document outlines the basic functions of an operating system including managing processes, memory, storage, protection and security. It provides descriptions of computer system organization, interrupt handling, I/O structure, storage hierarchy and memory management. The structures of multiprogramming, timesharing and virtual memory systems are also summarized.

Operating System BCA 301

CHANDERPRABHU JAIN COLLEGE OF HIGHER STUDIES & SCHOOL OF LAW

This document provides an introduction to operating systems. It defines an operating system as a program that acts as an intermediary between the user and computer hardware. The key components of a computer system are described as hardware, operating system, application programs, and users. Operating systems manage resources, control programs, and provide common services like memory management, process management, and I/O management. Various computing environments are explored, including traditional systems, mobile systems, distributed systems, client-server models, and virtualization.

Parallel Processing & Pipelining in Computer Architecture_Prof.Sumalatha.pptx

Sumalatha A

Parallel processing involves executing multiple programs or tasks simultaneously. It can be implemented through hardware and software means in both multiprocessor and uniprocessor systems. In uniprocessors, parallelism is achieved through techniques like pipelining instructions, overlapping CPU and I/O operations, using cache memory, and multiprocessing through time-sharing. Pipelining helps execute instructions in an overlapped fashion to improve efficiency compared to non-pipelined processors. Parallel computers break large problems into smaller parallel tasks executed simultaneously on multiple processors. Types of parallel computers include pipeline computers, array processors, and multiprocessor systems.

Ch1 introduction

Welly Dian Astika

This document provides an overview of operating systems and computer system organization. It describes the basic components of a computer system including hardware, operating system, application programs, and users. It then discusses operating system functions like process management, memory management, storage management, and protection/security. It provides details on computer system architecture including multiprocessor systems and clustered systems. It also covers operating system structure for multiprogramming and timesharing systems.

parallel-processing.ppt

MohammedAbdelgader2

This document discusses parallel processing and multiple processor architectures. It covers single instruction, single data stream (SISD); single instruction, multiple data stream (SIMD); multiple instruction, single data stream (MISD); and multiple instruction, multiple data stream (MIMD) architectures. It then discusses the taxonomy of parallel processor architectures including tightly coupled symmetric multiprocessors (SMPs), non-uniform memory access (NUMA) systems, and loosely coupled clusters. It covers parallel organizations for these different architectures.

CSE3120- Module1 part 1 v1.pptx

akhilagajjala

1. The document provides an introduction to operating systems, covering topics like computer system architecture, operating system structure and operations, types of computing environments, and operating system services.

2. It describes the basic components of an operating system including process management, memory management, storage management, I/O subsystem management, and protection and security.

3. Various computing environments are discussed, including stand-alone systems, distributed systems, client-server models, peer-to-peer networks, virtualization, cloud computing, and real-time embedded systems.

Chapter 10

Er. Nawaraj Bhandari

This document discusses parallel processing and cache coherence in computer architecture. It defines parallel processing as using multiple CPUs simultaneously to execute a program faster. It describes different types of parallel processor systems based on the number of instruction and data streams. It then discusses symmetric multiprocessors (SMPs), which have multiple similar processors that share memory and I/O facilities. Finally, it explains the cache coherence problem that can occur when multiple caches contain the same data, and describes the MESI protocol used to maintain coherence between caches.

Parallel processing

Syed Zaid Irshad

Parallel computing is a type of computation in which many calculations or the execution of processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has been employed for many years, mainly in high-performance computing, but interest in it has grown lately due to the physical constraints preventing frequency scaling. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

18 parallel processing

dilip kumar

This document discusses different types of parallel processing architectures including single instruction single data stream (SISD), single instruction multiple data stream (SIMD), multiple instruction single data stream (MISD), and multiple instruction multiple data stream (MIMD). It provides details on tightly coupled symmetric multiprocessors (SMPs) and non-uniform memory access (NUMA) systems. It also covers cache coherence protocols like MESI and approaches to improving processor performance through multithreading and chip multiprocessing.

Module 1 Introduction.ppt

shreesha16

The document provides an overview of operating system concepts, including:

- An operating system manages computer hardware and acts as an intermediary between users and the computer. It aims to execute programs, make the system convenient to use, and efficiently use hardware resources.

- A computer system consists of hardware, an operating system, application programs, and users. The operating system controls resource allocation and coordinates hardware, applications, and users.

- Operating systems provide services like file management, communication, error detection, resource allocation, accounting, and protection/security. System calls are the programming interface for these services.

OS multiprocessing1.pptx

amerdawood2

The document discusses multiprocessor operating systems. It defines multiprocessors as systems with multiple processors that share components like memory and I/O devices. There are two types of multiprocessors: symmetric, where each processor has equal functionality and workload, and asymmetric, where one processor acts as the master. Multiprocessors provide benefits like increased performance but also introduce complexity in communication. They differ from multicomputers which have separate memory for each processor and can distribute work across independent systems.

parallel processing.ppt

NANDHINIS109942

This document discusses parallel processing and multiprocessing. It begins by explaining how processor performance is measured and how increasing clock frequency and instructions per cycle can improve performance. It then provides a taxonomy of parallel processor architectures including SISD, SIMD, MISD, and MIMD models. The MIMD model is further classified based on tightly coupled architectures like symmetric multiprocessors (SMPs) and loosely coupled clusters. Key issues for parallel processing like cache coherence and software/hardware solutions are also summarized.

chapter-18-parallel-processing-multiprocessing (1).ppt

NANDHINIS109942

This document discusses parallel processing and multiprocessing. It begins by explaining how processor performance is measured and how increasing clock frequency and instructions per cycle can improve performance. It then provides a taxonomy of parallel processor architectures including SISD, SIMD, MISD, and MIMD models. The MIMD model is further classified based on tightly-coupled and loosely-coupled systems. Symmetric multiprocessors (SMPs) and issues like cache coherence are discussed for tightly-coupled MIMD systems. Hardware and software solutions to cache coherence are also summarized.

Parallel & Distributed processing

Syed Zaid Irshad

The document provides an introduction to high performance computing architectures. It discusses the von Neumann architecture that has been used in computers for over 40 years. It then explains Flynn's taxonomy, which classifies parallel computers based on whether their instruction and data streams are single or multiple. The main categories are SISD, SIMD, MISD, and MIMD. It provides examples of computer architectures that fall under each classification. Finally, it discusses different parallel computer memory architectures, including shared memory, distributed memory, and hybrid models.




Classification of Parallel Computers.pptx

- 2. Introduction
  - Parallel computers are those that emphasize parallel processing between operations in some way.
  - Parallel computers can be characterized by their data and instruction streams, which give rise to various types of computer organization.
  - They can also be classified by computer structure, e.g. multiple processors with separate memories or one shared global memory.
  - Levels of parallel processing can also be defined by the size of the group of instructions in a program that is executed in parallel, called the grain size.

- 3. Types of Classification
  1. Classification based on the instruction and data streams
  2. Classification based on the structure of computers
  3. Classification based on how the memory is accessed
  4. Classification based on grain size

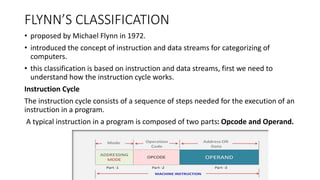

- 4. FLYNN'S CLASSIFICATION
  - Proposed by Michael Flynn in 1966 and elaborated in his 1972 paper.
  - Introduced the concept of instruction and data streams for categorizing computers.
  - Because this classification is based on instruction and data streams, we first need to understand how the instruction cycle works.
  - Instruction cycle: the sequence of steps needed to execute one instruction in a program. A typical instruction is composed of two parts: the opcode and the operand.

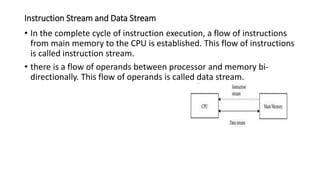

- 6. Instruction Stream and Data Stream
  - Over the complete cycle of instruction execution, a flow of instructions is established from main memory to the CPU. This flow of instructions is called the instruction stream.
  - Similarly, operands flow in both directions between the processor and memory. This flow of operands is called the data stream.

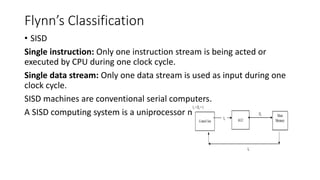
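
The instruction cycle and the two streams described above can be sketched as a toy fetch-decode-execute loop. This is only an illustration: the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator, and the function name `run` are assumptions, not part of the slides.

```python
def run(program, memory):
    """Toy fetch-decode-execute loop for a hypothetical accumulator machine."""
    acc = 0   # single accumulator register (assumed)
    pc = 0    # program counter
    while True:
        # Instruction stream: fetch the next instruction and split it
        # into its two parts, opcode and operand.
        opcode, operand = program[pc]
        pc += 1
        if opcode == "LOAD":      # data stream: memory -> processor
            acc = memory[operand]
        elif opcode == "ADD":     # data stream: memory -> processor
            acc += memory[operand]
        elif opcode == "STORE":   # data stream: processor -> memory
            memory[operand] = acc
        elif opcode == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")


program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
memory = [2, 3, 0]
result = run(program, memory)
```

Here the operand of each instruction is a memory address, so `LOAD` and `ADD` move data toward the processor and `STORE` moves it back, which is the bidirectional data stream.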

- 7. Flynn's Classification
  - SISD (Single Instruction, Single Data stream)
    - Single instruction: only one instruction stream is acted on or executed by the CPU during one clock cycle.
    - Single data stream: only one data stream is used as input during one clock cycle.
    - SISD machines are conventional serial computers. A SISD computing system is a uniprocessor machine.

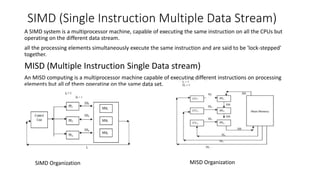

- 8. SIMD and MISD
  - SIMD (Single Instruction, Multiple Data stream): a SIMD system is a multiprocessor machine capable of executing the same instruction on all of its CPUs, each operating on a different data stream. All the processing elements execute the same instruction simultaneously and are said to be "lock-stepped" together.
  - MISD (Multiple Instruction, Single Data stream): an MISD system is a multiprocessor machine capable of executing different instructions on its processing elements, all of them operating on the same data set.
  - Figures: SIMD organization; MISD organization.

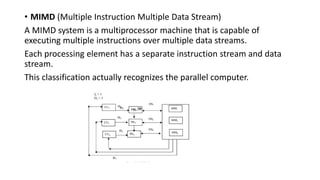
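
A minimal sketch of the difference between the two organizations, simulated sequentially in plain Python. The function names `simd_step` and `misd_step` and the sample data are hypothetical; real SIMD hardware applies the instruction to all elements at the same time rather than in a loop.

```python
import operator


def simd_step(instruction, local_data, operand):
    """SIMD: ONE instruction applied to every processing element's own data."""
    return [instruction(x, operand) for x in local_data]


def misd_step(instructions, datum):
    """MISD: a DIFFERENT instruction per processing element, same data item."""
    return [instruction(datum) for instruction in instructions]


pe_data = [1, 2, 3, 4]   # one data item per processing element
after_add = simd_step(operator.add, pe_data, 10)
after_mul = simd_step(operator.mul, after_add, 2)

same_input = misd_step([abs, operator.neg, float], -3)
```

Each call to `simd_step` models one lock-stepped step: every element sees the same instruction before any element moves on to the next one.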

- 9. MIMD (Multiple Instruction, Multiple Data stream)
  - A MIMD system is a multiprocessor machine capable of executing multiple instructions over multiple data streams.
  - Each processing element has a separate instruction stream and data stream.
  - In this classification, MIMD is the organization regarded as a parallel computer in the full sense.

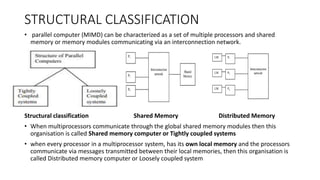
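
The four classes differ only in whether each of the two streams is single or multiple, so the naming rule can be written down directly. The function name `flynn_class` and its integer parameters are assumptions made for this sketch.

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Name the Flynn class for a given number of instruction and data streams."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    i = "S" if instruction_streams == 1 else "M"   # single or multiple instruction
    d = "S" if data_streams == 1 else "M"          # single or multiple data
    return f"{i}I{d}D"


classes = [flynn_class(1, 1), flynn_class(1, 8), flynn_class(8, 1), flynn_class(8, 8)]
```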

- 10. STRUCTURAL CLASSIFICATION
  - A parallel computer (MIMD) can be characterized as a set of multiple processors and a shared memory or memory modules, communicating via an interconnection network.
  - Structural classification: shared memory and distributed memory.
  - When the processors communicate through global shared memory modules, the organization is called a shared memory computer or tightly coupled system.
  - When every processor in a multiprocessor system has its own local memory and the processors communicate via messages transmitted between their local memories, the organization is called a distributed memory computer or loosely coupled system.

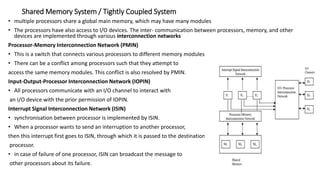
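
The shared memory style of communication can be sketched with threads standing in for processors. The names `shared`, `lock`, and `processor` are hypothetical, and Python threads are only a stand-in for hardware processors sharing a global memory.

```python
import threading

shared = {"counter": 0}    # plays the role of the global shared memory
lock = threading.Lock()    # serializes conflicting updates to that memory


def processor(increments):
    """Each 'processor' communicates only by updating the shared memory."""
    for _ in range(increments):
        with lock:
            shared["counter"] += 1


threads = [threading.Thread(target=processor, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

No messages are exchanged here: every processor sees the others' work simply by reading the same memory location, which is what makes the system tightly coupled.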

- 11. Shared Memory System / Tightly Coupled System
  - Multiple processors share a global main memory, which may consist of many modules.
  - The processors also have access to I/O devices. Communication among processors, memory, and other devices is implemented through several interconnection networks.
  - Processor-Memory Interconnection Network (PMIN)
    - A switch that connects the processors to the memory modules.
    - A conflict arises when several processors attempt to access the same memory module. The PMIN also resolves this conflict.
  - Input-Output-Processor Interconnection Network (IOPIN)
    - Processors communicate with an I/O channel through the IOPIN in order to interact with an I/O device.
  - Interrupt Signal Interconnection Network (ISIN)
    - Synchronization between processors is implemented by the ISIN.
    - When a processor wants to send an interrupt to another processor, the interrupt first goes to the ISIN, which passes it on to the destination processor.
    - If one processor fails, the ISIN can broadcast a message about the failure to the other processors.
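
The conflict resolution performed by the PMIN can be illustrated with a small arbiter. The slides do not say how the PMIN chooses a winner, so the fixed lowest-id-first priority used here is an assumption, as are the function name `arbitrate` and the module labels.

```python
def arbitrate(requests):
    """Grant each memory module to at most one processor per cycle.

    requests maps processor id -> requested memory module.
    Returns (granted, waiting). Priority rule (assumed): lowest id wins.
    """
    busy = set()
    granted = {}
    waiting = []
    for proc in sorted(requests):
        module = requests[proc]
        if module in busy:
            waiting.append(proc)    # conflict: must retry in a later cycle
        else:
            busy.add(module)
            granted[proc] = module
    return granted, waiting


granted, waiting = arbitrate({0: "M1", 1: "M1", 2: "M2"})
```

Processors 0 and 1 both ask for module `M1`; only one of them is served in this cycle while processor 2 proceeds to `M2` unhindered.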

- 12. Tightly Coupled Systems with Cache Memory
  - Since every memory reference in a tightly coupled system goes through the interconnection network, instruction execution is delayed. To reduce this delay, each processor may use a cache memory for its most frequent references.
  - Figure: Tightly coupled system with cache memory.
  - Shared memory multiprocessor systems can be further divided into three models, based on the manner in which the shared memory is accessed.
  - Figure: Modes of tightly coupled systems.

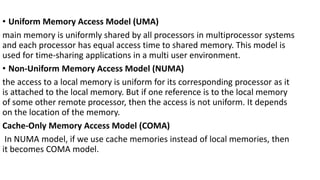
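
A minimal sketch of why a per-processor cache reduces traffic over the interconnection network: only the first reference to an address crosses the network. The class `CachedProcessor` is hypothetical, the cache is unbounded, and writes and cache coherence are deliberately left out.

```python
class CachedProcessor:
    """Processor with a private cache in front of the shared memory."""

    def __init__(self, shared_memory):
        self.shared_memory = shared_memory
        self.cache = {}
        self.network_accesses = 0   # references that crossed the interconnect

    def read(self, address):
        if address not in self.cache:
            # Cache miss: fetch over the interconnection network.
            self.network_accesses += 1
            self.cache[address] = self.shared_memory[address]
        return self.cache[address]


shared_memory = {0: "a", 1: "b"}
cpu = CachedProcessor(shared_memory)
values = [cpu.read(0), cpu.read(0), cpu.read(1), cpu.read(0)]
```

Four reads are issued but only two reach the shared memory; the repeated references to address 0 are served locally.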

- 13. Models of Tightly Coupled Systems
  - Uniform Memory Access (UMA) model: main memory is shared uniformly by all processors, and each processor has the same access time to shared memory. This model is used for time-sharing applications in a multi-user environment.
  - Non-Uniform Memory Access (NUMA) model: each processor has an attached local memory, and access to it is uniform for that processor. A reference to the local memory of a remote processor is not uniform: the access time depends on where that memory is located.
  - Cache-Only Memory Architecture (COMA) model: if the local memories of the NUMA model are replaced by cache memories, the result is the COMA model.
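
The difference between UMA and NUMA comes down to whether the access time depends on where the referenced memory sits. The sketch below makes that explicit; the cost values are arbitrary placeholders rather than measurements, the function name `access_time` is hypothetical, and COMA is not modelled.

```python
def access_time(model, processor, memory_node,
                uniform_cost=2, local_cost=1, remote_cost=4):
    """Return an illustrative access time in arbitrary units (assumed costs)."""
    if model == "UMA":
        # Every processor sees the same access time to shared memory.
        return uniform_cost
    if model == "NUMA":
        # Local memory is fast; another processor's memory is slower.
        return local_cost if processor == memory_node else remote_cost
    raise ValueError(f"unsupported model: {model}")


uma_times = [access_time("UMA", p, 0) for p in range(3)]
numa_times = [access_time("NUMA", p, 0) for p in range(3)]
```

In `numa_times` only processor 0, which owns memory node 0, gets the local cost; the others pay the remote cost.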

- 14. Loosely Coupled Systems
  - Each processor in a loosely coupled system has a large local memory (LM) that is not shared with any other processor.
  - Such systems have multiple processors, each with its own local memory and a set of I/O devices.
  - These computer systems are connected via a message-passing interconnection network, through which processes communicate by passing messages to one another.
  - Since every computer system, or node, in a multicomputer system has a separate memory, they are called distributed multicomputer systems.
  - Because no processor can access another processor's local memory, these systems are also known as no-remote-memory-access (NORMA) systems.
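
Message passing between nodes can be sketched with two queues standing in for the interconnection network. The names `worker`, `to_worker`, and `from_worker` and the `"SUM"` request are hypothetical. Python threads do share an address space in reality, so the isolation here is by convention only: each node touches nothing but its own list and the queues.

```python
import queue
import threading


def worker(local_memory, inbox, outbox):
    """A node that answers requests using only its private local memory."""
    request = inbox.get()
    if request == "SUM":
        outbox.put(sum(local_memory))   # reply travels as a message


to_worker = queue.Queue()     # network link: coordinator -> worker
from_worker = queue.Queue()   # network link: worker -> coordinator

node = threading.Thread(target=worker, args=([1, 2, 3], to_worker, from_worker))
node.start()

to_worker.put("SUM")                      # send a request message
remote_sum = from_worker.get(timeout=5)   # wait for the reply message
node.join()

local_sum = sum([10, 20])                 # coordinator's own local memory
total = local_sum + remote_sum
```

The coordinator never reads the worker's data directly; it only learns the result through the reply message, which is the NORMA property in miniature.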