Classification.pptx


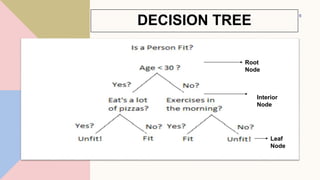

This document provides an overview of classification predictive modeling and decision trees. It discusses classification, including binary, multi-class, and multi-label classification. It then describes decision trees, including key terminology like root nodes, interior nodes, and leaf nodes. It explains how decision trees are built and parameters like max_depth and min_samples_leaf. Finally, it compares regression and classification trees and discusses advantages and disadvantages of decision trees.


![Slide 12: Regression Trees](https://image.slidesharecdn.com/classification-221202092348-b3837787/85/Classification-pptx-12-320.jpg)

REGRESSION TREES

• Tree-based modeling for a continuous target variable

• Most intuitively appropriate method for loss ratio analysis

• Find the split that produces the greatest separation in $\sum [y - E(y)]^2$

• i.e., find nodes with minimal within-node variance

• and therefore greatest between-node variance

• Like credibility theory
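The split search described on this slide can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the deck's own implementation: the helper names `sum_squared_dev` and `best_split`, the single numeric feature, and the toy data are all hypothetical. It scans candidate thresholds on one feature and keeps the one that minimizes the within-node sum of squares, which is the same as maximizing the between-node sum of squares because the two add up to the fixed total.

```python
def sum_squared_dev(values):
    """Sum of squared deviations from the mean: sum((y - mean(y)) ** 2)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((y - mean) ** 2 for y in values)


def best_split(x, y):
    """Return (threshold, within_ss) for the split `x <= threshold` that
    minimizes the total within-node sum of squares on a single feature."""
    pairs = sorted(zip(x, y))
    best = (None, sum_squared_dev(y))  # baseline: no split at all
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [target for _, target in pairs[:i]]
        right = [target for _, target in pairs[i:]]
        within = sum_squared_dev(left) + sum_squared_dev(right)
        if within < best[1]:
            best = (threshold, within)
    return best


# Toy data, made up for illustration: two clusters of target values.
x = [1, 2, 3, 10, 11, 12]
y = [0.9, 1.0, 1.1, 2.9, 3.0, 3.1]

threshold, within = best_split(x, y)
total = sum_squared_dev(y)
between = total - within  # what the split "explains"
print(threshold, within, between)
```

A full regression tree would repeat this search over every feature and then recurse into the two child nodes; the sketch stops at a single split to keep the variance decomposition visible.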

Recommended

Classification Using Decision Trees and Rules Chapter 5.docx

monicafrancis71118

Classification Using Decision Trees and Rules

Chapter 5

Introduction

• Decision tree learners use a tree structure to model the relationships among the features and the potential outcomes.

• A structure of branching decisions that leads to a final predicted class value

• A decision begins at the root node, then passes through decision nodes that require choices.

• Choices split the data across branches that indicate potential outcomes of a decision.

• The tree is terminated by leaf nodes that denote the action to be taken as the result of the series of decisions.

Decision Tree Example

Benefits

• The flowchart-like tree structure is not necessarily exclusively for the learner's internal use.

• The resulting structure is in a human-readable format.

• Provides insight into how and why the model works or doesn't work well for a particular task.

• Useful where the classification mechanism needs to be transparent for legal reasons, or where the results need to be shared with others in order to inform future business practices

• Credit scoring models, where the criteria that cause an applicant to be rejected need to be clearly documented and free from bias

• Marketing studies of customer behavior such as satisfaction or churn, which will be shared with management or advertising agencies

• Diagnosis of medical conditions based on laboratory measurements, symptoms, or the rate of disease progression

Applicability

• Widely used machine learning technique

• Can be applied to model almost any type of data, often with excellent results

• May not be a good fit for tasks where the data has a large number of nominal features with many levels, or a large number of numeric features.

• These cases may result in a large number of decisions and an overly complex tree.

• Decision trees tend to overfit data, though this can be overcome by adjusting some simple parameters.

Divide and Conquer

• Decision trees are built using a heuristic called recursive partitioning.

• It is known as divide and conquer because it splits the data into subsets, which are then split repeatedly into even smaller subsets.

• It stops when the data within the subsets are sufficiently homogeneous, or another stopping criterion has been met.

• The root node represents the entire dataset.

• The algorithm must choose a feature to split upon.

• Choose the feature most predictive of the target class.

• The algorithm continues to divide and conquer the data, choosing the best candidate feature each time to create another decision node, until a stopping criterion is reached.

Divide and Conquer

• Stopping Conditions

• All (or nearly all) of the examples at the node have the same class

• There are no remaining features to distinguish among the examples

• The tree has grown to a predefined size limit
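Recursive partitioning with the three stopping conditions above can be sketched as follows. This is an illustrative sketch, not the chapter's algorithm: it assumes numeric features, uses Gini impurity as the measure of "most predictive" (the slides do not name a measure), and the names `gini`, `best_split`, `build_tree`, `predict`, the `max_depth` parameter, and the toy movie data (budget, celebrity count) are all hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means a pure node)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Try every feature and midpoint threshold; return the (feature, threshold)
    with the lowest weighted Gini impurity, or None if no split improves on
    the unsplit node."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        values = sorted(set(r[f] for r in rows))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [l for r, l in zip(rows, labels) if r[f] <= t]
            right = [l for r, l in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Return a nested dict for a decision node, or a class label for a leaf."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping condition 1: all examples at the node have the same class.
    if len(set(labels)) == 1:
        return majority
    # Stopping condition 3: the tree has reached its predefined size limit.
    if depth >= max_depth:
        return majority
    split = best_split(rows, labels)
    # Stopping condition 2: no feature separates the examples any further.
    if split is None:
        return majority
    f, t = split
    left = [(r, l) for r, l in zip(rows, labels) if r[f] <= t]
    right = [(r, l) for r, l in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [l for _, l in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [l for _, l in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Walk from the root to a leaf and return the leaf's class label."""
    while isinstance(tree, dict):
        branch = "left" if row[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree


# Toy data, made up for illustration: (budget, celebrity count) per movie.
rows = [(1, 1), (2, 2), (8, 1), (9, 2), (2, 8), (1, 9), (8, 8), (9, 9)]
labels = ["Box Office Bust"] * 4 + ["Critical Success"] * 2 + ["Mainstream Hit"] * 2

tree = build_tree(rows, labels)
print(tree)
print(predict(tree, (3, 7)))
```

Every split here is a single-feature threshold, so all decision boundaries are parallel to an axis; that is the simplest way to keep each decision node readable as one rule.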

Example

• Finding the potential of a movie: Box Office Bust, Mainstream Hit, or Critical Success

• Diagonal lines might have split the data even more cleanly.

• Limitation of the decision tree's knowledge representation, whi.

Foundations of Machine Learning - StampedeCon AI Summit 2017

StampedeCon

This presentation will cover all aspects of modeling, from preparing data to training and evaluating the results. There will be descriptions of the mainline ML methods, including neural nets, SVM, boosting, bagging, trees, forests, and deep learning. Common problems of overfitting and dimensionality will be covered with discussion of modeling best practices. Other topics will include field standardization, encoding categorical variables, feature creation and selection. It will be a soup-to-nuts overview of all the necessary procedures for building state-of-the-art predictive models.

Lecture 9 - Decision Trees and Ensemble Methods, a lecture in subject module ...

Maninda Edirisooriya

Decision Trees and Ensemble Methods are a different class of machine learning algorithms. This was one of the lectures of a full course I taught at the University of Moratuwa, Sri Lanka, in the second half of 2023.

Lecture 5 Decision tree.pdf

ssuser4c50a9

The document discusses decision trees, which are a popular classification algorithm. It covers:

- Why decision trees are used for classification and prediction, and that they represent rules that can be understood by humans.

- The key components of a decision tree, including root nodes, internal decision nodes, leaf nodes, and branches. It describes how a decision tree classifies examples by moving from the root to a leaf node.

- The greedy algorithm for learning decision trees, which starts with an empty tree and recursively splits the data into purer subsets based on a splitting criterion until some stopping condition is met.

Mini datathon

Kunal Jain

The document summarizes an Analytics Vidhya meetup event. It discusses that the meetups will occur once a month, with the next one on May 24th. It aims to provide networking and learning around data science, big data, machine learning and IoT. It introduces the volunteer organizers and outlines the agenda, which includes an introduction, discussing the model building lifecycle, data exploration techniques, and modeling techniques like logistic regression, decision trees, random forests, and SVMs. It provides details on practicing these techniques by predicting survival on the Titanic dataset.

decision tree machine learning model for classification

Shruti Jamsandekar

Machine learning classification model based on decision tree

Introduction to ML.NET

Marco Parenzan

Writing machine learning code is now possible with the .NET native library ML.NET, which has recently reached its 1.0 milestone. Let's look at what we can do with this library and which scenarios can be handled.

2023 Supervised Learning for Orange3 from scratch

FEG

This document provides an overview of supervised learning and decision tree models. It discusses supervised learning techniques for classification and regression. Decision trees are explained as a method that uses conditional statements to classify examples based on their features. The document reviews node splitting criteria like information gain that help determine the most important features. It also discusses evaluating models for overfitting/underfitting and techniques like bagging and boosting in random forests to improve performance. Homework involves building a classification model on a healthcare dataset and reporting the results.

Primer on major data mining algorithms

Vikram Sankhala IIT, IIM, Ex IRS, FRM, Fin.Engr

This document provides an overview of major data mining algorithms, including supervised learning techniques like decision trees, random forests, support vector machines, naive Bayes, and logistic regression. Unsupervised techniques discussed include clustering algorithms like k-means and EM, as well as association rule learning using the Apriori algorithm. Application areas and advantages/disadvantages of each technique are described. Libraries for implementing these algorithms in Python and R are also listed.

Intro to ml_2021

Sanghamitra Deb

Machine learning can be used to predict whether a user will purchase a book on an online book store. Features about the user, book, and user-book interactions can be generated and used in a machine learning model. A multi-stage modeling approach could first predict if a user will view a book, and then predict if they will purchase it, with the predicted view probability as an additional feature. Decision trees, logistic regression, or other classification algorithms could be used to build models at each stage. This approach aims to leverage user data to provide personalized book recommendations.

DataMiningOverview_Galambos_2015_06_04.pptx

Akash527744

This document provides an overview of data mining techniques for predictive modeling, including classification and regression trees (CART), chi-squared automatic interaction detection (CHAID), neural networks, bagging, boosting, and examples of applying these techniques using SAS Enterprise Miner. It discusses data preparation, partitioning data into training, validation and test sets, handling missing data, selecting optimal tree size to avoid overfitting, and summarizes a preliminary decision tree model for predicting student GPA.

Footprinting, Enumeration, Scanning, Sniffing, Social Engineering

MubashirHussain792093

Footprinting, Enumeration, Scanning, Sniffing, Social Engineering

Machine Learning Unit-5 Decesion Trees & Random Forest.pdf

AdityaSoraut

It's all about machine learning. Machine learning is a field of artificial intelligence (AI) that focuses on the development of algorithms and statistical models that enable computers to perform tasks without explicit programming instructions. Instead, these algorithms learn from data, identifying patterns, and making decisions or predictions based on that data.

There are several types of machine learning approaches, including:

Supervised Learning: In this approach, the algorithm learns from labeled data, where each example is paired with a label or outcome. The algorithm aims to learn a mapping from inputs to outputs, such as classifying emails as spam or not spam.

Unsupervised Learning: Here, the algorithm learns from unlabeled data, seeking to find hidden patterns or structures within the data. Clustering algorithms, for instance, group similar data points together without any predefined labels.

Semi-Supervised Learning: This approach combines elements of supervised and unsupervised learning, typically by using a small amount of labeled data along with a large amount of unlabeled data to improve learning accuracy.

Reinforcement Learning: This paradigm involves an agent learning to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties, enabling it to learn the optimal behavior to maximize cumulative rewards over time.

Machine learning algorithms can be applied to a wide range of tasks, including:

Classification: Assigning inputs to one of several categories. For example, classifying whether an email is spam or not.

Regression: Predicting a continuous value based on input features. For instance, predicting house prices based on features like square footage and location.

Clustering: Grouping similar data points together based on their characteristics.

Dimensionality Reduction: Reducing the number of input variables to simplify analysis and improve computational efficiency.

Recommendation Systems: Predicting user preferences and suggesting items or actions accordingly.

Natural Language Processing (NLP): Analyzing and generating human language text, enabling tasks like sentiment analysis, machine translation, and text summarization.

Machine learning has numerous applications across various domains, including healthcare, finance, marketing, cybersecurity, and more. It continues to be an area of active research and

Decision tree for data mining and computer

tttiba

The document discusses decision trees and their algorithms. It introduces decision trees, describing their structure as having root, internal, and leaf nodes. It then discusses Hunt's algorithm, the basis for decision tree induction algorithms like ID3 and C4.5. Hunt's algorithm grows a decision tree recursively by partitioning training records into purer subsets based on attribute tests. The document also covers methods for expressing test conditions based on attribute type, measures for selecting the best split like information gain, and advantages and disadvantages of decision trees.

Mini datathon - Bengaluru

Kunal Jain

This document provides an agenda for a meetup on data science topics. The meetup will be held once a month, with the next one on June 14th. It aims to provide the best networking and learning platform in Bangalore for areas like data science, big data, and machine learning. The agenda includes introductions, an overview of the model building lifecycle, data exploration and feature engineering techniques, and modeling techniques like logistic regression, decision trees, random forests, and SVM. Teams will be formed to predict whether bids are from humans or robots using these techniques. Resources for implementing the techniques in Python and R are also provided.

Data Mining - The Big Picture!

Khalid Salama

Recently, in the fields of Business Intelligence and Data Management, everybody is talking about data science, machine learning, predictive analytics and many other “clever” terms with promises to turn your data into gold. In these slides, we present the big picture of data science and machine learning. First, we define the context for data mining from a BI perspective, and try to clarify various buzzwords in this field. Then we give an overview of the machine learning paradigms. After that, we discuss, at a high level, the various data mining tasks, techniques and applications. Next, we take a quick tour through the Knowledge Discovery Process. Screenshots from demos will be shown, and finally we conclude with some takeaway points.

Decision Tree Classification Algorithm.pptx

PriyadharshiniG41

The document discusses decision tree classification algorithms. It defines key concepts like decision nodes, leaf nodes, splitting, pruning, and describes how a decision tree works. It starts with the root node and uses attribute selection measures like information gain or Gini index to recursively split nodes into subtrees until reaching leaf nodes. Decision trees can model human decision making and have intuitive tree structures, though they may overfit and have complexity issues with many layers.

Causal Random Forest

Bong-Ho Lee

Causal random forests are used to estimate heterogeneous treatment effects by splitting individuals into buckets defined by variable values. The splitting is done by regression trees that minimize an extended mean squared error, which rewards heterogeneity between leaves while penalizing small leaves. This honest sampling and splitting approach allows for unbiased estimates of conditional average treatment effects within subgroups. Causal random forests relax assumptions of traditional methods like propensity score matching and can help identify which subgroups experience the largest effects from a treatment or policy.

Comparitive Analysis .pptx Footprinting, Enumeration, Scanning, Sniffing, Soc...

MubashirHussain792093

Footprinting, Enumeration, Scanning, Sniffing, Social Engineering

DECISION TREE AND PROBABILISTIC MODELS.pptx

GoodReads1

This presentation covers topics like constructing decision trees and unsupervised learning.

General Tips for participating Kaggle Competitions

Mark Peng

The slides of a talk at Spark Taiwan User Group to share my experience and some general tips for participating in Kaggle competitions.

Decision tree

SEMINARGROOT

The document discusses various decision tree learning methods. It begins by defining decision trees and issues in decision tree learning, such as how to split training records and when to stop splitting. It then covers impurity measures like misclassification error, Gini impurity, information gain, and variance reduction. The document outlines algorithms like ID3, C4.5, C5.0, and CART. It also discusses ensemble methods like bagging, random forests, boosting, AdaBoost, and gradient boosting.

Creativity and Curiosity - The Trial and Error of Data Science

DamianMingle

This document discusses the process of data science and machine learning model building. It begins by outlining the many options available at each step, from programming languages and visualization tools to model types and tuning techniques. It then describes a structured 5-step process for knowledge discovery: 1) define the goal, 2) explore the data, 3) prepare the data, 4) choose and evaluate models, and 5) ensemble techniques. For each step, it provides guidance on common tasks and considerations, such as identifying problems in the data, sampling techniques, evaluating model performance, and addressing overfitting. The overall message is that a curious yet structured approach can help remove uncertainty and ensure successful outcomes in data science projects.

Anomaly detection Workshop slides

QuantUniversity

Anomaly detection (or outlier analysis) is the identification of items, events or observations which do not conform to an expected pattern or to other items in a dataset. It is used in applications such as intrusion detection, fraud detection, fault detection and process monitoring in various domains including energy, healthcare and finance.

In this workshop, we will discuss the core techniques in anomaly detection and discuss advances in Deep Learning in this field.

Through case studies, we will discuss how anomaly detection techniques could be applied to various business problems. We will also demonstrate examples using R, Python, Keras and Tensorflow applications to help reinforce concepts in anomaly detection and best practices in analyzing and reviewing results.

What you will learn:

Anomaly Detection: An introduction

Graphical and Exploratory analysis techniques

Statistical techniques in Anomaly Detection

Machine learning methods for Outlier analysis

Evaluating performance in Anomaly detection techniques

Detecting anomalies in time series data

Case study 1: Anomalies in Freddie Mac mortgage data

Case study 2: Auto-encoder based Anomaly Detection for Credit risk with Keras and Tensorflow

Decision Tree.pptx

JayabharathiMuraliku

This document provides an overview of decision tree classification algorithms. It defines key concepts like decision nodes, leaf nodes, splitting, pruning, and explains how a decision tree is constructed using attributes to recursively split the dataset into purer subsets. It also describes techniques like information gain and Gini index that help select the best attributes to split on, and discusses advantages like interpretability and disadvantages like potential overfitting.

Soil Properties and Methods of Determination

Rajani Vyawahare

This PPT covers the index and engineering properties of soil. It includes details on index properties, along with their methods of determination. Various important terms related to soil behavior are explained in detail. The presentation also outlines the experimental procedures for determining soil properties such as water content, specific gravity, plastic limit, and liquid limit, along with the necessary calculations and graph plotting. Additionally, it provides insights to understand the importance of these properties in geotechnical engineering applications.The Golden Gate Bridge a structural marvel inspired by mother nature.pptx

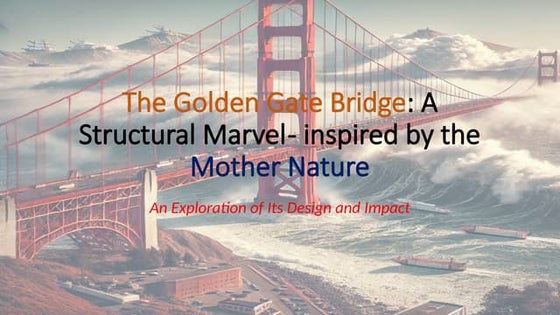

The Golden Gate Bridge a structural marvel inspired by mother nature.pptxAkankshaRawat75

Ã˝

The Golden Gate Bridge is a six-lane suspension bridge that spans the Golden Gate Strait, connecting the city of San Francisco to Marin County, California.

It provides a vital transportation link between the Pacific Ocean and the San Francisco Bay.


In this workshop, we will discuss the core techniques in anomaly detection and discuss advances in Deep Learning in this field.

Through case studies, we will discuss how anomaly detection techniques could be applied to various business problems. We will also demonstrate examples using R, Python, Keras and Tensorflow applications to help reinforce concepts in anomaly detection and best practices in analyzing and reviewing results.

What you will learn:

Anomaly Detection: An introduction

Graphical and Exploratory analysis techniques

Statistical techniques in Anomaly Detection

Machine learning methods for Outlier analysis

Evaluating performance in Anomaly detection techniques

Detecting anomalies in time series data

Case study 1: Anomalies in Freddie Mac mortgage data

Case study 2: Auto-encoder based Anomaly Detection for Credit risk with Keras and TensorflowDecision Tree.pptx

Decision Tree.pptxJayabharathiMuraliku

Ã˝

This document provides an overview of decision tree classification algorithms. It defines key concepts like decision nodes, leaf nodes, splitting, pruning, and explains how a decision tree is constructed using attributes to recursively split the dataset into purer subsets. It also describes techniques like information gain and Gini index that help select the best attributes to split on, and discusses advantages like interpretability and disadvantages like potential overfitting.Comparitive Analysis .pptx Footprinting, Enumeration, Scanning, Sniffing, Soc...

Comparitive Analysis .pptx Footprinting, Enumeration, Scanning, Sniffing, Soc...MubashirHussain792093

Ã˝

Classification.pptx

- 1. CLASSIFICATION Dr. Amanpreet Kaur, Associate Professor, Chitkara University, Punjab

- 2. AGENDA • Introduction • Primary goals • Areas of growth • Timeline • Summary

- 3. CLASSIFICATION • Classification predictive modeling involves assigning a class label to input examples. • Binary classification refers to predicting one of two classes; multi-class classification involves predicting one of more than two classes. • Multi-label classification involves predicting one or more classes for each example; imbalanced classification refers to classification tasks where the distribution of examples across the classes is not equal. • Examples of classification problems include: • Given an example, classify it as spam or not. • Given a handwritten character, classify it as one of the known characters. • Given recent user behavior, classify the user as churn or not.

- 4. EXAMPLE OF CLASSIFICATION • Text categorization (e.g., spam filtering) • Fraud detection • Optical character recognition • Machine vision (e.g., face detection) • Natural-language processing (e.g., spoken language understanding) • Market segmentation (e.g., predict if a customer will respond to a promotion) • Bioinformatics (e.g., classify proteins according to their function)

- 5. DECISION TREE • The decision tree algorithm builds the classification model in the form of a tree structure. • It uses if-then rules that are mutually exclusive and exhaustive for classification. • The process breaks the data down into smaller and smaller subsets while the associated decision tree is developed incrementally. • The final structure looks like a tree with nodes and leaves. The rules are learned sequentially from the training data, one at a time. • Each time a rule is learned, the tuples covered by that rule are removed. The process continues on the training set until a termination condition is met.

- 7. DECISION TREE • Terminology related to decision tree algorithms • Root Node: It represents the entire sample and gets divided into more homogeneous sub-nodes. • Splitting: The process of dividing a node into two or more sub-nodes. • Interior Nodes: They represent tests on an attribute. • Branches: They hold the outcomes of those tests. • Leaf Nodes: Nodes that cannot be split further are called leaf nodes. • Parent and Child Nodes: A node from which sub-nodes are created is called a parent node, and its sub-nodes are called child nodes.
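
The terminology above can be made concrete with a small sketch. The `Node` class, the `predict` helper, and the churn features (`age`, `income`) are hypothetical names invented for illustration; they are not taken from the slides or from any library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical structure for illustration)."""
    feature: Optional[str] = None      # attribute tested at the root or an interior node
    threshold: Optional[float] = None  # the test is: sample[feature] <= threshold
    left: Optional["Node"] = None      # child reached when the test is true
    right: Optional["Node"] = None     # child reached when the test is false
    label: Optional[str] = None        # set only on leaf nodes

    def is_leaf(self) -> bool:
        return self.label is not None


def predict(node: Node, sample: dict) -> str:
    """Follow branches from the root until a leaf node is reached."""
    while not node.is_leaf():
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.label


# The root node tests "age"; its right child is an interior node testing "income".
tree = Node(
    feature="age", threshold=30,
    left=Node(label="no churn"),
    right=Node(
        feature="income", threshold=50_000,
        left=Node(label="churn"),
        right=Node(label="no churn"),
    ),
)

print(predict(tree, {"age": 25, "income": 40_000}))  # no churn
print(predict(tree, {"age": 45, "income": 40_000}))  # churn
```

Each path from the root to a leaf corresponds to one if-then rule, and the root is the parent of both the leaf on its left and the interior node on its right.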

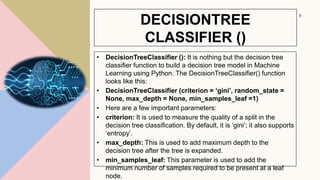

- 8. DECISIONTREECLASSIFIER() • DecisionTreeClassifier(): the scikit-learn class used to build a decision tree classification model in Python. Its constructor looks like this: • DecisionTreeClassifier(criterion='gini', random_state=None, max_depth=None, min_samples_leaf=1) • Here are a few important parameters: • criterion: the function used to measure the quality of a split. The default is 'gini'; 'entropy' is also supported. • max_depth: the maximum depth of the tree. With the default None, nodes are expanded until all leaves are pure or too small to split further. • min_samples_leaf: the minimum number of samples required at a leaf node.
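
As a minimal sketch of how these parameters are used, the following fits a classifier on scikit-learn's bundled iris data. The dataset, the 70/30 split, and the values `max_depth=3` and `min_samples_leaf=5` are assumptions chosen for illustration, not settings from the slides.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Limit the depth and require at least 5 training samples in every leaf.
clf = DecisionTreeClassifier(
    criterion="gini", random_state=0, max_depth=3, min_samples_leaf=5
)
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)  # mean accuracy on the held-out data
print(f"depth={clf.get_depth()}, leaves={clf.get_n_leaves()}, accuracy={accuracy:.2f}")
```

Raising `min_samples_leaf` or lowering `max_depth` gives a smaller tree that is easier to read and less prone to overfitting, usually at some cost in training accuracy.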

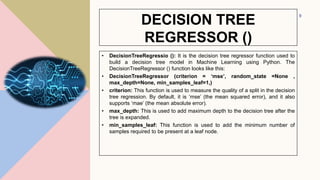

- 9. DECISIONTREEREGRESSOR() • DecisionTreeRegressor(): the scikit-learn class used to build a decision tree regression model in Python. Its constructor looks like this: • DecisionTreeRegressor(criterion='mse', random_state=None, max_depth=None, min_samples_leaf=1) • criterion: the function used to measure the quality of a split. In the signature shown here the default is 'mse' (mean squared error), and 'mae' (mean absolute error) is also supported; scikit-learn 1.0 renamed these to 'squared_error' and 'absolute_error', and the old names were removed in version 1.2. • max_depth: the maximum depth of the tree. With the default None, nodes are expanded until the leaves are pure or too small to split further. • min_samples_leaf: the minimum number of samples required at a leaf node.

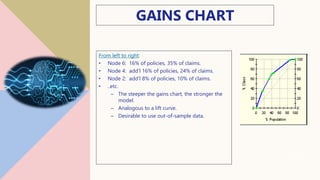

- 10. GAINS CHART From left to right: • Node 6: 16% of policies, 35% of claims. • Node 4: additional 16% of policies, 24% of claims. • Node 2: additional 8% of policies, 10% of claims. • ...etc. – The steeper the gains chart, the stronger the model. – Analogous to a lift curve. – Desirable to use out-of-sample data.
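
The points on the gains chart are running totals of the per-node shares. A short sketch using only the three nodes quoted on the slide (the variable names are illustrative):

```python
# (node, share of policies in %, share of claims in %), in the left-to-right
# order given on the slide.
nodes = [("Node 6", 16, 35), ("Node 4", 16, 24), ("Node 2", 8, 10)]

cum_policies = 0
cum_claims = 0
gains = []  # cumulative points on the gains chart
for name, policies, claims in nodes:
    cum_policies += policies
    cum_claims += claims
    gains.append((name, cum_policies, cum_claims))
    print(f"{name}: {cum_policies}% of policies -> {cum_claims}% of claims")
```

After these three nodes, 40% of policies account for 69% of claims; a model with no predictive power would capture only about 40% of claims at that point.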

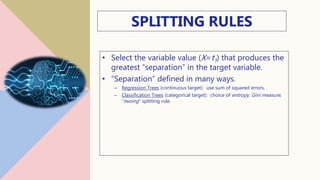

- 11. SPLITTING RULES • Select the variable value (X=t1) that produces the greatest “separation” in the target variable. • “Separation” can be defined in many ways. – Regression trees (continuous target): use sum of squared errors. – Classification trees (categorical target): choice of entropy, Gini measure, or the “twoing” splitting rule.

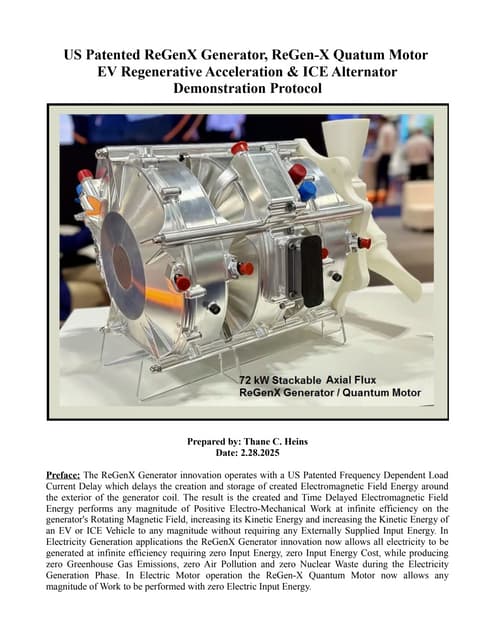

- 12. REGRESSION TREES • Tree-based modeling for a continuous target variable • Most intuitively appropriate method for loss ratio analysis • Find the split that produces the greatest separation in $\sum [y - E(y)]^2$ • i.e., find nodes with minimal within-node variance • and therefore greatest between-node variance • like credibility theory
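
A rough sketch of that split search for a single numeric predictor, in plain Python. The helper names `sse` and `best_split` and the toy data are made up for illustration; real CART implementations are considerably more efficient.

```python
def sse(values):
    """Sum of squared deviations from the mean: sum((y - mean(y))**2)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(x, y):
    """Return (threshold, within_sse) minimising SSE(left) + SSE(right)."""
    pairs = sorted(zip(x, y))
    best = (None, float("inf"))
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [target for _, target in pairs[:i]]
        right = [target for _, target in pairs[i:]]
        within = sse(left) + sse(right)
        if within < best[1]:
            best = (threshold, within)
    return best


# Toy data (made up): the target jumps once x exceeds 3.
x = [1, 2, 3, 4, 5, 6]
y = [0.5, 0.6, 0.4, 1.5, 1.6, 1.4]
threshold, within = best_split(x, y)
print(threshold, round(within, 3), round(sse(y), 3))
```

Because the total sum of squares is fixed for a given node, the split with the smallest within-node SSE is also the one with the largest between-node separation.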

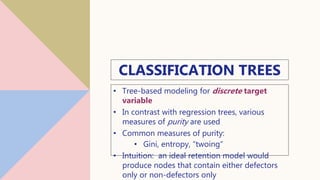

- 13. CLASSIFICATION TREES • Tree-based modeling for a discrete target variable • In contrast with regression trees, various measures of purity are used • Common measures of purity: • Gini, entropy, “twoing” • Intuition: an ideal retention model would produce nodes that contain either defectors only or non-defectors only
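
Two of these measures are easy to compute by hand. The sketch below uses the standard definitions of Gini impurity and Shannon entropy; the function names and the defector/non-defector toy nodes are illustrative.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 - sum(p_k**2) over class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: sum(-p_k * log2(p_k)) over class proportions p_k."""
    n = len(labels)
    return sum(-(c / n) * log2(c / n) for c in Counter(labels).values())


pure = ["defector"] * 10
mixed = ["defector"] * 5 + ["non-defector"] * 5

print(gini(pure), entropy(pure))    # 0.0 0.0 for a pure node
print(gini(mixed), entropy(mixed))  # 0.5 1.0 for a 50/50 node
```

Both measures are zero for the ideal node described above and reach their maximum when the classes are evenly mixed, so a good split is one that lowers the weighted impurity of the child nodes.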

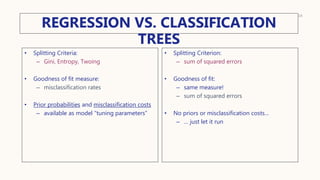

- 14. REGRESSION VS. CLASSIFICATION TREES Classification trees: • Splitting criteria: – Gini, entropy, twoing • Goodness-of-fit measure: – misclassification rates • Prior probabilities and misclassification costs – available as model “tuning parameters” Regression trees: • Splitting criterion: – sum of squared errors • Goodness of fit: – same measure! – sum of squared errors • No priors or misclassification costs… – … just let it run

- 15. HOW CART SELECTS THE OPTIMAL TREE • Use cross-validation (CV) to select the optimal decision tree. • Built into the CART algorithm. – Essential to the method; not an add-on • Basic idea: “grow the tree” out as far as you can, then “prune back”. • CV tells you when to stop pruning.

- 16. GROWING AND PRUNING • One approach: stop growing the tree early. • But how do you know when to stop? • CART: just grow the tree all the way out, then prune back. • Sequentially collapse nodes that result in the smallest change in purity. • This is “weakest link” pruning.
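
The grow-then-prune idea can be sketched with scikit-learn, whose minimal cost-complexity pruning (`ccp_alpha`) is a weakest-link scheme. This is an approximation of the procedure described on the slides, not the original CART software; the breast cancer dataset and the 5-fold setting are assumptions made for the example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Grow the full tree, then list the alphas at which nodes get collapsed.
full_tree = DecisionTreeClassifier(random_state=0).fit(X, y)
path = full_tree.cost_complexity_pruning_path(X, y)

# Score each pruned tree with 5-fold cross-validation and keep the best alpha.
# The last alpha is skipped because it prunes the tree down to the root alone.
scores = []
for alpha in path.ccp_alphas[:-1]:
    alpha = max(float(alpha), 0.0)  # guard against tiny negative rounding errors
    pruned = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha)
    scores.append((cross_val_score(pruned, X, y, cv=5).mean(), alpha))

best_score, best_alpha = max(scores)
final_tree = DecisionTreeClassifier(random_state=0, ccp_alpha=best_alpha).fit(X, y)
print(f"leaves: {full_tree.get_n_leaves()} -> {final_tree.get_n_leaves()}, "
      f"CV accuracy: {best_score:.3f}")
```

Each alpha in the pruning path corresponds to one more collapsed subtree, so scanning the path with cross-validation is one way to decide how far back to prune.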

- 17. CART ADVANTAGES • Nonparametric (no probabilistic assumptions) • Automatically performs variable selection • Uses any combination of continuous/discrete variables – Very nice feature: ability to automatically bin massively categorical variables into a few categories. • zip code, business class, make/model… • Discovers “interactions” among variables – Good for “rules” search – Hybrid GLM-CART models

- 18. CART DISADVANTAGES • The model is a step function, not a continuous score • So if a tree has 10 leaf nodes, yhat can only take on 10 possible values. • MARS improves this. • Might take a large tree to get good lift • But then hard to interpret • Data gets chopped thinner at each split • Instability of model structure • Correlated variables → random data fluctuations could result in entirely different trees. • CART does a poor job of modeling linear structure
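
The step-function limitation is easy to see on synthetic data. In this sketch the linear signal, the noise level, and the cap of 10 leaves (`max_leaf_nodes=10`) are assumptions made for the demonstration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(500, 1))
y = 2.0 * X[:, 0] + rng.normal(0, 1, size=500)  # linear signal plus noise

# Cap the tree at 10 leaves; every prediction is the mean target of one leaf.
tree = DecisionTreeRegressor(max_leaf_nodes=10, random_state=0).fit(X, y)
predictions = tree.predict(X)

n_leaves = tree.get_n_leaves()
n_distinct = len(np.unique(predictions))
print(f"{n_leaves} leaves -> {n_distinct} distinct predicted values")
```

Even though the underlying relationship is a straight line, the tree can only approximate it with a staircase of at most 10 levels, which also illustrates why trees handle linear structure poorly.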

- 19. USES OF CART • Building predictive models – Alternative to GLMs, neural nets, etc. • Exploratory Data Analysis – Breiman et al.: a different view of the data. – You can build a tree on nearly any data set with minimal data preparation. – Which variables are selected first? – Interactions among variables – Take note of cases where CART keeps re-splitting the same variable (suggests a linear relationship) • Variable Selection – CART can rank variables – Alternative to stepwise regression

- 20. REFERENCES E-books: Peter Harrington, “Machine Learning in Action”, DreamTech Press; Ethem Alpaydın, “Introduction to Machine Learning”, MIT Press. Video links: https://www.youtube.com/watch?v=atw7hUrg3_8 https://www.youtube.com/watch?v=FuJVLsZYkuE