Computer Vision and GenAI for Geoscientists.pptx

- 1. Computer Vision and GenAI in Geoscience. Yohanes Nuwara, Petroleum Engineers Association (PEA), Trondheim, 28.07.2024

- 2. Yohanes Nuwara
  - Career:
    - Data scientist at Prores AS, Norway (2024-): computer vision for porosity and permeability prediction from core images
    - Lead data analyst at APP Sinarmas, Indonesia (2022-2023): sustainability dashboard for management
    - Expert data scientist at APP Sinarmas, Indonesia (2022-2023): LiDAR and computer vision for UAV remote sensing
    - Research engineer at OYO Corporation, Japan (2020-2022): Distributed Acoustic Sensing (DAS) for earthquake seismology
  - Education:
    - Politecnico di Milano, Italy (2023-): Master's in Business Analytics and Big Data
    - Bandung Institute of Technology, Indonesia (2015-2019): Bachelor's in Geophysical Engineering

- 3. Outline
  - What is computer vision?
  - Computer vision methods and models
  - Use case 1: Automatic rock typing using a segmentation model
  - Use case 2: Boulder detection for seabed mapping
  - Challenges in computer vision
  - What is Generative AI?
  - Generative vision models
  - Conclusion

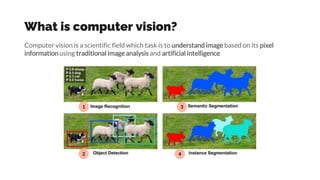

- 5. What is computer vision? Computer vision is a scientific field whose task is to understand images from their pixel information, using traditional image analysis and artificial intelligence.

- 6. Why is computer vision growing so fast?
  - Rapid growth of computers and hardware chips
  - Bigger, more modern, and more secure data storage
  - Rapid evolution of AI computer vision models
  - Increasingly advanced optics and camera technologies

- 7. Computer vision in geoscience: seismic interpretation, petrophysics, geology, remote sensing

- 8. Methods of computer vision

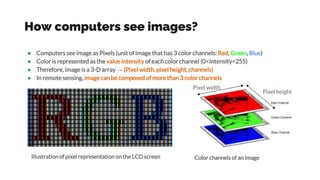

- 9. How do computers see images?
  - Computers see an image as pixels (the unit of an image, with 3 color channels: red, green, blue)
  - Color is represented as the intensity value of each color channel (0 ≤ intensity ≤ 255 for 8-bit images)
  - Therefore, an image is a 3-D array → (pixel width, pixel height, channels)
  - In remote sensing, an image can be composed of more than 3 color channels
  - Figure labels: illustration of pixel representation on an LCD screen; color channels of an image; pixel width; pixel height
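
The pixel-array view above can be tried out with a small sketch. It assumes NumPy and a made-up 2 x 3 image; note that NumPy and most imaging libraries order the axes as (height, width, channels), which is an implementation convention and not something stated on the slide.

```python
import numpy as np

# A tiny synthetic RGB image: 2 pixels high, 3 pixels wide, 3 color channels.
# Axis order here is (height, width, channels), the usual NumPy convention.
image = np.zeros((2, 3, 3), dtype=np.uint8)

image[0, 0] = [255, 0, 0]      # top-left pixel: pure red
image[0, 1] = [0, 255, 0]      # next pixel: pure green
image[1, 2] = [255, 255, 255]  # bottom-right pixel: white

height, width, channels = image.shape
red_channel = image[:, :, 0]   # 2-D array holding only the red intensities

# A hypothetical 5-band remote sensing tile simply has a longer channel axis.
multispectral = np.zeros((2, 3, 5), dtype=np.uint8)
```

Each channel is a plain 2-D grid of intensities, which is why the same array operations work for RGB photos and for multi-band remote sensing images.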

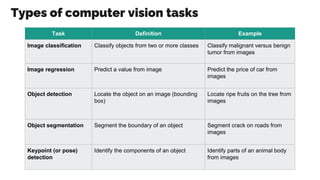

- 10. Types of computer vision tasks

  | Task | Definition | Example |
  | --- | --- | --- |
  | Image classification | Classify objects into two or more classes | Classify malignant versus benign tumors from images |
  | Image regression | Predict a value from an image | Predict the price of a car from images |
  | Object detection | Locate an object in an image (bounding box) | Locate ripe fruits on a tree from images |
  | Object segmentation | Segment the boundary of an object | Segment cracks on roads from images |
  | Keypoint (or pose) detection | Identify the components of an object | Identify parts of an animal body from images |

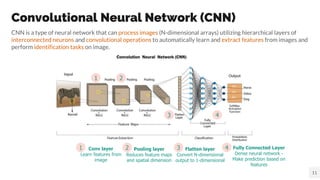

- 11. Convolutional Neural Network (CNN): a CNN is a type of neural network that processes images (N-dimensional arrays). It uses hierarchical layers of interconnected neurons and convolution operations to automatically learn and extract features from images and to perform identification tasks on them.
  - Conv layer: learns features from the image
  - Pooling layer: reduces the spatial dimensions of the feature maps
  - Flatten layer: converts the N-dimensional output to 1-dimensional
  - Fully connected layer: dense neural network that makes predictions based on the features
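
The four layer types can be followed through a single forward pass. This is a minimal NumPy sketch under stated assumptions: the image is random, the filter is a fixed edge detector (a real CNN learns its filters), the dense weights are random, and the 3 output classes are hypothetical.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2-D convolution (cross-correlation, as used in CNN layers)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

rng = np.random.default_rng(0)
image = rng.random((6, 6))                    # toy single-channel image
kernel = np.array([[1.0, 0.0, -1.0]] * 3)     # vertical-edge filter

features = np.maximum(conv2d(image, kernel), 0.0)  # conv layer + ReLU, shape (4, 4)
pooled = max_pool2d(features)                      # pooling layer, shape (2, 2)
flat = pooled.flatten()                            # flatten layer, shape (4,)

weights = rng.normal(size=(flat.size, 3))          # fully connected layer
logits = flat @ weights
probs = np.exp(logits - logits.max())
probs /= probs.sum()                               # softmax class probabilities
```

The shapes shrink at each stage (6 x 6 to 4 x 4 to 2 x 2 to a vector of 4), which is the "reduce, then flatten, then predict" pattern the slide describes.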

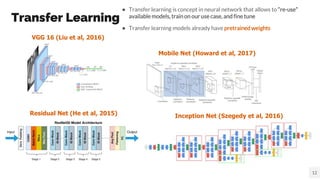

- 12. Transfer Learning
  - Transfer learning is a concept in neural networks that allows us to "re-use" available models: train them on our use case and fine-tune them
  - Transfer learning models come with pretrained weights
  - Examples: Residual Net (He et al, 2015), Inception Net (Szegedy et al, 2016), VGG 16 (Liu et al, 2016), Mobile Net (Howard et al, 2017)

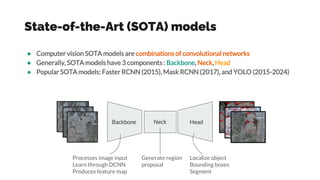

- 13. State-of-the-Art (SOTA) models
  - Computer vision SOTA models are combinations of convolutional networks
  - Generally, SOTA models have 3 components: Backbone, Neck, Head
  - Popular SOTA models: Faster RCNN (2015), Mask RCNN (2017), and YOLO (2015-2024)
  - Figure labels: Backbone, Neck, Head; processes image input; learns through DCNN; produces feature map; generates region proposals; localizes object; bounding boxes; segment

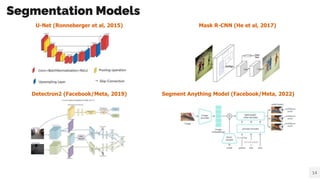

- 14. Segmentation Models: U-Net (Ronneberger et al, 2015), Mask R-CNN (He et al, 2017), Detectron2 (Facebook/Meta, 2019), Segment Anything Model (Facebook/Meta, 2023)

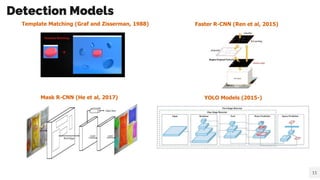

- 15. Detection Models: Template Matching (Graf and Zisserman, 1988), Faster R-CNN (Ren et al, 2015), Mask R-CNN (He et al, 2017), YOLO Models (2015-)

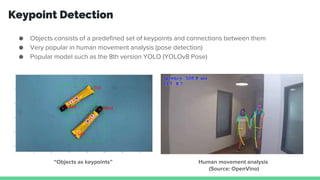

- 16. Keypoint Detection
  - Objects consist of a predefined set of keypoints and the connections between them
  - Very popular in human movement analysis (pose detection)
  - Popular models include the 8th version of YOLO (YOLOv8 Pose)
  - Figure labels: "Objects as keypoints"; human movement analysis (Source: OpenVino)

- 17. Use case 1: Automatic rock typing from core

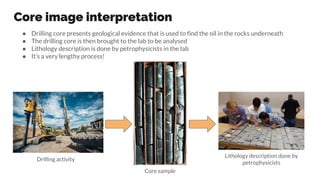

- 18. Core image interpretation
  - A drilling core provides geological evidence that is used to find oil in the rocks underneath
  - The drilling core is then brought to the lab to be analysed
  - Lithology description is done by petrophysicists in the lab
  - It's a very lengthy process!
  - Figure labels: drilling activity; core sample; lithology description done by petrophysicists

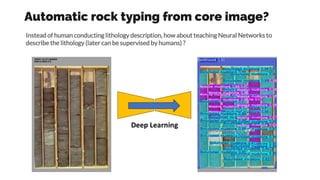

- 19. Automatic rock typing from core images? Instead of humans conducting the lithology description, how about teaching neural networks to describe the lithology (with the result later reviewed by humans)?

- 20. Labelling and annotation: 500 images are carefully segmented into different lithology classes, namely: Bioturbated mudstone/sandstone, Massive mudstone/sandstone, Parallel-laminated mudstone/sandstone, Cross-bedded/graded-bedded sandstone, Current-rippled sandstone, Conglomerate, Fissile shale, and Heterolithic

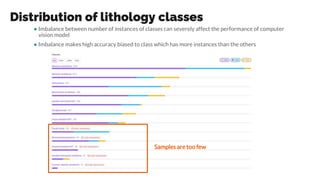

- 21. Distribution of lithology classes
  - An imbalance in the number of instances per class can severely affect the performance of a computer vision model
  - With imbalanced data, a high accuracy is biased towards the classes that have more instances than the others
  - Figure label: samples are too few (for some classes)
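
Why imbalance makes accuracy misleading can be shown with a toy example. The class counts below are hypothetical and are not the distribution from the talk; the "model" ignores its input and always predicts the most frequent class.

```python
from collections import Counter

# Hypothetical label counts, chosen only to illustrate the effect.
labels = ["massive sandstone"] * 90 + ["conglomerate"] * 7 + ["fissile shale"] * 3

counts = Counter(labels)
majority_class = counts.most_common(1)[0][0]

# A "model" that ignores the image and always predicts the majority class.
predictions = [majority_class] * len(labels)

accuracy = sum(p == t for p, t in zip(predictions, labels)) / len(labels)

# Per-class recall exposes what the overall accuracy hides.
recall = {
    cls: sum(p == t == cls for p, t in zip(predictions, labels)) / n
    for cls, n in counts.items()
}
```

Here the overall accuracy is 90% even though the two minority classes are never predicted, so per-class metrics are needed alongside accuracy.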

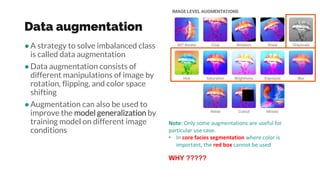

- 22. Data augmentation
  - One strategy to address imbalanced classes is data augmentation
  - Data augmentation consists of different manipulations of an image, such as rotation, flipping, and color space shifting
  - Augmentation can also improve model generalization by training the model on different image conditions
  - Note: only some augmentations are useful for a particular use case. In core facies segmentation, where color is important, the augmentations in the red box cannot be used. Why?
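
A minimal sketch of the augmentations named above, assuming NumPy and a random toy image. In a real segmentation workflow the masks or polygons must be transformed together with the image; that step is omitted here.

```python
import numpy as np

rng = np.random.default_rng(42)
# Toy image with axes (height, width, channels).
image = rng.integers(0, 256, size=(4, 6, 3), dtype=np.uint8)

flipped_lr = image[:, ::-1, :]      # horizontal flip
flipped_ud = image[::-1, :, :]      # vertical flip
rotated_90 = np.rot90(image, k=1)   # 90-degree rotation, shape becomes (6, 4, 3)

# Brightness shift: this changes the intensity values themselves, so it
# should be used with care when color carries the class information.
shift = 30
brighter = np.clip(image.astype(np.int16) + shift, 0, 255).astype(np.uint8)
```

Flips and rotations only rearrange pixels, while color shifts alter them. That difference is one way to think about the question on the slide: if lithology is recognized by color, changing the colors can change the apparent class.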

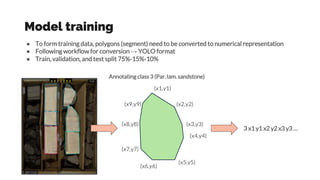

- 23. Model training
  - To form the training data, polygons (segments) need to be converted to a numerical representation
  - The conversion follows the YOLO format: for example, a polygon with vertices (x1,y1), (x2,y2), …, (x9,y9) annotating class 3 (parallel-laminated sandstone) becomes `3 x1 y1 x2 y2 x3 y3 …`
  - Train, validation, and test split: 75%-15%-10%
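
The conversion and split can be sketched as follows. This assumes the Ultralytics-style YOLO segmentation label layout (class index followed by polygon coordinates normalized to the 0 to 1 range by image width and height); the function names, the polygon, and the image size are hypothetical.

```python
import random

def polygon_to_yolo(class_id, polygon, img_width, img_height):
    """Return one label line 'class x1 y1 x2 y2 ...' with coordinates
    normalized to [0, 1] by the image width and height."""
    coords = []
    for x, y in polygon:
        coords.append(f"{x / img_width:.6f}")
        coords.append(f"{y / img_height:.6f}")
    return " ".join([str(class_id)] + coords)

def split_dataset(items, train=0.75, val=0.15, seed=0):
    """Shuffle and split into train/validation/test; test gets the remainder."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

# Hypothetical polygon in pixel coordinates on a 200 x 1000 px core image.
label = polygon_to_yolo(3, [(20, 100), (180, 100), (180, 400), (20, 400)], 200, 1000)

# 500 image indices split 75%-15%-10%.
train_set, val_set, test_set = split_dataset(range(500))
```

With 500 annotated images, this split gives 375 training, 75 validation, and 50 test images.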

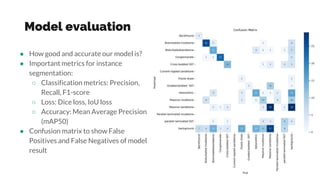

- 24. Model evaluation
  - How good and accurate is our model?
  - Important metrics for instance segmentation:
    - Classification metrics: Precision, Recall, F1-score
    - Loss: Dice loss, IoU loss
    - Accuracy: Mean Average Precision (mAP50)
  - A confusion matrix shows the False Positives and False Negatives of the model result
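
The metrics listed above follow from a few counts. The sketch below assumes NumPy, made-up true positive/false positive/false negative counts, and two toy 4 x 4 masks; it is not the evaluation code used in the talk.

```python
import numpy as np

def precision_recall_f1(tp, fp, fn):
    """Classification metrics from true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

def iou_and_dice(pred_mask, true_mask):
    """Intersection over Union and Dice coefficient of two binary masks."""
    pred = pred_mask.astype(bool)
    true = true_mask.astype(bool)
    intersection = np.logical_and(pred, true).sum()
    union = np.logical_or(pred, true).sum()
    total = pred.sum() + true.sum()
    iou = intersection / union if union else 1.0
    dice = 2 * intersection / total if total else 1.0
    return float(iou), float(dice)

precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=4)

true_mask = np.zeros((4, 4), dtype=bool)
true_mask[0:2, :] = True          # ground truth covers rows 0-1
pred_mask = np.zeros((4, 4), dtype=bool)
pred_mask[1:3, :] = True          # prediction covers rows 1-2

iou, dice = iou_and_dice(pred_mask, true_mask)
dice_loss = 1.0 - dice
# mAP50 counts a prediction as a true positive only when IoU >= 0.5.
is_match_at_50 = iou >= 0.5
```

In this toy case the masks overlap on one row out of three, so the IoU is 1/3 and the prediction would not count as a match at the 0.5 threshold used by mAP50.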

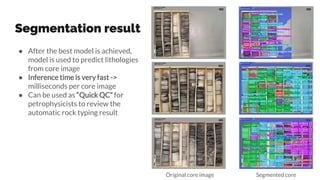

- 25. Segmentation result
  - Once the best model is obtained, it is used to predict lithologies from core images
  - Inference is very fast: milliseconds per core image
  - Can be used as a "Quick QC" for petrophysicists to review the automatic rock typing result
  - Figure labels: original core image; segmented core

- 26. Use case 2: Boulder detection for seabed mapping

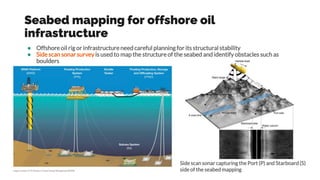

- 27. Seabed mapping for offshore oil infrastructure
  - Offshore oil rigs and infrastructure need careful planning for structural stability
  - A side scan sonar survey is used to map the structure of the seabed and identify obstacles such as boulders
  - Figure label: side scan sonar capturing the Port (P) and Starboard (S) sides during seabed mapping

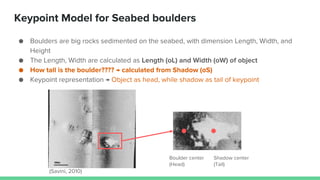

- 28. Keypoint model for seabed boulders
  - Boulders are big rocks deposited on the seabed, with dimensions Length, Width, and Height
  - Length and Width are calculated as the Length (oL) and Width (oW) of the object
  - How tall is the boulder? → Height is calculated from the Shadow (oS)
  - Keypoint representation → the object is the head and the shadow is the tail of the keypoint
  - Figure labels: boulder center (Head); shadow center (Tail) (Savini, 2010)
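
The slide does not state how the height is derived from the shadow. One commonly used approximation for side scan sonar is the similar-triangles relation: height = shadow length × sonar altitude / (range to object + shadow length). The sketch below assumes that relation, a flat seabed, and hypothetical survey values; the function and parameter names are illustrative only.

```python
def boulder_height(shadow_length, sonar_altitude, range_to_boulder):
    """Estimate object height from its acoustic shadow by similar triangles.

    Assumption (not from the talk): flat seabed, all lengths in the same unit,
    and range_to_boulder measured from the sonar to where the shadow starts.
    """
    if shadow_length < 0 or sonar_altitude <= 0 or range_to_boulder <= 0:
        raise ValueError("shadow must be >= 0; altitude and range must be > 0")
    return shadow_length * sonar_altitude / (range_to_boulder + shadow_length)

# Hypothetical values in metres.
height = boulder_height(shadow_length=2.0, sonar_altitude=10.0, range_to_boulder=38.0)
```

With these made-up numbers the estimate is 0.5 m. A longer shadow at the same range and altitude gives a taller boulder, which is why the shadow keypoint carries the height information.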

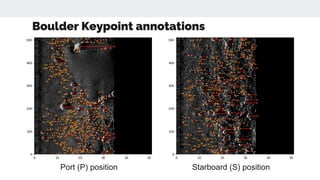

- 29. Boulder keypoint annotations: Port (P) position and Starboard (S) position

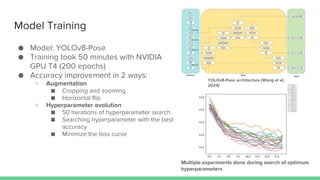

- 30. Model Training
  - Model: YOLOv8-Pose
  - Training took 50 minutes on an NVIDIA T4 GPU (200 epochs)
  - Accuracy was improved in 2 ways:
    - Augmentation
      - Cropping and zooming
      - Horizontal flip
    - Hyperparameter evolution
      - 50 iterations of hyperparameter search
      - Search for the hyperparameters with the best accuracy
      - Minimize the loss curve
  - Figure labels: multiple experiments done during the search for optimum hyperparameters; YOLOv8-Pose architecture (Wang et al, 2024)
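
The idea behind hyperparameter evolution can be sketched as a generic mutate-and-select loop. This is not the tuner used in the talk: `toy_fitness` is a hypothetical stand-in for "train the model and return validation accuracy", and the hyperparameter names and starting values are made up.

```python
import random

def toy_fitness(params):
    """Hypothetical stand-in for training + validation; peaks at lr=0.01, momentum=0.9."""
    return 1.0 - abs(params["lr"] - 0.01) * 10 - abs(params["momentum"] - 0.9)

def evolve(initial, fitness_fn, iterations=50, sigma=0.2, seed=0):
    """Mutate the best-so-far hyperparameters and keep a candidate only if it scores higher."""
    rng = random.Random(seed)
    best, best_score = dict(initial), fitness_fn(initial)
    history = [best_score]
    for _ in range(iterations):
        # Mutate each hyperparameter by a random multiplicative factor.
        candidate = {k: v * (1 + rng.gauss(0, sigma)) for k, v in best.items()}
        score = fitness_fn(candidate)
        if score > best_score:
            best, best_score = candidate, score
        history.append(best_score)
    return best, best_score, history

initial_params = {"lr": 0.05, "momentum": 0.7}
best_params, best_score, history = evolve(initial_params, toy_fitness, iterations=50)
```

Each iteration corresponds to one experiment in the search. Because only improvements are kept, the best score never decreases across the 50 iterations.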

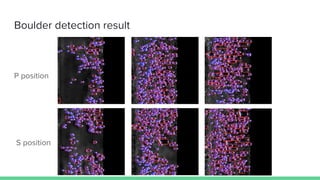

- 31. Boulder detection result: P position and S position

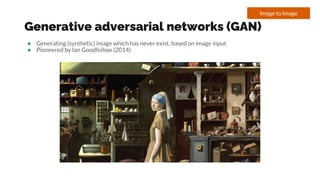

- 33. Generative adversarial networks (GAN): image to image
  - Generate (synthetic) images that have never existed, based on an image input
  - Pioneered by Ian Goodfellow (2014)

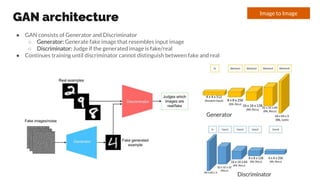

- 34. GAN architecture (image to image)
  - A GAN consists of a Generator and a Discriminator
    - Generator: generates a fake image that resembles the input image
    - Discriminator: judges whether the generated image is fake or real
  - Training continues until the discriminator cannot distinguish between fake and real
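
The generator-versus-discriminator game is usually written as the minimax objective from Goodfellow et al. (2014). The symbols are not on the slide and are introduced here for illustration: $G$ is the generator, $D$ the discriminator, $x$ a real image, and $z$ the generator's input (random noise in the original formulation; image-to-image variants condition on an input image instead).

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to maximize $V$ by scoring real images near 1 and generated images near 0, while the generator tries to minimize it. The stopping condition described above corresponds to $D$ returning about $1/2$ for every image.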

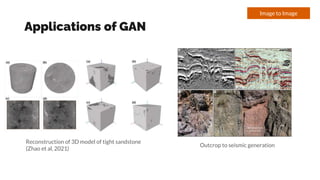

- 35. Applications of GAN (image to image): reconstruction of a 3D model of tight sandstone (Zhao et al, 2021); outcrop to seismic generation

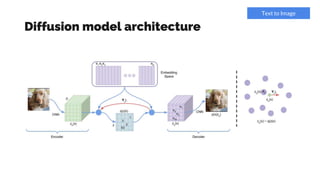

- 36. Diffusion models: text to image
  - Generate (synthetic) images from text supplied by a human
  - Examples: DALL-E by OpenAI, Imagen by Google

- 37. Diffusion model architecture (text to image)

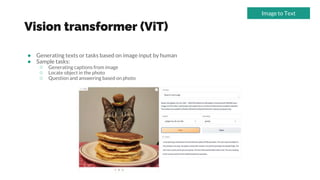

- 38. Vision transformer (ViT): image to text
  - Generates text or performs tasks based on an image supplied by a human
  - Sample tasks:
    - Generating captions from an image
    - Locating an object in a photo
    - Question answering based on a photo

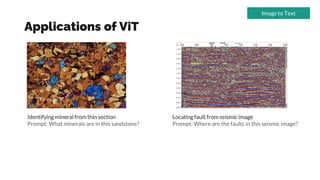

- 39. Applications of ViT (image to text)
  - Identifying minerals from a thin section. Prompt: What minerals are in this sandstone?
  - Locating faults in a seismic image. Prompt: Where are the faults in this seismic image?

- 40. Challenges in computer vision: my paper in Springer's Lecture Notes in Computer Science (2024)

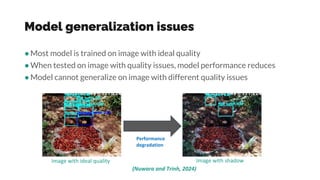

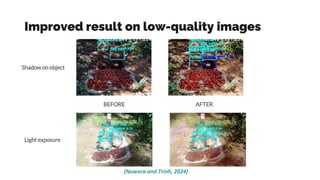

- 41. Image quality issues
  - Images can suffer from quality issues, for example:
    - Resolution reduction: blurred image due to camera movement or haze
    - Occlusion: shadowed image due to obstacles blocking the light
    - Over-exposure: image looks too bright due to excessive light exposure
    - Colour constancy: false colours, with the image tending towards a certain colour
  - Figure labels: shadow; overexposure; yellow constancy (Nuwara and Trinh, 2024)

- 42. Model generalization issues
  - Most models are trained on images with ideal quality
  - When tested on images with quality issues, model performance drops
  - The model cannot generalize to images with different quality issues
  - Figure labels: image with ideal quality; image with shadow; performance degradation (Nuwara and Trinh, 2024)

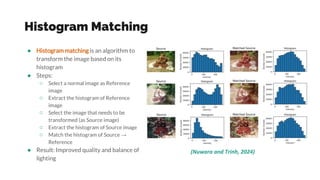

- 43. Histogram Matching
  - Histogram matching is an algorithm that transforms an image so that its histogram follows that of a reference image
  - Steps:
    - Select a normal image as the Reference image
    - Extract the histogram of the Reference image
    - Select the image that needs to be transformed (the Source image)
    - Extract the histogram of the Source image
    - Match the histogram of Source → Reference
  - Result: improved quality and balance of lighting (Nuwara and Trinh, 2024)
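
The steps above can be sketched with the standard cumulative-histogram (CDF) formulation of histogram matching. This assumes NumPy and single-channel 8-bit images; it is an illustrative version, not necessarily the implementation used in the cited paper. For RGB images the function would be applied to each channel separately.

```python
import numpy as np

def match_histogram(source, reference):
    """Remap the gray levels of `source` so its cumulative histogram follows `reference`.

    Both inputs are single-channel uint8 arrays.
    """
    src_hist = np.bincount(source.ravel(), minlength=256)
    ref_hist = np.bincount(reference.ravel(), minlength=256)

    src_cdf = np.cumsum(src_hist) / source.size
    ref_cdf = np.cumsum(ref_hist) / reference.size

    # For each source gray level, pick the first reference level whose
    # cumulative frequency is at least as large.
    lookup = np.searchsorted(ref_cdf, src_cdf, side="left")
    lookup = lookup.clip(0, 255).astype(np.uint8)
    return lookup[source]

# Toy example: a dark source image and a brighter reference image.
source = np.arange(64, dtype=np.uint8).reshape(8, 8)   # gray levels 0..63
reference = source + 100                               # gray levels 100..163
matched = match_histogram(source, reference)
```

In this toy case the matched image takes on the brightness range of the reference while keeping the spatial pattern of the source.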

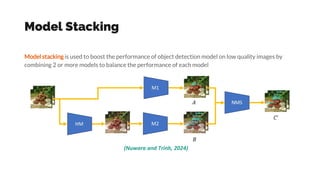

- 44. Model Stacking: model stacking boosts the performance of an object detection model on low-quality images by combining 2 or more models, so that the strengths of each model balance the weaknesses of the others (Nuwara and Trinh, 2024)

- 45. Improved results on low-quality images, before and after: shadow on object; light exposure (Nuwara and Trinh, 2024)

- 46. Conclusion
  - Computer vision makes a huge impact in broad areas of geoscience
  - Two use cases were presented, using segmentation and object detection workflows
  - Generative AI is shaping the future of AI implementation in geoscience

- 47. Thank you!