Connectivity Methodology3.0

- 1. Connectivity Methodology Ver. 3.0 NRDC Sustainable Cities Team Presenter: TL July 15,2015

- 2. Many thx to RND team!

- 3. Outline ŌĆó Key updates ŌĆó Principles ŌĆó Input preparation ŌĆó Interim procedure ŌĆó Output result ŌĆó Evaluation of the Methodology

- 4. Key Updates ŌĆó Powerful ArcGIS (licensed authorization!) ŌĆó Overcome some constrains posed by KMZ preparation ŌĆó Generate random points ŌĆó Select eligible points ŌĆó Batch: python code ŌĆó Update of Cityname.xlsx file ŌĆó Skipping of sheet Random_Points ŌĆó Important annotation: the unit of average altitude is kilometers

- 5. Principles ŌĆó The process willŌĆ” ŌĆó generate 1000 random points for each city; ŌĆó Increasing capacity is feasible ŌĆó allow us to choose 40 eligible points to measure the walking distances; ŌĆó Identify if the interim points meet the standard (od distance = 500m) ŌĆó Discard the points that do not meet the requirement ŌĆó Go back to the bank test if a new eligible point meet the requirement ŌĆó get average walking distances from the final 40 points; ŌĆó The process need to beŌĆ” ŌĆó strictly random in point selecting ŌĆó accurate in calculating the distance ŌĆó comparable across all cities ŌĆó efficient

- 6. Input Preparation ŌĆó Google Earth Pro: ŌĆó citynameRND.kmz (Many thanks to Ning, Xiao, Judy, Chenzi, Danlu) ŌĆó Same setting requirement: degree (decimal) ŌĆó Microsoft Excel: ŌĆó cityname.xlsx ŌĆó (Random_Points_Value), Eligible_Points, (Eligible_Points_Raw), Square_Points, Distance ŌĆó ESRI ArcGIS 10.X: ŌĆó Manually geoprocessing ŌĆó Python stand-alone processing ŌĆó (Windows environment is strongly recommended!) ŌĆó VPN and good network

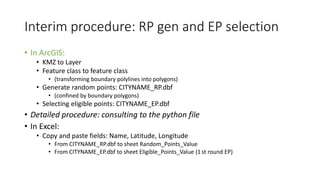

- 7. Interim procedure: RP gen and EP selection ŌĆó In ArcGIS: ŌĆó KMZ to Layer ŌĆó Feature class to feature class ŌĆó (transforming boundary polylines into polygons) ŌĆó Generate random points: CITYNAME_RP.dbf ŌĆó (confined by boundary polygons) ŌĆó Selecting eligible points: CITYNAME_EP.dbf ŌĆó Detailed procedure: consulting to the python file ŌĆó In Excel: ŌĆó Copy and paste fields: Name, Latitude, Longitude ŌĆó From CITYNAME_RP.dbf to sheet Random_Points_Value ŌĆó From CITYNAME_EP.dbf to sheet Eligible_Points_Value (1 st round EP)

- 8. Interim Procedure: Square Points ŌĆó In workbook Square_Points of Nanchang.xlsx: ŌĆó Fill value of NanchangŌĆÖs average altitude in the cell following Average Altitude;

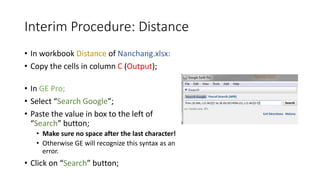

- 9. Interim Procedure: Distance ŌĆó In workbook Distance of Nanchang.xlsx: ŌĆó Copy the cells in column C (Output); ŌĆó In GE Pro; ŌĆó Select ŌĆ£Search GoogleŌĆØ; ŌĆó Paste the value in box to the left of ŌĆ£SearchŌĆØ button; ŌĆó Make sure no space after the last character! ŌĆó Otherwise GE will recognize this syntax as an error. ŌĆó Click on ŌĆ£SearchŌĆØ button;

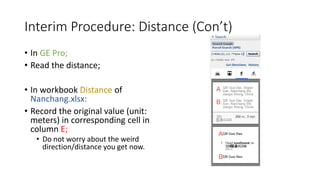

- 10. Interim Procedure: Distance (ConŌĆÖt) ŌĆó In GE Pro; ŌĆó Read the distance; ŌĆó In workbook Distance of Nanchang.xlsx: ŌĆó Record the original value (unit: meters) in corresponding cell in column E; ŌĆó Do not worry about the weird direction/distance you get now.

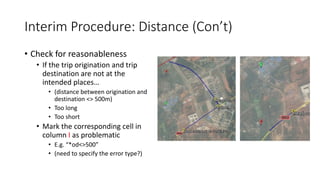

- 11. Interim Procedure: Distance (ConŌĆÖt) ŌĆó Check for reasonableness ŌĆó If the trip origination and trip destination are approximately located at the point eligible pointsŌĆ” ŌĆó You are lucky!

- 12. Interim Procedure: Distance (ConŌĆÖt) ŌĆó Check for reasonableness ŌĆó If the trip origination and trip destination are not at the intended placesŌĆ” ŌĆó (distance between origination and destination <> 500m) ŌĆó Too long ŌĆó Too short ŌĆó Mark the corresponding cell in column I as problematic ŌĆó E.g. ŌĆ£*od<>500ŌĆØ ŌĆó (need to specify the error type?)

- 13. Interim Procedure: Distance (ConŌĆÖt) ŌĆó Complete all 160 (4 square points of each eligible point * 40 eligible points) entries ŌĆó Good luck! ŌĆó Review the notes for problematic results; ŌĆó You have made marks for each pair of eligible point and square point; ŌĆó Look at column I; ŌĆó Check if the note belongs to a problematic eligible point ŌĆó If more than 3/4 (including 3/4) direction/distance results of the eligible point are marked as problematic, we need 2nd round of eligible points selecting; ŌĆó Clear all four results of the problematic eligible point in column E

- 14. Interim Procedure: 2nd round Eligible Points ŌĆó In workbook Eligible_Points of Nanchang.xlsx: ŌĆó Mark all problematic eligible points ŌĆó Find the first backup eligible pointsŌĆ” ŌĆó Directly from Nanchang_RP layer in GE ŌĆó Manually replace the number of the problematic eligible point in column A with the one of backup eligible point; ŌĆó Use a point from the back up list generated in 1st round ŌĆó Use a point from the back up list generated by ArcGIS ŌĆó (time saving) ŌĆó Repeat the Interim procedure: Distance ŌĆó If the back up point is still problematic, continue the process of finding new back up eligible point. ŌĆó Finish the process when no problematic eligible points show up.

- 15. Output Result ŌĆó Save Nanchang.xlsx. ŌĆó The results will keep in workbook Distance;

- 16. Output Result ŌĆó Copy column B, C, and D to Nanchang_EP.csv; ŌĆó Save Nanchang_EP.csv; ŌĆó No need to copy column A; ŌĆó Copy column C, D, and E to Nanchang_Square.csv; ŌĆó Save Nanchang_Square.csv; ŌĆó No need to copy the rest columns; ŌĆó Import Nanchang_EP.csv and Nanchang_Square.csv to Nanchang.kmz in GE Pro; ŌĆó Same procedure of importing Nanchang_RP.csv; ŌĆó Use different colors; ŌĆó Be sure to save to My Places; ŌĆó Save as Nanchang_Square.kmz;

- 17. Evaluation ŌĆó The estimated time of finishing one city is 2-3 hours. ŌĆó The majority of the process could be documented. ŌĆó Use ArcGIS can help increase the randomness in selecting eligible points ŌĆó Strongly depend on the accuracy of boundary and RND boundary ŌĆó Batch processing allows for massive amount of cities to be measured ŌĆó Strictly randomness in RP and EP selecting process ŌĆó Overcome the inconsistency of different coordinate systems ŌĆó WGS-84 and GCJ-02 coordinate system ŌĆó Points are random, so the relative location between points and road network is of no necessary importance in the process.

- 18. Evaluation ŌĆó In Interim Procedure: Distance, it would allow at ┬Į of the results to be inaccurate, which generate inaccuracy. ŌĆó Tolerance level could be lower by only allowing no more than ┬Į result to be problematic ŌĆó < 500m is calculated as 500m ŌĆó Hard to decide whether the distance between an od pair is 500m ŌĆó Usually not! ŌĆó How close? ŌĆó Both not accurate, but the distance seems to be 500m?

- 19. THX!

Editor's Notes

- #7: Mac: importing CSV may be problematic

- #9: The result of Latitude, Longitude, OriLat, OriLong will automatically appear. Functions: C2=CONCATENATE(A2,"-",B2) D2=F2+($I$2/(($I$3+$I$4)*2*PI())*360), E2=G2 D3=F3-($I$2/(($I$3+$I$4)*2*PI())*360), E3=G3 D4=F4, E4=G4+DEGREES(ATAN2(COS($I$2/($I$3+$I$4))-SIN(RADIANS(F4))*SIN(RADIANS(D4)),SIN(RADIANS(90))*SIN($I$2/($I$3+$I$4))*COS(RADIANS(F4)))) D5=F5, E5==G5+DEGREES(ATAN2(COS($I$2/($I$4))-SIN(RADIANS(F5))*SIN(RADIANS(D5)),SIN(RADIANS(270))*SIN($I$2/($I$4))*COS(RADIANS(F5)))) F2=INDEX(Eligibe_Points!C$2:C$41,MATCH(Square_Points!$A2,Eligibe_Points!$B$2:$B$41,0)) G2=INDEX(Eligibe_Points!D$2:D$41,MATCH(Square_Points!$A2,Eligibe_Points!$B$2:$B$41,0))

- #14: The result of Output, Distance, and Average will automatically appear. C2=CONCATENATE("from:",Square_Points!F2,",",Square_Points!G2,"(",Square_Points!A2,")"," to:",Square_Points!D2,",",Square_Points!E2,"(",Square_Points!C2,")") (E2=IF(E2<>"","y","n")) F2=IF(E2<>"",E2/1000,"") G2=IF(F2<0.5,0.5,F2) H1=AVERAGE(G2:G5)

![[Alghumgham]2011SPEpowerpoint](https://cdn.slidesharecdn.com/ss_thumbnails/8850602d-8d5e-4b61-8bd8-d72c875e4545-151120195603-lva1-app6891-thumbnail.jpg?width=560&fit=bounds)