Frame the Crowd: Global Visual Features Labeling boosted with Crowdsourcing Information

- 1. Frame the Crowd: Global Visual Features Labeling boosted with Crowdsourcing Information • Presentation: Michael Riegler (AAU), Mathias Lux (AAU), Christoph Kofler (TU Delft)

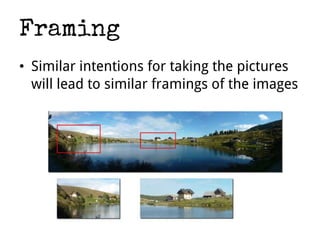

- 2. Framing • Similar intentions for taking a picture lead to similar framings of the image

- 3. Example 1

- 4. Example 2

- 5. Idea • Solve the problem with a global visual features approach based on framing theory – Always available and free (apart from computation time) • Workers' reliability for crowdsourcing information • Transfer learning

- 6. Visual Classifier • Modification of the LIRE framework • Search-based • 12 global features • Feature selection • Feature combination – late fusion
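The search-based classifier with late fusion can be pictured as follows. This is a minimal sketch under stated assumptions, not the authors' LIRE modification: LIRE is a Java library and none of its API is used here. It assumes each selected global feature yields a plain vector, uses Euclidean distance for the nearest-neighbour search, and fuses by summing per-feature kNN label votes. The feature names `color` and `edge` and the function names are hypothetical.

```python
import math
from collections import Counter


def knn_scores(query, train, k):
    """Search step for ONE feature: normalized label votes of the k nearest training images.

    train is a list of (feature_vector, label); Euclidean distance is an assumption.
    """
    ranked = sorted(train, key=lambda item: math.dist(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return {label: count / k for label, count in votes.items()}


def late_fusion(query_features, train_sets, k=3):
    """Late fusion: run a separate search per selected feature, then sum the class scores.

    query_features maps a (hypothetical) feature name to the query's vector;
    train_sets maps the same names to that feature's training index.
    Returns the best label and the fused score per label.
    """
    fused = Counter()
    for name, query in query_features.items():
        for label, score in knn_scores(query, train_sets[name], k).items():
            fused[label] += score
    return max(fused, key=fused.get), dict(fused)
```

Feature selection then amounts to choosing which feature names are passed in `query_features`; fusing scores after each search (rather than concatenating vectors first) is what makes this "late" fusion.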

- 7. Workers' Reliability • Reliability of a worker: #(votes agreeing with the majority vote) / #(total votes by the worker) • Used as a weight for the worker's votes • Combined with the self-reported familiarity into a feature vector
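The reliability formula and its use as a vote weight can be sketched like this. It assumes votes arrive as hypothetical `(worker, item, label)` tuples and that ties in the majority vote are broken by first occurrence; the self-reported familiarity feature is not modeled here.

```python
from collections import Counter, defaultdict


def majority_labels(votes):
    """Unweighted majority label per item; ties go to the label seen first."""
    per_item = defaultdict(Counter)
    for _worker, item, label in votes:
        per_item[item][label] += 1
    return {item: counts.most_common(1)[0][0] for item, counts in per_item.items()}


def worker_reliability(votes):
    """Reliability = #(votes agreeing with the majority) / #(total votes by the worker)."""
    majority = majority_labels(votes)
    agree, total = Counter(), Counter()
    for worker, item, label in votes:
        total[worker] += 1
        if label == majority[item]:
            agree[worker] += 1
    return {worker: agree[worker] / total[worker] for worker in total}


def weighted_vote(votes, reliability):
    """Label per item after weighting every vote by its worker's reliability."""
    scores = defaultdict(Counter)
    for worker, item, label in votes:
        scores[item][label] += reliability[worker]
    return {item: s.most_common(1)[0][0] for item, s in scores.items()}
```

For example, a worker who always disagrees with the majority gets reliability 0.0, so their votes no longer influence `weighted_vote`.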

- 8. Runs 1. Reliability measure for workers 2. Visual information with the MMSys model 3. Visual information with a model based on the low-fidelity worker votes of the Fashion10000 dataset 4. Visual information with a model based on the Fashion10000 dataset, newly labeled by the method of run #1 5. Visual-information-based decision for unclear results of run #1

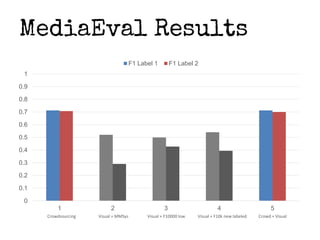
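Run 5 falls back on the visual classifier when the crowd result is unclear. The slides do not define "unclear", so this sketch assumes a hypothetical rule: the crowd result counts as unclear when the gap between the two highest reliability-weighted label scores is below a fraction `min_margin` of the total score. Both the rule and the threshold value are assumptions.

```python
def run5_decision(crowd_scores, visual_label, min_margin=0.1):
    """Keep the crowd's label unless it is unclear; then use the visual label.

    crowd_scores: reliability-weighted score per label for one image.
    min_margin: hypothetical threshold on the relative gap between the top two labels.
    """
    if not crowd_scores:
        return visual_label  # no crowd votes at all
    ranked = sorted(crowd_scores.items(), key=lambda kv: kv[1], reverse=True)
    top_label, top_score = ranked[0]
    second_score = ranked[1][1] if len(ranked) > 1 else 0.0
    total = sum(crowd_scores.values())
    if total == 0 or (top_score - second_score) / total < min_margin:
        return visual_label  # crowd result is too close to call
    return top_label
```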

- 9. MediaEval Results [Bar chart: F1 score (0–1) for Label 1 and Label 2 per run: 1 Crowdsourcing, 2 Visual + MMSys, 3 Visual + F10000 low, 4 Visual + F10k new labeled, 5 Crowd + Visual]

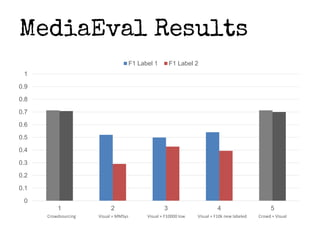

- 10. MediaEval Results [Same bar chart as slide 9: F1 score (0–1) for Label 1 and Label 2 per run]

- 11. Weighted F1 score (WF1) • Weights the F1 score of each class • Can help to interpret the results • Can compensate for differences between biased (imbalanced) classes

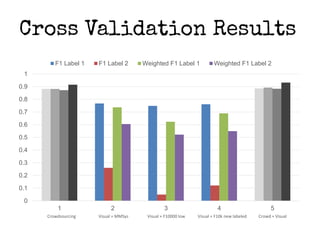
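The slides do not give the exact weighting behind WF1. One common definition weights each class's F1 by that class's share of the true labels (its support); the sketch below assumes that definition and returns both the weighted per-class contributions and their sum.

```python
from collections import Counter


def per_class_f1(y_true, y_pred, label):
    """Plain F1 for a single class, treating it as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    if tp == 0:
        return 0.0  # avoids division by zero; precision or recall is 0 anyway
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def weighted_f1(y_true, y_pred):
    """Assumed WF1: sum over classes of (support share) * (per-class F1)."""
    support = Counter(y_true)
    n = len(y_true)
    contributions = {
        c: (support[c] / n) * per_class_f1(y_true, y_pred, c) for c in support
    }
    return sum(contributions.values()), contributions
```

With `y_true = [1, 1, 1, 2]` and `y_pred = [1, 1, 2, 2]`, class 1 has F1 0.8 and class 2 has F1 2/3, so the rare class contributes only a quarter of its F1 to the total.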

- 12. Cross Validation Results [Bar chart: F1 and weighted F1 score (0–1) for Label 1 and Label 2 per run: 1 Crowdsourcing, 2 Visual + MMSys, 3 Visual + F10000 low, 4 Visual + F10k new labeled, 5 Crowd + Visual]

- 13. Conclusion • Calculating the workers' reliability performs well – It is well known that metadata leads to better results • Transfer learning works well – Crowdsourcing can boost visual classification • With visual features, even a small amount of labeled data leads to good results • The usefulness of framing is reflected in the results • Label 1 is detected very well with global visual features, but label 2 is not (it is a concept detection problem) • The weighted F1 score can help to understand the results better

- 14. Michael Riegler (michael.riegler@edu.uni-klu.ac.at) • Mathias Lux (mlux@itec.aau.at) • Christoph Kofler (c.Kofler@tudelft.nl)