Data Compression (data compression.ppt): Tree-Structured Vector Quantizers


Tree-structured vector quantizers
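A tree-structured vector quantizer (TSVQ) arranges its codevectors in a tree. To encode an input vector, the encoder descends from the root and, at each level, compares the input only with the children of the current node. For a binary tree with N leaves this takes about 2·log2(N) distance computations instead of the N needed by a full nearest-codevector search. The sketch below is a minimal illustration under stated assumptions: a binary tree, squared Euclidean distortion, and a tree grown by recursively splitting the training set into two groups with a short 2-means refinement. That splitting procedure is a common LBG-style choice and is an assumption here, not something taken from the slides. The names `build_tsvq`, `encode`, and `decode` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vector = List[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance (the assumed distortion measure)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(points: List[Vector]) -> Vector:
    """Component-wise mean of a non-empty list of vectors."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


@dataclass
class Node:
    codevector: Vector            # centroid of the training vectors reaching this node
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def split(points: List[Vector], iters: int = 10) -> Optional[Tuple[List[Vector], List[Vector]]]:
    """Split points into two groups: perturb the centroid, then refine with 2-means.

    Returns None when the data cannot be split (one group ends up empty).
    """
    c = centroid(points)
    a = [x + 1e-3 for x in c]
    b = [x - 1e-3 for x in c]
    for _ in range(iters):
        ga = [p for p in points if sq_dist(p, a) <= sq_dist(p, b)]
        gb = [p for p in points if sq_dist(p, a) > sq_dist(p, b)]
        if not ga or not gb:
            return None
        a, b = centroid(ga), centroid(gb)
    return ga, gb


def build_tsvq(points: List[Vector], depth: int) -> Node:
    """Grow a binary tree of codevectors; every node has either two children or none."""
    node = Node(centroid(points))
    if depth == 0 or len(points) < 2:
        return node
    parts = split(points)
    if parts is None:
        return node
    node.left = build_tsvq(parts[0], depth - 1)
    node.right = build_tsvq(parts[1], depth - 1)
    return node


def encode(root: Node, x: Vector) -> List[int]:
    """Greedy descent: at each level keep the closer of the two children (0 = left, 1 = right)."""
    bits: List[int] = []
    node = root
    while node.left is not None and node.right is not None:
        if sq_dist(x, node.left.codevector) <= sq_dist(x, node.right.codevector):
            bits.append(0)
            node = node.left
        else:
            bits.append(1)
            node = node.right
    return bits


def decode(root: Node, bits: List[int]) -> Vector:
    """Follow the transmitted path and return the codevector of the node it ends at."""
    node = root
    for bit in bits:
        nxt = node.left if bit == 0 else node.right
        if nxt is None:
            break
        node = nxt
    return node.codevector
```

The transmitted index is simply the path of left/right decisions, so a depth-d tree yields a d-bit code. The speed comes at a price: because each decision is local, the leaf reached by the greedy descent is not guaranteed to be the overall nearest codevector, so a TSVQ generally has somewhat higher distortion than a full-search quantizer with the same number of codevectors.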

Seminar Presentation on Student Management Lifecycle System

Seminar Presentation on Student Management Lifecycle Systemfarmse45110

╠²

Seminar Presentation on Student Management Lifecycle SystemENG8-Q4-MOD2.pdfajxnjdabajbadjbiadbiwdhiwdhwdhiwd

ENG8-Q4-MOD2.pdfajxnjdabajbadjbiadbiwdhiwdhwdhiwdshekainahrosej

╠²

Dihuqhudwuhdhwduhduwbdiabdiadnoanddnodnnaibwfhdwifhisfhishfefhuhegncdisfkndainiaadiniyongiuotfsjnwfnuejifebfsjbaifbaifbbuiding web based land registration buiding web based land registration and m...

buiding web based land registration buiding web based land registration and m...habtamudele9

╠²

buiding web based land registration and management systemHadoop-and-R-Programming-Powering-Big-Data-Analytics.pptx

Hadoop-and-R-Programming-Powering-Big-Data-Analytics.pptxMdTahammulNoor

╠²

Hadoop and its uses in data analyticsChat Bots - An Analytical study including Indian players

Chat Bots - An Analytical study including Indian playersDR. Ram Kumar Pathak

╠²

Analytical study of chatbots including Indian playersTurinton Insights - Enterprise Agentic AI Platform

Turinton Insights - Enterprise Agentic AI Platformvikrant530668

╠²

Enterprises Agentic AI Platform that helps organization to build AI 10X faster, 3X optimised that yields 5X ROI. Helps organizations build AI Driven Data Fabric within their data ecosystem and infrastructure.

Enables users to explore enterprise-wide information and build enterprise AI apps, ML Models, and agents. Maps and correlates data across databases, files, SOR, creating a unified data view using AI. Leveraging AI, it uncovers hidden patterns and potential relationships in the data. Forms relationships between Data Objects and Business Processes and observe anomalies for failure prediction and proactive resolutions. Capital market of Nigeria and its economic values

Capital market of Nigeria and its economic valuesezehnelson104

╠²

Shows detailed Explanation of the Nigerian capital market and how it affects the country's vast economyAbhijn╠āa╠änas╠üa╠äkuntalam Play by Kalidas Based on the translation by Arthur W....

Abhijn╠āa╠änas╠üa╠äkuntalam Play by Kalidas Based on the translation by Arthur W....kiranprava2002

╠²

Hu huIT Professional Ethics, Moral and Cu.ppt

IT Professional Ethics, Moral and Cu.pptFrancisFayiah

╠²

The work is for Professional IT Personal ethicsMastering Data Science with Tutort Academy

Mastering Data Science with Tutort Academyyashikanigam1

╠²

## **Mastering Data Science with Tutort Academy: Your Ultimate Guide**

### **Introduction**

Data Science is transforming industries by enabling data-driven decision-making. Mastering this field requires a structured learning path, practical exposure, and expert guidance. Tutort Academy provides a comprehensive platform for professionals looking to build expertise in Data Science.

---

## **Why Choose Data Science as a Career?**

- **High Demand:** Companies worldwide are seeking skilled Data Scientists.

- **Lucrative Salaries:** Competitive pay scales make this field highly attractive.

- **Diverse Applications:** Used in finance, healthcare, e-commerce, and more.

- **Innovation-Driven:** Constant advancements make it an exciting domain.

---

## **How Tutort Academy Helps You Master Data Science**

### **1. Comprehensive Curriculum**

Tutort Academy offers a structured syllabus covering:

- **Python & R for Data Science**

- **Machine Learning & Deep Learning**

- **Big Data Technologies**

- **Natural Language Processing (NLP)**

- **Data Visualization & Business Intelligence**

- **Cloud Computing for Data Science**

### **2. Hands-on Learning Approach**

- **Real-World Projects:** Work on datasets from different domains.

- **Live Coding Sessions:** Learn by implementing concepts in real-time.

- **Industry Case Studies:** Understand how top companies use Data Science.

### **3. Mentorship from Experts**

- **Guidance from Industry Leaders**

- **Career Coaching & Resume Building**

- **Mock Interviews & Job Assistance**

### **4. Flexible Learning for Professionals**

- **Best DSA Course Online:** Strengthen your problem-solving skills.

- **System Design Course Online:** Master scalable system architectures.

- **Live Courses for Professionals:** Balance learning with a full-time job.

---

## **Key Topics Covered in Tutort AcademyŌĆÖs Data Science Program**

### **1. Programming for Data Science**

- Python, SQL, and R

- Data Structures & Algorithms (DSA)

- System Design & Optimization

### **2. Data Wrangling & Analysis**

- Handling Missing Data

- Data Cleaning Techniques

- Feature Engineering

### **3. Statistics & Probability**

- Descriptive & Inferential Statistics

- Hypothesis Testing

- Probability Distributions

### **4. Machine Learning & AI**

- Supervised & Unsupervised Learning

- Model Evaluation & Optimization

- Deep Learning with TensorFlow & PyTorch

### **5. Big Data & Cloud Technologies**

- Hadoop, Spark, and AWS for Data Science

- Data Pipelines & ETL Processes

### **6. Data Visualization & Storytelling**

- Tools like Tableau, Power BI, and Matplotlib

- Creating Impactful Business Reports

### **7. Business Intelligence & Decision Making**

- How data drives strategic business choices

- Case Studies from Leading Organizations

---

## **Mastering Data Science: A Step-by-Step Plan**

### **Step 1: Learn the Fundamentals**

Start with **Python for Data Science, Statistics, and Linear Algebra.** Understanding these basics is crucial for advanced tImplications of Blockchain Technology in Agri-Food Supply Chains

Implications of Blockchain Technology in Agri-Food Supply ChainsSoumya Mohapatra

╠²

Blockchain technology enables end-to-end transactions, ensuring a secure and transparent process without the involvement of intermediaries (such as banks) or middlemen, as is often the case in agricultural marketing. The technology has gained enormous success in various sectors and organizations due to its fault tolerance and problem-solving scenarios. The rise of AI Agents - Beyond Automation_ The Rise of AI Agents in Service ...

The rise of AI Agents - Beyond Automation_ The Rise of AI Agents in Service ...Yasen Lilov

╠²

Deep dive into how agency service-based business can leverage AI and AI Agents for automation and scale. Case Study example with platforms used outlined in the slides.Abhijn╠āa╠änas╠üa╠äkuntalam Play by Kalidas Based on the translation by Arthur W....

Abhijn╠āa╠änas╠üa╠äkuntalam Play by Kalidas Based on the translation by Arthur W....kiranprava2002

╠²


- 1. NADAR SARASWATHI COLLEGE OF ARTS & SCIENCE (AUTONOMOUS), THENI. Data Compression: Tree-Structured Vector Quantizers. By M. Vidhya, M.Sc. (CS)

- 2. Introduction to Vector Quantization (VQ):
  - Definition: Vector quantization (VQ) compresses data by grouping samples into blocks (vectors) and replacing each input vector with the index of the nearest codeword in a codebook. The codewords are typically the centroids of clusters learned from training data.
  - Applications: image compression, speech processing, and pattern recognition.
  - Limitation of standard VQ: encoding is computationally expensive because the encoder performs an exhaustive search, comparing each input vector with every codeword.
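A minimal sketch of plain (full-search) VQ, assuming squared Euclidean distortion and a small hypothetical 2-D codebook. In practice the codebook would be trained (for example with the LBG algorithm); here it is written by hand so the exhaustive search is easy to follow.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(x: Vector, y: Vector) -> float:
    """Squared Euclidean distance, a common VQ distortion measure."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def full_search_encode(x: Vector, codebook: List[Vector]) -> Tuple[int, int]:
    """Return (index of the nearest codeword, number of distance computations).

    Every codeword is compared with x, so the cost grows linearly with
    the codebook size N.
    """
    best_index, best_dist = 0, float("inf")
    comparisons = 0
    for i, c in enumerate(codebook):
        d = squared_distance(x, c)
        comparisons += 1
        if d < best_dist:
            best_index, best_dist = i, d
    return best_index, comparisons


def decode(index: int, codebook: List[Vector]) -> Vector:
    """Decoding is a table lookup: the index selects a codeword."""
    return codebook[index]


# Hypothetical codebook with four codewords (e.g. cluster centroids).
codebook = [(0.0, 0.0), (0.0, 4.0), (4.0, 0.0), (4.0, 4.0)]
index, cost = full_search_encode((3.2, 0.7), codebook)
print(index, cost, decode(index, codebook))
# 2 4 (4.0, 0.0)
```

Even for this tiny codebook the encoder touches all four codewords; that linear cost is what tree-structured VQ tries to avoid.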

- 3. Tree-Structured Vector Quantization (TSVQ) Overview:
  - Definition: TSVQ is a hierarchical form of VQ that organizes the codewords in a tree in order to reduce the search cost of encoding.
  - Why TSVQ? It overcomes the computational inefficiency of flat-codebook (full-search) VQ: at each level of the tree, the encoder compares the input with only a few test vectors instead of with every codeword.

- 4. Structure of TSVQ:
  - Root node: represents the entire data space.
  - Internal nodes: each holds a test vector and divides its region of the data space into smaller regions, one per child.
  - Leaf nodes: contain the final codewords used for reconstruction.
  - Example diagram: tree structure representation.

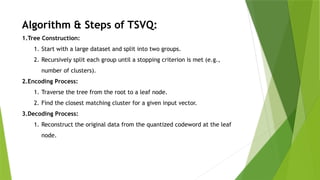
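To make the node roles concrete, here is a small sketch of a binary TSVQ tree as a data structure, assuming each node stores one vector (a test vector at internal nodes, a codeword at leaves). The `Node` class, the `leaf_codebook` helper, and the hand-built 2-D tree are hypothetical illustrations, not taken from the slides.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vector = Tuple[float, ...]


@dataclass
class Node:
    vector: Vector                  # test vector (internal node) or codeword (leaf)
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def leaf_codebook(node: Node, path: str = "") -> List[Tuple[str, Vector]]:
    """List the leaf codewords left to right with their binary indices.

    The index of a leaf is the sequence of branch choices (0 = left,
    1 = right) on the path from the root.
    """
    if node.is_leaf():
        return [(path, node.vector)]
    if node.left is None or node.right is None:
        raise ValueError("expected a full binary tree (0 or 2 children per node)")
    return leaf_codebook(node.left, path + "0") + leaf_codebook(node.right, path + "1")


# Root covers the whole space; the two internal nodes cover the lower and upper halves.
root = Node((2.0, 2.0),
            left=Node((2.0, 0.0), Node((0.0, 0.0)), Node((4.0, 0.0))),
            right=Node((2.0, 4.0), Node((0.0, 4.0)), Node((4.0, 4.0))))

for index, codeword in leaf_codebook(root):
    print(index, codeword)
# 00 (0.0, 0.0)
# 01 (4.0, 0.0)
# 10 (0.0, 4.0)
# 11 (4.0, 4.0)
```

Only the leaves form the codebook; the root and internal nodes exist to steer the search, and the path to a leaf doubles as its index.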

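- 5. Algorithm & Steps of TSVQ:
  1. Tree construction:
     1. Start with the full training set and split it into two groups (for example, by running 2-means / LBG with two codewords).
     2. Recursively split each group until a stopping criterion is met (e.g., the desired number of leaf codewords or a maximum depth).
  2. Encoding process:
     1. Traverse the tree from the root to a leaf node.
     2. At each node, move to the child whose test vector is closest to the input vector; the sequence of branch choices forms the transmitted index.
  3. Decoding process:
     1. Use the received index to look up the codeword at the corresponding leaf; this codeword is an approximate (lossy) reconstruction of the input vector.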
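A minimal pure-Python sketch of the three steps, assuming squared Euclidean distortion, a binary tree, and a fixed depth as the stopping criterion. Each split uses 2-means started from a slightly perturbed centroid (the splitting idea used in the LBG algorithm). The helper names (`build_tsvq`, `split_in_two`, `encode`, `decode`) and the toy data are hypothetical, not the slides' own implementation.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vector = Tuple[float, ...]


@dataclass
class Node:
    vector: Vector                  # centroid of the training vectors routed here
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def dist2(x: Sequence[float], y: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def centroid(points: List[Vector]) -> Vector:
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))


def split_in_two(points: List[Vector], iters: int = 10,
                 eps: float = 1e-3) -> Optional[Tuple[List[Vector], List[Vector]]]:
    """2-means started from a perturbed centroid (LBG-style splitting)."""
    c = centroid(points)
    a = tuple(v + eps for v in c)
    b = tuple(v - eps for v in c)
    left: List[Vector] = []
    right: List[Vector] = []
    for _ in range(iters):
        left, right = [], []
        for p in points:
            (left if dist2(p, a) <= dist2(p, b) else right).append(p)
        if not left or not right:
            return None             # this group cannot be split further
        a, b = centroid(left), centroid(right)
    return left, right


def build_tsvq(points: List[Vector], depth: int) -> Node:
    """Step 1: split recursively; stop after `depth` levels (<= 2**depth leaves)."""
    node = Node(centroid(points))
    if depth == 0 or len(points) < 2:
        return node
    halves = split_in_two(points)
    if halves is None:
        return node
    node.left = build_tsvq(halves[0], depth - 1)
    node.right = build_tsvq(halves[1], depth - 1)
    return node


def encode(x: Sequence[float], root: Node) -> str:
    """Step 2: descend toward the closer child; the branch bits are the index."""
    bits, node = "", root
    while node.left is not None and node.right is not None:
        if dist2(x, node.left.vector) <= dist2(x, node.right.vector):
            bits, node = bits + "0", node.left
        else:
            bits, node = bits + "1", node.right
    return bits


def decode(bits: str, root: Node) -> Vector:
    """Step 3: follow the received bits; the leaf codeword is the reconstruction."""
    node = root
    for bit in bits:
        node = node.left if bit == "0" else node.right
    return node.vector


# Toy 2-D training set (hypothetical): four tight pairs near the corners of a square.
data = [(0.0, 0.0), (0.2, 0.1), (4.0, 0.1), (3.9, 0.0),
        (0.1, 4.0), (0.0, 3.8), (4.1, 4.0), (3.9, 4.2)]
tree = build_tsvq(data, depth=2)
code = encode((3.8, 0.3), tree)
print(code, decode(code, tree))
```

On this toy data the first split separates the upper two pairs from the lower two, so the query (3.8, 0.3) is coded as "10" and reconstructed as roughly (3.95, 0.05), the centroid of the pair near (4, 0). Using the centroid of each group as its node vector keeps the encoder's test vectors consistent with how the training data was partitioned.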

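- 6. Advantages of TSVQ:
  - Faster search: with a binary tree of N leaves, encoding needs about 2 log₂ N distance computations (O(log N)) instead of N for full-search VQ (O(N)).
  - Hierarchical index: each prefix of a leaf's index identifies an internal node, so the index can be read coarse to fine. The hierarchy does not save storage, however: a binary tree with N leaves stores about 2N − 2 vectors (leaf codewords plus internal test vectors), versus N for a flat codebook.
  - Scalability: because the encoding cost grows with tree depth rather than codebook size, large codebooks remain practical to search.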
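As a rough worked count, assuming a balanced binary tree with N = 2^d leaves and counting one distance computation per codeword or test vector compared (two per level for TSVQ):

$$
C_{\text{full}} = N, \qquad C_{\text{TSVQ}} = 2\log_2 N
$$

$$
N = 256:\quad C_{\text{full}} = 256, \qquad C_{\text{TSVQ}} = 2 \cdot 8 = 16
$$

Each distance computation in dimension k costs about k multiplications, so both counts scale by the same factor k and the ratio between them is unchanged.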

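- 7. Disadvantages of TSVQ:
  - Suboptimal performance: the greedy root-to-leaf descent does not always reach the leaf codeword nearest to the input, so at the same rate TSVQ generally has higher distortion than full-search VQ.
  - Tree pruning and optimization are needed for best efficiency, for example growing a large tree and pruning back branches that reduce distortion too little for the bits they cost.
  - Sensitivity to training data and initial tree construction: a poor split near the root cannot be corrected at lower levels, because every vector routed down the wrong branch stays in that subtree.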
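The first disadvantage can be seen on a tiny 1-D example with hypothetical values: the greedy descent commits to the left branch because the input is slightly closer to that branch's test vector, yet the nearest leaf codeword lives in the right subtree. The sketch below, assuming squared-error distortion, compares the greedy TSVQ path with an exhaustive search over the same leaves.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Node:
    value: float                    # 1-D test vector (internal) or codeword (leaf)
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def greedy_encode(x: float, root: Node) -> Tuple[str, float]:
    """TSVQ encoding: descend toward the closer child at every level."""
    bits, node = "", root
    while node.left is not None and node.right is not None:
        if (x - node.left.value) ** 2 <= (x - node.right.value) ** 2:
            bits, node = bits + "0", node.left
        else:
            bits, node = bits + "1", node.right
    return bits, node.value


def leaves(node: Node, path: str = "") -> List[Tuple[str, float]]:
    """All leaf codewords with their branch-bit indices."""
    if node.left is None or node.right is None:
        return [(path, node.value)]
    return leaves(node.left, path + "0") + leaves(node.right, path + "1")


def full_search(x: float, root: Node) -> Tuple[str, float]:
    """Exhaustive search over the same leaf codewords."""
    return min(leaves(root), key=lambda item: (x - item[1]) ** 2)


# Hypothetical tree: each internal test vector is the mean of the leaves below it.
tree = Node(5.0,
            left=Node(2.0, Node(0.0), Node(4.0)),
            right=Node(8.0, Node(5.5), Node(10.5)))

x = 4.9
print(greedy_encode(x, tree))   # ('01', 4.0)
print(full_search(x, tree))     # ('10', 5.5)
```

Here TSVQ reconstructs 4.9 as 4.0 (squared error about 0.81) while full search would choose 5.5 (about 0.36), even though both use exactly the same four codewords.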

- 8. Applications of TSVQ:
  - Image compression: reduces storage and transmission requirements.
  - Speech processing: used in low-bit-rate speech coders.
  - Pattern recognition: applied in machine learning and clustering tasks.
  - Neural networks: used for efficient encoding of high-dimensional data.

- 9. Thank you