Deep MIML Network

- 1. Deep MIML Network: Ji Feng, Zhi-Hua Zhou (presented by Saad Elbeleidy)

- 2. Agenda
  - Brief Overview
  - Proposal
  - Experiments & Results

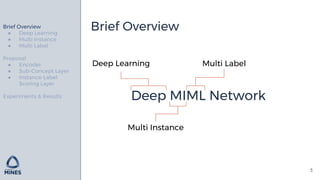

- 3. Brief Overview
  - Brief Overview: Deep Learning, Multi Instance, Multi Label
  - Proposal: Encoder, Sub-Concept Layer, Instance-Label Scoring Layer
  - Experiments & Results

  [Figure: Deep MIML Network combining Deep Learning, Multi Label, and Multi Instance]

- 4. Deep Learning

  “Deep” refers to the depth, that is, the number of hidden layers, of a neural network. Deep networks have significantly more hidden layers, which can let them learn richer representations of their input than shallow networks.

- 7. Types of Neural Networks

  There are many different types of neural networks. You can learn more about them at the following link: http://www.asimovinstitute.org/neural-network-zoo/

- 8. Fully Connected Layer

  Every neuron in the previous layer is connected to every neuron in the following layer.

  Source: http://cs231n.github.io/neural-networks-1/
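A minimal sketch of what "fully connected" means computationally, in plain Python with no deep-learning library: each output unit takes a weighted sum over every input plus a bias. The helper names `dense` and `relu` and the example weights are hypothetical.

```python
def dense(x, weights, biases):
    """Fully connected layer: output[j] = sum_i weights[j][i] * x[i] + biases[j].

    `weights` has one row per output unit and one column per input, so every
    input contributes to every output unit.
    """
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Element-wise ReLU activation: negative values become 0."""
    return [max(0.0, v) for v in values]


# Hypothetical layer with 3 inputs and 2 output units.
x = [1.0, 2.0, 3.0]
W = [[0.1, 0.2, 0.3],
     [-1.0, 0.0, 1.0]]
b = [0.0, -2.0]
out = relu(dense(x, W, b))
```

A layer with n inputs and m outputs therefore has n × m weights plus m biases.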

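- 9. Max Pooling
  - Sample-based discretization process: downsamples the input by keeping only the maximum value in each window
  - Reduces dimensionality by assuming that the strongest activation in a region represents the features it contains
  - Helps control over-fitting by reducing the number of parameters and the amount of computation in later layers

  Source: http://cs231n.github.io/convolutional-networks/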
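A small sketch of max pooling in plain Python, assuming a square window with a stride equal to its size (2 × 2 with stride 2 is a common choice; the sizes here are illustrative). A 4 × 4 input shrinks to 2 × 2, which is the dimensionality reduction described above.

```python
def max_pool_1d(values, size=2, stride=2):
    """Slide a window of `size` over `values`, moving by `stride`,
    and keep only the largest value in each window."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, stride)]


def max_pool_2d(grid, size=2, stride=2):
    """2D version: keep the maximum of each size x size patch."""
    rows, cols = len(grid), len(grid[0])
    return [[max(grid[r + dr][c + dc] for dr in range(size) for dc in range(size))
             for c in range(0, cols - size + 1, stride)]
            for r in range(0, rows - size + 1, stride)]


pooled_1d = max_pool_1d([1, 3, 2, 5, 4, 0])
pooled_2d = max_pool_2d([[1, 2, 0, 1],
                         [3, 4, 1, 0],
                         [0, 1, 5, 6],
                         [2, 2, 7, 8]])
```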

- 10. Multi Instance

  Instead of receiving labeled instances, we receive labeled groups of instances, called bags.

- 11. Single Instance vs Multi Instance

  Single instance: labeled keys that can unlock a door.

  | Key | Can Unlock? |
  |---|---|
  | Red | No |
  | Green | No |
  | Blue | Yes |
  | Orange | No |
  | Black | Yes |
  | Orange | ? |

  Multi instance: labeled keychains that can unlock a door.

  | Keychain | Can Unlock? |
  |---|---|
  | Red, Green, Blue | Yes |
  | Green, Orange | No |
  | Red, Orange | No |
  | Blue, Orange | Yes |
  | Red, Orange, Black | Yes |
  | Blue, Red | ? |
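The keychain table is consistent with the standard multi-instance assumption (our assumption; the slide does not state it): a bag is positive if and only if at least one of its instances is positive. The sketch below labels each keychain from the single-instance key labels; the names `KEY_UNLOCKS` and `bag_label` are hypothetical.

```python
# Instance (key) labels taken from the single-instance table.
KEY_UNLOCKS = {"Red": False, "Green": False, "Blue": True,
               "Orange": False, "Black": True}


def bag_label(keychain, instance_labels):
    """Standard multi-instance assumption: a bag (keychain) is positive
    if and only if at least one instance (key) in it is positive."""
    return any(instance_labels[key] for key in keychain)


keychains = [["Red", "Green", "Blue"], ["Green", "Orange"], ["Red", "Orange"],
             ["Blue", "Orange"], ["Red", "Orange", "Black"], ["Blue", "Red"]]
predicted = [bag_label(k, KEY_UNLOCKS) for k in keychains]
```

The first five predictions reproduce the table's Yes/No column, and the unlabeled keychain (Blue, Red) comes out as Yes because it contains the Blue key.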

- 12. Instance-Label Relation Discovery

  The discovery process of locating the key instance pattern that triggers the output labels; here, detecting the keys that unlock the door: Blue and Black.

  | Keychain | Can Unlock? |
  |---|---|
  | Red, Green, Blue | Yes |
  | Green, Orange | No |
  | Red, Orange | No |
  | Blue, Orange | Yes |
  | Red, Orange, Black | Yes |
  | Blue, Red | ? |
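On this toy data the key instances can be recovered from bag labels alone. Under the standard multi-instance assumption (an assumption, not something the slide states), every key on a negative keychain is negative, so the candidates are the keys that appear on positive keychains but never on a negative one. This elimination rule only illustrates the idea; it is not how DeepMIML performs relation discovery.

```python
# Bag-level data from the keychain table (the unlabeled "?" row is omitted).
KEYCHAINS = [
    ({"Red", "Green", "Blue"}, True),
    ({"Green", "Orange"}, False),
    ({"Red", "Orange"}, False),
    ({"Blue", "Orange"}, True),
    ({"Red", "Orange", "Black"}, True),
]


def candidate_keys(bags):
    """Keys that can trigger the label: present in some positive bag and
    absent from every negative bag (all keys in a negative bag are negative)."""
    in_negative = set().union(*(keys for keys, ok in bags if not ok))
    in_positive = set().union(*(keys for keys, ok in bags if ok))
    return in_positive - in_negative


found = candidate_keys(KEYCHAINS)
```

Every positive keychain contains at least one of the recovered keys, so the result is consistent with all bag labels.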

- 13. Multi Label

  Determine all labels that apply to an input instead of a single label.

- 14. Single Instance Multi Label (SIML)

  Labeled keys that can unlock multiple doors.

  | Key | Unlock A? | Unlock B? | Unlock C? |
  |---|---|---|---|
  | Red | No | Yes | No |
  | Green | No | Yes | Yes |
  | Blue | Yes | No | Yes |
  | Orange | No | Yes | No |
  | Black | Yes | No | No |
  | Orange | ? | ? | ? |

- 15. Multi Instance Multi Label (MIML)

  Labeled keychains that can unlock multiple doors.

  | Keychain | Unlock A? | Unlock B? | Unlock C? |
  |---|---|---|---|
  | Red, Green, Blue | No | Yes | No |
  | Green, Orange | No | Yes | Yes |
  | Red, Orange | Yes | No | Yes |
  | Blue, Orange | No | Yes | No |
  | Red, Orange, Black | Yes | No | No |
  | Blue, Red | ? | ? | ? |
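In the multi-label setting, the "at least one instance" idea is usually applied per label: a bag gets label l if some instance in it has label l. A minimal sketch, assuming each instance already has a score in [0, 1] for every label; the scores, the 0.5 threshold, and the function name are hypothetical. Taking the per-label maximum over instances is the same max-pooling idea used in the DeepMIML network's sub-concept layer.

```python
def miml_bag_labels(instance_scores, threshold=0.5):
    """instance_scores[m][l]: score of instance m for label l (hypothetical).

    For each label, take the maximum score over the bag's instances, then
    threshold it, giving one yes/no decision per label (door).
    """
    num_labels = len(instance_scores[0])
    bag_scores = [max(inst[l] for inst in instance_scores)
                  for l in range(num_labels)]
    return [score >= threshold for score in bag_scores]


# Hypothetical scores for a two-key keychain and three doors (A, B, C).
keychain_scores = [[0.9, 0.1, 0.2],   # first key
                   [0.3, 0.2, 0.7]]   # second key
labels = miml_bag_labels(keychain_scores)
```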

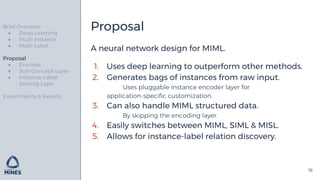

- 16. Proposal

  A neural network design for MIML that:
  1. Uses deep learning to outperform other methods.
  2. Generates bags of instances from raw input.
  3. Can also handle MIML-structured data.
  4. Easily switches between MIML, SIML, and multi-instance single-label (MISL) learning.
  5. Allows for instance-label relation discovery.

- 17. Network Overview
  1. Raw Input
  2. Encoder / Instance Generator
  3. Sub-Concept Layer
  4. Instance-Label Scoring Layer
  5. Output

- 18. Encoder

  Application-specific feature representation/extraction that turns the raw input into instances. It is included as part of the same neural network so that the feature representation can be improved based on the resulting labeling.

- 19. Sub-Concept Layer

  Models the matching scores between an instance and the sub-concepts of each label.
  1. Add dimensions for sub-concepts and instances.
  2. Follow with max pooling to return to a 1-dimensional representation.

- 20. 2D Sub-Concept Layer
  - Fully connected K (sub-concepts) × L (labels) layer
  - ReLU activation function

- 21. 2D Sub-Concept Layer

  [Figure: score vector for each label; sub-concept scores for each label]

- 22. 2D Sub-Concept Layer
  - Fully connected with the input instance vector
  - Activations approximate the matching scores between the instance and each label's sub-concepts
  - Each node has its own weights (unlike a convolutional layer, which shares weights)
  - Intuitive to interpret
  - Max pooling is used to locate the maximum matching score

- 23. Pooling of 2D Sub-Concept Layer
  - Max pooling over the K sub-concepts outputs an L × 1 scoring layer (one score per label)
  - Extracts label predictions
  - Eliminates over-assignment on sub-concepts
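A plain-Python sketch of the 2D sub-concept layer and its pooling for a single instance, assuming K sub-concepts per label, L labels, and an instance vector of length d. The weights and function names are hypothetical; in the network the weights would be learned. `scores[k][l]` is the ReLU matching score between the instance and sub-concept k of label l, and max pooling over k leaves one score per label.

```python
def sub_concept_2d(x, W, b):
    """K x L layer for one instance vector x.

    W[k][l] is the weight vector (same length as x) of sub-concept k of label l
    and b[k][l] its bias. Every node has its own weights (fully connected, no
    weight sharing); ReLU keeps the matching scores non-negative.
    """
    return [[max(0.0, sum(w * xi for w, xi in zip(W[k][l], x)) + b[k][l])
             for l in range(len(W[k]))]
            for k in range(len(W))]


def pool_over_sub_concepts(scores):
    """Max pooling over the K sub-concepts: for each label keep the score of
    its best-matching sub-concept, giving L values."""
    num_labels = len(scores[0])
    return [max(row[l] for row in scores) for l in range(num_labels)]


# Hypothetical K = 2 sub-concepts, L = 3 labels, d = 2 input features.
W = [[[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],   # sub-concept 0 of labels 0..2
     [[0.0, 0.0], [1.0, 1.0], [0.0, -1.0]]]   # sub-concept 1 of labels 0..2
b = [[0.0, 0.0, 0.0],
     [0.0, 0.0, 0.0]]
scores = sub_concept_2d([1.0, 2.0], W, b)      # K x L = 2 x 3
label_scores = pool_over_sub_concepts(scores)  # L = 3
```

Label 1 takes its score from sub-concept 1 (3.0 beats 2.0), so only the best-matching sub-concept counts toward each label.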

- 24. 3D Sub-Concept Layer
  - Stack the 2D sub-concept layers for each instance
  - Size K × L × M, where M is the number of instances
  - Vertical pooling (over the K sub-concepts)
  - Horizontal pooling (over the M instances)

- 25. 3D Sub-Concept Layer
  - Outputs an L × 1 vector that models the score for each label
  - Supports SIML and MISL by dropping the respective dimension (the instance dimension for SIML, the label dimension for MISL)
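Extending the same idea to a bag, the sketch below computes one K × L block per instance and applies the two poolings. It is a self-contained illustration with hypothetical weights and names, not the authors' implementation; the pooling order (sub-concepts first, then instances) follows the slides.

```python
def sub_concept_scores(x, W, b):
    """K x L ReLU matching scores for one instance vector x.
    W[k][l] is the (hypothetical) weight vector of sub-concept k of label l."""
    return [[max(0.0, sum(w * xi for w, xi in zip(W[k][l], x)) + b[k][l])
             for l in range(len(W[k]))]
            for k in range(len(W))]


def deep_miml_head(bag, W, b):
    """Score a bag of M instance vectors.

    Returns (instance_label, label_scores):
      instance_label[l][m]: vertical pooling (max over the K sub-concepts),
                            i.e. the L x M instance-label scoring layer;
      label_scores[l]:      horizontal pooling (max over the M instances),
                            i.e. the L x 1 output.
    """
    per_instance = [sub_concept_scores(x, W, b) for x in bag]  # M blocks of K x L
    num_k, num_l = len(W), len(W[0])
    instance_label = [[max(per_instance[m][k][l] for k in range(num_k))
                       for m in range(len(bag))]
                      for l in range(num_l)]
    label_scores = [max(row) for row in instance_label]
    return instance_label, label_scores


# Hypothetical K = 2, L = 3 weights and a bag of M = 2 instances.
W = [[[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],
     [[0.0, 0.0], [1.0, 1.0], [0.0, -1.0]]]
b = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
instance_label, label_scores = deep_miml_head([[1.0, 2.0], [2.0, 0.0]], W, b)
```

Passing a bag with a single instance (M = 1) gives the SIML case, and weights for a single label (L = 1) give the MISL case.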

- 26. Instance-Label Scoring Layer

  Models the matching score of instance i on label j.
  - Examining these scores allows for straightforward instance-label relation discovery.
  - Key instances triggering a particular label can be detected by backtracking to the highest matching score in the 2D pooling layer.
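Because each label's bag score is a maximum over the instance-label scores, backtracking to the key instance for a label amounts to taking the argmax of that label's row. A minimal sketch with hypothetical scores and a hypothetical function name:

```python
def key_instance(instance_label, label):
    """Backtrack from a label's bag-level score to the instance that produced it.

    instance_label is the L x M instance-label scoring matrix
    (instance_label[l][m] = score of instance m on label l). Returns the index
    of the highest-scoring instance for `label` and that score; ties go to the
    lowest index.
    """
    row = instance_label[label]
    best = max(range(len(row)), key=row.__getitem__)
    return best, row[best]


# Hypothetical 3-label, 2-instance scores (e.g. two sentences of one review).
scores = [[1.0, 2.0],
          [3.0, 2.0],
          [0.0, 0.0]]
```

A best score of 0.0, as for label 2, means no instance matched any sub-concept of that label, so there is no meaningful key instance to report.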

- 27. Network Summary

  A neural network design that can be used for MIML:
  - Extracts sub-concepts from instances
  - Enables instance-label relation discovery
  - Outperforms other MIML methods

- 28. Experiments & Results
  - Text
  - Image
  - MIML

- 29. Text Experiment
  - Yelp dataset
  - 19,934 reviews, 100 categories
  - Train/test split of 70/30
  - Encoder: pre-trained Skip-Thought vectors
  - Loss function: mean binary cross entropy
  - Optimizer: stochastic gradient descent with dropout of 0.5 (to prevent over-fitting)

  Goal: classify each review into several categories.
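Mean binary cross entropy treats each of the L categories as an independent yes/no prediction and averages the per-label losses, which fits multi-label targets. A sketch for a single review, assuming the network outputs one probability per category (for example via a sigmoid); the function name and the clipping constant `eps` are our own choices.

```python
import math


def mean_binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Average over labels of -[y * log(p) + (1 - y) * log(1 - p)].

    y_true: 0/1 target per label; y_prob: predicted probability per label.
    Probabilities are clipped to [eps, 1 - eps] to avoid log(0).
    """
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Hypothetical review with 3 categories.
loss = mean_binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6])
```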

- 30. Text Experiment Results

  Query: "The beef is good."

  | Near | Far |
  |---|---|
  | The curries are nice too | Nope nope nope. |
  | The calamari is good. | Disappointed. |
  | The BBQ is great. | Not coming back. |
  | The food is great the setup is nice | Dislike. |

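- 31. Text Experiment Results

  DeepMIML improves on the other methods (higher mean average precision and lower ranking loss are better).

  | Method | Mean Average Precision | Ranking Loss |
  |---|---|---|
  | SoftMax | 0.313 | 0.083 |
  | MLP | 0.325 | 0.080 |
  | DeepMIML | 0.330 | 0.078 |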
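For reference, a sketch of the two metrics for a single example, using the definitions common in the multi-label/MIML literature (an assumption: the slides do not define them, and the paper may average differently, for example per label rather than per example). `scores` holds one score per label and `relevant` is the set of true label indices; both functions assume at least one relevant label.

```python
def ranking_loss(scores, relevant):
    """Fraction of (relevant, irrelevant) label pairs ordered wrongly, i.e.
    the irrelevant label scores at least as high as the relevant one."""
    irrelevant = [j for j in range(len(scores)) if j not in relevant]
    pairs = [(i, j) for i in relevant for j in irrelevant]
    if not pairs:
        return 0.0
    bad = sum(1 for i, j in pairs if scores[i] <= scores[j])
    return bad / len(pairs)


def average_precision(scores, relevant):
    """For each relevant label, the fraction of labels ranked at or above it
    that are also relevant, averaged over the relevant labels."""
    order = sorted(range(len(scores)), key=lambda j: -scores[j])
    rank = {label: pos + 1 for pos, label in enumerate(order)}
    total = 0.0
    for i in relevant:
        hits = sum(1 for k in relevant if rank[k] <= rank[i])
        total += hits / rank[i]
    return total / len(relevant)


# Hypothetical example: 4 labels, labels 0 and 3 are relevant.
example_scores = [0.9, 0.2, 0.6, 0.4]
rl = ranking_loss(example_scores, {0, 3})
ap = average_precision(example_scores, {0, 3})
```

Lower ranking loss and higher average precision are better; averaging `average_precision` over a test set gives a mean average precision.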

- 32. Image Experiment
  - MS-COCO dataset
  - 82,783 images, 80 labels
  - Train/test split of 70/30
  - Encoder: pre-trained VGG-16
  - Loss function: Hamming loss

  Goal: classify images into several labels.
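Hamming loss counts the fraction of individual label decisions that are wrong, averaged over examples and labels; lower is better. A sketch of the metric, assuming 0/1 predictions (for example after thresholding scores) and a hypothetical function name:

```python
def hamming_loss(y_true, y_pred):
    """y_true, y_pred: lists of 0/1 label vectors, one per example.
    Returns the fraction of (example, label) entries that disagree."""
    wrong = sum(t != p
                for row_t, row_p in zip(y_true, y_pred)
                for t, p in zip(row_t, row_p))
    total = sum(len(row) for row in y_true)
    return wrong / total


# Hypothetical: 2 images, 3 labels, one wrong decision out of six.
loss = hamming_loss([[1, 0, 1], [0, 1, 0]],
                    [[1, 1, 1], [0, 1, 0]])
```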

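- 34. Image Experiment Results

  DeepMIML improves on VGG-16 in mean average precision and Hamming loss, though not in F1 score. It is sub-optimal compared with CNN-RNN, but close enough to show feasibility.

  | Method | Mean Average Precision | Hamming Loss | F1 Score |
  |---|---|---|---|
  | VGG-16 | 57% | 0.025 | 0.650 |
  | CNN-RNN | 61.2% | - | 0.678 |
  | DeepMIML | 60.5% | 0.021 | 0.637 |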

- 35. MIML Tasks
  1. MIML News: text data that has already been preprocessed using tf-idf.
  2. MIML Scene: image data represented with Single Blob with Neighbors (SBN) features.
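tf-idf weights a term by how often it occurs in a document and down-weights terms that occur in many documents. The MIML News data comes already preprocessed, so the sketch below only illustrates the weighting. It uses one common variant (term count divided by document length, times log(N / df)); the exact variant used for the dataset is not stated in the slides, and the function name is hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document.

    tf  = count of the term in the document / document length
    idf = log(N / df), where df is the number of documents containing the term
    """
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({t: (c / len(doc)) * math.log(n / df[t])
                        for t, c in tf.items()})
    return vectors


vectors = tf_idf([["stocks", "fall"], ["stocks", "rise"]])
```

A term that appears in every document ("stocks" here) gets weight 0, since it does not help tell documents apart.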

- 36. MIML News Results

  Improvement over state-of-the-art MIML algorithms (lower is better for all three metrics).

  | Method | Hamming Loss | Coverage | Ranking Loss |
  |---|---|---|---|
  | DeepMIML | 0.160 | 0.890 | 0.157 |
  | KISAR | 0.167 | 0.928 | 0.162 |
  | MIML SVM | 0.184 | 1.039 | 0.190 |
  | MIML KNN | 0.172 | 0.944 | 0.169 |
  | MIML RBF | 0.169 | 0.950 | 0.169 |
  | MIML Boost | 0.189 | 0.947 | 0.172 |

- 37. MIML Scene Results

  Improvement over state-of-the-art MIML algorithms (lower is better for all three metrics).

  | Method | Hamming Loss | Coverage | Ranking Loss |
  |---|---|---|---|
  | DeepMIML | 0.026 | 0.261 | 0.016 |
  | KISAR | 0.032 | 0.278 | 0.019 |
  | MIML SVM | 0.044 | 0.373 | 0.034 |
  | MIML KNN | 0.063 | 0.489 | 0.051 |
  | MIML RBF | 0.061 | 0.481 | 0.052 |
  | MIML Boost | 0.053 | 0.417 | 0.039 |
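Coverage measures how far down the score-ranked label list one has to go, on average, to include every true label of an example. The sketch assumes the definition common in the MIML literature (rank of the lowest-ranked relevant label, minus 1); the slides do not define it, some implementations report it without subtracting 1, and the function name is hypothetical.

```python
def coverage(score_rows, relevant_sets):
    """Average over examples of (rank of the lowest-ranked relevant label) - 1.

    score_rows[i]: one score per label for example i.
    relevant_sets[i]: set of true label indices for example i (non-empty).
    Ranks start at 1; ties are broken by label index. Lower is better.
    """
    total = 0
    for scores, relevant in zip(score_rows, relevant_sets):
        order = sorted(range(len(scores)), key=lambda j: -scores[j])
        rank = {label: pos + 1 for pos, label in enumerate(order)}
        total += max(rank[label] for label in relevant) - 1
    return total / len(score_rows)


# Hypothetical: two examples with 4 and 3 labels.
cov = coverage([[0.9, 0.2, 0.6, 0.4], [0.1, 0.8, 0.3]],
               [{0, 3}, {1}])
```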

- 38. Conclusion
  1. The DeepMIML Network is a good framework to consider for general problem solving whenever the problem can be represented as MIML.
  2. The DeepMIML Network performs better than other state-of-the-art MIML algorithms on the MIML News and MIML Scene datasets.

- 39. Questions?

- 40. References
  - Feng, J., & Zhou, Z.-H. (2017). Deep MIML network. In Proceedings of the 31st AAAI Conference on Artificial Intelligence (AAAI 2017), 1884-1890.
  - Babenko, B. (2008). Multiple instance learning: Algorithms and applications. 1-19.