Dhacaini

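Computers represent all data with binary digits (bits), 0 and 1, because their electronic circuits are built to distinguish two states, such as a signal being on or off. Characters are encoded as patterns of bits grouped into bytes. The two most common encoding schemes are ASCII, which defines 128 characters for English text using 7 bits, and Unicode, which assigns a unique number (a code point) to characters from nearly all of the world's writing systems. For a character to be displayed, it passes through several stages: a key press becomes an electronic signal, the signal becomes a binary code that the computer processes, and that code is finally converted back into the corresponding character on screen.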
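The encoding stage described above can be illustrated with a short sketch. It assumes Python's built-in `ascii` and `utf-8` codecs as stand-ins for the encoding step; the helper names `to_bits` and `from_bits` are hypothetical, and the keyboard-signal stage is not modeled. It shows a character becoming a code point, then bytes, then bits, and the reverse trip back to a displayable character.

```python
# A minimal sketch, assuming Python's built-in codecs stand in for the
# encoding step. to_bits and from_bits are hypothetical helper names.

def to_bits(text: str, encoding: str) -> list[str]:
    """Encode text with the given scheme and show each byte as 8 binary digits."""
    return [format(byte, "08b") for byte in text.encode(encoding)]


def from_bits(bits: list[str], encoding: str) -> str:
    """Reverse the process: turn 8-bit strings back into bytes, then decode."""
    return bytes(int(b, 2) for b in bits).decode(encoding)


if __name__ == "__main__":
    # 'A' has code point 65 in both ASCII and Unicode and fits in one byte.
    print(ord("A"), to_bits("A", "ascii"))  # 65 ['01000001']

    # ASCII covers only 128 characters, so 'é' cannot be encoded with it.
    try:
        "é".encode("ascii")
    except UnicodeEncodeError:
        print("'é' is outside ASCII")

    # UTF-8, one of several Unicode encodings, needs two bytes for 'é' (code point 233).
    bits = to_bits("é", "utf-8")
    print(ord("é"), bits)  # 233 ['11000011', '10101001']

    # Decoding the bits recovers the character that would be drawn on screen.
    print(from_bits(bits, "utf-8"))  # é
```

UTF-8 is used here because it keeps ASCII characters at one byte each; other Unicode encodings such as UTF-16 would produce different byte patterns for the same code points.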


2. Unauthorized use involves using a computer or its data for unapproved or illegal activities like unauthorized bank transfers.

3. Safeguards against unauthorized access and use include having an acceptable use policy, using firewalls, access controls, and intrusion detection software.Software theft

Software theftchrispaul8676

╠²

Software theft involves illegally copying or distributing copyrighted software. There are four main types of software theft: [1] physically stealing software media or hardware, [2] disgruntled employees deleting programs from company computers, [3] widespread piracy of software manufacturers' products, and [4] obtaining registration codes illegally without purchase. To prevent software theft, owners should secure original materials, users should backup regularly, companies should escort terminated employees, and software companies enforce license agreements limiting legal use.Hardware theft

Hardware theftchrispaul8676

╠²

Hardware theft involves stealing computer equipment, such as by opening computers and taking parts. Hardware vandalism is the deliberate destruction of computer equipment, like cutting cables or smashing computers. These crimes pose a threat mainly to businesses, schools, and mobile users, as desktop computers are generally too large to easily steal. Each year, over 600,000 notebooks are estimated to be stolen.Ad

Recently uploaded (20)

FME for Distribution & Transmission Integrity Management Program (DIMP & TIMP)

FME for Distribution & Transmission Integrity Management Program (DIMP & TIMP)Safe Software

╠²

Peoples Gas in Chicago, IL has changed to a new Distribution & Transmission Integrity Management Program (DIMP & TIMP) software provider in recent years. In order to successfully deploy the new software we have created a series of ETL processes using FME Form to transform our gas facility data to meet the required DIMP & TIMP data specifications. This presentation will provide an overview of how we used FME to transform data from ESRIŌĆÖs Utility Network and several other internal and external sources to meet the strict data specifications for the DIMP and TIMP software solutions.OWASP Barcelona 2025 Threat Model Library

OWASP Barcelona 2025 Threat Model LibraryPetraVukmirovic

╠²

Threat Model Library Launch at OWASP Barcelona 2025

https://owasp.org/www-project-threat-model-library/cnc-processing-centers-centateq-p-110-en.pdf

cnc-processing-centers-centateq-p-110-en.pdfAmirStern2

╠²

ū×ū©ūøū¢ ūóūÖūæūĢūōūÖūØ ū¬ūóū®ūÖūÖū¬ūÖ ūæūóū£ 3/4/5 ū”ūÖū©ūÖūØ, ūóūō 22 ūöūŚū£ūżūĢū¬ ūøū£ūÖūØ ūóūØ ūøū£ ūÉūżū®ū©ūĢūÖūĢū¬ ūöūóūÖūæūĢūō ūöūōū©ūĢū®ūĢū¬.╠²ūæūóū£ ū®ūśūŚ ūóūæūĢūōūö ūÆūōūĢū£ ūĢū×ūŚū®ūæ ūĀūĢūŚ ūĢū¦ū£ ū£ūöūżūóū£ūö ūæū®ūżūö ūöūóūæū©ūÖū¬/ū©ūĢūĪūÖū¬/ūÉūĀūÆū£ūÖū¬/ūĪūżū©ūōūÖū¬/ūóū©ūæūÖū¬ ūĢūóūĢūō..

ū×ūĪūĢūÆū£ ū£ūæū”ūó ūżūóūĢū£ūĢū¬ ūóūÖūæūĢūō ū®ūĢūĀūĢū¬ ūöū×ū¬ūÉūÖū×ūĢū¬ ū£ūóūĀūżūÖūØ ū®ūĢūĀūÖūØ: ū¦ūÖūōūĢūŚ ūÉūĀūøūÖ, ūÉūĢūżū¦ūÖ, ūĀūÖūĪūĢū©, ūĢūøū©ūĪūĢūØ ūÉūĀūøūÖ.From Manual to Auto Searching- FME in the Driver's Seat

From Manual to Auto Searching- FME in the Driver's SeatSafe Software

╠²

Finding a specific car online can be a time-consuming task, especially when checking multiple dealer websites. A few years ago, I faced this exact problem while searching for a particular vehicle in New Zealand. The local classified platform, Trade Me (similar to eBay), wasnŌĆÖt yielding any results, so I expanded my search to second-hand dealer sitesŌĆöonly to realise that periodically checking each one was going to be tedious. ThatŌĆÖs when I noticed something interesting: many of these websites used the same platform to manage their inventories. Recognising this, I reverse-engineered the platformŌĆÖs structure and built an FME workspace that automated the search process for me. By integrating API calls and setting up periodic checks, I received real-time email alerts when matching cars were listed. In this presentation, IŌĆÖll walk through how I used FME to save hours of manual searching by creating a custom car-finding automation system. While FME canŌĆÖt buy a car for youŌĆöyetŌĆöit can certainly help you find the one youŌĆÖre after!Artificial Intelligence in the Nonprofit Boardroom.pdf

Artificial Intelligence in the Nonprofit Boardroom.pdfOnBoard

╠²

OnBoard recently partnered with Microsoft Tech for Social Impact on the AI in the Nonprofit Boardroom Survey, an initiative designed to uncover the current and future role of artificial intelligence in nonprofit governance. AI VIDEO MAGAZINE - June 2025 - r/aivideo

AI VIDEO MAGAZINE - June 2025 - r/aivideo1pcity Studios, Inc

╠²

AI VIDEO MAGAZINE - r/aivideo community newsletter ŌĆō Exclusive Tutorials: How to make an AI VIDEO from scratch, PLUS: How to make AI MUSIC, Hottest ai videos of 2025, Exclusive Interviews, New Tools, Previews, and MORE - JUNE 2025 ISSUE -AI vs Human Writing: Can You Tell the Difference?

AI vs Human Writing: Can You Tell the Difference?Shashi Sathyanarayana, Ph.D

╠²

This slide illustrates a side-by-side comparison between human-written, AI-written, and ambiguous content. It highlights subtle cues that help readers assess authenticity, raising essential questions about the future of communication, trust, and thought leadership in the age of generative AI.FIDO Seminar: New Data: Passkey Adoption in the Workforce.pptx

FIDO Seminar: New Data: Passkey Adoption in the Workforce.pptxFIDO Alliance

╠²

FIDO Seminar: New Data: Passkey Adoption in the WorkforcePowering Multi-Page Web Applications Using Flow Apps and FME Data Streaming

Powering Multi-Page Web Applications Using Flow Apps and FME Data StreamingSafe Software

╠²

Unleash the potential of FME Flow to build and deploy advanced multi-page web applications with ease. Discover how Flow Apps and FMEŌĆÖs data streaming capabilities empower you to create interactive web experiences directly within FME Platform. Without the need for dedicated web-hosting infrastructure, FME enhances both data accessibility and user experience. Join us to explore how to unlock the full potential of FME for your web projects and seamlessly integrate data-driven applications into your workflows.Security Tips for Enterprise Azure Solutions

Security Tips for Enterprise Azure SolutionsMichele Leroux Bustamante

╠²

Delivering solutions to Azure may involve a variety of architecture patterns involving your applications, APIs data and associated Azure resources that comprise the solution. This session will use reference architectures to illustrate the security considerations to protect your Azure resources and data, how to achieve Zero Trust, and why it matters. Topics covered will include specific security recommendations for types Azure resources and related network security practices. The goal is to give you a breadth of understanding as to typical security requirements to meet compliance and security controls in an enterprise solution.Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...

Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...NTT DATA Technology & Innovation

╠²

Can We Use Rust to Develop Extensions for PostgreSQL?

(POSETTE: An Event for Postgres 2025)

June 11, 2025

Shinya Kato

NTT DATA Japan CorporationENERGY CONSUMPTION CALCULATION IN ENERGY-EFFICIENT AIR CONDITIONER.pdf

ENERGY CONSUMPTION CALCULATION IN ENERGY-EFFICIENT AIR CONDITIONER.pdfMuhammad Rizwan Akram

╠²

DC Inverter Air Conditioners are revolutionizing the cooling industry by delivering affordable,

energy-efficient, and environmentally sustainable climate control solutions. Unlike conventional

fixed-speed air conditioners, DC inverter systems operate with variable-speed compressors that

modulate cooling output based on demand, significantly reducing energy consumption and

extending the lifespan of the appliance.

These systems are critical in reducing electricity usage, lowering greenhouse gas emissions, and

promoting eco-friendly technologies in residential and commercial sectors. With advancements in

compressor control, refrigerant efficiency, and smart energy management, DC inverter air conditioners

have become a benchmark in sustainable climate control solutionsFIDO Seminar: Perspectives on Passkeys & Consumer Adoption.pptx

FIDO Seminar: Perspectives on Passkeys & Consumer Adoption.pptxFIDO Alliance

╠²

FIDO Seminar: Perspectives on Passkeys & Consumer AdoptionCrypto Super 500 - 14th Report - June2025.pdf

Crypto Super 500 - 14th Report - June2025.pdfStephen Perrenod

╠²

This OrionX's 14th semi-annual report on the state of the cryptocurrency mining market. The report focuses on Proof-of-Work cryptocurrencies since those use substantial supercomputer power to mint new coins and encode transactions on their blockchains. Only two make the cut this time, Bitcoin with $18 billion of annual economic value produced and Dogecoin with $1 billion. Bitcoin has now reached the Zettascale with typical hash rates of 0.9 Zettahashes per second. Bitcoin is powered by the world's largest decentralized supercomputer in a continuous winner take all lottery incentive network.June Patch Tuesday

June Patch TuesdayIvanti

╠²

IvantiŌĆÖs Patch Tuesday breakdown goes beyond patching your applications and brings you the intelligence and guidance needed to prioritize where to focus your attention first. Catch early analysis on our Ivanti blog, then join industry expert Chris Goettl for the Patch Tuesday Webinar Event. There weŌĆÖll do a deep dive into each of the bulletins and give guidance on the risks associated with the newly-identified vulnerabilities. No-Code Workflows for CAD & 3D Data: Scaling AI-Driven Infrastructure

No-Code Workflows for CAD & 3D Data: Scaling AI-Driven InfrastructureSafe Software

╠²

When projects depend on fast, reliable spatial data, every minute counts.

AI Clearing needed a faster way to handle complex spatial data from drone surveys, CAD designs and 3D project models across construction sites. With FME Form, they built no-code workflows to clean, convert, integrate, and validate dozens of data formats ŌĆō cutting analysis time from 5 hours to just 30 minutes.

Join us, our partner Globema, and customer AI Clearing to see how they:

-Automate processing of 2D, 3D, drone, spatial, and non-spatial data

-Analyze construction progress 10x faster and with fewer errors

-Handle diverse formats like DWG, KML, SHP, and PDF with ease

-Scale their workflows for international projects in solar, roads, and pipelines

If you work with complex data, join us to learn how to optimize your own processes and transform your results with FME.You are not excused! How to avoid security blind spots on the way to production

You are not excused! How to avoid security blind spots on the way to productionMichele Leroux Bustamante

╠²

We live in an ever evolving landscape for cyber threats creating security risk for your production systems. Mitigating these risks requires participation throughout all stages from development through production delivery - and by every role including architects, developers QA and DevOps engineers, product owners and leadership. No one is excused! This session will cover examples of common mistakes or missed opportunities that can lead to vulnerabilities in production - and ways to do better throughout the development lifecycle.Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...

Can We Use Rust to Develop Extensions for PostgreSQL? (POSETTE: An Event for ...NTT DATA Technology & Innovation

╠²

You are not excused! How to avoid security blind spots on the way to production

You are not excused! How to avoid security blind spots on the way to productionMichele Leroux Bustamante

╠²

Ad

Dhacaini

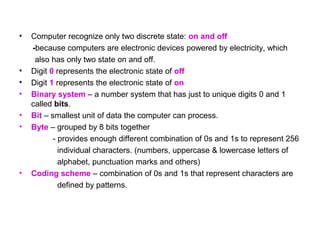

- 2. Computers recognize only two discrete states, on and off. They are electronic devices powered by electricity, and their circuits are designed to distinguish just these two states.
  - Digit 0 represents the electronic state of off.
  - Digit 1 represents the electronic state of on.
  - Binary system: a number system that has just two unique digits, 0 and 1, called bits (short for binary digits).
  - Bit: the smallest unit of data the computer can process.
  - Byte: 8 bits grouped together. A byte provides enough different combinations of 0s and 1s (2^8 = 256) to represent 256 individual characters, such as numbers, uppercase and lowercase letters of the alphabet, punctuation marks, and others.
  - Coding scheme: the set of bit patterns that defines which combination of 0s and 1s represents each character.
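The byte arithmetic above can be checked with a short sketch. It assumes, for illustration only, that each character is stored as its numeric code (the ASCII code for plain English text) in a single 8-bit byte. The helper names `combinations`, `char_to_bits`, and `bits_to_char` are hypothetical.

```python
# Each bit has two states (0 = off, 1 = on), so n bits can form 2**n patterns.
BITS_PER_BYTE = 8


def combinations(bits: int) -> int:
    """Number of distinct 0/1 patterns that `bits` bits can form."""
    return 2 ** bits


def char_to_bits(ch: str) -> str:
    """Return the 8-bit pattern for a character whose code fits in one byte."""
    code = ord(ch)  # numeric code of the character (ASCII for English text)
    if code >= combinations(BITS_PER_BYTE):
        raise ValueError(f"{ch!r} does not fit in one byte")
    return format(code, "08b")  # pad to 8 binary digits


def bits_to_char(pattern: str) -> str:
    """Interpret an 8-bit pattern as a character code."""
    return chr(int(pattern, 2))


print(combinations(1))              # 2
print(combinations(BITS_PER_BYTE))  # 256
print(char_to_bits("A"))            # 01000001
print(bits_to_char("01100001"))     # a
```

One byte is enough for the 256 patterns the slide describes. A character whose code is 256 or higher is rejected, which motivates the larger coding schemes on the next slide.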

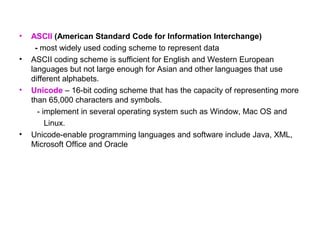

- 3. ASCII (American Standard Code for Information Interchange) has long been the most widely used coding scheme for representing data.
  - Standard 7-bit ASCII defines 128 characters, which is enough for English. Its 8-bit extensions add accented letters for Western European languages. Neither is large enough for Asian and other languages that use different alphabets.
  - Unicode: originally a 16-bit coding scheme with the capacity to represent more than 65,000 characters and symbols (2^16 = 65,536). It has since grown to more than one million possible code points, which are stored using encodings such as UTF-8 and UTF-16.
  - Unicode is implemented in several operating systems, such as Windows, Mac OS, and Linux.
  - Unicode-enabled languages and software include Java, XML, Microsoft Office, and Oracle.
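The gap between ASCII and Unicode can be shown with Python's built-in codecs, a minimal sketch. The function names `fits_ascii`, `fits_16_bits`, and `describe` are hypothetical. The sample characters are escaped so the source stays plain ASCII.

```python
def fits_ascii(text: str) -> bool:
    """True if every character can be encoded in 7-bit ASCII (codes 0-127)."""
    try:
        text.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False


def fits_16_bits(ch: str) -> bool:
    """True if the character's Unicode code point fits in 16 bits (<= 0xFFFF)."""
    return ord(ch) <= 0xFFFF


def describe(ch: str) -> str:
    """Summarize a character's code point and its size in UTF-8 and UTF-16."""
    cp = ord(ch)
    return (f"{ch!r}: U+{cp:04X}, ascii={fits_ascii(ch)}, "
            f"utf8_bytes={len(ch.encode('utf-8'))}, "
            f"utf16_bytes={len(ch.encode('utf-16-le'))}")


# English letter, Western European accent, Chinese character, emoji
for ch in ["A", "\u00e9", "\u4e2d", "\U0001F600"]:
    print(describe(ch), "| 16-bit:", fits_16_bits(ch))
```

Only `"A"` fits in ASCII. The emoji's code point (U+1F600) lies beyond the original 16-bit range, so UTF-16 stores it as two 16-bit units (4 bytes).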

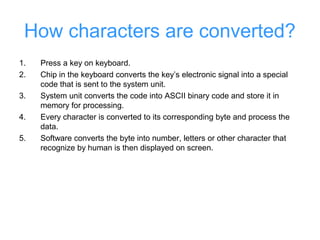

- 5. How are characters converted?
  1. The user presses a key on the keyboard.
  2. A chip in the keyboard converts the key's electronic signal into a special code (a scan code) that is sent to the system unit.
  3. The system unit converts the code into ASCII binary code and stores it in memory for processing.
  4. Every character is converted to its corresponding byte, and the computer processes the data.
  5. Software converts the bytes back into numbers, letters, or other characters that humans recognize, and these are displayed on the screen.
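The five steps can be simulated end to end in a small model.

- The scan-code table is hypothetical and for illustration only. Real keyboards use standardized scan-code sets that this sketch does not reproduce.
- The sketch ignores Shift and Caps Lock, so each key maps straight to an uppercase letter.
- The names `keyboard_chip`, `system_unit`, and `display` are hypothetical stand-ins for the hardware and software stages.

```python
# Hypothetical scan codes, for illustration only (not a real scan-code set).
HYPOTHETICAL_SCAN_CODES = {"H": 0x23, "I": 0x17}

# Reverse lookup assumed to be done by the system unit in this sketch.
SCAN_TO_CHAR = {code: key for key, code in HYPOTHETICAL_SCAN_CODES.items()}


def keyboard_chip(key: str) -> int:
    """Step 2: turn a key press into a code sent to the system unit."""
    return HYPOTHETICAL_SCAN_CODES[key]


def system_unit(scan_code: int, memory: list[int]) -> None:
    """Steps 3-4: convert the code to an ASCII byte and store it in memory."""
    char = SCAN_TO_CHAR[scan_code]
    memory.append(ord(char))  # ord() gives the ASCII code for these letters


def display(memory: list[int]) -> str:
    """Step 5: software turns the stored bytes back into readable characters."""
    return bytes(memory).decode("ascii")


memory = []
for key in "HI":  # Step 1: the user presses H, then I
    system_unit(keyboard_chip(key), memory)

print([format(b, "08b") for b in memory])  # ['01001000', '01001001']
print(display(memory))                     # HI
```

Keeping each stage in its own function mirrors the slide's point that the keyboard, the system unit, and the display software each handle a different representation of the same character.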