Distributed Logging with Kubernetes

- 2. HELLO! I am Gregory Sanderson, Jive DevOps team. You can email me at gregory.sanderson@logmein.com

- 5. 8 DATACENTERS, distributed across the US, Brazil & Europe

- 6. 4000 PODS, distributed across the US, Brazil & Europe

- 7. 10600 CONTAINERS, distributed across the US, Brazil & Europe

- 8. 2TB OF LOGS PER DAY, distributed across the US, Brazil & Europe

- 9. A LOT OF LOGS! How do we store them?!

- 13. SOLUTION: LOG PRODUCERS
  - Docker reconfigured to add log metadata:
    `DOCKER_OPTS="--log-driver=json-file --log-opt env=K8S_CLUSTER --log-opt labels=io.kubernetes.pod.name,io.kubernetes.container.name,io.kubernetes.container.restartCount,io.kubernetes.pod.namespace --log-opt max-size=20m --log-opt max-file=2"`

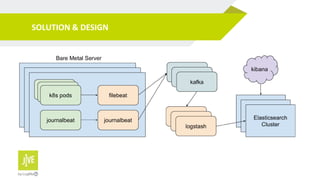
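With the `labels` and `env` options set, Docker's json-file driver writes the selected container labels and environment variables into an `attrs` object on each log record. The minimal Python sketch below shows what a consumer of those files gets out of one record; the sample record, its values, and the helper name `parse_docker_json_line` are hypothetical, chosen only for illustration.

```python
import json

# Label keys requested with --log-opt labels=... in DOCKER_OPTS.
K8S_LABELS = (
    "io.kubernetes.pod.name",
    "io.kubernetes.container.name",
    "io.kubernetes.container.restartCount",
    "io.kubernetes.pod.namespace",
)


def parse_docker_json_line(line: str) -> dict:
    """Parse one json-file record and flatten its Kubernetes metadata.

    Assumes the record carries an "attrs" object, which json-file adds
    when the labels/env log options are configured.
    """
    record = json.loads(line)
    attrs = record.get("attrs", {})
    event = {
        "message": record["log"].rstrip("\n"),
        "stream": record.get("stream"),
        "time": record.get("time"),
        # K8S_CLUSTER comes from --log-opt env=K8S_CLUSTER
        "cluster": attrs.get("K8S_CLUSTER"),
    }
    for key in K8S_LABELS:
        event[key] = attrs.get(key)  # None if the label was not present
    return event


if __name__ == "__main__":
    # Hypothetical record shaped like json-file output with labels/env enabled.
    sample = json.dumps({
        "log": "GET /health 200\n",
        "stream": "stdout",
        "attrs": {
            "K8S_CLUSTER": "us-east",
            "io.kubernetes.pod.name": "billing-api-2035384211-7ci7o",
            "io.kubernetes.container.name": "billing-api",
            "io.kubernetes.container.restartCount": "0",
            "io.kubernetes.pod.namespace": "payments",
        },
        "time": "2019-06-14T18:45:53.000000000Z",
    })
    print(parse_docker_json_line(sample))
```

With `max-size=20m` and `max-file=2`, Docker keeps at most two files per container, so roughly 40 MB of logs per container stay on the node for the shipper to read.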

- 14. SOLUTION: LOG PRODUCERS
  - Filebeat
    - DaemonSet
    - Reads `/var/lib/docker/containers/*/*.log`
    - Pushes logs to Kafka
  - Journalbeat
    - DaemonSet
    - Reads logs from journald (system logs)
    - Pushes logs to Kafka
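The core loop of a node-level shipper like the Filebeat DaemonSet is: glob the container log files, remember how far each file has been read, and forward only new complete lines. A minimal Python sketch of that idea follows; `harvest_once`, the `offsets` dict (standing in for Filebeat's registry), and the `publish` callback (standing in for the Kafka producer) are hypothetical names, and real Filebeat tracks files by inode and handles rotation far more carefully than the size check here.

```python
import glob
import os
import tempfile
from typing import Callable, Dict

# Host path the slide's Filebeat DaemonSet reads (one directory per container).
DOCKER_LOG_GLOB = "/var/lib/docker/containers/*/*.log"


def harvest_once(pattern: str, offsets: Dict[str, int],
                 publish: Callable[[str, str], None]) -> int:
    """Ship every new, complete line from files matching `pattern`, once.

    `offsets` maps path -> bytes already shipped; `publish(path, line)` is
    a stand-in for the Kafka producer. Returns lines published this pass.
    """
    published = 0
    for path in sorted(glob.glob(pattern)):
        start = offsets.get(path, 0)
        if os.path.getsize(path) < start:
            start = 0  # file shrank (rotated or truncated): read from the top
        with open(path, "rb") as fh:
            fh.seek(start)
            while True:
                line = fh.readline()
                if not line.endswith(b"\n"):
                    break  # EOF, or a partial line the writer has not finished
                publish(path, line.decode("utf-8").rstrip("\n"))
                start += len(line)
                published += 1
        offsets[path] = start
    return published


if __name__ == "__main__":
    # Demo on a temporary directory laid out like /var/lib/docker/containers.
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "c1"))
        with open(os.path.join(root, "c1", "c1-json.log"), "w") as fh:
            fh.write('{"log":"hello\\n","stream":"stdout"}\n')
        registry: Dict[str, int] = {}
        pattern = os.path.join(root, "*", "*.log")
        harvest_once(pattern, registry, lambda path, line: print(line))
        # Second pass ships nothing new: the registry already covers the file.
        print(harvest_once(pattern, registry, lambda path, line: print(line)))
```

Holding back a trailing line without a newline matters because Docker may still be writing it; shipping it early would split one log record into two events.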

- 16. SOLUTION: LOG TRANSPORT
  - Kafka
    - Distributed data streaming pipelines
    - Producer & consumer model
    - Fault tolerance for the cluster & consumers
  - Logstash
    - Consumes logs and sends them to the ES cluster
    - All instances are part of the same consumer group
    - Adds metadata to logs
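Putting every Logstash instance in one consumer group means Kafka hands each partition of the log topic to exactly one instance and redistributes partitions when an instance joins or leaves. Kafka supports several assignment strategies; the Python sketch below models only a simplified range strategy, and the partition count and instance names (`logstash-0` and so on) are hypothetical.

```python
from typing import Dict, List


def range_assign(partitions: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Split one topic's partitions among the members of a consumer group.

    Simplified model of Kafka's range strategy: members are sorted, each
    gets a contiguous block, and the first (partitions % members) members
    get one extra partition.
    """
    members = sorted(consumers)
    if not members:
        return {}
    per_member, extra = divmod(partitions, len(members))
    assignment: Dict[str, List[int]] = {}
    start = 0
    for i, member in enumerate(members):
        count = per_member + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


if __name__ == "__main__":
    group = ["logstash-0", "logstash-1", "logstash-2", "logstash-3"]
    print(range_assign(6, group))
    # One instance dies: the group rebalances and the survivors take over
    # its partitions, which is the consumer fault tolerance on the slide.
    print(range_assign(6, group[:3]))
```

One consequence of the model: with more instances than partitions, the extra instances receive no partitions and sit idle, so the topic's partition count caps how far the Logstash tier can scale out.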

- 17. SOLUTION: LOG TRANSPORT
  - `grok { match => { '[attrs][io.kubernetes.pod.name]' => '(?<service>.*)-[0-9]+-(?<pod_id>[A-Za-z0-9]{5})' } }`
  - `mutate { add_field => { 'container' => '%{[attrs][io.kubernetes.container.name]}' 'provider' => 'K8S' } replace => { "hostname" => "%{[beat][name]}.%{site}" } }`

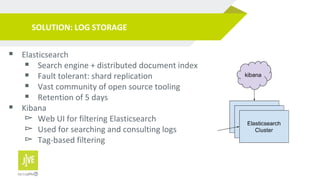
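The grok and mutate filters on slide 17 can be mimicked in a few lines of Python to check what they produce for a given pod name. This is a sketch, not Logstash itself: the event layout, the pod name, and the `enrich` helper are hypothetical. Python's `re` spells named groups `(?P<name>...)` where grok uses `(?<name>...)`. The pattern matches a literal `-` before the numeric hash; an unescaped `.` there would match any character, and because `.*` is greedy the `service` capture would then swallow most of the hash.

```python
import re

# Same pattern as the grok match, in Python's named-group syntax.
POD_NAME = re.compile(r"(?P<service>.*)-[0-9]+-(?P<pod_id>[A-Za-z0-9]{5})")


def enrich(event: dict) -> dict:
    """Apply grok-like parsing and mutate-like fields to a nested event."""
    attrs = event.get("attrs", {})
    m = POD_NAME.search(attrs.get("io.kubernetes.pod.name", ""))
    if m:
        event.update(m.groupdict())  # grok: named captures become fields
    else:
        # grok's default failure tag; the event itself is kept
        event.setdefault("tags", []).append("_grokparsefailure")
    # mutate add_field
    event["container"] = attrs.get("io.kubernetes.container.name")
    event["provider"] = "K8S"
    # mutate replace "%{[beat][name]}.%{site}"; Logstash leaves unresolved
    # references as literal text, which the defaults below imitate.
    beat_name = event.get("beat", {}).get("name", "%{[beat][name]}")
    site = event.get("site", "%{site}")
    event["hostname"] = f"{beat_name}.{site}"
    return event


if __name__ == "__main__":
    print(enrich({
        "attrs": {
            "io.kubernetes.pod.name": "billing-api-2035384211-7ci7o",
            "io.kubernetes.container.name": "billing-api",
        },
        "beat": {"name": "node-7"},
        "site": "us-east",
    }))
```

The pattern assumes a purely numeric pod-template hash, as the slide's `[0-9]+` does; pods whose generated names do not follow that shape end up tagged rather than parsed.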

- 18. SOLUTION: LOG STORAGE
  - Elasticsearch
    - Search engine + distributed document index
    - Fault tolerant: shard replication
    - Vast community of open-source tooling
    - Retention of 5 days
  - Kibana
    - Web UI for filtering Elasticsearch
    - Used for searching and consulting logs
    - Tag-based filtering
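A 5-day retention is usually enforced by deleting whole daily indices rather than individual documents. The slide does not say how indices are named, so this Python sketch assumes one index per day using Logstash's classic `logstash-YYYY.MM.dd` naming; the helper `expired_indices` is hypothetical, and the names it returns could then be removed with Elasticsearch's delete index API or a tool such as Curator.

```python
from datetime import date, datetime, timedelta
from typing import Iterable, List


def expired_indices(names: Iterable[str], today: date,
                    retention_days: int = 5,
                    prefix: str = "logstash-") -> List[str]:
    """Return daily indices that fall outside the retention window.

    Assumes one index per day named <prefix>YYYY.MM.DD. Today's index
    counts as day 1, so with 5 days kept, anything dated before
    today - 4 days is expired.
    """
    cutoff = today - timedelta(days=retention_days - 1)
    expired = []
    for name in names:
        if not name.startswith(prefix):
            continue  # leave unrelated indices (e.g. .kibana) alone
        try:
            day = datetime.strptime(name[len(prefix):], "%Y.%m.%d").date()
        except ValueError:
            continue  # prefix matches but it is not a daily index
        if day < cutoff:
            expired.append(name)
    return sorted(expired)


if __name__ == "__main__":
    existing = ["logstash-2019.06.14", "logstash-2019.06.10",
                "logstash-2019.06.09", ".kibana"]
    print(expired_indices(existing, date(2019, 6, 14)))
```

Dropping whole indices is cheap for Elasticsearch, whereas deleting old documents from a shared index forces expensive merges; that is the main reason time-based index naming pairs well with a fixed retention window.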

- 19. FUTURE PLANS: MOVE ES TO AWS
  - Current cluster
    - 3 bare-metal servers
    - 56 CPUs
    - 256 GB RAM
    - 2 disks (2 TB + 4 TB)
  - AWS cluster
    - AWS managed Elasticsearch
    - 10 i3.2xlarge instances
    - 64 GB RAM
    - NVMe 1.9 TB disks
    - 2 AZs
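Sizing either cluster comes down to simple arithmetic: daily ingest times retention times the number of copies of each shard, compared with the raw disk available. The Python sketch below makes that explicit. The 2 TB/day and 5-day figures come from the slides; one replica, a 1:1 index-to-raw size ratio, the disk figures being per server, and one 1.9 TB NVMe disk per instance are all assumptions, and the helper names are hypothetical.

```python
def required_storage_tb(daily_ingest_tb: float, retention_days: int,
                        replicas: int, index_to_raw_ratio: float) -> float:
    """Disk needed to hold `retention_days` of logs, counting replica copies.

    index_to_raw_ratio is an assumption: the on-disk size of indexed data
    relative to the raw log volume.
    """
    return daily_ingest_tb * retention_days * (1 + replicas) * index_to_raw_ratio


def raw_capacity_tb(nodes: int, disk_tb_per_node: float) -> float:
    """Total raw disk across data nodes, before filesystem or ES overhead."""
    return nodes * disk_tb_per_node


if __name__ == "__main__":
    # 2 TB/day and 5-day retention are from the slides; replicas=1 and the
    # 1.0 ratio are assumptions.
    needed = required_storage_tb(2.0, 5, replicas=1, index_to_raw_ratio=1.0)
    # Assumes "2 Disks (2TB + 4TB)" is per server and one 1.9 TB disk per i3.2xlarge.
    current = raw_capacity_tb(3, 2.0 + 4.0)
    aws = raw_capacity_tb(10, 1.9)
    print(f"needed ~{needed:.1f} TB, current {current:.1f} TB, AWS {aws:.1f} TB")
```

The index-to-raw ratio dominates the result and is worth measuring on the real cluster, since Elasticsearch's on-disk size depends on mappings and compression.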

- 21. THANKS! Any questions? You can find me at gregory.sanderson@logmein.com
