Frustration-Reduced Spark: DataFrames and the Spark Time-Series Library

- 1. Frustration-Reduced Spark DataFrames and the Spark Time-Series Library Ilya Ganelin

- 2. Why are we here? ’éŚ Spark for quick and easy batch ETL (no streaming) ’éŚ Actually using data frames ’éŚ Creation ’éŚ Modification ’éŚ Access ’éŚ Transformation ’éŚ Time Series analysis ’éŚ https://github.com/cloudera/spark-timeseries ’éŚ http://blog.cloudera.com/blog/2015/12/spark-ts-a-new-library- for-analyzing-time-series-data-with-apache-spark/

- 3. Why Spark? ’éŚ Batch/micro-batch processing of large datasets ’éŚ Easy to use, easy to iterate, wealth of common industry-standard ML algorithms ’éŚ Super fast if properly configured ’éŚ Bridges the gap between the old (SQL, single machine analytics) and the new (declarative/functional distributed programming)

- 4. Why not Spark? ’éŚ Breaks easily with poor usage or improperly specified configs ’éŚ Scaling up to larger datasets 500GB -> TB scale requires deep understanding of internal configurations, garbage collection tuning, and Spark mechanisms ’éŚ While there are lots of ML algorithms, a lot of them simply donŌĆÖt work, donŌĆÖt work at scale, or have poorly defined interfaces / documentation

- 5. Scala ’éŚ Yes, I recommend Scala ’éŚ Python API is underdeveloped, especially for ML Lib ’éŚ Java (until Java 8) is a second class citizen as far as convenience vs. Scala ’éŚ Spark is written in Scala ŌĆō understanding Scala helps you navigate the source ’éŚ Can leverage the spark-shell to rapidly prototype new code and constructs ’éŚ http://www.scala- lang.org/docu/files/ScalaByExample.pdf

- 6. Why DataFrames? ’éŚ Iterate on datasets MUCH faster ’éŚ Column access is easier ’éŚ Data inspection is easier ’éŚ groupBy, join, are faster due to under-the-hood optimizations ’éŚ Some chunks of ML Lib now optimized to use data frames

- 7. Why not DataFrames? ’éŚ RDD API is still much better developed ’éŚ Getting data into DataFrames can be clunky ’éŚ Transforming data inside DataFrames can be clunky ’éŚ Many of the algorithms in ML Lib still depend on RDDs

- 8. Creation ’éŚ Read in a file with an embedded header ’éŚ http://tinyurl.com/zc5jzb2

- 9. ’éŚ Create a DF ’éŚ Option A ŌĆō Map schema to Strings, convert to Rows ’éŚ Option B ŌĆō default type (case-classes or tuples) DataFrame Creation

- 10. ’éŚ Option C ŌĆō Define the schema explicitly ’éŚ Check your work with df.show() DataFrame Creation

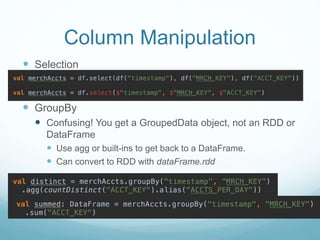

- 11. Column Manipulation ’éŚ Selection ’éŚ GroupBy ’éŚ Confusing! You get a GroupedData object, not an RDD or DataFrame ’éŚ Use agg or built-ins to get back to a DataFrame. ’éŚ Can convert to RDD with dataFrame.rdd

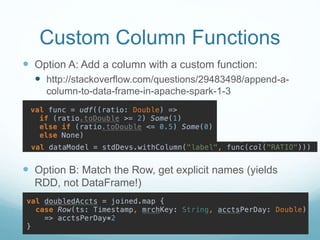

- 12. Custom Column Functions ’éŚ Option A: Add a column with a custom function: ’éŚ http://stackoverflow.com/questions/29483498/append-a- column-to-data-frame-in-apache-spark-1-3 ’éŚ Option B: Match the Row, get explicit names (yields RDD, not DataFrame!)

- 13. Row Manipulation ’éŚ Filter ’éŚ Range: ’éŚ Equality: ’éŚ Column functions

- 14. Joins ’éŚ Option A (inner join) ’éŚ Option B (explicit) ’éŚ Join types: ŌĆ£innerŌĆØ, ŌĆ£outerŌĆØ, ŌĆ£left_outerŌĆØ, ŌĆ£right_outerŌĆØ, ŌĆ£leftsemiŌĆØ ’éŚ DataFrame joins benefit from Tungsten optimizations

- 15. Null Handling ’éŚ Built in support for handling nulls in data frames. ’éŚ Drop, fill, replace ’éŚ https://spark.apache.org/docs/1.6.0/api/java/org/apache /spark/sql/DataFrameNaFunctions.html

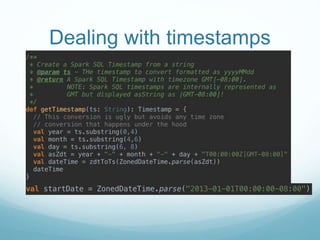

- 17. Spark-TS ’éŚ https://github.com/cloudera/spark-timeseries ’éŚ Uses Java 8 ZonedDateTime as of 0.2 release:

- 19. Why Spark TS? ’éŚ Each row of the TimeSeriesRDD is a keyed vector of doubles (indexed by your time index) ’éŚ Easily and efficiently slice data-sets by time: ’éŚ Generate statistics on the data:

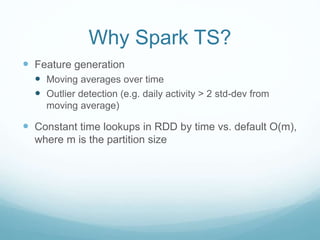

- 20. Why Spark TS? ’éŚ Feature generation ’éŚ Moving averages over time ’éŚ Outlier detection (e.g. daily activity > 2 std-dev from moving average) ’éŚ Constant time lookups in RDD by time vs. default O(m), where m is the partition size

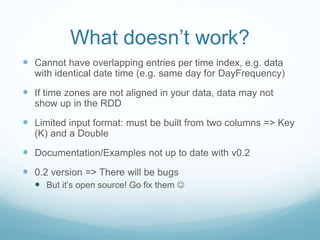

- 21. What doesnŌĆÖt work? ’éŚ Cannot have overlapping entries per time index, e.g. data with identical date time (e.g. same day for DayFrequency) ’éŚ If time zones are not aligned in your data, data may not show up in the RDD ’éŚ Limited input format: must be built from two columns => Key (K) and a Double ’éŚ Documentation/Examples not up to date with v0.2 ’éŚ 0.2 version => There will be bugs ’éŚ But itŌĆÖs open source! Go fix them ’üŖ

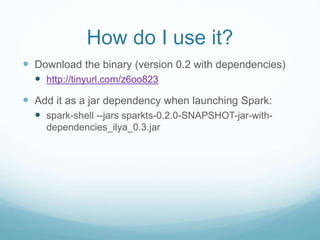

- 22. How do I use it? ’éŚ Download the binary (version 0.2 with dependencies) ’éŚ http://tinyurl.com/z6oo823 ’éŚ Add it as a jar dependency when launching Spark: ’éŚ spark-shell --jars sparkts-0.2.0-SNAPSHOT-jar-with- dependencies_ilya_0.3.jar

- 23. What else? ’éŚ Save your work => Write completed datasets to file ’éŚ Work on small data first, then go to big data ’éŚ Create test data to capture edge cases ’éŚ LMGTFY

- 24. By popular demand: screen spark-shell --driver-memory 100g --num-executors 60 --executor-cores 5 --master yarn-client --conf "spark.executor.memory=20gŌĆØ --conf "spark.io.compression.codec=lz4" --conf "spark.shuffle.consolidateFiles=true" --conf "spark.dynamicAllocation.enabled=false" --conf "spark.shuffle.manager=tungsten-sort" --conf "spark.akka.frameSize=1028" --conf "spark.executor.extraJavaOptions=-Xss256k -XX:MaxPermSize=128m - XX:PermSize=96m -XX:MaxTenuringThreshold=2 -XX:SurvivorRatio=6 - XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:CMSInitiatingOccupancyFraction=75 - XX:+UseCMSInitiatingOccupancyOnly -XX:+AggressiveOpts - XX:+UseCompressedOops"

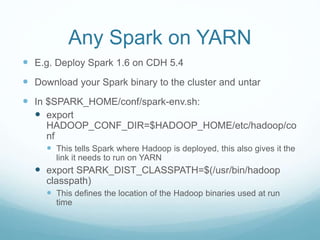

- 25. Any Spark on YARN ’éŚ E.g. Deploy Spark 1.6 on CDH 5.4 ’éŚ Download your Spark binary to the cluster and untar ’éŚ In $SPARK_HOME/conf/spark-env.sh: ’éŚ export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop/co nf ’éŚ This tells Spark where Hadoop is deployed, this also gives it the link it needs to run on YARN ’éŚ export SPARK_DIST_CLASSPATH=$(/usr/bin/hadoop classpath) ’éŚ This defines the location of the Hadoop binaries used at run time

- 26. References ’éŚ http://spark.apache.org/docs/latest/programming-guide.html ’éŚ http://spark.apache.org/docs/latest/sql-programming-guide.html ’éŚ http://tinyurl.com/leqek2d (Working With Spark, by Ilya Ganelin) ’éŚ http://blog.cloudera.com/blog/2015/03/how-to-tune-your-apache- spark-jobs-part-1/ (by Sandy Ryza) ’éŚ http://blog.cloudera.com/blog/2015/03/how-to-tune-your-apache- spark-jobs-part-2/ (by Sandy Ryza) ’éŚ http://www.slideshare.net/ilganeli/frustrationreduced-spark- dataframes-and-the-spark-timeseries-library