Heuristic search algorithm in ai and machine learning

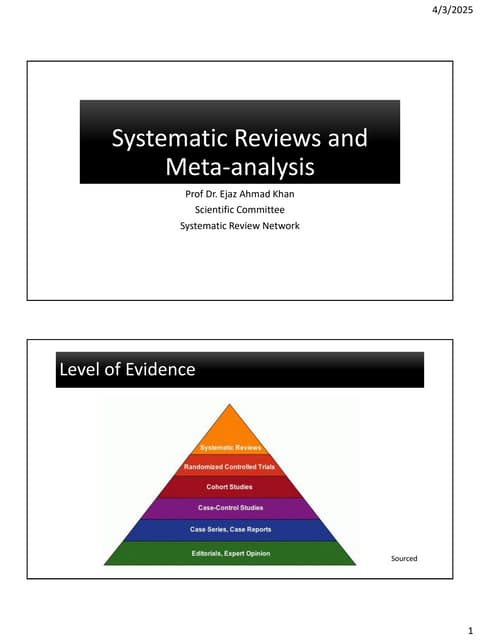

- 1. . Heuristic Search Techniques Artificial Intelligence Final Year IT AS2024 Information Technology Department College of Science and Technology Royal University of Bhutan

- 2. . Outline ’é¦ Adversarial Search ’é¦ Types of Games in AI ’é¦ Game Theory ’é¦ Terminologies ’é¦ Pure Strategy ’é¦ Mix Strategy ’é¦ References 10/21/2024 2 Artificial Intelligence

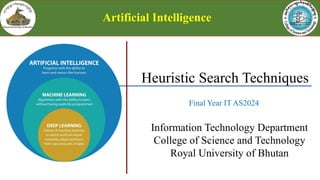

- 3. . Adversarial Search: game playing ’üČ It is a search, where we examine the problem which arises when we try to plan ahead of the world and other agents are planning against us. ’āś In previous topics, we have studied the search strategies which are only associated with a single agent ’ā╝ Which aims to find the solution that often expressed in the form of a sequence of actions. ’āś However, there might be some situations where more than one agent is searching for the solution in the same search space. ’ā╝ This situation usually occurs in game playing. ’ā╝ Adversarial search problems are called games. ’üČ Therefore, Searches in which two or more players with conflicting goals are trying to explore the same search space for the solution, are called adversarial searches, often known as Games. 10/21/2024 CST @ 3 Artificial Intelligence

- 4. . Adversarial Search ’üČ It is a game-playing technique where the agents are surrounded by a competitive environments. ’āś They occur in multiagent competitive environments. ’āś There is an opponent we canŌĆÖt control planning against us. ’üČ Game Vs. Search algorithms ’āś Optimal solution is not a sequence of actions but a strategy (policy). ’ā╝ If opponent does A, agent does B, else if opponent does C, agent does D, etc. ’āś Tedious and fragile if hard-coded (i.e., implemented with rules) ’āś Good news is games are modeled as search problems and use heuristic evaluation functions. ’ā╝ Two main factors which help to model and solve games in AI are: 1. Search Problem 2. Heuristic evaluation function 10/21/2024 CST @ 4 Artificial Intelligence

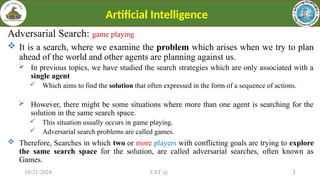

- 5. . Key Concepts ŌĆó Game Tree: A tree-like structure where nodes represent game states and edges represent moves. The root is the current game state, and the branches represent possible future states. ŌĆó Minimax Algorithm: A classic adversarial search algorithm used to find the optimal move for a player, assuming that the opponent also plays optimally. The algorithm explores the entire game tree by simulating possible moves, assigning a value to each outcome, and choosing the move that maximizes the player's minimum gain (or minimizes the opponent's maximum gain). ŌĆó Maximizer and Minimizer: In a two-player game, one player tries to maximize their score (Maximizer), while the other tries to minimize the MaximizerŌĆÖs score (Minimizer). In the game tree, the MaximizerŌĆÖs nodes choose the maximum value from child nodes, while the MinimizerŌĆÖs nodes choose the minimum. ŌĆó Alpha-Beta Pruning: An optimization of the Minimax algorithm that reduces the number of nodes evaluated in the game tree. It prunes branches that cannot influence the final decision, thus speeding up the search process. 10/21/2024 CST @ 5 Artificial Intelligence

- 6. . Classic Approaches ŌĆó Minimax and Alpha-Beta Pruning: these are foundational techniques in traditional game- playing AI. They are effective for games with relatively small state spaces and well-defined rules, such as tic-tac-toe or chess. ŌĆó Heuristic Evaluation Functions: In complex games where itŌĆÖs impractical to search the entire game tree (e.g., chess), heuristic evaluation functions estimate the value of a game state. These functions are often based on expert knowledge, such as material advantage in chess. 10/21/2024 CST @ 6 Artificial Intelligence

- 7. . Modern Approaches ŌĆó Monte Carlo Tree Search (MCTS): A probabilistic search algorithm that is particularly effective in games with large and complex state spaces, like Go. MCTS builds a search tree based on random sampling of the game space, gradually focusing on the most promising moves. ŌĆó Deep Learning and Reinforcement Learning: AI systems like AlphaGo use deep neural networks and reinforcement learning to evaluate game states and make decisions. These systems can learn strategies by playing against themselves millions of times, improving with each iteration. ŌĆó Neural Networks in Game Playing: Deep learning models, particularly convolutional neural networks (CNNs), can be trained to evaluate game states and predict the best moves. In AlphaGo, for example, two neural networks were used: one to evaluate the board state (value network) and another to suggest moves (policy network). 10/21/2024 CST @ 7 Artificial Intelligence

- 8. . Applications Beyond Games The principles of adversarial search and game playing extend to various real-world applications: ŌĆó Cybersecurity: Adversarial search can be used in scenarios like intrusion detection, where the defenderŌĆÖs actions are modelled against potential attackers. ŌĆó Business Strategy: Companies can model competitive markets as adversarial environments, optimizing pricing, product placement, and marketing strategies. ŌĆó Robotics: In scenarios where multiple robots or agents must compete or cooperate, adversarial search techniques can optimize their strategies. 10/21/2024 CST @ 8 Artificial Intelligence

- 9. . Challenges and Limitations ŌĆó State Space Complexity: As the complexity of the game increases, the state space (all possible configurations of the game) can become prohibitively large, making exhaustive search impractical. ŌĆó Computational Resources: Techniques like Alpha-Beta Pruning and MCTS reduce the need for exhaustive searches, but they still require significant computational resources, especially in real-time applications. ŌĆó Generalization: While game-playing AI can achieve superhuman performance in specific games, generalizing these strategies to other domains or more complex environments remains a challenge. 10/21/2024 CST @ 9 Artificial Intelligence

- 10. . Games ’üČ Games are considered as the hard topic in AI ’āś Games are Big deal in AI. ’āś Games are interesting to AI because they are too hard to solve. ’ā╝ Example: chess has a branching factor of 35, with 35100 nodes 10154 search spaces. ’üČ In games, we need to make some decision even when the optimal decision is infeasible. ’üČ Types of games in AI: 10/21/2024 CST @ 10 Artificial Intelligence Deterministic Non-deterministic Perfect information Chess, Checkers, go, Othello Backgammon, monopoly Imperfect information Battleships, blind, tic-tac-toe Bridge, poker, scrabble, nuclear war

- 11. . Types of games in AI ’üČ Perfect Information ’āś A game with the perfect information is that in which agents can look into the complete board. ’āś Agents have all the information about the game, and they can see each other moves. ’ā╝ Examples ’āĀ Chess, Checkers, Go, etc. ’üČ Imperfect Information ’āś In a game if agents do not have all information about the game and not aware with whatŌĆÖs going on, such type of games are called the game with imperfect information, ’ā╝ Example ’āĀ tic-tac-toe, Battleship, blind, Bridge, etc. 10/21/2024 CST @ 11 Artificial Intelligence

- 12. . Types of games in AI ’üČ Deterministic games ’āś Deterministic games are those games which follow a strict pattern and set of rules for the games, and there is no randomness associated with them. ’ā╝ Examples are chess, Checkers, Go, tic-tac-toe, etc. ’üČ Non-deterministic games ’āś Non-deterministic are those games which have various unpredictable events and has a factor of chance or luck. ’ā╝ This factor of chance or luck is introduced by either dice or cards. ’ā╝ These are random, and each action response is not fixed. ’ā╝ Such games are also called as stochastic games. 10/21/2024 CST @ 12 Artificial Intelligence

- 13. . Game Theory ’üČ Mathematically, Adversarial search is based on the concept of ŌĆśGame Theory.ŌĆÖ ’āś According to game theory, a game is played between two players. ’āś To complete the game, one has to win the game and the other looses automatically.ŌĆÖ ’üČ Game theory is the mathematical model which is used for making decision. ’üČ Generally decision making situations can be classified into three different categories: 1. Deterministic situation 2. Probabilistic situation 3. Uncertainty situation ’üČ Terminologies of Game Theory: 10/21/2024 CST @ 13 Artificial Intelligence

- 14. . Terminologies of Game Theory 1. Players ’āĀ Player A and Player B. 2. Strategies ’āĀ The course of actions to be taken by a player. 2.1 Pure Strategy 2.2 Mix Strategy 3. Payoff matrix Two players A and B with three strategies 10/21/2024 CST @ 14 Artificial Intelligence Player selects only one strategy: P1 = 0, P2 = 1, P3 = 0 ’āĀ total prob. = 1 Player selects more strategies: P1 = 0.45, P2 = 0.55, P3 = 0 ’āĀ total prob. = 1 20 10 22 30 40 38 15 18 25 1 2 3 Player B Player A 3 2 1 ’āĀ If player A selects strategy 1 and player B selects strategy 2, the outcome is 10, etc. ’āĀTherefore, the payoff matrix is the outcomes of all the possible combination of the strategies ’āĀ If the outcome is Positive, it is gain to Player A and lost to player B ’āĀ Similarly, if the outcome is Negative, it is gain to play B but lost to Play A

- 15. . Terminologies of Game Theory 4. Maximin Principle ’āĀ Maximizes the minimum guaranteed gain of Player A. 5. Minimax Principle ’āĀ Minimizes the maximum losses. 6. Saddle Point ’āĀ Maximin value = Minimax value 7. Value of the game ’āĀ If the game has a saddle point, then the value of the cell at the saddle point is called the value of the game. 8. Two-person zero-sum game ’āĀIn a game with two players, if the gain of one player is equal to the loss of another player then that game is called two-person zero-sum game. 10/21/2024 CST @ 15 Artificial Intelligence 20 10 22 30 40 38 15 18 25 1 2 3 Player B Player A 2 If both the players select second strategy (let say - 40), Player B gain and the same amount is lost by Player A. This is called Two-person zero-sum game 3 1

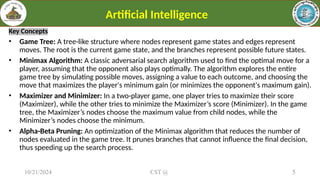

- 16. . Game with Pure Strategies ’üČ Find the optimum strategies of the players in the following games: 10/21/2024 CST @ 16 Artificial Intelligence 25 20 35 50 45 55 58 40 42 1 2 3 Player B Player A 1 3 2 Step 1’āĀ Calculate Row MIN: 20 45 40 Maximin = 45 Step 2’āĀ Calculate Column MAX: 58 45 55 Minimax = 45 ’āĀ Player A is called Maximin player and Player B is called Minimax player ’āĀ Therefore: ’āĀ Player A is maximizing it's minimum guaranteed gain. ’āĀ Player B is minimizing it's maximum lost. ’āĀ Intersecting point is 45 = Saddle Point ’āĀ minimax = maximin ’āĀ 45 = 45 Therefore, value of the game V = 45 Hence, the game has a saddle point at the cell corresponding to Row 2 and Column 2. Optimal probabilities: A [P1, P2, P3] = A [0,1,0] B [q1, q2, q3] = B [0,1,0] Thus, this game is of pure strategy Optimum strategy of A ’āĀ 2 and optimum strategy of B ’āĀ 2

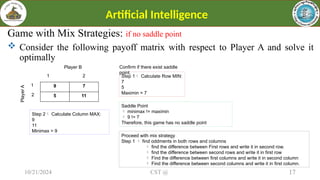

- 17. . Game with Mix Strategies: if no saddle point ’üČ Consider the following payoff matrix with respect to Player A and solve it optimally 10/21/2024 CST @ 17 Artificial Intelligence 1 2 Player B Player A 1 2 Step 1’āĀ Calculate Row MIN: 7 5 Maximin = 7 Step 2’āĀ Calculate Column MAX: 9 11 Minimax = 9 Saddle Point ’āĀ minimax != maximin ’āĀ 9 != 7 Therefore, this game has no saddle point Proceed with mix strategy Step 1 ’āĀ find oddments in both rows and columns ’āĀ find the difference between First rows and write it in second row. ’āĀ find the difference between second rows and write it in first row ’āĀ Find the difference between first columns and write it in second column ’āĀ Find the difference between second columns and write it in first column. 9 7 5 11 Confirm if there exist saddle point.

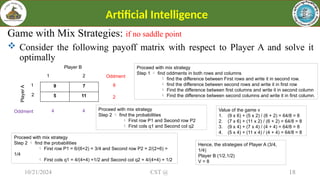

- 18. . Game with Mix Strategies: if no saddle point ’üČ Consider the following payoff matrix with respect to Player A and solve it optimally 10/21/2024 CST @ 18 Artificial Intelligence 1 2 Player B Player A 1 2 Proceed with mix strategy Step 1 ’āĀ find oddments in both rows and columns ’āĀ find the difference between First rows and write it in second row. ’āĀ find the difference between second rows and write it in first row ’āĀ Find the difference between first columns and write it in second column ’āĀ Find the difference between second columns and write it in first column. 9 7 5 11 Proceed with mix strategy Step 2 ’āĀ find the probabilities ’āĀ First row P1 and Second row P2 ’āĀ First cols q1 and Second col q2 Oddment 6 2 Oddment 4 4 Proceed with mix strategy Step 2 ’āĀ find the probabilities ’āĀ First row P1 = 6/(6+2) = 3/4 and Second row P2 = 2/(2+6) = 1/4 ’āĀ First cols q1 = 4/(4+4) =1/2 and Second col q2 = 4/(4+4) = 1/2 Value of the game v 1. (9 x 6) + (5 x 2) / (6 + 2) = 64/8 = 8 2. (7 x 6) + (11 x 2) / (6 + 2) = 64/8 = 8 3. (9 x 4) + (7 x 4) / (4 + 4) = 64/8 = 8 4. (5 x 4) + (11 x 4) / (4 + 4) = 64/8 = 8 Hence, the strategies of Player A (3/4, 1/4) Player B (1/2,1/2) V = 8

- 19. . Solve following questions ’üČ Solve the following two-person zero-sum game with the following 3x2 payoff matrix of player A. 10/21/2024 CST @ 19 Artificial Intelligence B1 B2 Player B Player A A1 A2 9 2 8 6 6 4 A3 What is optimum strategy of A and B? What is Value of game? Player B Player A -5 2 0 7 5 6 4 8 4 0 2 -3 A1 A2 A3 B1 B2 B3 B4 Player B Player A 2 -1 8 -4 -3 4 -8 -4 0 1 -6 -2 q1 q2 q3 P1 P2 P3 q4

- 20. . Solve following questions ’üČ Find range of p and q that will make the payoff matrix given below: 10/21/2024 CST @ 20 Artificial Intelligence Player B Player A 2 4 7 10 7 q 4 p 8 A1 A2 A3 B1 B2 B3 Find R-min 2 7 4 Max-min = 7 Find C-max 10 7 8 Min-Max = 7 Range 7 Ōēż q Ōēż 8 Range 4 Ōēż p Ōēż 7 Optimum strategy of B is B2 Optimum strategy of A is A2 Game of value = 7

- 21. . References Lucci, S., & Kopec, D. (2016). Artificial Intelligence in the 21st Century: a Living Introduction (2nd ed.). Mercury Learning and Information. https://www.javatpoint.com/ai-adversarial-search https://www.youtube.com/watch?v=fSuqTgnCVRg https://www.youtube.com/watch?v=YgqC8_WOZyY 10/21/2024 CST @ 21 Artificial Intelligence

![.

Game with Pure Strategies

’üČ Find the optimum strategies of the players in the following games:

10/21/2024 CST @ 16

Artificial Intelligence

25 20 35

50 45 55

58 40 42

1 2 3

Player B

Player

A

1

3

2

Step 1’āĀ Calculate Row MIN:

20

45

40

Maximin = 45

Step 2’āĀ Calculate Column MAX:

58

45

55

Minimax = 45

’āĀ Player A is called Maximin player and Player B

is called Minimax player

’āĀ Therefore:

’āĀ Player A is maximizing it's minimum

guaranteed gain.

’āĀ Player B is minimizing it's maximum

lost.

’āĀ Intersecting point is 45 = Saddle Point

’āĀ minimax = maximin

’āĀ 45 = 45

Therefore, value of the game V = 45

Hence, the game has a saddle point at the cell corresponding to Row 2 and Column 2.

Optimal probabilities:

A [P1, P2, P3] = A [0,1,0]

B [q1, q2, q3] = B [0,1,0]

Thus, this game is of pure strategy

Optimum strategy of A ’āĀ 2 and optimum strategy of B ’āĀ 2](https://image.slidesharecdn.com/lecture-2-241021034934-c1f50ec4/85/Heuristic-search-algorithm-in-ai-and-machine-learning-16-320.jpg)