How to fix JavaScript SEO problems at scale

- 1. How to fix JavaScript SEO problems at scale. Serge Bezborodov, JetOctopus. slideshare.net/sergebezborodov, @sergebezborodov

- 2. What is the difference between modern JavaScript websites and old-school websites?

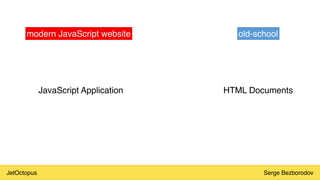

- 3. Modern JavaScript website = a JavaScript application; old-school website = HTML documents

- 4. Applications have bugs

- 5. BUGS = Google can’t render the page properly

- 6. JavaScript SEO is mostly about making sure pages are rendered properly

- 7. JavaScript SEO = QA

- 8. Let’s go through the most common JavaScript bugs

- 9. JS Bug #1: Page can’t be fully rendered. Test cases: the page takes a long time to load; the page can’t be fully rendered (you don’t see the footer); the page becomes fully loaded only after some interaction (scroll, click).
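The three Bug #1 test cases can be automated once you have HTML snapshots of a page. The sketch below is minimal and rests on two assumptions. First, the snapshots come from a headless browser you already run. Second, every page template ends with a `<footer>` element. The function name `checkFullRender` and the 5-second budget are hypothetical.

```javascript
// Sketch of the Bug #1 test cases as checks on two HTML snapshots.
// Assumption: snapshots come from your own headless browser, and the site
// template ends every page with a <footer> element.
const FOOTER_RE = /<footer[\s>]/i;

/**
 * @param {object} snap
 * @param {string} snap.initialHtml    - HTML right after load, no interaction
 * @param {string} snap.interactedHtml - HTML after a simulated scroll/click
 * @param {number} snap.loadMs         - time until the initial snapshot
 * @param {number} [maxLoadMs=5000]    - hypothetical budget; tune per site
 * @returns {string[]} problems found (empty array = nothing detected)
 */
function checkFullRender(snap, maxLoadMs = 5000) {
  const problems = [];
  if (snap.loadMs > maxLoadMs) {
    problems.push(`slow load: ${snap.loadMs} ms`);
  }
  const footerInitially = FOOTER_RE.test(snap.initialHtml);
  const footerAfterInteraction = FOOTER_RE.test(snap.interactedHtml);
  if (!footerInitially && footerAfterInteraction) {
    // Googlebot doesn't click or scroll like a user, so this part may never be seen.
    problems.push("footer appears only after interaction");
  } else if (!footerInitially) {
    problems.push("footer never rendered");
  }
  return problems;
}

const result = checkFullRender({
  initialHtml: "<body><main>Catalog</main></body>",
  interactedHtml: "<body><main>Catalog</main><footer>Contacts</footer></body>",
  loadMs: 7200,
});
console.log(result); // two problems: slow load, footer only after interaction
```

A missing footer is only a cheap proxy for "rendering stopped part-way". On templates without a `<footer>`, pick another element that is always last on the page.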

- 10. JS Bug #2: Incomplete content. Test cases: the page is missing some content blocks; JavaScript removes some content (I’m not joking).

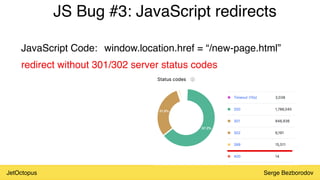
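Both Bug #2 test cases come down to comparing the raw server HTML with the rendered HTML, block by block. Here is a minimal sketch. The block names and regex markers in `EXPECTED_BLOCKS` are hypothetical examples for a single product template, and regex matching is a rough stand-in for a real DOM query.

```javascript
// Sketch: find content blocks that exist in the raw HTML but disappear after
// rendering (JavaScript removed them), or that are missing from both.
// The names and patterns below are hypothetical; define them per template.
const EXPECTED_BLOCKS = {
  breadcrumbs: /class="[^"]*\bbreadcrumbs\b/i,
  description: /id="product-description"/i,
  reviews: /id="reviews"/i,
};

function compareBlocks(rawHtml, renderedHtml, blocks = EXPECTED_BLOCKS) {
  const report = [];
  for (const [name, re] of Object.entries(blocks)) {
    const inRaw = re.test(rawHtml); // no "g" flag, so test() is stateless
    const inRendered = re.test(renderedHtml);
    if (inRaw && !inRendered) report.push(`${name}: removed by JavaScript`);
    else if (!inRendered) report.push(`${name}: missing`);
  }
  return report;
}

console.log(compareBlocks(
  '<div id="product-description"></div><section id="reviews"></section>',
  '<div id="product-description"></div>',
));
```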

- 11. JS Bug #3: JavaScript redirects. JavaScript code: `window.location.href = "/new-page.html"` redirects without a 301/302 server status code.

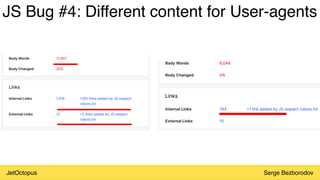
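A first pass over crawled HTML can flag inline scripts that look like the redirect above. This is a minimal, regex-based sketch and its pattern list is an assumption. It will miss redirect targets assembled at runtime, so treat hits as candidates and confirm them by comparing the requested URL with the final URL in a headless browser.

```javascript
// Sketch: flag inline-script lines that look like client-side redirects (Bug #3).
// Patterns are assumptions: `location = ...`, `location.href = ...`,
// `location.replace(...)`, `location.assign(...)`; "==" comparisons are skipped.
const JS_REDIRECT_PATTERNS = [
  /\blocation(?:\.href)?\s*=(?!=)/,
  /\blocation\.(?:replace|assign)\s*\(/,
];

function findJsRedirects(html) {
  const hits = [];
  const scriptRe = /<script\b[^>]*>([\s\S]*?)<\/script>/gi;
  for (const [, body] of html.matchAll(scriptRe)) {
    for (const line of body.split("\n")) {
      if (JS_REDIRECT_PATTERNS.some((re) => re.test(line))) hits.push(line.trim());
    }
  }
  return hits;
}

console.log(findJsRedirects('<script>window.location.href = "/new-page.html";</script>'));
```

Where a redirect is really needed, the usual fix is to move it to the server and return a 301 (permanent) or 302 (temporary) status code.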

- 12. JS Bug #4: Different content for different user agents
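One way to test Bug #4 is to request the same URL with a Googlebot user-agent and with a browser user-agent, then compare the responses. The minimal sketch below compares raw server responses, not the rendered DOM, and needs Node 18+ for the global `fetch`. The browser UA string and the 10% text-length tolerance are illustrative assumptions. Sites that verify Googlebot by reverse DNS may still answer a spoofed user-agent differently than the real crawler.

```javascript
// Sketch for Bug #4: compare title and visible-text size between two responses.
const USER_AGENTS = {
  googlebot: "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
  // Example desktop Chrome string; any current browser UA will do.
  browser: "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
};

function summarize(html) {
  const title = (html.match(/<title[^>]*>([\s\S]*?)<\/title>/i) || [])[1] || "";
  const text = html
    .replace(/<(script|style)\b[\s\S]*?<\/\1>/gi, " ") // drop code, keep text
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
  return { title: title.trim(), textLength: text.length };
}

// Returns human-readable differences; `tolerance` is an assumed relative threshold.
function diffVariants(htmlA, htmlB, tolerance = 0.1) {
  const a = summarize(htmlA);
  const b = summarize(htmlB);
  const diffs = [];
  if (a.title !== b.title) diffs.push(`title: "${a.title}" vs "${b.title}"`);
  const maxLen = Math.max(a.textLength, b.textLength, 1);
  if (Math.abs(a.textLength - b.textLength) / maxLen > tolerance) {
    diffs.push(`text length: ${a.textLength} vs ${b.textLength}`);
  }
  return diffs;
}

// Network part: fetch both variants, then diff them (Node 18+).
async function checkUserAgentParity(url) {
  const [asBot, asBrowser] = await Promise.all(
    [USER_AGENTS.googlebot, USER_AGENTS.browser].map((ua) =>
      fetch(url, { headers: { "User-Agent": ua } }).then((res) => res.text()),
    ),
  );
  return diffVariants(asBot, asBrowser);
}
```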

- 13. JS Bug #5: SSR (server-side rendering) is broken: the text-to-HTML ratio is close to 0

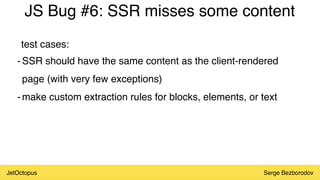
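The text-to-HTML ratio here is simply (visible text characters) / (total HTML characters) of the server response. When SSR silently falls back to an empty app shell, almost every byte is markup or script, and the ratio drops toward 0. The sketch below assumes a minimum ratio of 0.05, which is an arbitrary example threshold rather than a standard. The regex-based text extraction is a rough approximation.

```javascript
// Sketch for Bug #5: text-to-HTML ratio of a server response.
function visibleText(html) {
  return html
    .replace(/<(script|style|noscript)\b[\s\S]*?<\/\1>/gi, " ") // not visible text
    .replace(/<!--[\s\S]*?-->/g, " ")                            // HTML comments
    .replace(/<[^>]+>/g, " ")                                    // remaining tags
    .replace(/\s+/g, " ")
    .trim();
}

function textToHtmlRatio(html) {
  if (html.length === 0) return 0;
  return visibleText(html).length / html.length;
}

// minRatio is a hypothetical threshold; calibrate it on pages you know are healthy.
function looksLikeBrokenSsr(html, minRatio = 0.05) {
  return textToHtmlRatio(html) < minRatio;
}

const shell = '<html><head><script src="/app.js"></script></head><body><div id="root"></div></body></html>';
console.log(textToHtmlRatio(shell)); // 0: nothing but markup
```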

- 14. JS Bug #6: SSR misses some content. Test cases: the SSR version should have the same content as the client-rendered page (with very few exceptions); create custom extraction rules for blocks, elements, or text.

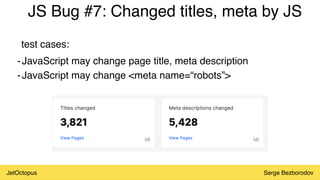
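Custom extraction rules can be applied identically to the SSR response and the client-rendered HTML, and any rule whose result differs gets reported. The rule names and regexes in `EXTRACTION_RULES` are hypothetical examples. A crawler's own extraction feature (XPath or CSS selectors) would replace them in practice.

```javascript
// Sketch for Bug #6: run the same extraction rules on SSR and rendered HTML.
// Each rule's first capture group is the extracted value (hypothetical rules).
const EXTRACTION_RULES = {
  h1: /<h1[^>]*>([\s\S]*?)<\/h1>/i,
  price: /<span[^>]*class="[^"]*\bprice\b[^"]*"[^>]*>([\s\S]*?)<\/span>/i,
  // Assumes rel comes before href inside the <link> tag.
  canonical: /<link[^>]*rel="canonical"[^>]*href="([^"]*)"/i,
};

function extract(html, rules = EXTRACTION_RULES) {
  const out = {};
  for (const [name, re] of Object.entries(rules)) {
    const m = html.match(re);
    out[name] = m ? m[1].replace(/\s+/g, " ").trim() : null; // null = not found
  }
  return out;
}

function diffSsrVsRendered(ssrHtml, renderedHtml, rules = EXTRACTION_RULES) {
  const ssr = extract(ssrHtml, rules);
  const rendered = extract(renderedHtml, rules);
  return Object.keys(rules)
    .filter((name) => ssr[name] !== rendered[name])
    .map((name) => ({ rule: name, ssr: ssr[name], rendered: rendered[name] }));
}

console.log(diffSsrVsRendered("<h1>Shoes</h1>", '<h1>Shoes</h1><span class="price">$10</span>'));
```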

- 15. JS Bug #7: Titles and meta tags changed by JS. Test cases: JavaScript may change the page title and meta description; JavaScript may change `<meta name="robots">`.

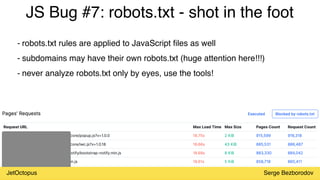

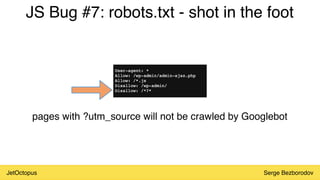

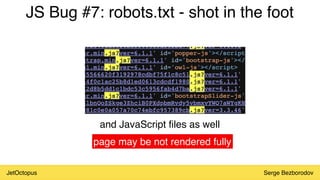

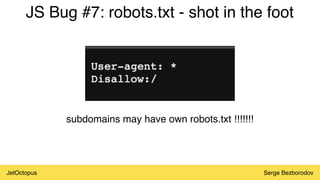
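The Bug #7 test cases can be checked by extracting `<title>`, meta description and meta robots from both the raw and the rendered HTML, then reporting every change. This is a minimal sketch. Its attribute parsing is regex-based and assumes quoted attribute values, although it does handle either attribute order.

```javascript
// Sketch for Bug #7: compare title, meta description and meta robots
// between the raw server HTML and the rendered HTML.
function parseAttrs(tag) {
  const attrs = {};
  for (const m of tag.matchAll(/([\w-]+)\s*=\s*(?:"([^"]*)"|'([^']*)')/g)) {
    attrs[m[1].toLowerCase()] = m[2] ?? m[3]; // double- or single-quoted value
  }
  return attrs;
}

function headSignals(html) {
  const title = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  const signals = { title: title ? title[1].trim() : null, description: null, robots: null };
  for (const [tag] of html.matchAll(/<meta\b[^>]*>/gi)) {
    const a = parseAttrs(tag);
    const name = (a.name || "").toLowerCase();
    if (name === "description" || name === "robots") signals[name] = a.content ?? null;
  }
  return signals;
}

function diffHeadSignals(rawHtml, renderedHtml) {
  const raw = headSignals(rawHtml);
  const rendered = headSignals(renderedHtml);
  const changes = [];
  for (const key of ["title", "description", "robots"]) {
    if (raw[key] !== rendered[key]) changes.push({ key, raw: raw[key], rendered: rendered[key] });
  }
  return changes;
}
```

Pay special attention to robots changes. Google's JavaScript SEO documentation notes that when the raw HTML contains `noindex`, Google may skip rendering entirely. Removing `noindex` with JavaScript may therefore not work.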

- 16. JS Bug #8: robots.txt, shooting yourself in the foot. robots.txt rules apply to JavaScript files as well; subdomains may have their own robots.txt (pay close attention here!!!); never analyze robots.txt by eye alone, use tools!

- 17. JS Bug #8: robots.txt, shooting yourself in the foot. Pages with `?utm_source` will not be crawled by Googlebot.

- 18. JS Bug #8: robots.txt, shooting yourself in the foot. Neither will JavaScript files, so the page may not be fully rendered.

- 19. JS Bug #8: robots.txt, shooting yourself in the foot. Subdomains may have their own robots.txt!!!
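To check robots.txt "with tools, not by eye", the matching logic itself is small. This sketch follows the rules in RFC 9309 and Google's robots.txt documentation:

- `*` matches any sequence of characters.
- A trailing `$` anchors the end of the URL.
- The longest matching rule wins, and Allow wins a tie.

Two simplifications apply. The `rules` array is assumed to be the group that already applies to Googlebot, and user-agent group selection and percent-encoding normalization are not implemented. The example rules are hypothetical.

```javascript
// Sketch of robots.txt rule matching (RFC 9309 / Google's documented behavior).
function ruleToRegExp(path) {
  const anchored = path.endsWith("$");
  const body = (anchored ? path.slice(0, -1) : path)
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&")) // escape regex metacharacters
    .join(".*");
  return new RegExp("^" + body + (anchored ? "$" : ""));
}

function isAllowed(rules, url) {
  const { pathname, search } = new URL(url);
  const target = pathname + search; // rules match path + query string
  let best = null;
  for (const rule of rules) {
    if (rule.path === "") continue; // "Disallow:" with no value blocks nothing
    if (!ruleToRegExp(rule.path).test(target)) continue;
    const len = rule.path.length;
    if (!best || len > best.len || (len === best.len && rule.type === "allow")) {
      best = { len, type: rule.type };
    }
  }
  return best === null || best.type === "allow";
}

// robots.txt is per host: a script on a CDN subdomain follows that subdomain's file.
function robotsTxtUrlFor(url) {
  return new URL("/robots.txt", url).href;
}

// Hypothetical rules for www.example.com: block tracking URLs and, by accident, all scripts.
const googlebotRules = [
  { type: "disallow", path: "/*?utm_source" },
  { type: "disallow", path: "/*.js$" },
];
console.log(isAllowed(googlebotRules, "https://www.example.com/shoes?utm_source=mail")); // false
console.log(isAllowed(googlebotRules, "https://www.example.com/static/app.js")); // false
console.log(robotsTxtUrlFor("https://static.example.com/app.js")); // https://static.example.com/robots.txt
```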

- 20. The common way of doing JS QA: “I checked a bunch of template pages, everything works fine.”

- 21. Nope, check more.

- 22. Check more = crawl more: not hundreds of pages but tens of thousands

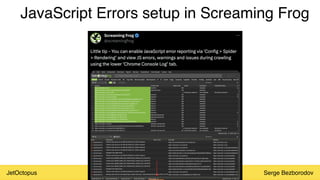
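Checking tens of thousands of pages needs automation with a cap on parallel requests, so the site isn't overloaded. This is a minimal sketch. It assumes you supply an async `checkUrl(url)` function (hypothetical), for example one that renders a page and runs the bug test cases listed above. There are no retries or rate limiting beyond the concurrency cap.

```javascript
// Sketch: run an async per-URL check over many URLs, at most `concurrency` at a time.
// Results keep the input order; a thrown error is recorded instead of aborting the run.
async function runChecks(urls, checkUrl, concurrency = 20) {
  const results = new Array(urls.length);
  let next = 0; // shared cursor; safe because JavaScript runs workers on one thread

  async function worker() {
    while (next < urls.length) {
      const i = next++;
      try {
        results[i] = { url: urls[i], ok: true, value: await checkUrl(urls[i]) };
      } catch (err) {
        results[i] = { url: urls[i], ok: false, error: String(err) };
      }
    }
  }

  const workers = Array.from({ length: Math.min(concurrency, urls.length) }, () => worker());
  await Promise.all(workers);
  return results;
}
```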

- 23. Setting up JavaScript error reporting in Screaming Frog

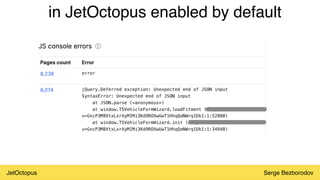

- 24. In JetOctopus, it’s enabled by default

- 25. Bonus part

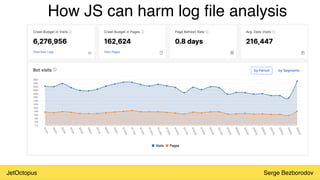

- 26. How JS can harm log file analysis

- 27. These are not pages crawled by Googlebot but JS requests

- 28. Make sure you exclude JS requests from your log file analysis

- 29. Crawl budget is the number of pages crawled, not the number of JS requests made while rendering pages
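Excluding JS requests from log analysis can be as simple as classifying each Googlebot hit before counting. This sketch assumes the Apache/Nginx "combined" log format. The resource extension list and `API_PREFIXES` are hypothetical and must match your own site. Matching "Googlebot" in the user-agent is not proof of Googlebot, so verify IPs separately.

```javascript
// Sketch: count Googlebot hits by type so crawl budget is measured in pages,
// not in the JS/CSS/API requests made while those pages were rendered.
const LINE_RE = /^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+)[^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"/;
const RESOURCE_EXT_RE = /\.(?:js|mjs|css|json|map|png|jpe?g|gif|svg|webp|woff2?)$/i;
const API_PREFIXES = ["/api/", "/graphql"]; // hypothetical XHR/fetch endpoints

function classify(requestPath) {
  const pathname = requestPath.split("?")[0];
  if (RESOURCE_EXT_RE.test(pathname)) return "resource";
  if (API_PREFIXES.some((prefix) => pathname.startsWith(prefix))) return "api";
  return "page";
}

function countGooglebotHits(logLines) {
  const counts = { page: 0, resource: 0, api: 0 };
  for (const line of logLines) {
    const m = LINE_RE.exec(line); // m[4] = request path, m[6] = user-agent
    if (!m || !/Googlebot/i.test(m[6])) continue;
    counts[classify(m[4])] += 1;
  }
  return counts;
}
```

Only `counts.page` is relevant to crawl budget. The `resource` and `api` buckets show how much extra load rendering creates.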

- 30. Conclusions: JavaScript is already here and won’t go away anytime soon; JavaScript SEO is all about troubleshooting; you can do almost nothing by yourself; build strong communication with the development team; without tools, it’s impossible to do this at scale.

- 31. Thank you! @sergebezborodov. Extended JavaScript Crawler: JetOctopus.com, 7-day free trial