HPC Advisory Council Stanford Conference 2016

- 1. Scaling Deep Learning Bryan Catanzaro @ctnzr

- 2. Bryan Catanzaro What do we want AI to do? Drive us to work Serve drinks? Help us communicate °ďÖúÎŇĂÇąµÍ¨ Keep us organized Help us find things Guide us to content

- 3. Bryan Catanzaro OCR-based Translation App Baidu IDL hello

- 4. Bryan Catanzaro Face Analysis Baidu IDL Gender Age Range Ethnicity Mood

- 5. Bryan Catanzaro Medical Diagnostics App Baidu BDL AskADoctor can assess 520 different diseases, representing ~90 percent of the most common medical problems.

- 6. Bryan Catanzaro Image Captioning Baidu IDL A yellow bus driving down a road with green trees and green grass in the background. Living room with white couch and blue carpeting. Room in apartment gets some afternoon sun.

- 7. Bryan Catanzaro Image Q&A Baidu IDL Sample questions and answers

- 8. Bryan Catanzaro Natural User Interfaces ? Goal: Make interacting with computers as natural as interacting with humans ? AI problems: ¨C Speech recognition ¨C Emotional recognition ¨C Semantic understanding ¨C Dialog systems ¨C Speech synthesis

- 9. Bryan Catanzaro Machine learning for computer vision (c. 2009) ˇ°Please put away the coffee mugs!ˇ±

- 10. Bryan Catanzaro Machine learning for computer vision ˇ°Mugˇ± Machine Learning Cleanup-bot! (Woohoo!)

- 11. Bryan Catanzaro AI applications are hardˇ

- 12. Bryan Catanzaro AI applications are hardˇ Machine Learning can solve challenging problems --- but it is a lot of work! This eventually worked ~95% of the time.

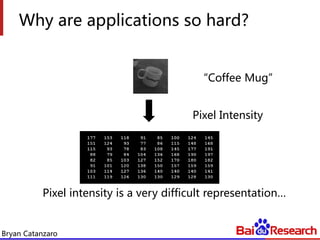

- 13. Bryan Catanzaro Why are applications so hard? ˇ°Coffee Mugˇ± Pixel Intensity Pixel intensity is a very difficult representationˇ

- 14. Bryan Catanzaro pixel 1 pixel 2 Coffee Mug Not Coffee Mug Why are applications so hard? pixel 1 pixel 2 Pixel Intensity[72 160] -+ + - + -

- 15. Bryan Catanzaro Why are applications so hard? + pixel 1 pixel 2 - + + - - + - + +Coffee Mug Not Coffee Mug- + pixel 1 pixel 2 - + + - - + - + Is this a Coffee Mug? Learning Algorithm

- 16. Bryan Catanzaro Features + handle? cylinder? - + +- - + - + +Coffee Mug Not Coffee Mug- cylinder?handle? Is this a Coffee Mug? Learning Algorithm + handle? cylinder? - + - - + - ++

- 17. Bryan Catanzaro Machine learning in practice ˇ°Mug ˇ± Machine Learning (Classifier) Feature Extraction

- 18. Bryan Catanzaro Machine learning in practice ˇ°Mug ˇ± Machine Learning (Classifier) Feature Extraction Prior Knowledge Experience

- 19. Bryan Catanzaro Machine learning in practice ? Enormous amounts of research time spent inventing new features. Idea CodeTest Hack up in Matlab Run on workstation Think really hardˇ

- 20. Bryan Catanzaro Learning features ? Deep learning: learn multiple stages of features to achieve end goal. Features Features ˇ°Mugˇ±?Classifier Pixels

- 21. Bryan Catanzaro Learning features ? ˇ°Neural networksˇ± are one way to represent features Features Features ˇ°Mugˇ±? Classif ier Pixels y = g(W x) x y W

- 22. Bryan Catanzaro Learning features ? Deep learning: learn multiple stages of features to achieve end goal ˇ°Mugˇ±? Pixels Features Features Classifier W3 W2 W1

- 23. Bryan Catanzaro Why Deep Learning? 1. Scale Matters ¨C Bigger models usually win 2. Data Matters ¨C More data means less cleverness necessary 3. Productivity Matters ¨C Teams with better tools can try out more ideas Data & Compute Accuracy Deep Learning Many previou methods

- 24. Bryan Catanzaro Scaling up ? Make progress on AI by focusing on systems ¨C Make models bigger ¨C Tackle more data ¨C Reduce research cycle time ? Accelerate large-scale experiments

- 25. Bryan Catanzaro Exascale ? Strong scaling important but difficult ¨C Weak scaling over time as datasets increase ? We run our experiments on 8-128 GPUs ? Exascale likely important for running many ˇ°smallˇ± experiments

- 26. Bryan Catanzaro Training Deep Neural Networks ? Computation dominated by dot products ? Multiple inputs, multiple outputs, batch means GEMM ¨C Compute bound ? Convolutional layers even more compute bound

- 27. Bryan Catanzaro Computational Characteristics ? High arithmetic intensity ¨C Arithmetic operations / byte of data ¨C O(Exaflops) / O(Terabytes) : 10^6 ? In contrast, many other ML training jobs are O(Petaflops)/O(Petabytes) = 10^0 ? Medium size datasets ¨C Generally fit on 1 node ¨C HDFS, fault tolerance, disk I/O not bottlenecks Training 1 model: ~10 Exaflops

- 28. Bryan Catanzaro Deep Neural Network training is HPC Idea CodeTest ? Turnaround time is key ? Use most efficient hardware ¨C Parallel, heterogeneous computing ¨C Fast interconnect (PCIe, Infiniband) ? Push strong scalability ¨C Models and data have to be of commensurate size ? This is all standard HPC!

- 29. Bryan Catanzaro Training: Stochastic Gradient Descent ? Simple algorithm ¨C Add momentum to power through local minima ¨C Compute gradient by backpropagation ? Operates on minibatches ¨C This makes it a GEMM problem instead of GEMV ? Choose minibatches stochastically ¨C Important to avoid memorizing training order ? Difficult to parallelize ¨C Prefers lots of small steps ¨C Increasing minibatch size not always helpful

- 30. Bryan Catanzaro Limitations of batching Error Iterations Batch size = ? Batch size = 2? Spending 2x the work picking a direction DoesnˇŻt reduce iteration count by 2x

- 31. Bryan Catanzaro SVAIL Infrastructure 1 http://www.tyan.com FT77CB7079 Service EngineerˇŻs Manual NVIDIA GeForce GTX Titan X Titan X x8 Mellanox Interconnect ? Software: CUDA, MPI, Majel (SVAIL internal library) ? Hardware:

- 32. Bryan Catanzaro Node Architecture ? All pairs of GPUs communicate simultaneously over PCIe Gen 3 x16 ? Groups of 4 GPUs form Peer to Peer domain ? Avoid moving data to CPUs or across QPI

- 33. Bryan Catanzaro Parallelism Model Parallel Data Parallel MPI_Allreduce() Training Data Training Data

- 34. Bryan Catanzaro Speech Recognition: Traditional ASR ? Getting higher performance is hard ? Improve each stage by engineering Accuracy Traditional ASR Data + Model Size Expert engineering. Adam Coates

- 35. Bryan Catanzaro Speech recognition: Traditional ASR ? Huge investment in features for speech! ¨C Decades of work to get very small improvements Spectrogram MFCC Flux

- 36. Bryan Catanzaro Speech Recognition 2: Deep Learning! ? Since 2011, deep learning for features AcousticModel HMM Language Model Transcription ˇ°The quick brown fox jumps over the lazy dog.ˇ±

- 37. Bryan Catanzaro Speech Recognition 2: Deep Learning! ? With more data, DL acoustic models perform better than traditional models Accuracy Traditional ASR Data + Model Size DL V1 for Speech

- 38. Bryan Catanzaro Speech Recognition 3: ˇ°Deep Speechˇ± ? End-to-end learning ˇ°The quick brown fox jumps over the lazy dog.ˇ± Transcription

- 39. Bryan Catanzaro Speech Recognition 3: ˇ°Deep Speechˇ± ? We believe end-to-end DL works better when we have big models and lots of data Accuracy Traditional ASR Data + Model Size DL V1 for Speech Deep Speech

- 40. Bryan Catanzaro End-to-end speech with DL ? Deep neural network predicts characters directly from audio . . . . . . T H _ E ˇ D O G

- 41. Bryan Catanzaro Recurrent Network ? RNNs model temporal dependence ? Various flavors used in many applications ¨C LSTM, GRU, Bidirectional, ˇ ¨C Especially sequential data (time series, text, etc.) ? Sequential dependence complicates parallelism

- 42. Bryan Catanzaro Connectionist Temporal Classification

- 43. Bryan Catanzaro warp-ctc ? Recently open sourced our CTC implementation ? Efficient, parallel CPU and GPU backend ? 100-400X faster than other implementations ? Apache license, C interfacehttps://github.com/baidu-research/warp-ctc

- 44. Bryan Catanzaro Training sets ? Train on ~1? years of data (and growing) ? English and Mandarin ? End-to-end deep learning is key to assembling large datasets ? Datasets drive accuracy

- 45. Bryan Catanzaro All-reduce ? We implemented our own all-reduce out of send and receive ? Several algorithm choices based on size ? Careful attention to affinity and topology

- 46. Bryan Catanzaro Scalability ? Batch size is hard to increase ¨C algorithm, memory limits ? Performance at small batch sizes (32, 64) leads to scalability limits

- 47. Bryan Catanzaro Performance for RNN training ? 55% of GPU FMA peak using a single GPU ? ~48% of peak using 8 GPUs in one node ? Weak scaling very efficient, albeit algorithmically challenged 1 2 4 8 16 32 64 128 256 512 1 2 4 8 16 32 64 128 TFLOP/s Number of GPUs Typical training run one node multi node

- 48. Bryan Catanzaro Precision ? FP16 mostly works ¨C Use FP32 for softmax and weight updates ? More sensitive to labeling error 1 10 100 1000 10000 100000 1000000 10000000 100000000 -31 -30 -29 -28 -27 -26 -25 -24 -23 -22 -21 -20 -19 -18 -17 -16 -15 -14 -13 -12 -11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1 0 Count Magnitude Weight Distribution

- 49. Bryan Catanzaro Determinism ? Determinism very important ? So much randomness, hard to tell if you have a bug ? Networks train despite bugs, although accuracy impaired ? Reproducibility is important ¨C For the usual scientific reasons ¨C Progress not possible without reproducibility ? We use synchronous SGD

- 50. Bryan Catanzaro Conclusion ? Deep Learning is solving many hard problems ? Training deep neural networks is an HPC problem ? Scaling brings AI progress!

- 51. Bryan Catanzaro Thanks ? Andrew Ng, Adam Coates, Awni Hannun, Patrick LeGresley ˇ and all of SVAIL Bryan Catanzaro @ctnzr

![Bryan Catanzaro

pixel 1

pixel 2

Coffee Mug

Not Coffee Mug

Why are applications so hard?

pixel 1

pixel 2

Pixel Intensity[72 160]

-+

+

-

+

-](https://image.slidesharecdn.com/hpc-advisory-council-catanzaro-160225192831/85/HPC-Advisory-Council-Stanford-Conference-2016-14-320.jpg)