Human error, brains and how agility helps

- 1. Human Error, Brains and How Agility/Scrum Helps. ScrumDay Barcelona, 2nd December 2021. Guy Maslen

- 2. Levi Strauss SAP rollout. Planned rollout for a new ERP system. Budget: $5m. Expenditure: $192.5m. Source: "Why Your IT Project May Be Riskier Than You Think" by Bent Flyvbjerg and Alexander Budzier, HBR, September 2011

- 3. Piper Alpha. Platform built in 1976. In operation for 12 years. Pump under repair. Repair not finished at shift end. Tag-out lock-out fails. Another repair scheduled at the same time. Pump assumed okay by the new shift. Pump started, and fails. Explosion. Gas continues to be pumped. 167 lives lost.

- 4. Black Swan Events. It is something that is assumed to be impossible. It is something that will have a big impact. It is something that happens (an event). After the event, it is obvious. Nassim Nicholas Taleb (2007)

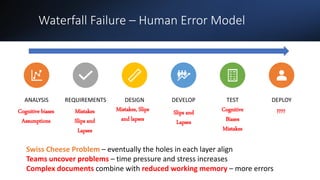

- 5. Swiss Cheese Model. We have processes to reduce risk. Those processes can have holes. The holes are due to human error. Each stage is like a slice of Swiss cheese. When the holes line up, a disaster happens. "Human Error", James Reason, 1991

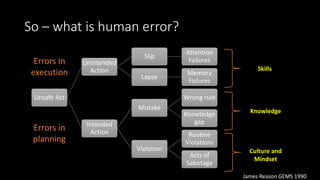
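The Swiss cheese model can be sketched numerically. This is a minimal illustration under stated assumptions and is not part of the talk. It assumes each layer fails ("has a hole") independently, with a fixed probability, and the values in the hypothetical `layers` list are made up. The chance that every hole lines up on a single attempt is the product of the layer failure probabilities. The chance that this happens at least once over n attempts is 1 - (1 - p)^n, which is why the holes "eventually" align.

```python
# Minimal sketch of the Swiss cheese model, ASSUMING each defensive layer
# fails ("has a hole") independently with a fixed probability.
from math import prod


def p_all_holes_align(hole_probs):
    """Probability that every layer fails on a single attempt."""
    return prod(hole_probs)


def p_disaster_over(attempts, hole_probs):
    """Probability that the holes line up at least once in `attempts` tries."""
    p = p_all_holes_align(hole_probs)
    return 1 - (1 - p) ** attempts


# Hypothetical per-layer hole probabilities (illustrative, not from the talk).
layers = [0.1, 0.05, 0.2, 0.1]

print(p_all_holes_align(layers))         # small chance on any one attempt
print(p_disaster_over(10_000, layers))   # but likely over many attempts
```

Each extra layer multiplies the per-attempt probability by its own hole probability. Real layers are rarely independent, though. Shared causes such as fatigue, stress or fear can open holes in several slices at once, so treat the result as an optimistic estimate.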

- 6. So, what is human error? Unsafe acts are either unintended actions or intended actions. Unintended actions: slips (attention failures) and lapses (memory failures). Intended actions: mistakes (wrong rule, knowledge gap) and violations (routine violations, acts of sabotage). These fall into three domains: Skills (errors in execution), Knowledge (errors in planning), and Culture and Mindset. James Reason, GEMS, 1990

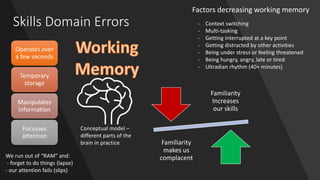

- 7. Skills Domain Errors. Working memory operates over a few seconds: it provides temporary storage, manipulates information and focusses attention. We run out of "RAM", and then we forget to do things (lapses) or our attention fails (slips). Familiarity increases our skills, but familiarity also makes us complacent. Factors decreasing working memory: context switching; multi-tasking; getting interrupted at a key point; getting distracted by other activities; being under stress or feeling threatened; being hungry, angry, late or tired; the ultradian rhythm (40+ minutes). (Diagram: conceptual model of the different parts of the brain in practice.)

- 8. Countering Skill Domain Errors. Simplify: small slices, clear criteria, shared understanding. Focus: fewer distractions, fewer interruptions, frequent breaks. Structure: use a pattern, work in pairs or mobs, work to a cadence.

- 9. Knowledge Domain Errors. Experts have hypotheses to test; experts have solutions to apply. ANCHORING: the first thing we hear, we hold on to. CONFIRMATION: we "cherry pick" data selectively to support our beliefs. GROUP THINK: we are reluctant to go against the group. SUNK COST: we have invested time or effort that we are reluctant to abandon. DUNNING-KRUGER: misplaced confidence through inexperience. (Cynefin diagram by Snowded, own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=33783436)

- 10. Brains, Knowledge and Belief. Facts that contradict our beliefs drive a threat response (1). Being right increases our status in a group; being wrong decreases our status in a group (2). We process information in parallel, through cognition and through pattern matching. Pattern matching (threat/reward) is faster than cognition. Threats reduce working memory, causing loss of skill and an emotional reaction. (Diagram: prefrontal cortex, hippocampus and amygdala; reward drives approach, threat drives avoid.) (1) "Neural correlates of maintaining one's political beliefs in the face of counter evidence", Jonas Kaplan, 2016. (2) "SCARF: a brain-based model for collaborating with and influencing others", David Rock, 2009.

- 11. Countering Knowledge Domain Errors. Awareness: discuss bias; discuss errors; discuss brains; we might be wrong! Experiment: define hypotheses; list assumptions; do research spikes; uncover what is right! Structure: use groups; check in/out; avoid drama; get feedback.

- 12. Waterfall Failure: Human Error Model. Phases: Analysis, Requirements, Design, Develop, Test, Deploy. Each phase adds its own errors: cognitive biases, assumptions, mistakes, slips and lapses, and unknowns (????). Swiss cheese problem: eventually the holes in each layer align. As teams uncover problems, time pressure and stress increase. Complex documents combine with reduced working memory, producing more errors.

- 13. Agile Model: Bow-tie "defense". Causes: requirements errors, design errors, analysis errors, execution errors and testing errors lead to two hazards: "We build the wrong thing" and "We build the thing wrong". Barriers on likelihood: Lean Canvas, User Stories, Walking Skeleton, Pairing, TDD and automation (1). Consequences: cost overruns, delayed delivery, benefits not realised. Barriers on consequences: incremental delivery, iterative delivery, highest value first. No longer a "Swiss cheese" chain of errors: barriers/controls act on both the likelihood of error and the possible consequences. (1) Plus bias training: "Software Defect Prevention Using Human Error Theory", Fuqun Huang, Bin Liu.

- 15. What is culture? Cultural Web (Johnson and Scholes): Paradigm, Power Structures, Organisation Structures, Control Systems, Stories, Rituals and Routines, Symbols. How we do things round here: formally and informally based. SCARF applies to change: What's my Status? What Certainty do I have? What Autonomy do I have? What are my Relationships? Am I treated Fairly?

- 16. Fear. "Fear invites wrong figures. Bearers of bad news fare badly. To keep his job, anyone may present to his boss only good news." (W. Edwards Deming). Fear of censure or punishment. Fear of missing out on a reward. Fear of loss of status. Fear of loss of relationships. Fear of loss of autonomy. Fear of being treated unfairly. Fear drives violations: we do not act as we should, out of fear.

- 17. Psychological Safety and Teams. Teams that reported the most mistakes were also the best performing; this led Google to Amy Edmondson's paper "Psychological Safety and Learning Behavior in Work Teams". Not the same as TRUST: "If I speak up, it will not impact negatively on my STATUS or RELATIONSHIPS." Willingness to speak up about slips, lapses, mistakes and violations. Open to feedback on things that impact on our status, ego and identity. It's NOT about "safe spaces"; it is about behaving in a safe way. The Scrum Guide suggests "courage"; this is how we apply it.

- 18. The Safety Culture "Ladder". No belief or trust; an environment of punishing, blaming and controlling the workforce. Failures are caused by individuals; no blame, but responsibility; the workforce needs to be educated to follow processes. A command-and-control environment; lots of metrics and graphs flow upward, but they do not represent what is happening. Management walkabouts; workforce involvement is promoted or ruled by specialist staff who are obsessed with statistics. Management in respectful partnership with the workforce: management has to fix systemic failures, and the workforce has to identify them. After Hudson, 2001, "Safety Culture: Theory and Practice".

- 19. Gilbert Enoka, All Blacks mental skills coach. We use STRUCTURE to recover our MINDSET to apply our SKILLSET. "Cabin crews, arm doors and cross-check." Scrum (or XP, or Kanban) provides the STRUCTURE that helps to preserve the agile mindset: are we being generative? Are we being psychologically safe?

- 20. Countering Mindset Domain Errors. Behaviors: ownership, psychological safety. Culture: generative, servant leader. Structure: rituals and routines, stories and symbols.

- 21. Wrap Up. As coaches, we can teach our teams about human error, but that's not enough. As teams, we can build processes to trap errors, but that's not enough. As leaders, we can model behaviors, but that's not enough. The safety world has walked this path already. We can only preserve the right mindset with structure; without that, our culture and performance will erode, because we are human.

- 22. Selected References
  - Papers and articles:
    - "Why Your IT Project May Be Riskier Than You Think", Bent Flyvbjerg and Alexander Budzier, HBR, September 2011
    - "Rest breaks and accident risk", P. Tucker, S. Folkard, I. Macdonald, The Lancet, March 2003
    - "The impact of rest breaks upon accident risk, fatigue and performance: a review", P. Tucker, 2003
    - "Impact of Task Switching and Work Interruptions on Software Development Processes", A. Tregubov, N. Rodchenko, B. Boehm and J. A. Lane, 2017
    - "No Task Left Behind? Examining the Nature of Fragmented Work", Gloria Mark, Victor M. Gonzalez, Justin Harris, 2005
    - "SCARF: a brain-based model for collaborating with and influencing others", David Rock, 2009
    - "Neural correlates of maintaining one's political beliefs in the face of counter evidence", Jonas Kaplan, Scientific Reports, 2016
    - "Psychological Safety and Learning Behavior in Work Teams", Amy Edmondson, Administrative Science Quarterly, 1999
    - "A Typology of Organisational Cultures", Ron Westrum, Quality and Safety in Health Care, January 2005
    - "Safety Culture: Theory and Practice", Patrick Hudson, January 2001
    - "Ethical Dissonance, Justifications and Moral Behavior", Rachel Barkan, Current Opinion in Psychology, August 2015
  - Books:
    - "Human Error", James Reason, 1991
    - "Out of the Crisis", W. Edwards Deming, 1985
    - "Exploring Corporate Strategy", Johnson and Scholes, 1990+
    - "The Black Swan: The Impact of the Highly Improbable", Nassim Nicholas Taleb, 2007

Editor's Notes

- #2: Introduction: Guy Maslen. A journey into how and why things go wrong, why that's largely down to neuroscience and our brains, and what we can do about it. I use the journey that health and safety has been on over the last 20 years (which is all about risk) and apply it to agility (which is also all about risk). Empiricism and Scrum are the outcome of helping mere humans to do complex work; this is Scrum through the lens of human error. It links the strands of my career: offshore geophysics, software and geohazard monitoring.

- #3: 11 years ago I was asked by the CEO to look at IT, and went down a deep rabbit hole into catastrophic project failures. How can these things happen? How do you set out to spend $5m and end up spending $192.5m? Other big failures: Deutsche Post DHL wrote off €345m of costs and abandoned the ill-fated New Forwarding Environment. This example is from a paper on IT and "black swans": there is a long tail of disastrous overruns. The advice was to slice projects small, because lots of medium-sized risky projects made for a lot of risk. I was still curious about why.

- #4: A different kind of disaster. North Sea oil has had a place in my life: I did my BOSIET training just after the recommendations were brought in. Unlike IT projects, we have a public enquiry, and there's a chain of events. What has this got to do with IT? It's all about human error and why things go wrong. The HSE world has just been there first, and we can learn from it.

- #5: These are called "black swan events". After the fact it is obvious that there would be a big issue, but beforehand no one seems to see it coming, or the advice and leading indicators are ignored. They are named for Australian black swans: Europeans had only ever seen white swans. The HBR paper references them.

- #7: Routine violations at Piper Alpha: a breakdown of safety culture (tag-out lock-out).

- #8: Example: driving the car to the office with other things on my mind, and turning off to the gym instead. Checking wing mirrors when driving. Walking through a doorway and forgetting why we came in (working memory wiped). Stress lowers working memory: glucose and oxygen go to the pattern-matching part of the brain. Errors are 2x more likely in the last half hour of a 2-hour period (Tucker, Folkard, Macdonald). A "flow state" can take 25 minutes to recover (Gloria Mark).

- #9: Pomodoro technique (a 5-minute break every half hour); 20-minute breaks between 90-minute sessions.

- #10: We all suffer from cognitive biases: tricks our brain plays on us that reduce how objectively we think. There are many biases; just a few key ones are here. Dave Snowden's Cynefin presents the same challenge: how do we know whether we are in the complex or the complicated domain? Are our biases at play? Deepwater Horizon and the "bladder effect": the crew talked themselves into a disaster via anchoring.

- #11: Some of this again is about brain function. We process incoming information in parallel, through the pattern-matching centres (amygdala/hippocampus) and the cognitive centres. The pattern-matching centres are faster, so the emotional response (threat or reward) is triggered first, reducing working memory and limiting our openness and creativity. We are "strong but wrong"; the example Reason gives is Chernobyl. There are two underpinning phenomena related to our status.

- #12: Raise team awareness of these types of error; understand that we have a hypothesis to test; discuss leading indicators. Work in groups that are aware of biases, and use structure to recognize when we have an emotional attachment to something.

- #13: So, applying these ideas to a waterfall development model, what can we see? There's scope for human error at every handoff.

- #14: The bow tie is a risk model from the aviation industry. Look at the contributing events or triggers that form a hazard, then at its consequences. Then explore the barriers we can put into place. So, how does Scrum help us?

- #16: We're into culture- and mindset-driven errors. Deliberate violations: we know what we *should* do, and we do a different thing. Ethical dissonance (Rachel Barkan, 2015): we do the wrong thing and justify it to ourselves or others. So what drives these deliberate violations of process?

- #20: GNS Science conference: 300 scientists and engineers, 10 minutes, all taking notes. What coaching really is, and how effective it can be. Asked a question.

- #22: Car analogy: seatbelt.