iFL: An Interactive Environment for Understanding Feature Implementations

- 1. iFL: An Interactive Environment for Understanding Feature Implementations
  - Shinpei Hayashi, Katsuyuki Sekine, and Motoshi Saeki
  - Department of Computer Science, Tokyo Institute of Technology, Japan
  - ICSM 2010 ERA, 14 Sep. 2010

- 2. Abstract
  - We have developed iFL, an environment for program understanding
  - It interactively supports the understanding of feature implementations using a feature location technique
  - It can reduce understanding costs

- 3. Background
  - Program understanding is costly
    - Extending or fixing an existing feature requires understanding the implementation of the target feature
    - It dominates maintenance costs [Vestdam 04]
  - Our focus: feature/concept location (FL)
    - Locating and extracting the code fragments that implement a given feature or concept
  - [Vestdam 04]: "Maintaining Program Understanding – Issues, Tools, and Future Directions", Nordic Journal of Computing, 2004.

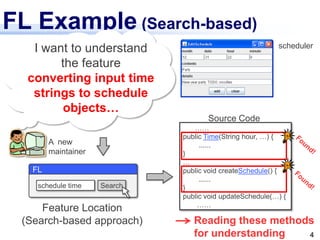

- 4. FL Example (Search-based)
  - A new maintainer: "I want to understand the feature converting input time strings to schedule objects…"
  - The maintainer searches the source code of the scheduler with the query "schedule time"
  - Feature location (search-based approach) returns methods such as `public Time(String hour, …) { … }`, `public void createSchedule() { … }`, and `public void updateSchedule(…) { … }`
  - The maintainer reads these methods to understand the feature

- 5. Problem 1: How to Find Appropriate Queries?
  - Constructing appropriate queries requires rich knowledge of the implementation
    - Times: time, date, hour/minute/second
    - Images: image, picture, figure
  - In practice, developers try several keywords for FL through trial and error

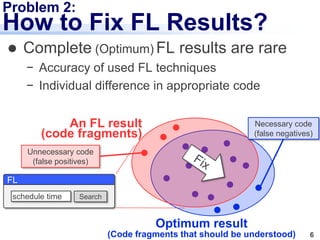

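- 6. Problem 2: How to Fix FL Results?
  - Complete (optimum) FL results are rare, because of:
    - The accuracy of the FL technique used
    - Individual differences in which code is appropriate
  - Figure: an FL result (code fragments) for the query "schedule time" overlaps only partly with the optimum result (the code fragments that should be understood). Fragments only in the FL result are unnecessary code (false positives); fragments only in the optimum result are necessary code that was missed (false negatives).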

- 7. Our Solution: Feedbacks
  - We added two feedback processes to the FL loop:
    - Query input (e.g. "schedule")
    - Feature location (calculating scores), e.g. 1st: `ScheduleManager.addSchedule()`, 2nd: `EditSchedule.inputCheck()`, …
    - Selection and understanding of code fragments
    - Feedback 1: updating queries, then back to feature location
    - Feedback 2: relevance feedback (addition of hints), then back to feature location
  - The loop finishes when the user judges that he/she has read all the necessary code fragments

- 8. Query Expansion
  - Wide query for the initial FL
    - By expanding the query terms to their synonyms
  - Narrow query for subsequent FLs
    - By using concrete identifiers found in the source code
  - Example:
    - 1st FL: the query `schedule* date*` is expanded using a thesaurus (terms shown: schedule, agenda, plan; list, time, date)
    - A code fragment in an FL result: `public void createSchedule() { … String hour = …; Time time = new Time(hour, …); … }`
    - 2nd FL: the query is narrowed to "schedule time", using identifiers from that fragment
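The widening step on this slide can be illustrated with a minimal Java sketch. It is not iFL's implementation: the class `QueryExpansion`, the method `expand`, and the hard-coded thesaurus are hypothetical stand-ins for a WordNet lookup, and the synonym groupings are an assumption loosely based on the words shown on the slide.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper for illustration only; not part of iFL.
class QueryExpansion {
    // Toy thesaurus standing in for WordNet (assumed synonym groupings).
    static final Map<String, List<String>> THESAURUS = Map.of(
            "schedule", List.of("agenda", "plan"),
            "time", List.of("date"));

    // Returns each query term followed by its synonyms, without duplicates.
    static Set<String> expand(List<String> query) {
        Set<String> expanded = new LinkedHashSet<>();
        for (String term : query) {
            String key = term.toLowerCase();
            expanded.add(key);
            expanded.addAll(THESAURUS.getOrDefault(key, List.of()));
        }
        return expanded;
    }

    public static void main(String[] args) {
        // Widen the two-word query before the first FL run.
        System.out.println(expand(List.of("schedule", "time")));
    }
}
```

Narrowing for later FL runs would go the other way: the user replaces broad terms with identifiers actually seen in the fragments they have read.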

- 9. Relevance Feedback
  - Improving FL results using user feedback
    - The user adds a hint stating whether the selected code fragment is relevant or irrelevant to the feature
    - Feedback is propagated to other fragments along dependencies
  - Figure: code fragments with their scores, connected by dependencies. After a fragment in the i-th FL result is marked relevant, the scores in the (i+1)-th FL result change through propagation by dependencies.

- 10. Supporting Tool: iFL
  - Implemented as an Eclipse plug-in
    - For static analysis: Eclipse JDT (provides syntactic information)
    - For dynamic analysis: Reticella [Noda 09] (provides execution traces and dependencies)
    - For a thesaurus: WordNet (provides synonym information)
  - Architecture: JDT, Reticella, and WordNet feed iFL-core, which runs inside Eclipse

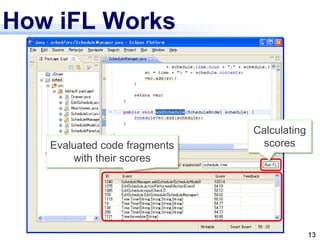

- 13. How iFL Works: after the scores are calculated, the evaluated code fragments are listed with their scores

- 14. How iFL Works: when the user selects a code fragment, the associated method is shown in the code editor

- 15. How iFL Works: after the user adds hints, the scores are calculated again

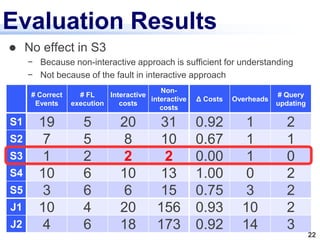

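- 18. Preliminary Evaluation
  - A user familiar with Java and iFL used it to understand feature implementations
  - Subjects:
    - S1 to S5: 5 change requirements and related features from Sched (home-grown, small-sized)
    - J1 and J2: 2 change requirements and related features from JDraw (open-source, medium-sized)

  | Case | # Correct events | # FL executions | Interactive costs | Non-interactive costs | Δ Costs | Overheads | # Query updates |
  |------|------------------|-----------------|-------------------|-----------------------|---------|-----------|-----------------|
  | S1   | 19               | 5               | 20                | 31                    | 0.92    | 1         | 2               |
  | S2   | 7                | 5               | 8                 | 10                    | 0.67    | 1         | 1               |
  | S3   | 1                | 2               | 2                 | 2                     | 0.00    | 1         | 0               |
  | S4   | 10               | 6               | 10                | 13                    | 1.00    | 0         | 2               |
  | S5   | 3                | 6               | 6                 | 15                    | 0.75    | 3         | 2               |
  | J1   | 10               | 4               | 20                | 156                   | 0.93    | 10        | 2               |
  | J2   | 4                | 6               | 18                | 173                   | 0.92    | 14        | 3               |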

- 19. Evaluation Criteria (annotations on the results table from slide 18)
  - Overheads: the number of code fragments that were selected but unnecessary
  - Δ Costs: the reduction ratio of overheads between the interactive and non-interactive approaches
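The slides do not spell out the formula behind Δ Costs. The sketch below is a reconstruction that happens to reproduce every row of the results table: overhead is taken as cost minus the number of correct events, and Δ Costs as one minus the ratio of interactive to non-interactive overhead. The class and method names are hypothetical, and returning 0 when the non-interactive overhead is zero is an added assumption.

```java
// Hypothetical reconstruction of the evaluation metrics; not taken from iFL itself.
class CostReduction {
    // Overhead: fragments read beyond the correct (necessary) ones.
    static int overhead(int cost, int correctEvents) {
        return cost - correctEvents;
    }

    // Reduction ratio of overheads, interactive versus non-interactive.
    static double deltaCosts(int correctEvents, int interactiveCost, int nonInteractiveCost) {
        int baseline = overhead(nonInteractiveCost, correctEvents);
        if (baseline == 0) {
            return 0.0; // assumption: nothing to reduce when the baseline has no overhead
        }
        return 1.0 - (double) overhead(interactiveCost, correctEvents) / baseline;
    }

    public static void main(String[] args) {
        // S1: 19 correct events, interactive cost 20, non-interactive cost 31.
        System.out.printf("S1: %.2f%n", deltaCosts(19, 20, 31));
        // J1: 10 correct events, interactive cost 20, non-interactive cost 156.
        System.out.printf("J1: %.2f%n", deltaCosts(10, 20, 156));
    }
}
```

For S1 this gives 1 − 1/12 ≈ 0.92 and for J1 1 − 10/146 ≈ 0.93, matching the table.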

- 20. Evaluation Results (results table as on slide 18)
  - Costs were reduced in 6 out of 7 cases
  - In particular, costs were reduced by 90% or more in 4 cases (S1, S4, J1, J2)

- 21. Evaluation Results (results table as on slide 18)
  - Small overheads
    - On average, Sched: 1.2, JDraw: 12

- 22. Evaluation Results (results table as on slide 18)
  - No effect in S3
    - Because the non-interactive approach was already sufficient for understanding
    - Not because of a fault in the interactive approach

- 23. Conclusion
  - Summary
    - We developed iFL, which interactively supports the understanding of feature implementations using FL
    - iFL reduced understanding costs in 6 out of 7 cases
  - Future Work
    - Evaluation++: on larger-scale projects
    - Feedback++: for more efficient relevance feedback
    - Observing code browsing activities in the IDE

- 25. The FL Approach
  - Based on search-based FL
  - Uses static and dynamic analyses
  - Pipeline:
    - Static analysis: source code and a query (e.g. "schedule") yield methods with their scores
    - Dynamic analysis: source code and a test case yield an execution trace and dependencies
    - Evaluating events: combines both, together with any hints, into events with their scores (the FL result)

- 26. Static Analysis: evaluating methods
  - Matching queries to identifiers
    - 20 for method names
    - 1 for local variables
  - Example: the query "schedule time" is expanded by the thesaurus to schedule, agenda, time, date
  - Target method: `public void createSchedule() { … String hour = …; Time time = new Time(hour, …); … }`
  - The basic score (BS) of `createSchedule` is 21
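As a rough illustration of the weights on this slide, the following Java sketch scores a method from its name and local variable names. Only the weights 20 and 1 come from the slide; the class `BasicScore`, its methods, and the matching rule (case-insensitive substring match, one contribution per matching identifier) are assumptions, since the slides do not say how identifiers are matched.

```java
import java.util.List;
import java.util.Set;

// Hypothetical sketch of basic-score computation; the matching rule is assumed.
class BasicScore {
    static final int METHOD_NAME_WEIGHT = 20;   // from the slide
    static final int LOCAL_VARIABLE_WEIGHT = 1; // from the slide

    // Assumed rule: an identifier matches if it contains any query term, ignoring case.
    // Terms are expected in lower case.
    static boolean matches(String identifier, Set<String> terms) {
        String lower = identifier.toLowerCase();
        for (String term : terms) {
            if (lower.contains(term)) {
                return true;
            }
        }
        return false;
    }

    static int basicScore(String methodName, List<String> localVariables, Set<String> terms) {
        int score = matches(methodName, terms) ? METHOD_NAME_WEIGHT : 0;
        for (String variable : localVariables) {
            if (matches(variable, terms)) {
                score += LOCAL_VARIABLE_WEIGHT;
            }
        }
        return score;
    }

    public static void main(String[] args) {
        Set<String> expandedQuery = Set.of("schedule", "agenda", "time", "date");
        // createSchedule declares the locals hour and time in the slide's example.
        System.out.println(basicScore("createSchedule", List.of("hour", "time"), expandedQuery));
    }
}
```

Under these assumptions the method name contributes 20 and the local variable `time` contributes 1, giving the slide's basic score of 21.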

- 27. Dynamic Analysis
  - Extracting an execution trace and its dependencies by executing the source code with a test case
  - Execution trace:
    - e1: `loadSchedule()`
    - e2: `initWindow()`
    - e3: `createSchedule()`
    - e4: `Time()`
    - e5: `ScheduleModel()`
    - e6: `updateList()`
  - Figure: dependencies (method invocation relations) among events e1 to e6

- 28. Evaluating Methods
  - Highly scored events are those that:
    - Execute methods with high basic scores
    - Are close to highly scored events

  | Event | Method             | BS | Score |
  |-------|--------------------|----|-------|
  | e1    | `loadSchedule()`   | 20 | 52.2  |
  | e2    | `initWindow()`     | 0  | 18.7  |
  | e3    | `createSchedule()` | 21 | 38.7  |
  | e4    | `Time()`           | 20 | 66.0  |
  | e5    | `ScheduleModel()`  | 31 | 42.6  |
  | e6    | `updateList()`     | 2  | 19.0  |

  - Figure: the dependency graph of e1 to e6, each node labeled with its score and basic score

- 29. Selection and Understanding
  - Selecting highly ranked events
  - Extracting the associated code fragment (method body) and reading it to understand the feature
  - Extracted code fragment: `public Time(String hour, …) { … String hour = …; String minute = …; … }`

  | Event | Method             | Score | Rank |
  |-------|--------------------|-------|------|
  | e1    | `loadSchedule()`   | 52.2  | 2    |
  | e2    | `initWindow()`     | 18.7  | 6    |
  | e3    | `createSchedule()` | 38.7  | 4    |
  | e4    | `Time()`           | 66.0  | 1    |
  | e5    | `ScheduleModel()`  | 42.6  | 3    |
  | e6    | `updateList()`     | 19.0  | 5    |
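The ranking step itself is a sort by descending score. A minimal Java sketch, using the event scores from this slide, is given below; the class `EventRanking` and its `rank` method are hypothetical names, and the handling of ties (distinct consecutive ranks in an unspecified order) is an assumption the slides do not address.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: ranks events by descending score (rank 1 = highest score).
class EventRanking {
    static Map<String, Integer> rank(Map<String, Double> scores) {
        List<Map.Entry<String, Double>> entries = new ArrayList<>(scores.entrySet());
        entries.sort(Map.Entry.<String, Double>comparingByValue().reversed());
        Map<String, Integer> ranks = new LinkedHashMap<>();
        for (int i = 0; i < entries.size(); i++) {
            ranks.put(entries.get(i).getKey(), i + 1);
        }
        return ranks;
    }

    public static void main(String[] args) {
        Map<String, Double> scores = Map.of(
                "e1", 52.2, "e2", 18.7, "e3", 38.7,
                "e4", 66.0, "e5", 42.6, "e6", 19.0);
        // Events are printed in rank order, highest score first.
        System.out.println(rank(scores));
    }
}
```

The user would then read the method bodies of the top-ranked events first, here starting with e4, `Time()`.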

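- 30. Relevance Feedback
  - The user reads the selected code fragments and adds hints
    - Relevant: maximum basic score
    - Irrelevant to the feature: score becomes 0
  - Example: a hint is added to e4; values that change are shown as before → after

  | Event | Method             | Hint     | Basic score | Score       | Rank  |
  |-------|--------------------|----------|-------------|-------------|-------|
  | e1    | `loadSchedule()`   |          | 20          | 52.2 → 77.6 | 2     |
  | e2    | `initWindow()`     |          | 0           | 18.7        | 6     |
  | e3    | `createSchedule()` |          | 21          | 38.7 → 96.4 | 4 → 1 |
  | e4    | `Time()`           | relevant | 20 → 46.5   | 66.0 → 70.2 | 1 → 3 |
  | e5    | `ScheduleModel()`  |          | 31          | 42.6 → 51.0 | 3 → 4 |
  | e6    | `updateList()`     |          | 2           | 19.0        | 5     |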
