Intro KaKao ADT (Almighty Data Transmitter)

- 2. About Speakers
  - 성동찬 (Seong Dong-chan)
    - KTH, TMON, Kakao, (currently) KakaoBank DBA
    - Note: jumped to the bank midway through the ADT project
    - A.k.a. "the traitor" (just kidding, haha)
  - 한수호 (Han Su-ho)
    - 2007: co-founded 아이씨유 (ICU)
    - 2012: acquired by Kakao (company renamed KakaoLab)
    - Still doing well at Kakao

- 4. History
  - Mid-2015: the need to reconstruct MySQL shards emerged for some services

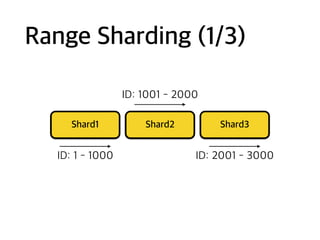

- 5. Range Sharding (1/3)
  - Shard1: ID 1 - 1000
  - Shard2: ID 1001 - 2000
  - Shard3: ID 2001 - 3000

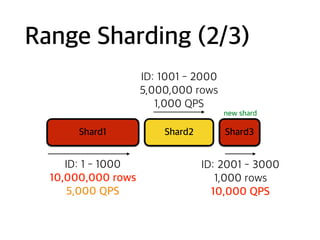

- 6. Range Sharding (2/3)
  - Shard1: ID 1 - 1000, 10,000,000 rows, 5,000 QPS
  - Shard2: ID 1001 - 2000, 5,000,000 rows, 1,000 QPS
  - Shard3 (new shard): ID 2001 - 3000, 1,000 rows, 10,000 QPS

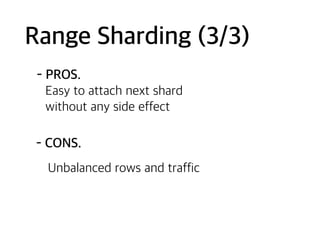

- 7. Range Sharding (3/3)
  - PROS: Easy to attach the next shard without any side effect
  - CONS: Unbalanced rows and traffic
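
The range rule on slides 5 to 7 can be sketched in a few lines of Python. This is only an illustration using the ID ranges from the slides; `UPPER_BOUNDS`, `shard_for_range` and `add_shard` are hypothetical names, not part of ADT.

```python
from bisect import bisect_left

# Inclusive upper bound of each shard's ID range, taken from the slides.
UPPER_BOUNDS = [1000, 2000, 3000]
SHARDS = ["Shard1", "Shard2", "Shard3"]

def shard_for_range(row_id: int) -> str:
    """Return the shard whose ID range contains row_id."""
    idx = bisect_left(UPPER_BOUNDS, row_id)
    if row_id < 1 or idx == len(SHARDS):
        raise KeyError(f"no shard covers ID {row_id}")
    return SHARDS[idx]

def add_shard(name: str, upper_bound: int) -> None:
    """Attach the next shard; rows already placed keep their shard."""
    UPPER_BOUNDS.append(upper_bound)
    SHARDS.append(name)
```

Attaching a shard only appends a new range, which is why existing rows never move; nothing in the rule, however, balances row counts or traffic between ranges.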

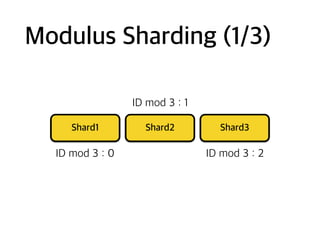

- 8. Modulus Sharding (1/3)
  - Shard1: ID mod 3 : 0
  - Shard2: ID mod 3 : 1
  - Shard3: ID mod 3 : 2

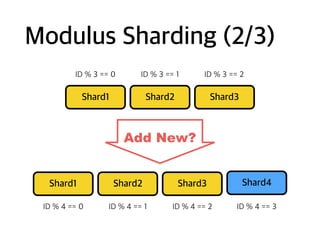

- 9. Modulus Sharding (2/3)
  - Before: Shard1 (ID % 3 == 0), Shard2 (ID % 3 == 1), Shard3 (ID % 3 == 2)
  - Add New? Shard1 (ID % 4 == 0), Shard2 (ID % 4 == 1), Shard3 (ID % 4 == 2), Shard4 (ID % 4 == 3)

- 10. Modulus Sharding (3/3)
  - PROS: Better resource balancing
  - CONS: Difficult to attach a new shard
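
To see why attaching a shard is hard under the modulus rule, the sketch below counts how many IDs land on a different shard when `ID % 3` becomes `ID % 4`. The function name and the ID range are assumptions chosen for illustration.

```python
def moved_fraction(old_n: int, new_n: int, ids: range) -> float:
    """Fraction of IDs whose shard changes when ID % old_n becomes ID % new_n."""
    moved = sum(1 for i in ids if i % old_n != i % new_n)
    return moved / len(ids)

print(moved_fraction(3, 4, range(1, 12001)))  # 0.75
```

Three out of every four rows would have to be copied to another shard, which is the kind of one-time migration the rest of the deck is about.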

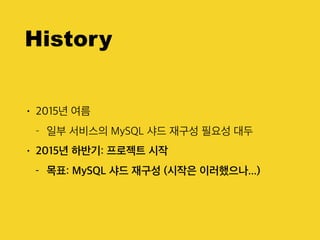

- 11. History
  - Summer 2015: the need to reconstruct MySQL shards emerged for some services
  - Second half of 2015: project started
    - Goal: MySQL shard reconstruction (that is how it started, but...)

- 12. Chan: "Couldn't we do a lot more with the MySQL binary log than just shard reconstruction?"

- 13. History
  - Summer 2015: the need to reconstruct MySQL shards emerged for some services
  - Second half of 2015: project started
    - Goal: MySQL shard reconstruction
    - Goal: various migrations (ETL+CDC ?)

- 14. A certain MySQL server of a certain service: "Can you really handle a binlog that grows by hundreds of MB/min? lol"

- 15. History
  - Summer 2015: the need to reconstruct MySQL shards emerged for some services
  - Second half of 2015: project started
    - Goal: MySQL shard reconstruction
    - Various migrations
    - Fast processing speed is required

- 16. Goals & Concepts

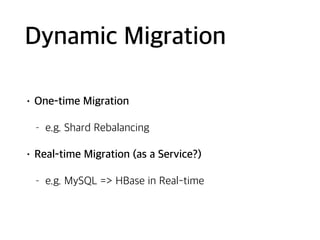

- 17. Goals
  - Dynamic Migration
  - Performance
  - Support only MySQL in the first release

- 18. Dynamic Migration
  - One-time Migration
    - e.g. Shard Rebalancing
  - Real-time Migration (as a Service?)
    - e.g. MySQL => HBase in Real-time

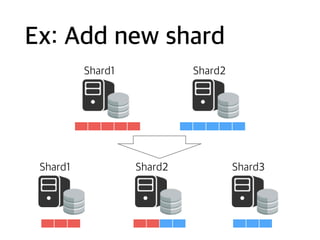

- 19. Ex: Add new shard: Shard1, Shard2 => Shard1, Shard2, Shard3

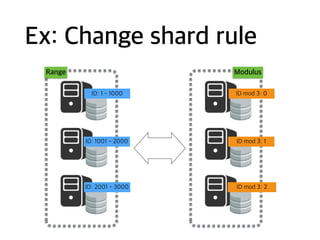

- 20. Ex: Change shard rule
  - Range: ID 1 - 1000, ID 1001 - 2000, ID 2001 - 3000
  - Modulus: ID mod 3: 0, ID mod 3: 1, ID mod 3: 2

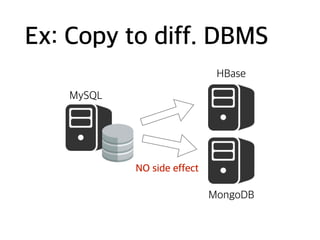

- 21. Ex: Copy to diff. DBMS: MySQL => HBase, MongoDB (NO side effect)

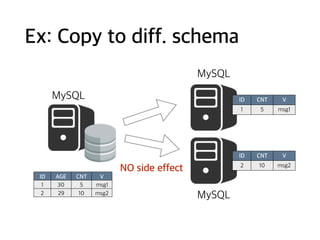

- 22. Ex: Copy to diff. schema (NO side effect)
  - Source MySQL (ID, AGE, CNT, V): (1, 30, 5, msg1), (2, 29, 10, msg2)
  - Destination MySQL (ID, CNT, V): (1, 5, msg1)
  - Destination MySQL (ID, CNT, V): (2, 10, msg2)
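
The copy on slide 22 amounts to a column projection plus a routing rule. Below is a small Python sketch using the slide's rows. The slide does not state the routing rule, so `ID % 2` is an assumption, and `route` and `copy_rows` are hypothetical names.

```python
# Source rows from the slide (columns ID, AGE, CNT, V).
source_rows = [
    {"ID": 1, "AGE": 30, "CNT": 5, "V": "msg1"},
    {"ID": 2, "AGE": 29, "CNT": 10, "V": "msg2"},
]

DEST_COLUMNS = ("ID", "CNT", "V")  # AGE is not copied to the destinations

def route(row: dict, shard_count: int = 2) -> int:
    """Assumed routing rule: ID % shard_count."""
    return row["ID"] % shard_count

def copy_rows(rows, shard_count: int = 2) -> dict:
    """Project each row onto DEST_COLUMNS and place it on its destination."""
    dests = {i: [] for i in range(shard_count)}
    for row in rows:
        projected = {col: row[col] for col in DEST_COLUMNS}
        dests[route(row, shard_count)].append(projected)
    return dests

dests = copy_rows(source_rows)
```

The source rows are only read, never modified, which matches the "NO side effect" note on the slide.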

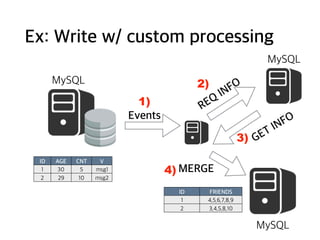

- 23. Ex: Write w/ custom processing
  - Steps: 1) Events 2) REQ INFO 3) GET INFO 4) MERGE
  - MySQL (ID, AGE, CNT, V): (1, 30, 5, msg1), (2, 29, 10, msg2)
  - MySQL (ID, FRIENDS): (1: 4,5,6,7,8,9), (2: 3,4,5,8,10)

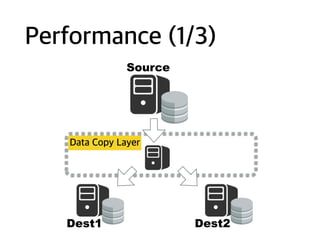

- 24. Performance (1/3): Source => Data Copy Layer => Dest1, Dest2

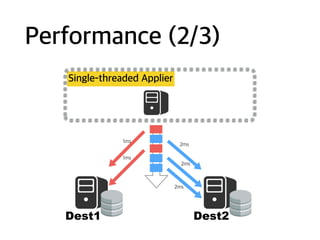

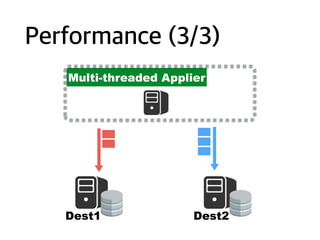

- 25. Performance (2/3) Single-threaded Applier Dest1 Dest2 1ms 1ms 2ms 2ms 2ms

- 27. Parallel Processing (1/3)
  - Different Row ID: Parallel Processing
  - Same Row ID: Sequential Processing

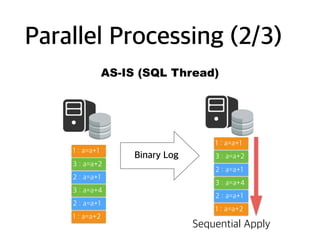

- 28. Parallel Processing (2/3): AS-IS (SQL Thread)
  - Binary Log: 1 : a=a+1, 3 : a=a+2, 2 : a=a+1, 3 : a=a+4, 2 : a=a+1, 1 : a=a+2
  - Sequential Apply: 1 : a=a+1, 3 : a=a+2, 2 : a=a+1, 3 : a=a+4, 2 : a=a+1, 1 : a=a+2

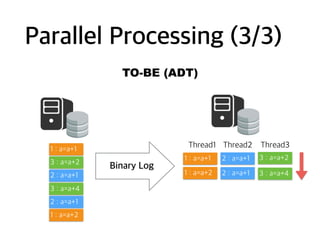

- 29. Parallel Processing (3/3): TO-BE (ADT)
  - Binary Log: 1 : a=a+1, 3 : a=a+2, 2 : a=a+1, 3 : a=a+4, 2 : a=a+1, 1 : a=a+2
  - Applied by Thread1, Thread2, Thread3, one row ID per thread:
    - Row 1: a=a+1, a=a+2
    - Row 2: a=a+1, a=a+1
    - Row 3: a=a+2, a=a+4
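
The binary log from slides 28 and 29 can be used to check the idea: events for the same row ID stay in log order on one worker, while different row IDs go to different workers. The sketch below is illustrative only; assigning workers by `row_id % thread_count` is an assumption, and `partition_by_row` and `apply` are hypothetical names.

```python
from collections import defaultdict

# (row_id, increment) pairs in binary-log order, as on the slides.
binlog = [(1, 1), (3, 2), (2, 1), (3, 4), (2, 1), (1, 2)]

def partition_by_row(events, thread_count):
    """Group events per worker; order inside a worker follows the log."""
    lanes = defaultdict(list)
    for row_id, inc in events:
        lanes[row_id % thread_count].append((row_id, inc))
    return lanes

def apply(events):
    """Apply 'a = a + inc' per row, starting from a = 0."""
    totals = defaultdict(int)
    for row_id, inc in events:
        totals[row_id] += inc
    return dict(totals)

lanes = partition_by_row(binlog, 3)
sequential = apply(binlog)
parallel = {}
for lane in lanes.values():      # each lane could run on its own thread
    parallel.update(apply(lane))
```

Because no row ID appears in two lanes, merging the per-lane results gives the same values as the sequential apply.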

- 30. ADT Features

- 31. Features
  - Table Crawler
    - Repeats a SELECT query: SELECT * FROM ? [ WHERE id > ? ] LIMIT ?;
  - Binlog Receiver
    - MySQL replication protocol
  - Custom Data Handler
    - Processes the collected data, e.g. Shard reconstruction handler
    - Executed concurrently by multiple threads

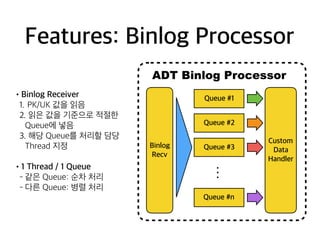

- 32. Features: Binlog Processor
  - ADT Binlog Processor: Binlog Recv => Queue #1, Queue #2, Queue #3, ... Queue #n => Custom Data Handler
  - Binlog Receiver
    1. Reads the PK/UK value
    2. Puts the event into the appropriate queue based on that value
    3. Assigns the thread responsible for that queue
  - 1 Thread / 1 Queue
    - Same queue: sequential processing
    - Different queues: parallel processing
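
A minimal sketch of the one-thread-per-queue routing on slide 32, written in Python for brevity (slide 69 says ADT itself is written in Java). It routes on the PK only; the UK handling from the slide is left out, and `run_processor` and the event layout are assumptions.

```python
import queue
import threading

def run_processor(events, queue_count, handler):
    """Route each event to queue hash(pk) % queue_count; one thread per queue."""
    queues = [queue.Queue() for _ in range(queue_count)]

    def worker(q):
        while True:
            event = q.get()
            if event is None:          # sentinel: no more events
                break
            handler(event)             # same queue => strictly in order

    threads = [threading.Thread(target=worker, args=(q,)) for q in queues]
    for t in threads:
        t.start()
    for event in events:
        queues[hash(event["pk"]) % queue_count].put(event)
    for q in queues:
        q.put(None)
    for t in threads:
        t.join()
```

Events with the same PK always land in the same queue, so their relative order is preserved even though the queues drain in parallel.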

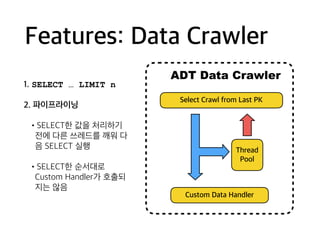

- 33. Features: Data Crawler
  - ADT Data Crawler: Select (crawl from last PK) => Thread Pool => Custom Data Handler
  1. SELECT ... LIMIT n
  2. Pipelining
    - Before the selected rows are processed, another thread is woken up to run the next SELECT
    - The Custom Handler is not necessarily called in the order the rows were selected
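
The "crawl from last PK" loop on slide 33 can be sketched as follows. SQLite from the Python standard library stands in for MySQL, the `ORDER BY id` clause is an addition that the slide's query does not show, pipelining is omitted, and `crawl` is a hypothetical name.

```python
import sqlite3

def crawl(conn, table, batch_size):
    """Yield batches of rows, resuming each SELECT after the last seen PK."""
    last_id = None
    while True:
        if last_id is None:
            sql = f"SELECT * FROM {table} ORDER BY id LIMIT ?"
            args = (batch_size,)
        else:
            sql = f"SELECT * FROM {table} WHERE id > ? ORDER BY id LIMIT ?"
            args = (last_id, batch_size)
        rows = conn.execute(sql, args).fetchall()
        if not rows:
            return
        yield rows
        last_id = rows[-1][0]   # assumes id is the first column
```

The table name is formatted into the statement because identifiers cannot be bound as parameters; only trusted table names should be passed in.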

- 34. ADT Requirements

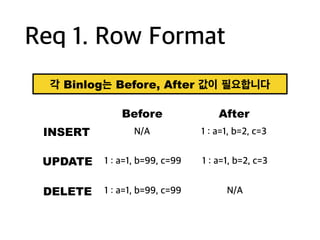

- 35. Req 1. Row Format: every binlog event needs Before and After values
  - INSERT: Before N/A, After 1 : a=1, b=2, c=3
  - UPDATE: Before 1 : a=1, b=2, c=3, After 1 : a=1, b=99, c=99
  - DELETE: Before 1 : a=1, b=99, c=99, After N/A

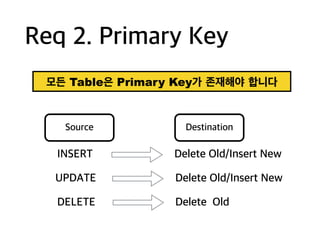

- 36. Req 2. Primary Key: every table must have a primary key
  - Source INSERT => Destination: Delete Old/Insert New
  - Source UPDATE => Destination: Delete Old/Insert New
  - Source DELETE => Destination: Delete Old

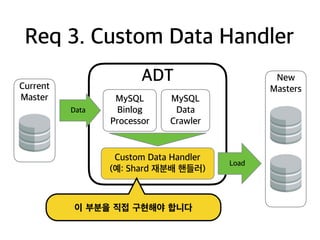

- 37. ADT Req 3. Custom Data Handler
  - Current Master (MySQL) => MySQL Binlog Processor and MySQL Data Crawler => Custom Data Handler (e.g. shard redistribution handler) => Load Data => New Masters
  - You have to implement the Custom Data Handler yourself

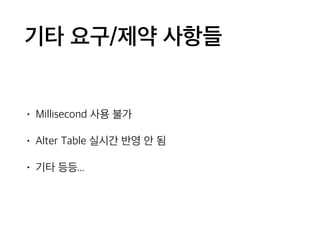

- 38. Other requirements/constraints
  - Milliseconds cannot be used
  - ALTER TABLE is not reflected in real time
  - And so on...

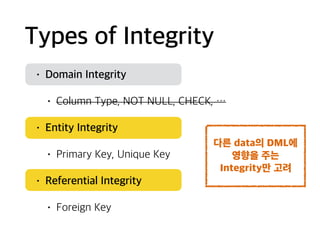

- 40. Types of Integrity
  - Domain Integrity
    - Column Type, NOT NULL, CHECK, ...
  - Entity Integrity
    - Primary Key, Unique Key
  - Referential Integrity
    - Foreign Key
  - Only integrity rules that affect the DML of other data are considered

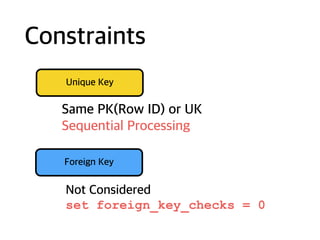

- 41. Constraints
  - Unique Key: same PK (Row ID) or UK => Sequential Processing
  - Foreign Key: Not Considered (set foreign_key_checks = 0)

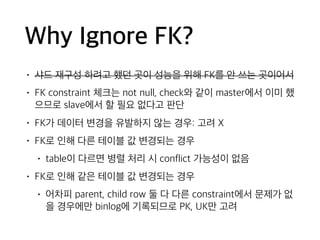

- 42. Why Ignore FK?
  - The services whose shards we set out to reconstruct do not use FKs, for performance reasons
  - FK constraint checks, like NOT NULL and CHECK, have already been done on the master, so we judged them unnecessary on the slave
  - FK does not cause any data change: not considered
  - FK changes values in another table
    - Different tables cannot conflict during parallel processing
  - FK changes values in the same table
    - Parent and child rows are written to the binlog only if both pass the other constraints anyway, so only PK and UK are considered

- 43. Error Handling

- 44. Chan & Gordon: "Operations never go exactly the way you want, so instead of trying to account for every situation, let's keep it simple."

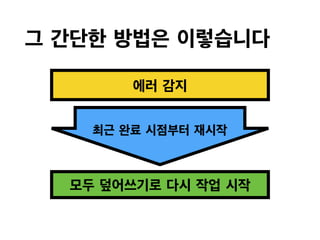

- 45. The simple method is this: error detected => restart from the most recently completed point => redo the work, overwriting everything

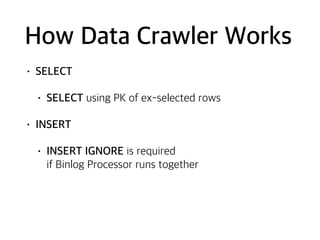

- 47. How Data Crawler Works
  - SELECT
    - SELECT using the PK of the previously selected rows
  - INSERT
    - INSERT IGNORE is required if the Binlog Processor runs at the same time
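
Why the crawler needs INSERT IGNORE when the Binlog Processor runs alongside it can be shown with a tiny example. SQLite's `INSERT OR IGNORE` stands in for MySQL's `INSERT IGNORE` here, and the table `t(id, v)` is an assumption.

```python
import sqlite3

dest = sqlite3.connect(":memory:")
dest.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, v TEXT)")

# The binlog processor has already written the newest version of row 1.
dest.execute("REPLACE INTO t VALUES (1, 'new')")

# The crawler arrives later with an older snapshot of rows 1 and 2.
dest.executemany("INSERT OR IGNORE INTO t VALUES (?, ?)",
                 [(1, "old"), (2, "old")])

rows = dest.execute("SELECT id, v FROM t ORDER BY id").fetchall()
```

Row 1 keeps the value written from the binlog, and only the row that was still missing is inserted from the snapshot.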

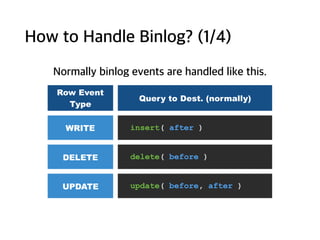

- 48. How to Handle Binlog? (1/4): Row Event Type => Query to Dest. (normally)
  - WRITE: insert( after )
  - DELETE: delete( before )
  - UPDATE: update( before, after )
  - Normally binlog events are handled like this.

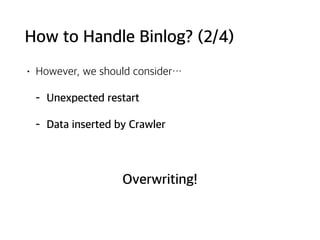

- 49. How to Handle Binlog? (2/4)
  - However, we should consider...
    - Unexpected restart
    - Data inserted by Crawler
  - => Overwriting!

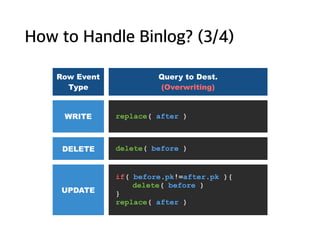

- 50. How to Handle Binlog? (3/4): Row Event Type => Query to Dest. (Overwriting)
  - WRITE: replace( after )
  - DELETE: delete( before )
  - UPDATE: if( before.pk!=after.pk ){ delete( before ) } replace( after )

- 51. How to Handle Binlog? (4/4)
  - Normal Query: UPDATE ... SET @1=after.1, @2=after.2,... WHERE pk_col=before.pk
  - Transformation 1: Unrolling
    - DELETE FROM ... WHERE pk_col=before.pk; INSERT INTO ... VALUES(after.1, after.2,...);
  - Transformation 2: Overwriting
    - DELETE FROM ... WHERE pk_col=before.pk; REPLACE INTO ... VALUES(after.1, after.2,...);
  - Transformation 3: Reducing
    - Delete [before] only if PK is changed
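
The overwriting rules from slides 50 and 51 can be sketched as a small apply function. SQLite stands in for MySQL (it also accepts `REPLACE INTO`); the table `t(id, v)`, the event dictionaries and `apply_event` are assumptions made for illustration, not ADT's actual interface.

```python
import sqlite3

def apply_event(conn, event):
    """Apply one row event to table t(id, v) using the overwriting rules."""
    kind, before, after = event["type"], event.get("before"), event.get("after")
    if kind == "WRITE":
        conn.execute("REPLACE INTO t VALUES (?, ?)", after)
    elif kind == "DELETE":
        conn.execute("DELETE FROM t WHERE id = ?", (before[0],))
    elif kind == "UPDATE":
        if before[0] != after[0]:      # PK changed: remove the old row first
            conn.execute("DELETE FROM t WHERE id = ?", (before[0],))
        conn.execute("REPLACE INTO t VALUES (?, ?)", after)
    else:
        raise ValueError(f"unknown event type: {kind}")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, v TEXT)")
events = [
    {"type": "WRITE", "after": (1, "a")},
    {"type": "WRITE", "after": (2, "b")},
    {"type": "UPDATE", "before": (1, "a"), "after": (3, "c")},
    {"type": "DELETE", "before": (2, "b")},
]
for _ in range(2):                     # second pass simulates a replay after a restart
    for e in events:
        apply_event(conn, e)
rows = conn.execute("SELECT id, v FROM t ORDER BY id").fetchall()
```

Replaying the whole list a second time leaves the table unchanged, which is the property that makes restarting from an earlier point safe.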

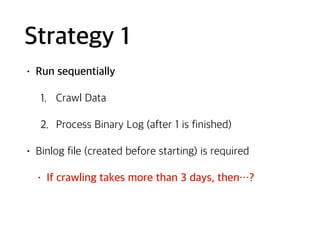

- 52. Strategy 1
  - Run sequentially
    1. Crawl Data
    2. Process Binary Log (after 1 is finished)
  - Binlog file (created before starting) is required
  - If crawling takes more than 3 days, then...?

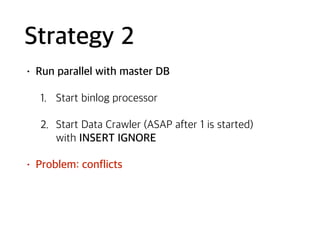

- 53. Strategy 2
  - Run in parallel with the master DB
    1. Start Binlog Processor
    2. Start Data Crawler (ASAP after 1 is started) with INSERT IGNORE
  - Problem: conflicts

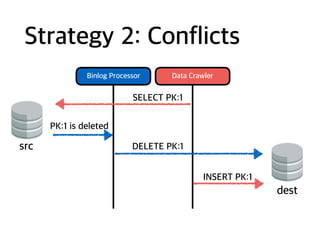

- 54. Strategy 2: Conflicts (src => dest)
  - Data Crawler: SELECT PK:1 (from src)
  - src: PK:1 is deleted
  - Binlog Processor: DELETE PK:1 (on dest)
  - Data Crawler: INSERT PK:1 (into dest)
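
The conflict on slide 54 can be replayed with two dictionaries standing in for src and dest. The interleaving below is one assumed ordering of the four steps that produces the problem.

```python
src = {1: "row1"}
dest = {}

snapshot = dict(src)          # Data Crawler: SELECT PK:1 from src
del src[1]                    # PK:1 is deleted on src
dest.pop(1, None)             # Binlog Processor: DELETE PK:1 on dest (nothing there yet)
for pk, row in snapshot.items():
    dest.setdefault(pk, row)  # Data Crawler: INSERT IGNORE PK:1 into dest

# src no longer has PK:1, but dest now does.
```

The DELETE reaches dest before the crawler's stale INSERT, so the row survives on dest and the two sides diverge.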

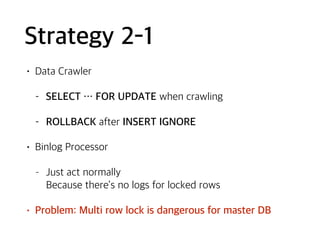

- 55. Strategy 2-1
  - Data Crawler
    - SELECT ... FOR UPDATE when crawling
    - ROLLBACK after INSERT IGNORE
  - Binlog Processor
    - Just act normally, because there are no logs for locked rows
  - Problem: Multi-row locks are dangerous for the master DB

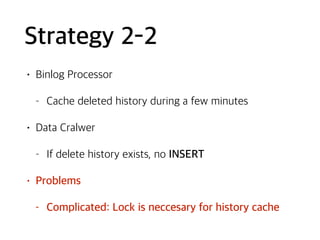

- 56. Strategy 2-2
  - Binlog Processor
    - Cache the delete history for a few minutes
  - Data Crawler
    - If a delete history entry exists, no INSERT
  - Problems
    - Complicated: a lock is necessary for the history cache
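
A rough sketch of the delete-history cache from Strategy 2-2. The class name, the time-to-live parameter and the use of `time.monotonic` are all assumptions; the slide only says that deletes are cached for a few minutes and that a lock is needed.

```python
import threading
import time

class DeleteHistory:
    """Remembers recently deleted PKs so the crawler can skip them."""

    def __init__(self, ttl_seconds: float):
        self._ttl = ttl_seconds
        self._deleted = {}              # pk -> time the delete was seen
        self._lock = threading.Lock()   # shared by processor and crawler

    def record_delete(self, pk, now=None):
        """Called by the binlog processor when it applies a DELETE."""
        now = time.monotonic() if now is None else now
        with self._lock:
            self._deleted[pk] = now

    def was_deleted(self, pk, now=None):
        """Called by the crawler before it inserts a crawled row."""
        now = time.monotonic() if now is None else now
        with self._lock:
            ts = self._deleted.get(pk)
            if ts is not None and now - ts > self._ttl:
                del self._deleted[pk]   # entry expired
                return False
            return ts is not None
```

In a real crawler the check and the following INSERT would presumably have to happen under the same lock, which hints at why the slide calls this strategy complicated.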

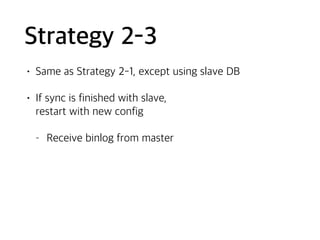

- 57. Strategy 2-3
  - Same as Strategy 2-1, except it uses a slave DB
  - Once sync with the slave is finished, restart with a new config
    - Receive binlog from master

- 58. Test

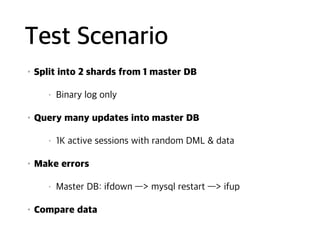

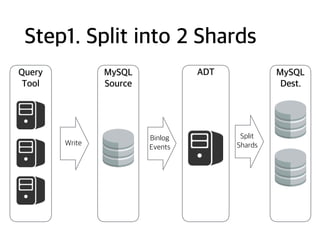

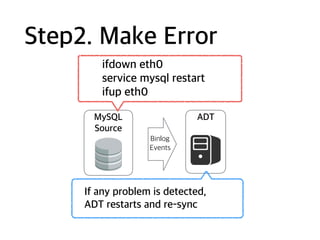

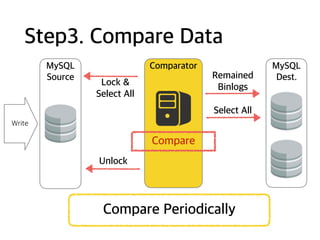

- 59. Test Scenario
  - Split into 2 shards from 1 master DB
    - Binary log only
  - Send many updates to the master DB
    - 1K active sessions with random DML & data
  - Inject errors
    - Master DB: ifdown => mysql restart => ifup
  - Compare data

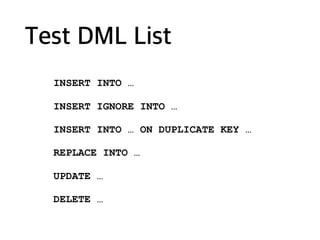

- 60. Test DML List
  - INSERT INTO ...
  - INSERT IGNORE INTO ...
  - INSERT INTO ... ON DUPLICATE KEY ...
  - REPLACE INTO ...
  - UPDATE ...
  - DELETE ...

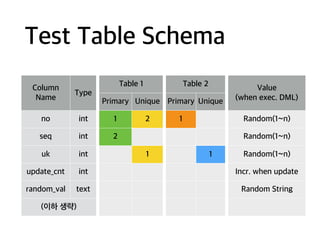

- 61. Test Table Schema
  - Columns: Column Name | Type | Table 1 (Primary, Unique) | Table 2 (Primary, Unique) | Value (when exec. DML)
  - no | int | 1 2 1 | Random(1~n)
  - seq | int | 2 | Random(1~n)
  - uk | int | 1 1 | Random(1~n)
  - update_cnt | int | Incr. when update
  - random_val | text | Random String
  - (rest omitted)

- 62. Step1. Split into 2 Shards MySQL Source Query Tool ADT Binlog Events Write MySQL Dest. Split Shards

- 63. Step2. Make Error
  - MySQL Source => Binlog Events => ADT
  - On the source: ifdown eth0, service mysql restart, ifup eth0
  - If any problem is detected, ADT restarts and re-syncs

- 64. Step3. Compare Data
  - Participants: MySQL Source, Comparator, MySQL Dest.
  - Steps: Lock & Select All, Write Remaining Binlogs, Select All, Compare, Unlock
  - Compare Periodically
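
The comparison step can be sketched as a diff between the source rows and the union of the destination shards. Rows are modelled as plain `pk -> row` dictionaries, and `diff_rows` is a hypothetical helper, not the comparator used in the test.

```python
def diff_rows(source: dict, dest_shards: list) -> dict:
    """Compare source rows (pk -> row) with the union of destination shards."""
    merged = {}
    duplicated = set()
    for shard in dest_shards:
        for pk, row in shard.items():
            if pk in merged:
                duplicated.add(pk)     # same PK found on two shards
            merged[pk] = row
    return {
        "missing": sorted(source.keys() - merged.keys()),
        "extra": sorted(merged.keys() - source.keys()),
        "changed": sorted(pk for pk in source.keys() & merged.keys()
                          if source[pk] != merged[pk]),
        "duplicated": sorted(duplicated),
    }
```

A clean run returns four empty lists; any non-empty entry points at the PKs to inspect.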

- 65. Test result is... No errors for 2 weeks

- 66. TODO

- 67. Wish to Apply for...
  - Shard reconstruction (default)
  - MySQL binary log => NoSQL
  - Copy data change history into OLAP
  - MySQL binary log => Push Notification
  - Re-construct shards by GPS Point (Kakao Taxi?)
  - ...

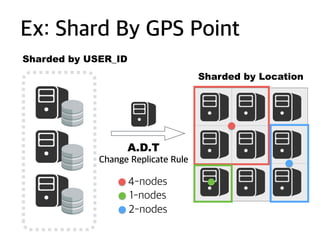

- 68. Ex: Shard By GPS Point: Sharded by USER_ID => A.D.T (Change Replicate Rule) => Sharded by Location (4-nodes, 1-nodes, 2-nodes)

- 69. Next Dev. Plans
  - Change language: Java => GoLang
  - Control Tower: Admin & Monitoring
    - Is ADT alive?
    - Save checkpoint for ungraceful restart
  - Support Multiple DB Types
    - Redis, PgSQL, ...

