ITB_2023_Chatgpt_Box_Scott_Steinbeck.pdf

- 1. Session ChatGPT Box LED BY SCOTT STEINBECK

- 2. SCOTT STEINBECK, SPEAKER AT ITB2023 ● Software Engineer ● 15+ Years of Experience ● Father ● Hardware Tinkerer ● Coding in My Free Time ● Overcommitting Myself ● Automating Everything ● IoT Development ● Teaching Coding/Electronics ● Contributing to Open Source Hobbies

- 3. BRIEF HISTORY Thanks to our sponsors

- 4. Pre-2000s: ● Traditional language models (e.g., n-gram models) had limitations: ○ An explosion of the state space (the curse of dimensionality) and data sparsity led to inconsistent output resembling gibberish. ○ They could not handle novel phrases, and the generated text lacked coherence.
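To make the sparsity problem concrete, here is a minimal bigram (n = 2) model estimated by maximum likelihood on a tiny, made-up corpus. The corpus and function names are illustrative assumptions, not from the talk. Any word pair never seen in training gets probability zero, so a perfectly reasonable but novel phrase is scored as impossible.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count bigrams and return maximum-likelihood P(next | prev)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    # Normalize each row of counts into a conditional distribution.
    return {
        prev: {nxt: c / sum(row.values()) for nxt, c in row.items()}
        for prev, row in counts.items()
    }

def sentence_prob(model, sentence):
    """Multiply bigram probabilities; any unseen pair contributes 0."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= model.get(prev, {}).get(nxt, 0.0)
    return prob

corpus = ["the cat sat", "the dog sat", "the cat ran"]
model = train_bigram(corpus)
print(sentence_prob(model, "the cat sat"))
print(sentence_prob(model, "the dog ran"))  # 0.0: "dog ran" never appeared
```

Longer n-grams make the table exponentially larger and even sparser, which is the curse of dimensionality mentioned above.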

- 5. Mid-2000s: ● Advances in training neural networks by Geoffrey Hinton: ○ Deep neural networks alleviated some of these language model problems. ○ Nuanced, arbitrary concepts could be represented in a finite space, but the output still lacked coherence relative to the input sequence.

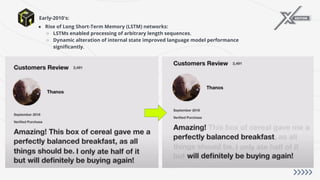

- 6. Early 2010s: ● Rise of Long Short-Term Memory (LSTM) networks: ○ LSTMs enabled processing of sequences of arbitrary length. ○ Dynamically updating their internal state as they read improved language model performance significantly.

- 7. Late 2010s: ● Introduction of Transformer networks with Google's 2017 paper "Attention Is All You Need": ○ Transformers revolutionized natural language processing, achieving state-of-the-art results on tasks such as language translation. ○ Attention mechanisms and parallel processing allowed the model to analyze the entire input simultaneously instead of one token at a time.
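A minimal single-head sketch of the scaled dot-product attention defined in that paper, softmax(QKᵀ/√d_k)V, written with NumPy. Random matrices stand in for learned projection weights, and there is no masking or multi-head splitting, so treat it as an illustration of the mechanism rather than a Transformer layer. Because every token's scores come out of one matrix product, all positions are processed at once.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (n_queries, n_keys): every token vs every token
    weights = softmax(scores, axis=-1)  # each row is a distribution over the input
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 4, 8
X = rng.normal(size=(n_tokens, d_model))  # toy token embeddings
# Random projections stand in for learned weight matrices (assumption for the demo).
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
output, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(output.shape)          # (4, 8): one context-aware vector per token
print(weights.sum(axis=-1))  # each row of attention weights sums to 1
```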

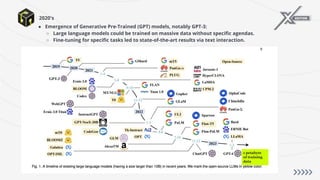

- 8. 2020s: ● Emergence of Generative Pre-trained Transformer (GPT) models, notably GPT-3: ○ Large language models could be trained on massive amounts of data without a specific task in mind. ○ Fine-tuning them for specific tasks led to state-of-the-art results through plain text interaction. 1 petabyte of training data

- 9. DIGGING IN

- 10. AI UNDER THE HOOD AI, or Artificial Intelligence, is a broad field that encompasses the development of intelligent systems capable of performing tasks that typically require human intelligence. For language, this works by combining rule-based modeling of human language with statistical, machine learning, and deep learning models.

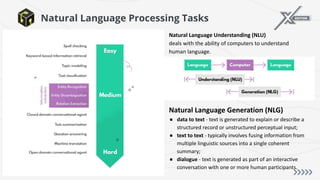

- 11. Natural Language Processing Tasks Natural Language Understanding (NLU) deals with the ability of computers to understand human language. Natural Language Generation (NLG) covers: ● data to text: text is generated to explain or describe a structured record or unstructured perceptual input; ● text to text: typically involves fusing information from multiple linguistic sources into a single coherent summary; ● dialogue: text is generated as part of an interactive conversation with one or more human participants.

- 12. Machine Learning ● ML techniques play a key role in AI by enabling systems to automatically learn from experience, adapt to new data, and improve their performance over time. ● ML algorithms are used in various AI applications, including computer vision, speech recognition, recommendation systems, and, importantly, NLP tasks. Example: machine learning to play Hill Climb Racing

- 13. Deep Learning ● DL has revolutionized ML by enabling models to automatically learn more abstract and nuanced features from raw data. ● DL helps NLP models understand natural language by capturing contextual dependencies and learning complex representations. Example: deep learning for car attribute classification

- 14. WHAT IS AN LLM Practical AI A large language model is a prediction engine that takes a sequence of words and tries to predict the most likely sequence to come after it. It does this by assigning a probability to each likely next word (token) and then sampling from those candidates to choose one. The process repeats until some stopping criterion is met. APPLICATIONS AND INFRASTRUCTURE USING ARTIFICIAL INTELLIGENCE TODAY
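The predict, sample, repeat loop described above can be sketched with a toy stand-in for the model. The probability table below is invented for illustration, and it conditions only on the previous token; a real LLM computes the next-token distribution with a neural network over the whole preceding context. The stopping criteria here are an end-of-sequence token or a maximum length.

```python
import random

# Hypothetical next-token distributions standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.7, "a": 0.3},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.4, "dog": 0.6},
    "cat": {"sat": 0.6, "ran": 0.4},
    "dog": {"sat": 0.3, "ran": 0.7},
    "sat": {"<eos>": 1.0},
    "ran": {"<eos>": 1.0},
}

def generate(probs, max_tokens=10, seed=None):
    """Sample one token at a time until <eos> or max_tokens is reached."""
    rng = random.Random(seed)
    tokens = ["<s>"]
    while len(tokens) - 1 < max_tokens:
        dist = probs[tokens[-1]]  # P(next token | last token) in this toy model
        candidates = list(dist)
        weights = [dist[c] for c in candidates]
        # Sample instead of always taking the most likely token.
        nxt = rng.choices(candidates, weights=weights, k=1)[0]
        if nxt == "<eos>":
            break
        tokens.append(nxt)
    return tokens[1:]

print(" ".join(generate(NEXT_TOKEN_PROBS, seed=42)))
```

Sampling (rather than always picking the top token) is why the same prompt can produce different answers on different runs.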

- 15. HOW AI LEARNS NEW ABILITIES Practical AI As LLMs are scaled up, they hit a series of critical scales at which new abilities are suddenly "unlocked". LLMs are not directly trained to have these abilities, and the abilities appear in rapid and unpredictable ways, as if emerging out of thin air.

- 16. Applications of Large Language Models Practical AI

- 17. ASKING AI A QUESTION

- 18. TOKENIZATION CHATGPT Example question: "What are some common mistakes to avoid when writing code?" Before the model can process the question, the text is split into tokens. Tokens can be words, subwords, or characters, depending on the specific tokenization scheme used.
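The three granularities can be compared on the example question. This is a simplified sketch: ChatGPT uses a learned byte-pair-encoding (BPE) vocabulary, while the subword splitter below is a greedy longest-match over a small, hypothetical vocabulary chosen only to show how an unknown word breaks into known pieces.

```python
import re

def word_tokenize(text):
    # Words and punctuation marks become separate tokens.
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text):
    return list(text)

def subword_tokenize(word, vocab):
    """Greedy longest-match split of one word into pieces from vocab."""
    pieces, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):  # try the longest piece first
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            pieces.append(word[start])  # fall back to a single character
            start += 1
    return pieces

question = "What are some common mistakes to avoid when writing code?"
print(word_tokenize(question))
print(len(char_tokenize(question)))
# Hypothetical vocabulary: "mistakes" is not in it, but its parts are.
vocab = {"mis", "take", "s", "writ", "ing"}
print(subword_tokenize("mistakes", vocab))  # ['mis', 'take', 's']
print(subword_tokenize("writing", vocab))   # ['writ', 'ing']
```

Subword schemes sit between the other two: the vocabulary stays bounded like characters, yet common words remain single tokens like words.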

- 19. INPUT ANALYSIS CHATGPT 1. Informational questions: ● "What is the capital of France?" ● "Can you explain the concept of relativity?" 2. Procedural questions: ● "What are the steps to install a software program?" 3. Comparison questions: ● "Compare the advantages of iOS and Android." 4. Opinion questions: ● "Do you think technology is making us more connected or disconnected?" 5. Problem-solving questions: ● "How can I optimize my website's loading speed?" 6. Requests for advice: ● "Which programming language should I learn as a beginner?" 7. Requests for explanation: ● "What is the significance of the Pythagorean theorem?" Example question: "What are some common mistakes to avoid when writing code?"

- 20. Understanding the Question CHATGPT 1. Neural networks turn tokens into dense vectors (word embeddings). ● This adds context and captures complex relationships between words. Example question: "What are some common mistakes to avoid when writing code?" Vectors with shorter distances between them usually share common contexts in the corpus, which lets us measure how related two words are: |cat - dog| = 1.20 and |cat - cow| = 1.48, so "cat" is closer to "dog" than it is to "cow". (Figure: example vectors for "Monday" and "What".)
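Distances like |cat - dog| are ordinary vector arithmetic. The 3-dimensional vectors below are hypothetical, hand-picked values (real embeddings are learned and have hundreds or thousands of dimensions), so they do not reproduce the slide's 1.20 and 1.48. They only show how the comparison is computed, using both Euclidean distance and the commonly used cosine similarity.

```python
import math

# Hypothetical toy embeddings, not taken from any real model.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "cow": [0.7, 0.2, 0.9],
}

def euclidean(u, v):
    """Straight-line distance |u - v|: smaller means more related."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: closer to 1 means more related."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

for other in ("dog", "cow"):
    d = euclidean(embeddings["cat"], embeddings[other])
    s = cosine_similarity(embeddings["cat"], embeddings[other])
    print(f"|cat - {other}| = {d:.2f}, cosine = {s:.2f}")
```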

- 21. Retrieval/Integration of Knowledge CHATGPT _ _ _ _ mistakes _ avoid _ writing code?

- 22. Generating the Answer CHATGPT _ _ _ _ mistakes _ avoid _ writing code? Common mistakes to avoid when writing code include: ● Poor code organization and lack of proper indentation. ● Inefficient algorithms or data structures that result in slow performance. ● Lack of proper error handling and exception management. ● Insufficient comments and documentation, making the code difficult to understand and maintain. ● Failure to validate input data, leading to potential security vulnerabilities. ● Hardcoding values instead of using constants or configuration files. ● Ignoring code style conventions, making the codebase inconsistent and harder to read. ● Neglecting to handle edge cases and unexpected scenarios. ● Not using version control, resulting in difficulties in tracking changes and collaborating with others. ● Overcomplicating solutions instead of keeping the code simple and maintainable.

- 23. PROMPT ENGINEERING

- 24. WHAT IS A PROMPT CHATGPT A prompt, sometimes referred to as context, is the text provided to a model before it begins generating output. It guides the model to explore a particular area of what it has learned so that the output is relevant to your goals. Prompts are the secret sauce of chat agents

- 25. MORE DETAILED PROMPTS CHATGPT Responses are only as detailed and focused as the question that is asked. When we explain the various roles to the bot, we get a more concise answer: ● Give the bot a role ● Give some context about the user ● Give some dynamic data we want the bot to have access to ● Then give guidance on how the bot should respond. CHATGPT IS EXCEPTIONALLY GOOD AT UNDERSTANDING MARKDOWN & JSON
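The four ingredients can be assembled into a system message using Markdown headings and embedded JSON. This is a sketch under assumptions: the message list follows the role/content layout used by OpenAI-style chat APIs, but no API is called, and the function name, headings, and field values are hypothetical.

```python
import json

def build_messages(role, user_context, dynamic_data, guidance, question):
    """Assemble a chat prompt from a role, user context, dynamic data, and response guidance."""
    # Markdown headings separate the parts; the dynamic data is embedded as JSON.
    system_prompt = "\n\n".join([
        f"# Role\n{role}",
        f"# About the user\n{user_context}",
        f"# Data you can use (JSON)\n{json.dumps(dynamic_data, indent=2)}",
        f"# How to respond\n{guidance}",
    ])
    # Chat-style message list: each entry has a "role" and a "content".
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]

messages = build_messages(
    role="You are a patient senior software engineer who reviews code.",
    user_context="The user is a junior developer in their first year of professional work.",
    dynamic_data={"language": "Python", "team_style_guide": "PEP 8", "open_review_comments": 3},
    guidance="Answer in at most five short bullet points, most important first.",
    question="What are some common mistakes to avoid when writing code?",
)
print(messages[0]["content"])
```

Keeping the data as JSON rather than prose makes it easy to regenerate on every request from live sources.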

- 26. GIVING CHATGPT JSON AND ASKING QUESTIONS CHATGPT

- 27. CRAFTING YOUR OWN PROMPTS CHATGPT If you were helping a user book a trip, you could: ● Ask the user for their dates and destination. ● Behind the scenes, search for flights and hotels. ● Embed the flight and hotel search results in the hidden context. ● Also embed the company's travel policy in the hidden context.
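The trip-booking flow above can be sketched as follows. The search functions, their results, and the travel policy are hypothetical stubs (a real application would call actual flight and hotel services). The point is the separation: search results and policy go into a hidden system message, while only the user's own messages are shown in the chat window.

```python
import json

# Hypothetical stand-ins for real flight and hotel search services.
def search_flights(destination, depart, return_date):
    return [{"flight": "XY123", "to": destination, "depart": depart,
             "return": return_date, "price_usd": 420}]

def search_hotels(destination, depart, return_date):
    return [{"hotel": "Example Inn", "city": destination, "check_in": depart,
             "check_out": return_date, "price_per_night_usd": 160}]

# Hypothetical company policy text.
TRAVEL_POLICY = "Economy class only. Hotels must cost under 200 USD per night."

def build_trip_messages(destination, depart, return_date, user_message):
    """Put search results and policy in a hidden system message; keep the user's text separate."""
    hidden_context = "\n".join([
        "You help employees book business trips. Only recommend options that follow the travel policy.",
        f"Travel policy: {TRAVEL_POLICY}",
        f"Flight search results: {json.dumps(search_flights(destination, depart, return_date))}",
        f"Hotel search results: {json.dumps(search_hotels(destination, depart, return_date))}",
    ])
    return [
        {"role": "system", "content": hidden_context},
        {"role": "user", "content": user_message},
    ]

messages = build_trip_messages("Chicago", "2023-06-05", "2023-06-08",
                               "Which flight and hotel should I book?")
# Only non-system messages would be rendered in the chat window.
visible = [m for m in messages if m["role"] != "system"]
print(visible)
```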

- 29. ONE SHOT PROMPTING CHATGPT These types of prompts provide the AI model with one example of what you expect the output to be. The basic steps are: give a quick explanation of what you need, then add a question-answer pair or a template as an example.
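A one-shot prompt following those two steps might look like this. The task (turning product descriptions into JSON) and the helper name are hypothetical; the single example doubles as an output template, and ending with "Output:" invites the model to continue in the same format.

```python
def one_shot_prompt(instruction, example_input, example_output, new_input):
    """Instruction, exactly one worked example, then the new input to complete."""
    return (
        f"{instruction}\n\n"
        f"Input: {example_input}\n"
        f"Output: {example_output}\n\n"
        f"Input: {new_input}\n"
        f"Output:"
    )

prompt = one_shot_prompt(
    instruction="Convert each product description into JSON with keys name and price.",
    example_input="Blue mug, $12",
    example_output='{"name": "Blue mug", "price": 12}',
    new_input="Red notebook, $5",
)
print(prompt)
```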

- 30. FEW SHOT PROMPTING CHATGPT Large language models are few-shot learners, meaning that they can learn a new task by being provided just a few examples. The basic steps are: give a quick explanation of what you need, then add a few question-answer pairs or templates as examples.
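A few-shot prompt simply repeats the pattern with several demonstrations before the real query. The sentiment-classification task, the labels, and the reviews below are hypothetical examples chosen for illustration; varying the demonstrations (one per label here) helps the model infer the task rather than copy a single answer.

```python
def few_shot_prompt(instruction, examples, query):
    """Instruction, several (input, label) demonstrations, then the query to label."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)

examples = [
    ("The setup took five minutes and everything worked.", "positive"),
    ("It crashed twice before I saved anything.", "negative"),
    ("Does what it says, nothing more.", "neutral"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review as positive, negative, or neutral.",
    examples,
    "The docs were clear and the API is a joy to use.",
)
print(prompt)
```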


- 32. CHAIN OF THOUGHT PROMPTING CHATGPT
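Chain-of-thought prompting gives the model a worked example whose answer spells out the intermediate reasoning before the final answer, so the model tends to reason step by step on the new question too. The slide shows no example, so the demonstration below is a hypothetical one written for illustration.

```python
# A hand-written demonstration whose answer spells out the intermediate steps.
COT_EXAMPLE = (
    "Q: A workshop has 3 tables with 4 laptops on each table. "
    "5 laptops are sent out for repair. How many laptops are left?\n"
    "A: There are 3 * 4 = 12 laptops in total. "
    "After 5 are sent for repair, 12 - 5 = 7 remain. The answer is 7.\n"
)

def chain_of_thought_prompt(question):
    """Prepend the worked example so the model imitates step-by-step reasoning."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA:"

print(chain_of_thought_prompt(
    "A team writes 6 functions a day for 5 days, then deletes 4 duplicates. "
    "How many functions do they have?"
))
```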

- 33. AUGMENTING LARGE LANGUAGE MODELS

- 37. VECTOR DATABASES CHATGPT Full-text search VS Semantic search
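The difference between the two kinds of search can be shown in a few lines. Full-text search here is a simple shared-word count; semantic search picks the nearest document vector by cosine similarity. The documents, query, and embedding vectors are all hypothetical (a real system would get vectors from an embedding model and store them in a vector database), and they were chosen so the two methods disagree.

```python
import math

docs = {
    "doc1": "Repairing punctured bicycle wheels",
    "doc2": "Tire pressure chart for cars",
}
# Hypothetical embeddings; in practice these come from an embedding model.
doc_vectors = {"doc1": [0.9, 0.1, 0.3], "doc2": [0.2, 0.9, 0.1]}
query = "how to fix a flat tire"
query_vector = [0.85, 0.2, 0.25]

def keyword_search(query, docs):
    """Full-text style: score = number of shared lowercase words."""
    q = set(query.lower().split())
    scores = {d: len(q & set(text.lower().split())) for d, text in docs.items()}
    return max(scores, key=scores.get)

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def semantic_search(query_vector, doc_vectors):
    """Semantic style: document whose vector is most similar to the query vector."""
    return max(doc_vectors, key=lambda d: cosine(query_vector, doc_vectors[d]))

print(keyword_search(query, docs))                 # doc2: shares the word "tire"
print(semantic_search(query_vector, doc_vectors))  # doc1: nearest vector (toy values)
```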

- 38. AGENTS CHATGPT Build your own chat that pulls in other services, for example by attaching a calculator tool for questions involving calculations.
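A minimal sketch of the calculator-tool idea, assuming a simple rule-based router: if the question contains an arithmetic expression, the agent evaluates it with a small safe evaluator built on Python's `ast` module (no `eval()`); otherwise it falls back to the language model. `ask_llm` is a hypothetical placeholder, not a real API. Production agents usually let the model itself decide when to call a tool, rather than relying on a regex.

```python
import ast
import operator
import re

# Binary operators the calculator tool accepts.
OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
       ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression):
    """Safely evaluate +, -, *, / and parentheses on numbers."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval"))

def ask_llm(question):
    # Hypothetical placeholder for a real chat-model call.
    return f"[LLM would answer: {question!r}]"

def extract_expression(question):
    """Longest run of arithmetic characters that has a digit and an operator, or None."""
    runs = [r.strip() for r in re.findall(r"[\d.+\-*/()\s]+", question)]
    runs = [r for r in runs if re.search(r"\d", r) and re.search(r"[+\-*/]", r)]
    return max(runs, key=len) if runs else None

def agent(question):
    """Route arithmetic to the calculator tool and everything else to the LLM."""
    expression = extract_expression(question)
    if expression is not None:
        try:
            return f"{expression} = {calculator(expression)}"
        except (ValueError, SyntaxError, ZeroDivisionError):
            pass  # not a usable expression after all; let the model handle it
    return ask_llm(question)

print(agent("What is 12 * (3 + 4)?"))  # 12 * (3 + 4) = 84
print(agent("What are some common mistakes to avoid when writing code?"))
```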