Janus & Docker: friends or foe?

- 1. Janus & Docker: friends or foe? Alessandro Amirante @alexamirante

- 2. Outline
  - Microservices & Docker
  - Janus as a microservice: issues and takeaways
    - Docker networking explained
  - Examples of Docker-based complex architectures
    - IETF RPS
    - Recordings production

- 5. Docker
  - Open source platform for developing, shipping and running applications using container virtualization technology
  - De facto standard container technology
  - Containers share the same OS kernel
  - Avoid replicating (virtualizing) guest OS, RAM, CPUs, ...
  - Containers are isolated from each other, but can share resources
    - File system volumes
    - Networks
    - …

- 8. Deploying Janus
  - Bare metal
  - Virtual Machines
  - Docker containers
  - Cloud instances
  - A mix of the above

- 9. Container deployment strategies
  - Most WebRTC failures are network-related
  - Different networking modes are available for containers
    - Host
    - NAT
    - Dedicated IP
  - Choosing the most appropriate one is the main challenge
  - Spoiler alert: dedicated IP addresses for the win!

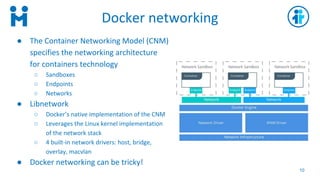

- 10. Docker networking
  - The Container Networking Model (CNM) specifies the networking architecture for container technology
    - Sandboxes
    - Endpoints
    - Networks
  - Libnetwork
    - Docker's native implementation of the CNM
    - Leverages the Linux kernel implementation of the network stack
    - 4 built-in network drivers: host, bridge, overlay, macvlan
  - Docker networking can be tricky!

- 11. Network drivers: host
  - Containers use the network stack of the host machine
    - No separate network namespace
    - All host ifaces can be directly used by the container
  - Easiest networking mode
  - Network port conflicts need to be avoided
  - Limits the number of containers running on the same host
  - Auto-scaling is difficult
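
A minimal sketch of why host networking caps density: every Janus instance sharing the host stack needs its own slice of UDP ports. It assumes a hypothetical `janus-image` whose entrypoint is the `janus` binary (so trailing arguments reach Janus's `--rtp-port-range` option) and an illustrative 20000-40000 port budget. Transport ports such as the HTTP API would also need per-instance configuration, which is not shown here.

```shell
#!/usr/bin/env bash
# Host networking sketch: split one UDP port budget into non-overlapping
# RTP ranges, one per Janus instance sharing the host's network stack.

# max_instances MIN MAX PER_INSTANCE -> how many instances fit in MIN..MAX
max_instances() {
  local min=$1 max=$2 per=$3
  echo $(( (max - min + 1) / per ))
}

# rtp_range MIN PER_INSTANCE INDEX -> "start-end" for the 0-based INDEX-th instance
rtp_range() {
  local min=$1 per=$2 idx=$3
  local start=$(( min + idx * per ))
  echo "${start}-$(( start + per - 1 ))"
}

# print_host_commands MIN MAX PER_INSTANCE -> one docker run line per instance.
# Commands are printed, not executed: review them, then pipe them to sh.
# "janus-image" is a hypothetical image whose entrypoint is the janus binary.
print_host_commands() {
  local min=$1 max=$2 per=$3 n i
  n=$(max_instances "$min" "$max" "$per")
  i=0
  while [ "$i" -lt "$n" ]; do
    printf 'docker run -d --name janus-%d --network host janus-image --rtp-port-range=%s\n' \
      "$i" "$(rtp_range "$min" "$per" "$i")"
    i=$(( i + 1 ))
  done
}

print_host_commands 20000 40000 2000
```

With 2000 ports per instance, ten instances exhaust the budget; adding an eleventh means another host, which is the density and auto-scaling limit listed above.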

- 12. Network drivers: bridge
  - Docker's default network mode
  - Implements NAT functionality
  - Containers on the same bridge network communicate over LAN
  - Containers on different bridge networks need routing
  - Port mapping needed for reachability from the outside
    - Conflicts need to be avoided

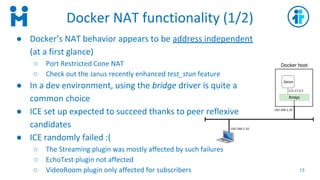
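
With the bridge driver, each container's UDP range must also be published on the host. A minimal sketch, assuming the same hypothetical `janus-image` and keeping host and container ranges identical, so the range Janus allocates from (`--rtp-port-range`) is exactly the one that is published; 8088 is the default port of Janus's HTTP transport.

```shell
#!/usr/bin/env bash
# Bridge networking sketch: print a docker run line that publishes a
# per-container UDP range (1:1 host:container) plus the HTTP API port.

# bridge_run_cmd INDEX BASE SIZE -> command for the 0-based INDEX-th container
bridge_run_cmd() {
  local idx=$1 base=$2 size=$3
  local start=$(( base + idx * size ))
  local end=$(( start + size - 1 ))
  local http=$(( 8088 + idx ))   # distinct host port for each container's HTTP API
  printf 'docker run -d --name janus-%d -p %d:8088/tcp -p %d-%d:%d-%d/udp janus-image --rtp-port-range=%d-%d\n' \
    "$idx" "$http" "$start" "$end" "$start" "$end" "$start" "$end"
}

bridge_run_cmd 0 20000 100
bridge_run_cmd 1 20000 100
```

Every extra container means another published range, and changing the container count means recomputing and republishing ranges, which is why port mapping fits poorly with scaling a service up and down.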

- 13. Docker NAT functionality (1/2)
  - Docker's NAT behavior appears to be address independent (at first glance)
    - Port Restricted Cone NAT
    - Check out Janus's recently enhanced test_stun feature
  - In a dev environment, using the bridge driver is quite a common choice
  - ICE setup expected to succeed thanks to peer reflexive candidates
  - ICE randomly failed :(
    - The Streaming plugin was the most affected by such failures
    - EchoTest plugin not affected
    - VideoRoom plugin only affected for subscribers

- 14. Docker NAT functionality (2/2)
  - Turned out to depend on which party sends the JSEP offer
    - Browser offers, Janus answers → ICE succeeds
    - Janus offers, browser answers → ICE fails
    - Janus is the offerer for Streaming viewers and VideoRoom subscribers, matching the failure pattern
  - Tracked down this behavior to libnetfilter, upon which Docker's libnetwork is based
  - The Docker NAT is not address independent!
    - It sometimes acts like a symmetric NAT

- 21. Takeaways
  - Docker networking can be tricky when dealing with ICE
  - Host networking limits the number of containers running on the same host
  - Port mapping is not ideal when you want to scale a service up/down as needed
  - NATed networks should be fine in a controlled environment, but…
  - …things get weird when the browser is also behind a NAT
    - Multiprocess Firefox has a built-in UDP packet filter
  - The new obfuscation of host candidates through mDNS makes things even worse!
    - Chrome and Safari already there, Firefox coming soon
  - Dedicated IP addresses to containers for the win!
    - Macvlan
    - Pipework

- 22. Macvlan
  - Docker built-in network driver
  - Allows a single (host) physical iface to have multiple MAC and IP addresses to assign to containers
  - No need for port publishing
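
A minimal sketch of the macvlan approach: the container gets its own address on the host's LAN, so no `-p` flags are needed. The parent interface, the 192.0.2.0/24 documentation subnet, the network name `pubnet`, and `janus-image` are placeholders.

```shell
#!/usr/bin/env bash
# Macvlan sketch: print the commands that create a macvlan network bound
# to a host interface and start a container with a fixed address on it.
PARENT_IF=${PARENT_IF:-eth0}          # host interface the macvlan is attached to
SUBNET=${SUBNET:-192.0.2.0/24}        # placeholder (RFC 5737 documentation range)
GATEWAY=${GATEWAY:-192.0.2.1}

# macvlan_cmds IP -> network creation + container start, one per line
macvlan_cmds() {
  printf 'docker network create -d macvlan --subnet=%s --gateway=%s -o parent=%s pubnet\n' \
    "$SUBNET" "$GATEWAY" "$PARENT_IF"
  printf 'docker run -d --name janus --network pubnet --ip %s janus-image\n' "$1"
}

macvlan_cmds 192.0.2.10
```

The network is created once per host; each further container only needs its own `--ip`.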

- 23. Pipework
  - Tool for connecting together containers in arbitrarily complex scenarios
  - https://github.com/jpetazzo/pipework
  - Allows creating a new network interface inside a container and setting networking parameters (IP address, netmask, gateway, ...)
    - This new interface becomes the default one for the container
  - `$ pipework <hostinterface> [-i containerinterface] <guest> <ipaddr>/<subnet>[@default_gateway] [macaddr][@vlan]`
  - `$ pipework <hostinterface> [-i containerinterface] <guest> dhcp [macaddr][@vlan]`
  - If you want to use both IPv4 and IPv6, the IPv6 interface has to be created first

- 24. Example: IETF Remote Participation
  - The whole IETF Remote Participation Service is based upon Docker
  - The NOC team deploys bare metal servers at meeting venues
  - Four VMs running on different servers are dedicated to the remote participation service
  - VMs host a bunch of Docker containers (1 instance of the Meetecho RPS):
    - Janus
    - Asterisk
    - Tomcat
    - Redis + Node.js (containers share the network stack and have public IPv4 and IPv6 addresses)
    - Nginx
  - Eight instances of the Meetecho RPS (one per room)
    - Split across two different VMs
    - A third VM is left idle for failover → container migration if needed
  - Other containers (stats, auth service, TURN, …) running on the fourth VM
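
The Redis + Node.js pair relies on Docker letting one container join another container's network namespace. A minimal sketch: the container and image names (other than the official `redis` image) are hypothetical, and `pubnet` stands for whatever network provides the public addresses, since the slide does not say how those are assigned.

```shell
#!/usr/bin/env bash
# Shared network stack sketch: Node.js owns the (public) network interfaces,
# Redis joins its namespace with --network container:<name>, so the two
# reach each other over localhost and share the same public addresses.

# rps_pair_cmds ROOM NETWORK -> two docker run lines for one RPS instance
rps_pair_cmds() {
  local room=$1 net=$2
  printf 'docker run -d --name rps-%s-node --network %s rps-node-image\n' "$room" "$net"
  printf 'docker run -d --name rps-%s-redis --network container:rps-%s-node redis\n' "$room" "$room"
}

rps_pair_cmds room1 pubnet
```

The order matters: the container that owns the stack must be running before the second one can join it.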

- 25. Melter: a Docker Swarm cluster for recordings production

- 26. Janus recording functionality
  - Janus records individual contributions into MJR files
  - MJRs can be converted into playable Opus/WAV/WebM/MP4 files via the janus-pp-rec tool shipped with Janus
  - Individual contributions can be merged together into a single audio/video file
    - Timing information needs to be taken into account to properly sync media
    - Other info might be needed as well, e.g., the time of the first keyframe written into the MJR
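
A minimal batch-conversion sketch for the janus-pp-rec step: janus-pp-rec takes a source MJR and a target file whose extension selects the output format. The `-audio.mjr`/`-video.mjr` naming and the Opus plus VP8/VP9 codec choice (hence `.opus`/`.webm`) are assumptions that depend on how recordings are configured. Each track is converted separately; syncing and merging them is a further step.

```shell
#!/usr/bin/env bash
# Batch conversion sketch: run janus-pp-rec on every MJR file in a directory.
# Assumed naming: *-audio.mjr (Opus) and *-video.mjr (VP8/VP9).

# target_for FILE -> output path; fails for files it does not recognise
target_for() {
  case $1 in
    *-audio.mjr) printf '%s\n' "${1%.mjr}.opus" ;;
    *-video.mjr) printf '%s\n' "${1%.mjr}.webm" ;;
    *) return 1 ;;
  esac
}

# convert_all DIR -> convert each recognised MJR in DIR next to its source
convert_all() {
  local f out
  for f in "$1"/*.mjr; do
    [ -e "$f" ] || continue                  # glob matched nothing
    if ! out=$(target_for "$f"); then
      echo "skipping $f" >&2
      continue
    fi
    janus-pp-rec "$f" "$out"
  done
}
```

Usage: `convert_all /path/to/recordings`.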

- 27. Meetecho Melter
  - A solution for converting MJR files into videos according to a given layout
  - Leverages the MLT Multimedia Framework
    - https://www.mltframework.org/
  - Post-processing and encoding happen on a cluster of machines hosting Docker containers
    - Initially implemented with CoreOS
    - Moved to Docker native Swarm mode

- 28. Docker Swarm
  - Cluster management and orchestration embedded in the Docker engine
  - Docker engine = swarm node
    - Manager(s)
      - Maintain cluster state through Raft consensus
      - Schedule services
      - Serve the swarm HTTP API
    - Worker(s)
      - Run containers scheduled by managers
  - Fault tolerance
    - Containers are re-scheduled if a node fails
    - With N managers, the cluster can tolerate up to (N-1)/2 (rounded down) manager failures
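
The fault-tolerance rule follows from Raft's majority quorum: with N managers a quorum is floor(N/2)+1, so up to N minus the quorum, i.e. floor((N-1)/2), managers can fail. A small sketch of that arithmetic:

```shell
#!/usr/bin/env bash
# Raft quorum arithmetic for a swarm with N managers (integer division floors).
quorum()             { echo $(( $1 / 2 + 1 )); }   # managers that must stay up
tolerated_failures() { echo $(( ($1 - 1) / 2 )); } # managers that may fail

for n in 1 2 3 4 5 7; do
  printf '%d managers: quorum %d, tolerates %d failure(s)\n' \
    "$n" "$(quorum "$n")" "$(tolerated_failures "$n")"
done
```

Four managers tolerate no more failures than three, which is why odd manager counts are the usual choice.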

- 29. Challenges
  - Leverage a number of bare metal servers as swarm nodes
  - Set the maximum number of containers per node according to nodes' specs
  - Schedule containers according to the above limits
  - Solution: exploit Docker networks and the swarm scheduler in a "hacky" way

- 30. Swarm-scoped Macvlan network
  - On each swarm node, create a network configuration
    - The network will have a limited number of IP addresses available (via subnetting)
    - The `--aux-address` option excludes an IP address from the usable ones
    - Non-overlapping address ranges must be defined across all nodes
    - `$ docker network create --config-only --subnet 192.168.100.0/24 --ip-range 192.168.100.0/29 --gateway 192.168.100.254 --aux-address "a=192.168.100.1" --aux-address "b=192.168.100.2" meltnet-config`
  - On the Swarm manager, create a swarm-scoped network from the defined config
    - `$ docker network create --config-from meltnet-config --scope swarm -d macvlan meltnet`
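
Keeping per-node ranges non-overlapping by hand is error-prone, so a small generator can derive them. A minimal sketch, assuming /29 slices of the 192.168.100.0/24 subnet from the example (node i gets 192.168.100.(8·i)/29) and reserving the first few addresses of each slice with `--aux-address` to lower that node's container cap. The slice size, the `a1`, `a2`, ... aux names, and the `-o parent=` interface (not shown in the slide's command) are assumptions.

```shell
#!/usr/bin/env bash
# Per-node config sketch: node INDEX gets slice 192.168.100.(8*INDEX)/29.
# The first EXCLUDE addresses after the slice start are reserved via
# --aux-address, lowering how many containers can be placed on that node.

# node_config_cmd INDEX EXCLUDE PARENT_IF -> command to run on that node
node_config_cmd() {
  local idx=$1 exclude=$2 parent=$3 base i aux=""
  # Slices 0-30 cover .0-.247 (slice 31 would hold the .254 gateway);
  # a slice has 8 addresses, so at most 7 can be reserved after its start.
  if [ "$idx" -lt 0 ] || [ "$idx" -gt 30 ] || [ "$exclude" -lt 0 ] || [ "$exclude" -gt 7 ]; then
    echo "usage: node_config_cmd INDEX(0-30) EXCLUDE(0-7) PARENT_IF" >&2
    return 1
  fi
  base=$(( idx * 8 ))
  i=1
  while [ "$i" -le "$exclude" ]; do
    aux="$aux --aux-address \"a$i=192.168.100.$(( base + i ))\""
    i=$(( i + 1 ))
  done
  printf 'docker network create --config-only --subnet 192.168.100.0/24 --ip-range 192.168.100.%d/29 --gateway 192.168.100.254 -o parent=%s%s meltnet-config\n' \
    "$base" "$parent" "$aux"
}

# Example: three nodes; the weakest (node 2) gets more addresses reserved.
node_config_cmd 0 2 eth0
node_config_cmd 1 2 eth0
node_config_cmd 2 4 eth0
```

Each printed line is meant to be run on its own node (for example over ssh); `meltnet` is then created once on the manager with `--config-from`.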

- 31. Swarm-scoped Macvlan network
  - The manager spawns containers on the swarm from a Docker stack descriptor
  - Each container is plumbed into the meltnet network
  - If a node runs out of IP addresses, new containers will not be allocated there until one becomes available again
  - Containers also leverage the NFS volume driver to read from and write to a shared Network Attached Storage (NAS)
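
A minimal stack descriptor sketch along those lines, written from a shell function so it can be regenerated per deployment. It attaches the workers to the external, pre-created `meltnet` network and declares an NFS-backed volume through Docker's `local` volume driver, which is one common way to mount NFS; the slide does not name the exact driver used. The image name, replica count, NAS address and export path are hypothetical.

```shell
#!/usr/bin/env bash
# Stack descriptor sketch for the Melter workers.
# write_stack [FILE] -> writes the descriptor (default: melter-stack.yml)
write_stack() {
  cat > "${1:-melter-stack.yml}" <<'EOF'
version: "3.8"
services:
  melter:
    image: melter-worker:latest        # hypothetical worker image
    networks:
      - meltnet
    volumes:
      - recordings:/recordings
    deploy:
      replicas: 20                     # upper bound; placement limited by free meltnet addresses
      restart_policy:
        condition: on-failure
networks:
  meltnet:
    external: true                     # created beforehand with --config-from
volumes:
  recordings:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=192.0.2.50,rw"          # hypothetical NAS address
      device: ":/export/recordings"    # hypothetical export path
EOF
}
```

After `write_stack`, the descriptor can be deployed from a manager with `docker stack deploy -c melter-stack.yml melter`.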

- 32. Output
