強化学習勉強会?論文紹介(Kulkarni et al., 2016)

- 1. 第32回 強化学習勉強会 Hierarchical Deep Reinforcement Learning: Integrating Temporal Abstraction and Intrinsic Motivation. Kulkarni et al., NIPS2016

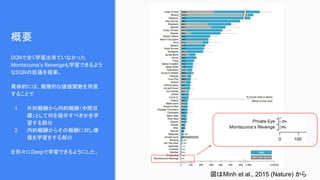

- 3. 概要 DQNで全く学習出来ていなかった Montezuma’s Revengeも学習できるよう なDQNの拡張を提案。 具体的には、階層的な価値関数を用意 することで 1. 外的報酬から内的報酬(中間目 標)として何を提示すべきかを学 習する部分 2. 内的報酬からその報酬に対し価 値を学習をする部分 を別々にDeepで学習できるようにした。 図はMinh et al., 2015 (Nature) から

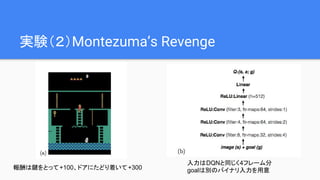

- 4. Montezuma’s Revenge ● 障害物を交わしたりしながら、お宝を集めるゲーム ● 次のステージ(左上右上とか)に行くには、鍵をとる必要がある ● 鍵を取る(+100)、次のステージに行く( +300) ● 通常のDANが0.0、Gorilla DQNが4.16(DeepMindがこのあと同じ NIPS2016の論文でMontezuma’s RevengeにおけるSoTAを叩き出 した) 特徴としては、報酬が 1. Sparse 2. Delayed

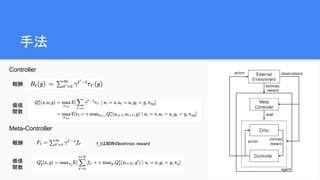

- 6. 手法

- 7. 主な関連研究 1. Human-level control through deep reinforcement learning, Minh et al., 2015 (Nature) a. DQN論文 2. Temporal abstraction (Sutton et al., 1998) a. option(例えば、複数のアクションを一度に行なう)によって行動空間の時間軸での抽象化が行 える 3. Universal value function (Schaul et al., 2015) a. 価値関数 V(s) を V(s; g) へと一般化 4. Intrisically Motivated RL (Singh et al., 2004) a. 心理学でいう内発的要因を考慮できるようなモデル 5. Unifying Count-Based Exploration and Intrinsic Motivation. Bellemare et al., 2016 (NIPS) a. DeepMindから出てるNIPS2016の論文。Montezuma’s Revengeでもハイスコア。arXivに出た のは後発。ちなみにこの論文のことは引用してない。

- 8. 学習 Q1もQ2も(s, a, r, 蝉’)のサンプルから勾配法で学习

- 9. アルゴリズム Exploration probability 1. epsilon2 (meta) = 1から段々小さ くしていく 2. epsilon1, g = goalへの到達確率 と独立、一定

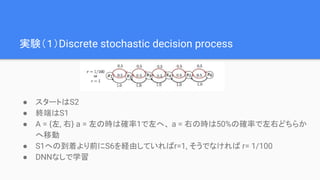

- 10. 実験(1)Discrete stochastic decision process ● スタートはS2 ● 終端はS1 ● A = {左, 右} a = 左の時は確率1で左へ、 a = 右の時は50%の確率で左右どちらか へ移動 ● S1への到着より前にS6を経由していればr=1, そうでなければ r= 1/100 ● DNNなしで学習

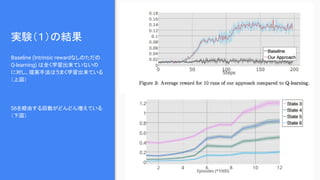

- 11. 実験(1)の結果 Baseline (Intrinsic rewardなしのただの Q-learning) は全く学習出来ていないの に対し、提案手法はうまく学習出来ている (上図) S6を経由する回数がどんどん増えている (下図)

- 13. 実験の細かい設定 ● Goal (Intrinsic motivation) の設定 ○ we built a custom object detector that provides plausible object candidates => おそらく幾つかのオブジェクトを人手で明示している ● Pretraining ○ 最初にmeta-controllerのexploration probabilityを1で固定して、各goal付近でのサンプルを ちゃんと探索する ○ 恐らく先にcontrollerがある程度賢くないと、 meta-controllerが学習出来ない

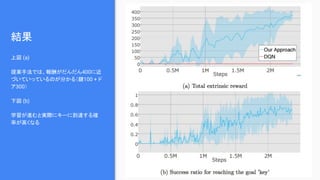

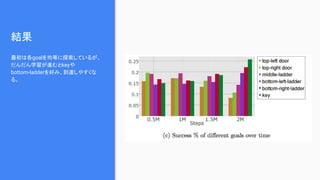

- 14. 結果 上図 (a) 提案手法では、報酬がだんだん400に近 づいていっているのが分かる(鍵100 + ド ア300) 下図 (b) 学習が進むと実際にキーに到達する確 率が高くなる

- 16. Sample trajectory 図の見方: 左上(失敗) 右上 => 左下 => 右下(成功) gif: https://goo.gl/3Z64Ji

- 17. 感想 ● 学習するDNNが二つにはなるが、既存のDQNを大きく変えない形でIMに基づい た学習ができるようになっているのは面白いと思った ● 実験結果が弱い ○ 最初の簡単な実験でも、これで Intrinsic motivationが効いてると主張できてるのか少し謎 ○ Montezuma’s Revenge以外のゲームで上手く行く形になっているのか疑問。 ● (汎用的と主張してはいるが)goalやcriticの決め方が恣意的 ○ タスク依存でユーザが決める必要がある ● DeepMindのやつとどっちが賢いの? ○ これは次のステージに行く前にエピソードを打ち切ってるっぽい ○ ので単純なスコアの比較は出来ないが DeepMindのやつのほうが汎用性が高く見える ○ 動画の動きを見ても DeepMindのやつの方がスムーズで賢そう ○ https://www.youtube.com/watch?v=0yI2wJ6F8r0

- 18. 参考文献 1. Hierarchical Deep Reinforcement Learning: Integrating Temporal Abstraction and Intrinsic Motivation, Kulkarni et al., 2016 (NIPS) (https://arxiv.org/abs/1604.06057) 2. Human-level control through deep reinforcement learning, Minh et al., 2015 (Nature) 3. Universal Value Function Approximators, Schaul et al., 2015 (ICML) 4. Between MDPs and semi-MDPs:A framework for temporal abstractionin reinforcement learning, Sutton et al., 1999 (Artificial Intelligence) 5. Intrinsically Motivated Reinforcement Learning, Singh et al., 2004 6. github.com/EthanMacdonald/h-DQN (https://github.com/EthanMacdonald/h-DQN) 7. Sample trajectory (gif) (https://goo.gl/3Z64Ji)