Learning to Translate with Joey NMT

- 1. Learning to Translate with Joey NMT. PyData Meetup Montreal, Julia Kreutzer, Feb 25, 2021

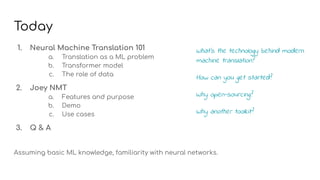

- 2. Today
  - 1. Neural Machine Translation 101: (a) Translation as a ML problem, (b) Transformer model, (c) The role of data
  - 2. Joey NMT: (a) Features and purpose, (b) Demo, (c) Use cases
  - 3. Q & A
  - Assuming basic ML knowledge, familiarity with neural networks.
  - What's the technology behind modern machine translation? How can you get started? Why open-sourcing? Why another toolkit?

- 3. [Optional] Demo Preparation. If you want to train your own translation model during this presentation:
  - 1. Open joey_demo.ipynb on Colab.
  - 2. Create a copy.
  - 3. Select GPU runtime: Runtime -> Change runtime type -> Hardware accelerator: GPU
  - 4. Run all cells: Runtime -> Run all
  - 5. Come back to the talk :)
  - We'll inspect later what's happening there.

- 4. Neural Machine Translation 101

- 5. Translation as a ML Problem
  - Challenges: unlimited length, structural dependencies, unseen words, figurative language
  - Seq2Seq: modeling sentences (mostly), connections between all words, sub-word modeling, a lot of training data
  - Input: What is a poutine ?
  - Output: Qu'est-ce qu'une poutine ?

- 6. The Transformer: "Attention is all you need" (Vaswani et al. 2017)
  - [Figure: Transformer architecture, annotated "Decoder Specialties". Source: Vaswani et al. 2017]

- 7. Training vs. Inference
  - Conditional language modeling: predict the next token y_t
    - given source X and all previous tokens of the reference during training.
    - given source X and previously predicted tokens during inference.
  - Training with MLE, inference with greedy or beam search.
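
The training/inference distinction on this slide can be written out as equations. This is a sketch in standard notation; the symbols $\theta$ (model parameters), $T$ (target length), $\mathcal{D}$ (the parallel training set) and the hats on predicted tokens are assumptions added here, not taken from the slides.

$$
p_\theta(Y \mid X) = \prod_{t=1}^{T} p_\theta(y_t \mid y_{<t}, X)
$$

$$
\hat{\theta} = \arg\max_{\theta} \sum_{(X, Y) \in \mathcal{D}} \sum_{t=1}^{T} \log p_\theta(y_t \mid y_{<t}, X)
\qquad \text{(MLE training, conditioning on the reference prefix } y_{<t}\text{)}
$$

$$
\hat{y}_t = \arg\max_{y} \; p_{\hat{\theta}}(y \mid \hat{y}_{<t}, X)
\qquad \text{(greedy inference, conditioning on the predicted prefix } \hat{y}_{<t}\text{)}
$$

Beam search replaces the single arg max in the last line by keeping several candidate prefixes per step.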

- 8. Beam Search
  - Keep the k most likely prediction sequences in each step.
    - more expensive than greedy
    - more exact
  - Implementation on mini-batches is tricky!
  - [Figure: beam search example with k=2. Source: G. Neubig's course on MT and Seq2Seq]
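
A minimal, unbatched sketch of the idea on this slide, in plain Python. It is not Joey NMT's implementation (which works on mini-batches). The probability table `TOY`, the helper `toy_next_log_probs` and the end-of-sequence symbol `</s>` are hypothetical, chosen so that greedy decoding (k=1) and beam search with k=2 give different results.

```python
import math

# Hypothetical toy "model": next-token probabilities for each prefix.
# Any prefix not listed here can only be followed by the end symbol.
TOY = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"x": 0.5, "y": 0.5},
    ("b",): {"z": 0.9, "w": 0.1},
}


def toy_next_log_probs(prefix):
    """Return a dict token -> log-probability for the given prefix."""
    probs = TOY.get(prefix, {"</s>": 1.0})
    return {token: math.log(p) for token, p in probs.items()}


def beam_search(next_log_probs, k=2, max_len=10, eos="</s>"):
    """Keep the k most likely prefixes in each step; return the best hypothesis."""
    beams = [((), 0.0)]  # (prefix, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        # Expand every open prefix by every possible next token.
        candidates = []
        for prefix, score in beams:
            for token, logp in next_log_probs(prefix).items():
                candidates.append((prefix + (token,), score + logp))
        # Keep only the k best expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:k]:
            if prefix[-1] == eos:
                finished.append((prefix, score))
            else:
                beams.append((prefix, score))
        if not beams:
            break
    finished.extend(beams)  # hypotheses cut off at max_len
    return max(finished, key=lambda c: c[1])


greedy_tokens, greedy_score = beam_search(toy_next_log_probs, k=1)
beam_tokens, beam_score = beam_search(toy_next_log_probs, k=2)
print(greedy_tokens, round(math.exp(greedy_score), 2))  # ('a', 'x', '</s>') 0.3
print(beam_tokens, round(math.exp(beam_score), 2))      # ('b', 'z', '</s>') 0.36
```

With k=1 the search commits to the locally best first token `a` and ends with probability 0.3; with k=2 it also keeps `b` alive and finds the more likely sequence with probability 0.36, at the cost of scoring more candidates per step.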

- 9. Words?
  - Pre-processing plays a huge role in NMT.
  - `qu'est-ce qu'une poutine ?`: 4 tokens, 4 types
  - vs. `qu ' est - ce qu ' une poutine ?`: 10 tokens, 8 types
  - Sub-words instead of words: frequency-based automatic segmentation.
  - Algorithms: BPE, unigram LM.
  - Implementations: subword-nmt, SentencePiece.
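
The token and type counts on this slide can be reproduced with a few lines of Python. This sketch assumes whitespace tokenization of the two example strings; the function name `count_tokens_and_types` is made up for illustration.

```python
def count_tokens_and_types(text):
    """Split on whitespace; tokens are all items, types are the distinct ones."""
    tokens = text.split()
    return len(tokens), len(set(tokens))


word_level = "qu'est-ce qu'une poutine ?"
punctuation_split = "qu ' est - ce qu ' une poutine ?"

print(count_tokens_and_types(word_level))         # (4, 4)
print(count_tokens_and_types(punctuation_split))  # (10, 8)
```

The second segmentation produces more tokens per sentence but re-uses pieces such as `qu` and `'`, which is the trade-off that sub-word algorithms like BPE tune automatically from frequency statistics.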

- 10. The Role of Data
  - A "base"-sized Transformer has ~65M weights. How much data does it need?
    - It depends!
    - "As much as you can find" heuristic
    - Beyond parallel data: unsupervised NMT, data augmentation, dictionaries, pre-trained embeddings, multilingual modeling
  - How similar are source and target language? What kind of quality are you expecting? How complex is the text?
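
A back-of-the-envelope check of the "~65M weights" figure. The hyperparameters below are assumptions taken from the "base" configuration in Vaswani et al. 2017 (6 layers, model size 512, feed-forward size 2048) plus an assumed shared vocabulary of 37,000 sub-words with one embedding matrix tied across source, target and output layer. The exact total shifts with vocabulary size and implementation details.

```python
def transformer_base_params(vocab_size=37000, d_model=512, d_ff=2048, num_layers=6):
    """Rough parameter count for an encoder-decoder Transformer (assumed setup)."""
    # Q, K, V and output projections, each with a bias vector.
    attention = 4 * (d_model * d_model + d_model)
    # Two linear layers with biases.
    feed_forward = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)
    # Scale and shift vectors of one layer normalization.
    layer_norm = 2 * d_model

    # Encoder layer: self-attention + feed-forward, 2 layer norms.
    encoder_layer = attention + feed_forward + 2 * layer_norm
    # Decoder layer: self-attention + cross-attention + feed-forward, 3 layer norms.
    decoder_layer = 2 * attention + feed_forward + 3 * layer_norm

    # One embedding matrix, assumed shared by source, target and output layer.
    embeddings = vocab_size * d_model
    return num_layers * (encoder_layer + decoder_layer) + embeddings


total = transformer_base_params()
print(f"{total / 1e6:.1f}M")  # 63.1M
```

Under these assumptions the count lands at about 63M, in the same range as the figure on the slide. Roughly 19M of that sits in the embedding matrix, so vocabulary size is one of the cheapest knobs for shrinking a model when data is scarce.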

- 11. Evaluation
  - Input: What is a poutine ?
  - Reference: Qu'est-ce qu'une poutine ?
  - Outputs:
    - 1. Est-ce qu'une poutine ?
    - 2. Que-ce une poutine ?
    - 3. Qu'une poutine ?
    - 4. Qu'est-ce qu'un poutin ?
    - 5. C'est qu'une poutine .
  - How should these outputs be ranked / scored?

- 12. Evaluation
  - Input: What is a poutine ?
  - Reference: Qu'est-ce qu'une poutine ?
  - Outputs:
    - 1. Est-ce qu'une poutine ? (BLEU 59.5, ChrF 82.8)
    - 2. Que-ce une poutine ? (BLEU 32.0, ChrF 51.4)
    - 3. Qu'une poutine ? (BLEU 39.4, ChrF 58.3)
    - 4. Qu'est-ce qu'un poutin ? (BLEU 19.0, ChrF 74.4)
    - 5. C'est qu'une poutine . (BLEU 32.0, ChrF 60.8)
  - BLEU: geometric average of token n-gram precisions, brevity penalty
  - ChrF: character n-gram F-score
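
To make the two definitions concrete, here is a simplified pure-Python sketch of both metrics. It is not the scorer used for the numbers on the slide and will not reproduce them: the add-one smoothing, the choice of n-gram orders (up to 4 for tokens, up to 6 for characters), the removal of whitespace before counting characters, and the recall weight `beta=2` are all assumptions made here. For reportable scores, use an established tool such as SacreBLEU.

```python
import math
from collections import Counter


def ngrams(seq, n):
    """Count all n-grams of a sequence (list of tokens or string of characters)."""
    return Counter(tuple(seq[i:i + n]) for i in range(len(seq) - n + 1))


def simple_bleu(hyp, ref, max_n=4):
    """Geometric average of smoothed token n-gram precisions times brevity penalty."""
    hyp_tok, ref_tok = hyp.split(), ref.split()
    if not hyp_tok:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        h, r = ngrams(hyp_tok, n), ngrams(ref_tok, n)
        total = sum(h.values())
        if total == 0:  # hypothesis is shorter than n tokens
            continue
        overlap = sum((h & r).values())  # clipped matches
        log_precisions.append(math.log((overlap + 1) / (total + 1)))  # add-one smoothing
    brevity = 1.0 if len(hyp_tok) >= len(ref_tok) else math.exp(1 - len(ref_tok) / len(hyp_tok))
    return 100 * brevity * math.exp(sum(log_precisions) / len(log_precisions))


def simple_chrf(hyp, ref, max_n=6, beta=2.0):
    """F-score over character n-grams, with precision and recall averaged over n."""
    hyp_chars, ref_chars = hyp.replace(" ", ""), ref.replace(" ", "")
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        h, r = ngrams(hyp_chars, n), ngrams(ref_chars, n)
        if not h or not r:
            continue
        overlap = sum((h & r).values())
        precisions.append(overlap / sum(h.values()))
        recalls.append(overlap / sum(r.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return 100 * (1 + beta ** 2) * p * r / (beta ** 2 * p + r)


reference = "Qu'est-ce qu'une poutine ?"
outputs = [
    "Est-ce qu'une poutine ?",
    "Que-ce une poutine ?",
    "Qu'une poutine ?",
    "Qu'est-ce qu'un poutin ?",
    "C'est qu'une poutine .",
]
for out in outputs:
    print(f"{out:28s} BLEU={simple_bleu(out, reference):5.1f}  ChrF={simple_chrf(out, reference):5.1f}")
```

Output 4 illustrates the difference between the two views: at the token level only two of its four tokens match the reference, while at the character level it is nearly identical.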

- 13. Joey NMT. Joint work with Jasmijn Bastings, Mayumi Ohta, and Joey NMT contributors.

- 14. Problem
  - \+ A lot of code for NMT is online.
  - \+ Free compute through Colab.
  - \+ Data is freely available.
  - Is it clean? How long would I have to study it? Are all features documented? How can I run it on Colab? How do I need to prepare data to use it?
  - Does that mean it's accessible?

- 15. Solution. Joey NMT: clean, minimalist, documented.
  - Much smaller than other toolkits
  - Covers core features
  - User study on usability
  - The core API changes very little.
  - Examples, pre-trained models, tutorials, FAQ
  - Based on PyTorch
  - Does not do everything, does not grow much.

- 16. Features
  - You can:
    - train an RNN/Transformer model
    - on CPU, one or multiple GPUs
    - monitor the training process
    - configure hyperparameters
    - store it, load it, test it
  - And more:
    - follow training recipes
    - modify the code easily
    - get inspiration from other extensions
    - share/load pre-trained models

- 17. It's cute, but can it compete?
  - Quality? Comparable to other toolkits.
  - Adoption? Not as popular.
  - Innovation? More and more research.

- 18. It's cute, but can it compete?
  - Quality? Comparable to other toolkits.
  - Adoption? Not as popular.
  - Innovation? More and more research.
  - It might not be the best choice for
    - exact replication of another paper -> use their code instead
    - non-seq2seq applications
    - performance-critical applications (not optimized for it)
    - loading BERT (not implemented)

- 19. Demo

- 20. Cool stuff feat. Joey NMT
  - Grassroots research communities
    - Masakhane: NLP for African languages
    - Turkic Interlingua: NLP for Turkic languages
  - Extensions
    - Reinforcement learning
    - Sign language translation
    - Speech translation
    - Image captioning
    - Slack bot
  - More on this list.

- 21. Material
  - Neural networks in NLP
    - Y. Goldberg: A Primer on Neural Network Models for Natural Language Processing
    - G. Neubig: CMU CS 11-747: Neural Networks for NLP
  - Neural machine translation
    - P. Koehn: Neural Machine Translation (Draft Chapter of the Statistical MT book)
    - G. Neubig: Tutorial on Neural Machine Translation
    - A. Rush: The Annotated Transformer
    - J. Bastings: The Annotated Encoder-Decoder
    - M. Müller: Seven Recommendations for MT Evaluation
  - Joey NMT
    - Joey NMT paper
    - Joey NMT tutorial
    - Masakhane notebooks and YouTube tutorial
    - Turkic Interlingua YouTube tutorial
