Linking entities via semantic indexing

- 1. #EMEARC17

- 2. #EMEARC17 Linking entities via semantic indexing Shenghui Wang, Rob Koopman

- 3. #EMEARC17 Linking Open Data cloud diagram 2017, by Andrejs Abele, John P. McCrae, Paul Buitelaar, Anja Jentzsch and Richard Cyganiak. http://lod-cloud.net/

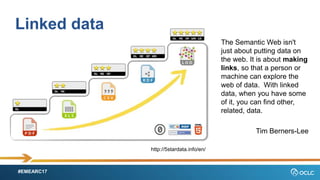

- 4. #EMEARC17 Linked data: "The Semantic Web isn't just about putting data on the web. It is about making links, so that a person or machine can explore the web of data. With linked data, when you have some of it, you can find other, related, data." (Tim Berners-Lee) http://5stardata.info/en/

- 5. #EMEARC17

- 6. #EMEARC17

- 7. #EMEARC17

- 8. #EMEARC17

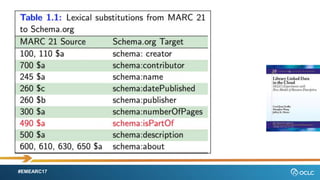

- 9. #EMEARC17 Information locked in free-text 245 [3$c] Translated from the French by Guy Endore, illustrated with lithographs by Yngve Derg

- 10. #EMEARC17

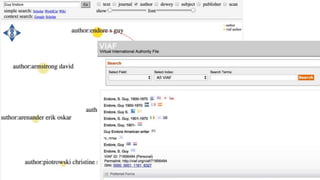

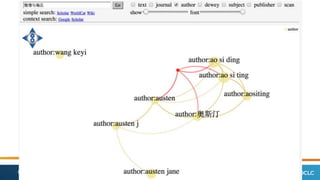

- 11. #EMEARC17 245 [3$c] ( Ying) jian. Ao si ding zhu ; ( ying ) xiu. Tang mu sen tu ; zhou dan yi. 700 [1$a] Ao, Siding. 700 [1$a] Tang, Musen. 700 [1$a] Zhou, Dan.

- 12. #EMEARC17

- 13. #EMEARC17 More links could be recovered
  - If we have enough (good) data
  - If we have effective and scalable algorithms
  - If we have patience

- 14. #EMEARC17 Linking entities via semantic indexing

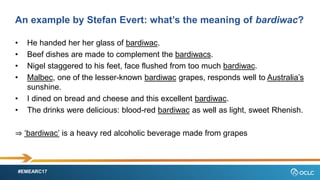

- 15. #EMEARC17 An example by Stefan Evert: what's the meaning of bardiwac?
  - He handed her her glass of bardiwac.
  - Beef dishes are made to complement the bardiwacs.
  - Nigel staggered to his feet, face flushed from too much bardiwac.
  - Malbec, one of the lesser-known bardiwac grapes, responds well to Australia's sunshine.
  - I dined on bread and cheese and this excellent bardiwac.
  - The drinks were delicious: blood-red bardiwac as well as light, sweet Rhenish.
  - 'bardiwac' is a heavy red alcoholic beverage made from grapes

- 16. #EMEARC17 Linking entities via semantic indexing
  - Statistical Semantics [furnas1983, weaver1955], based on the assumption that "a word is characterized by the company it keeps" [firth1957]
  - Distributional Hypothesis [harris1954, sahlgren2008]: words that occur in similar contexts tend to have similar meanings

- 17. #EMEARC17 Let's embed entities in a vector space
  - Discrete encoding does not help to automatically process the underlying semantics
  - Entities (words) are represented in a continuous vector space where semantically similar words are mapped to nearby points ('are embedded near each other')
  - A desirable property: computable similarity

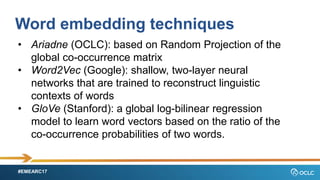

- 18. #EMEARC17 Word embedding techniques. Two main categories of approaches:
  - Global co-occurrence count-based methods, such as Latent Semantic Analysis
  - Local context predictive methods, such as neural probabilistic language models

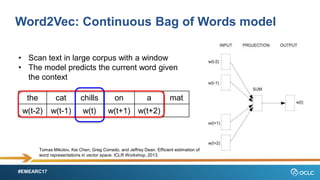

- 19. #EMEARC17 Word2Vec: Continuous Bag of Words model
  - Scan text in a large corpus with a window
  - The model predicts the current word given the context
  - Example text: "the cat chills on a mat"; window positions: w(t-2), w(t-1), w(t), w(t+1), w(t+2)
  - Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. Efficient estimation of word representations in vector space. ICLR Workshop, 2013.

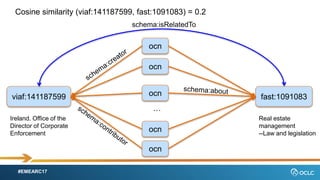
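The window scan on the CBOW slide can be sketched in a few lines. This is only a minimal illustration of how (context, target) training examples might be built; it does not train the neural network itself. The function name `cbow_pairs` and the window size of 2 are assumptions made for this example, not details from the talk.

```python
from typing import List, Tuple


def cbow_pairs(tokens: List[str], window: int = 2) -> List[Tuple[List[str], str]]:
    """Build (context, target) pairs using a symmetric window around each token.

    Hypothetical helper: it only prepares the examples a CBOW model would
    be trained on (predict the target word from its context words).
    """
    pairs = []
    for t, target in enumerate(tokens):
        left = tokens[max(0, t - window):t]      # up to `window` words before w(t)
        right = tokens[t + 1:t + 1 + window]     # up to `window` words after w(t)
        pairs.append((left + right, target))
    return pairs


sentence = "the cat chills on a mat".split()
pairs = cbow_pairs(sentence, window=2)

# Taking "chills" as the current word w(t), the context is its four neighbours.
print(pairs[2])
```

At the edges of the text the context is simply shorter, which is one common way to handle boundaries; padding tokens would be another.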

- 20. #EMEARC17 When an entity becomes a vector
  - Similarity or relatedness can be computed automatically
    - Cosine similarity
  - Such similarity/relatedness can be used to link an entity to its most related entities via schema:isRelatedTo
  - Such links can be complementary to existing triples
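To make "computable similarity" concrete, here is a small sketch of cosine similarity and a nearest-neighbour lookup over entity vectors. The identifiers `ex:A`, `ex:B`, `ex:C` and their three-dimensional vectors are invented toy data, and `most_related` is a hypothetical helper; real embeddings would have far more dimensions and entities.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def most_related(entity: str, vectors: Dict[str, List[float]], k: int = 3) -> List[Tuple[str, float]]:
    """Rank all other entities by cosine similarity to `entity`, highest first."""
    scores = [
        (other, cosine(vectors[entity], vec))
        for other, vec in vectors.items()
        if other != entity
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]


# Toy vectors, invented for illustration only.
vectors = {
    "ex:A": [1.0, 0.0, 0.0],
    "ex:B": [0.9, 0.1, 0.0],
    "ex:C": [0.0, 1.0, 0.0],
}

print(most_related("ex:A", vectors, k=2))
```

A brute-force scan like this is fine for a handful of entities; at the scale of millions of entities an approximate nearest-neighbour index would likely be needed.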

- 21. #EMEARC17 viaf:141187599 (Ireland. Office of the Director of Corporate Enforcement) schema:isRelatedTo fast:1091083 (Real estate management--Law and legislation). Cosine similarity (viaf:141187599, fast:1091083) = 0.2. [Diagram: the two entities shown together with five ocn nodes.]

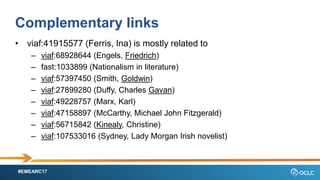
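One way such a similarity score could be turned into a link is to emit an N-Triples statement whenever the score passes a cut-off. The sketch below assumes the usual namespace expansions for the `viaf:` and `fast:` prefixes and uses a cut-off of 0.1 purely for illustration; neither the threshold nor the helper names (`expand`, `related_triples`) come from the talk.

```python
from typing import Iterable, List, Tuple

# Assumed namespace expansions for the prefixes used on the slide.
PREFIXES = {
    "viaf": "http://viaf.org/viaf/",
    "fast": "http://id.worldcat.org/fast/",
}
IS_RELATED_TO = "http://schema.org/isRelatedTo"


def expand(curie: str) -> str:
    """Expand a prefixed identifier such as 'viaf:141187599' into a full URI."""
    prefix, local = curie.split(":", 1)
    return PREFIXES[prefix] + local


def related_triples(scored_pairs: Iterable[Tuple[str, str, float]], threshold: float) -> List[str]:
    """Return one N-Triples line per pair whose score reaches the threshold."""
    lines = []
    for subject, obj, score in scored_pairs:
        if score >= threshold:
            lines.append(f"<{expand(subject)}> <{IS_RELATED_TO}> <{expand(obj)}> .")
    return lines


# The pair and score shown on the slide; the 0.1 threshold is hypothetical.
scored = [("viaf:141187599", "fast:1091083", 0.2)]
for line in related_triples(scored, threshold=0.1):
    print(line)
```

Keeping the score alongside the triple (for example as a reified statement or in a named graph) would be a natural extension, since a bare `schema:isRelatedTo` link loses the strength of the relation.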

- 22. #EMEARC17 Complementary links
  - viaf:41915577 (Ferris, Ina) is most related to
    - viaf:68928644 (Engels, Friedrich)
    - fast:1033899 (Nationalism in literature)
    - viaf:57397450 (Smith, Goldwin)
    - viaf:27899280 (Duffy, Charles Gavan)
    - viaf:49228757 (Marx, Karl)
    - viaf:47158897 (McCarthy, Michael John Fitzgerald)
    - viaf:56715842 (Kinealy, Christine)
    - viaf:107533016 (Sydney, Lady Morgan Irish novelist)

- 23. #EMEARC17

- 24. #EMEARC17 Word embedding techniques
  - Ariadne (OCLC): based on Random Projection of the global co-occurrence matrix
  - Word2Vec (Google): shallow, two-layer neural networks that are trained to reconstruct linguistic contexts of words
  - GloVe (Stanford): a global log-bilinear regression model that learns word vectors based on the ratio of the co-occurrence probabilities of two words

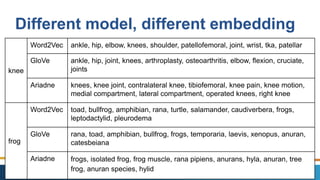
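The idea of randomly projecting a co-occurrence matrix can be illustrated with a toy sketch. This is not Ariadne's implementation: it assumes document-level co-occurrence, random ±1 index vectors, and a tiny dimension of 16, all chosen only to keep the example short. Each term's embedding is the count-weighted sum of the random vectors of the terms it co-occurs with, which is equivalent to multiplying the co-occurrence matrix by a random matrix.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List


def index_vector(term: str, dim: int, seed: int = 0) -> List[float]:
    """Deterministic random +/-1 vector for a term (one row of the projection matrix)."""
    rng = random.Random(f"{seed}:{term}")
    return [rng.choice((-1.0, 1.0)) for _ in range(dim)]


def random_projection_embeddings(docs: List[List[str]], dim: int = 16, seed: int = 0) -> Dict[str, List[float]]:
    """Project document-level co-occurrence counts into `dim` dimensions."""
    # Count how often each term appears in the same document as each other term.
    cooc: Dict[str, Counter] = defaultdict(Counter)
    for doc in docs:
        for term in doc:
            for other in doc:
                if other != term:
                    cooc[term][other] += 1

    # Embedding = sum over co-occurring terms of count * random index vector.
    embeddings: Dict[str, List[float]] = {}
    for term, counts in cooc.items():
        vec = [0.0] * dim
        for other, n in counts.items():
            r = index_vector(other, dim, seed)
            for i in range(dim):
                vec[i] += n * r[i]
        embeddings[term] = vec
    return embeddings


# Toy corpus, invented for illustration.
docs = [["knee", "joint", "pain"], ["ankle", "joint", "pain"]]
emb = random_projection_embeddings(docs, dim=16, seed=0)

# "knee" and "ankle" co-occur with exactly the same terms, so their vectors coincide.
print(emb["knee"] == emb["ankle"])
```

Because the projection never materialises the full co-occurrence matrix, this style of computation tends to scale to large vocabularies, which may be one reason to prefer it over neural training on very large catalogues.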

- 25. #EMEARC17 Different model, different embedding
  - knee
    - Word2Vec: ankle, hip, elbow, knees, shoulder, patellofemoral, joint, wrist, tka, patellar
    - GloVe: ankle, hip, joint, knees, arthroplasty, osteoarthritis, elbow, flexion, cruciate, joints
    - Ariadne: knees, knee joint, contralateral knee, tibiofemoral, knee pain, knee motion, medial compartment, lateral compartment, operated knees, right knee
  - frog
    - Word2Vec: toad, bullfrog, amphibian, rana, turtle, salamander, caudiverbera, frogs, leptodactylid, pleurodema
    - GloVe: rana, toad, amphibian, bullfrog, frogs, temporaria, laevis, xenopus, anuran, catesbeiana
    - Ariadne: frogs, isolated frog, frog muscle, rana pipiens, anurans, hyla, anuran, tree frog, anuran species, hylid

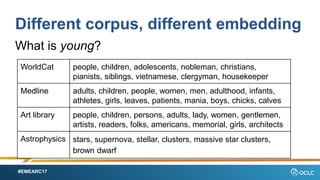

- 26. #EMEARC17 Different corpus, different embedding. What is young?
  - WorldCat: people, children, adolescents, nobleman, christians, pianists, siblings, vietnamese, clergyman, housekeeper
  - Medline: adults, children, people, women, men, adulthood, infants, athletes, girls, leaves, patients, mania, boys, chicks, calves
  - Art library: people, children, persons, adults, lady, women, gentlemen, artists, readers, folks, americans, memorial, girls, architects
  - Astrophysics: stars, supernova, stellar, clusters, massive star clusters, brown dwarf

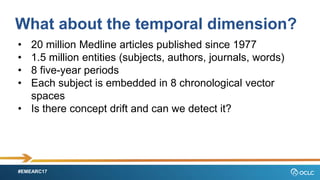

- 27. #EMEARC17 What about the temporal dimension?
  - 20 million Medline articles published since 1977
  - 1.5 million entities (subjects, authors, journals, words)
  - 8 five-year periods
  - Each subject is embedded in 8 chronological vector spaces
  - Is there concept drift and can we detect it?

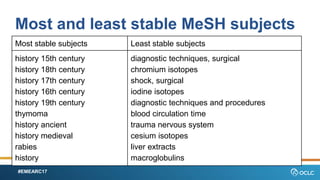
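The slides do not say how stability across the period-specific vector spaces was measured. One plausible approach, sketched here purely as an assumption, is to compare a subject's nearest-neighbour sets in consecutive periods: vectors from separately built spaces are not directly comparable, but neighbour lists are. The helper names and the toy neighbour sets below are hypothetical.

```python
from typing import List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of neighbours two sets have in common (1.0 if both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def stability(neighbours_by_period: List[Set[str]]) -> float:
    """Mean Jaccard overlap between neighbour sets of consecutive periods.

    Values near 1.0 suggest a stable subject; values near 0.0 suggest drift.
    """
    if len(neighbours_by_period) < 2:
        return 1.0
    overlaps = [
        jaccard(earlier, later)
        for earlier, later in zip(neighbours_by_period, neighbours_by_period[1:])
    ]
    return sum(overlaps) / len(overlaps)


# Toy neighbour sets for one subject over three periods, invented for illustration.
periods = [
    {"a", "b", "c"},
    {"b", "c", "d"},
    {"x", "y", "z"},
]
print(stability(periods))
```

A subject whose score stays low across all periods would be a candidate for the "least stable" list, though a low score can also simply reflect sparse data for that subject in a given period.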

- 28. #EMEARC17 Most and least stable MeSH subjects
  - Most stable subjects: history 15th century; history 18th century; history 17th century; history 16th century; history 19th century; thymoma; history ancient; history medieval; rabies; history
  - Least stable subjects: diagnostic techniques, surgical; chromium isotopes; shock, surgical; iodine isotopes; diagnostic techniques and procedures; blood circulation time; trauma nervous system; cesium isotopes; liver extracts; macroglobulins

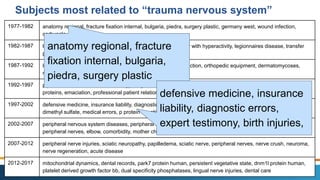

- 29. #EMEARC17 Subjects most related to "trauma nervous system"
  - 1977-1982: anatomy regional, fracture fixation internal, bulgaria, piedra, surgery plastic, germany west, wound infection, carbuncle, burns
  - 1982-1987: legionellosis, povidone, tropocollagen, attention deficit disorder with hyperactivity, legionnaires disease, transfer psychology
  - 1987-1992: leg injuries, neurosurgical procedures, arm injuries, wound infection, orthopedic equipment, dermatomycoses, multiple trauma, candidiasis cutaneous, fractures closed
  - 1992-1997: piperacillin, tazobactam, microbiology, diagnostic errors, sorption detoxification, arthroplasty, hsp40 heat shock proteins, emaciation, professional patient relations
  - 1997-2002: defensive medicine, insurance liability, diagnostic errors, expert testimony, birth injuries, maleic anhydrides, dimethyl sulfate, medical errors, p protein hepatitis b virus
  - 2002-2007: peripheral nervous system diseases, peripheral nerve injuries, neurologic examination, male, recovery of function, peripheral nerves, elbow, comorbidity, mother child relations
  - 2007-2012: peripheral nerve injuries, sciatic neuropathy, papilledema, sciatic nerve, peripheral nerves, nerve crush, neuroma, nerve regeneration, acute disease
  - 2012-2017: mitochondrial dynamics, dental records, park7 protein human, persistent vegetative state, dnm1l protein human, platelet derived growth factor bb, dual specificity phosphatases, lingual nerve injuries, dental care

- 30. #EMEARC17 Summary
  - Semantic indexing helps to discover links between entities
  - Links might have to be time-stamped
  - Free text in metadata is a promising but challenging source
  - No perfect algorithms yet, but lots of ongoing research
