Learning Latent Variable Gaussian Graphical Models

- 1. Learning Latent Variable Gaussian Graphical Models Meng, Z., Eriksson, B., Hero III, A. O. ICML2014 2014/07/01 @harapon

- 2. (sparse) GGMのモチベーション ? Large p, small n problem – 正則化のため低次元構造を課すのが一般的 アプローチ(Negahban et al. 2012) ? ガウス分布データにおける中心的問題は inverse covariance matrix(精度行列) の推定 観測 サンプル数 n データの次元数p

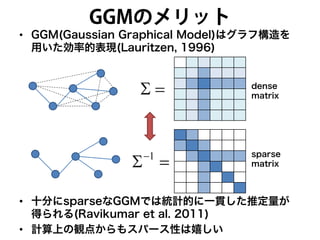

- 3. GGMのメリット ? GGM(Gaussian Graphical Model)はグラフ構造を 用いた効率的表現(Lauritzen, 1996) ? 十分にsparseなGGMでは統計的に一貫した推定量が 得られる(Ravikumar et al. 2011) ? 計算上の観点からもスパース性は嬉しい =Σ =Σ?1 dense matrix sparse matrix

- 4. GGMの課題 ? しかし,真のデータ分布はsparse GGMで はうまく近似できていないかもしれない! ? そこで,標準的なsparse GGMを拡張した 高次元GGMとして,global effectとlocal interactionの双方を扱うモデルを考える ? だがglobal effectを導入すると周辺化した ときにその精度行列がスパースではなくなる. ? そうなると,悲しいことに,現在のsparse GGMの計算法が適用できなくなる

- 5. LVGGMの提案 ? この問題を解決するために LVGGM(Latent Variable GGM)を提案 – global effectは潜在変数で – local interactionはsparse GGMで表現 ? LVGGMの周辺化精度行列はsparseと low-rank structureが保たれるので, regularized ML approachが使える – これはsparse GGMの良い学習法 (Chandrasekaran et al. 2012)

- 6. LVGGMの嬉しさの結論を先に ? 対数尤度関数がほぼ強い凸性をもつので 非漸近パラメータのエラー境界が得られ る. ? これはunstructured dense GGMの エラー境界と比べると十分に速く収束

- 7. 関連研究 ? sparse inverse covariance matrixをもつ GGMのL1-regularized maximum likelihood estimatorの学習問題はgraphical lasso (Glasso)の問題と知られる – Friedman et al. (2008) – Ravilumar et al. (2011) ? あるincoherence conditionの下でのmodel selection consistencyについて研究(sparsistency) – Rothman et al. (2008) ? 別のアプローチとしてmulti-resolution extension of GGM – Choi et al. (2010) – Choi et al. (2011):グラフィカルモデルの潜在的 な木構造を考慮 – どちらのモデルも計算効率的な学習と推論 – しかし潜在構造は木構造制約

- 8. LVGGMの定義 ? 潜在変数の導入 – sparse GGMはデータに対して強い仮定で あったため,real world observationを うまく説明するためにスパース性を破壊 ? 変数定義 – p次元観測ベクトル – r次元潜在変数ベクトル Ox Lx ),( LO xxx =

- 9. LVGGMの共分散行列?精度行列 ? 共分散行列 ? 精度行列 ? 観測変数の共分散行列 – 潜在変数で周辺化するとガウス分布のまま ? 観測変数の精度行列 ? このような構造のモデルをLVGGMと呼ぶ Ω 1? Ω=J OO,Ω=Σ OLLLLOOO JJJJ , 1 ,,, 1 ?? ?=Σ=Θ LS += (※シューアの補行列) スパース行列 低ランク行列

- 10. LVGGMの共分散行列?精度行列 ? 潜在変数の数は観測変数の数と比べて 十分小さいと仮定(r << p) ? 観測変数と潜在変数の間にはスパースの 仮定は不要 ? よってp p行列のLは低ランクかつ密行列 ? このように周辺化精度行列 を – sparse matrix S – low-rank dense matrix L – に分解することが推定のためのkey property Θ

- 11. Regularized ML estimation of LVGGM ? nサンプル に対してデータ行列 ? 負の対数尤度関数は – ここで はサンプルの共分散行列 ? 正則化最尤推定は次の目的関数を最小化 – 正則化関数 はsparse + low-rank構造 を維持するように設計される nxxx L,, 21 X )det(log,?);( Θ?ΘΣ=Θ XL XX n T1? ≡Σ )();( Θ+Θ RXL λ )(ΘR

- 12. Regularized ML estimation of LVGGM ? Chandrasekaren et al. (2012)と同様 次のような正則化最尤推定を考える ? これは凸最適化問題であり,Ma et al. (2013)の方法を使えば解ける! – 詳細はMa et al.(2013)参照 )(Tr);(min 1, LSXLSL LS μλ +++ ..ts 0fL? 0, 0 > + μλ fLS

- 13. 推定パラメータのerror bound ? 推定パラメータのerror boundを理論的 に証明 ? 評価実験でも確認

- 14. Summary ? GGMの良さであるスパース性に加えて, 潜在変数でglobal effectも考慮できる モデルの提案 ? うまい形で定式化することで疎行列と低 ランク行列に分解 ? おかげでパラメータ推定が既往研究の方 法を使える上に,精度保証された推定パ ラメータが得られる ? GGMの問題点が解決!

- 15. References 1. Lauritzen, S.L. Graphical models, volume 17. Oxford University Press, USA, 1996. 2. Ravikumar, Pradeep, Wainwright, Martin J, Raskutti, Garvesh, and Yu, Bin. High- dimensional covariance estimation by minimizing l1-penalized log-determinant di- vergence. Electronic Journal of Statistics, 5:935-980, 2011. 3. Chandrasekaran, Venkat, Parrilo, Pablo A, and Willsky, Alan S. Latent variable graphical model selection via convex optimization. Annals of Statistics, 40(4):1935-1967, 2012. 4. Friedman, Jerome, Hastie, Trevor, and Tibshirani, Robert. Sparse inverse covariance estimation with the graphical lasso. Biostatistics, 9(3):432-441, 2008. 5. Rothman, Adam J, Bickel, Peter J, Levina, Elizaveta, and Zhu, Ji. Sparse permutation invariant covariance estimation. Electronic Journal of Statistics, 2:494-515, 2008. 6. Choi, Myung Jin, Chandrasekaran, Venkat, and Willsky, Alan S. Gaussian multiresolution models: Exploiting sparse Markov and covariance structure. Signal Processing, IEEE Transactions on, 58(3):1012-1024, 2010. 7. Choi, Myung Jin, Tan, Vincent YF, Anandkumar, Animashree, and Willsky, Alan S. Learning latent tree graphical models. Journal of Machine Learning Research, 12:1729-1770, 2011. 8. Ma, Shiqian, Xue, Lingzhou, and Zou, Hui. Alternating direction methods for latent variable Gaussian graphical model selection. Neural computation, 25(8):2172-2198, 2013.

![[DL輪読会]Control as Inferenceと発展](https://cdn.slidesharecdn.com/ss_thumbnails/20191004-191204055019-thumbnail.jpg?width=560&fit=bounds)