BEAR: Mining Behaviour Models from User-Intensive Web Applications

- 1. Mining Behaviour Models from User-Intensive Web Applications Carlo Ghezzi carlo.ghezzi@polimi.it Politecnico di Milano, Italy (IT) Mauro Pezz├© mauro.pezze@usi.ch Universit├Ā della Svizzera Italiana, Lugano (CH) Michele Sama michele@swiftkey.net Touchtype Ltd, UK Giordano Tamburrelli giordano.tamburrelli@usi.ch Universit├Ā della Svizzera Italiana, Lugano (CH)

- 2. Scalability Privacy Security Users Modern Web Applications ŌĆó Millions of interactions per day ŌĆó Manage sensible data ŌĆó Secure economic transactions ŌĆó Capture/measure user behaviours

- 3. ŌĆó UserŌĆÖs behaviours cannot be predicted at design time. ŌĆó Only released applications allow us to collect statistics ŌĆó Multiple and heterogeneous navigational behaviours that depend on several factors ŌĆó Behaviours may unpredictably change over time User behaviours

- 4. ŌĆó Monitoring+analysis/mining ŌĆó Little support from a general software engineering perspective Related work Google AnalyticsLink PredictionWeb Caching

- 5. ŌĆó General abstraction to support software engineers ŌĆó Automated and non-ambigous analysis tool ŌĆó Support for different user classes ŌĆó Other key features: ŌĆó extensibility (domain specific analysis) ŌĆó incrementality ŌĆó applicable to legacy systems What is missing

- 6. ŌĆó Exploit formal models to capture and quantitatively analyse user behaviors ŌĆó Focus on RESTful architectures ŌĆó Based on log file mining applicable to legacy systems Formal Methods Web Development + Our Idea

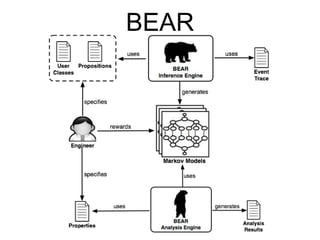

- 7. ŌĆó User classes ŌĆó Give semantics to events in the log file ŌĆó Infer user-behaviour models (DTMC) ŌĆó Queries the models Ingredients

- 8. BEAR

- 9. A real-world case study ŌĆó Small example, but general enough: ŌĆó URL with parameters ŌĆó URL with parametric structure URL Description /home/ Homepage of findyourhouse.com /anncs/sales/ The first page that shows the sales announcements. /anncs/sales/?page=< n> Nth page of sales announcements /anncs/sales/< id> / Detailed view of the sales announcement /anncs/renting/ The first page that shows the renting announcements. /anncs/renting/?page=< n> Nth page of renting announcements /anncs/renting/< id> / Detailed view of the renting announcement /search/ Page containing the results of a search /admin/.../ WebsiteŌĆÖs control panel /admin/login/ Login page that allows to access the control panel. /contacts/ URL with the form to contact a sales agent. /contacts/submit/ Contact form submitted has been submitted. Page that describes the website terms of use.

- 10. ŌĆó A set of atomic propositions (AP) give semantics to the entries in the log ŌĆó Declarative approach: @BearFilter URLs Ō×ö Atomic Propositions @BearFilter(regex="^/anncs/sales/(w+)/$") public static Proposition void filterSales(LogLine line){ return new Proposition("sales_anncs"); } @BearFilter(regex="^/admin/login/$") public static Proposition void filterLogin(LogLine line){ if(logLine.getHTTPStatusCode == "302") return new Proposition("login_success"); else return new Proposition("login_fail"); }

- 11. URLs Ō×ö Atomic Propositions URL Atomic Propositions /home/ homepage /anncs/sales/ sales_page, page_1 /anncs/sales/?page=< n> sales_page, page_n /anncs/sales/< id> / sales_anncs /anncs/renting/ renting_page, page_1 /anncs/renting/?page=< n> renting_page, page_n renting_anncs

- 12. ŌĆó Code fragments called classifiers to specify user classes ŌĆó Declarative approach: @BearClassifier Identify User Classes @BearClassifier(name="userAgent") public static String UserAgentClassifier(LogLine logline) { return logline.getAgent(); } {(userAgent = ŌĆ£Mozilla/5.0...ŌĆØ), (location = ŌĆ£BostonŌĆØ)}

- 13. ŌĆó BEAR infers a set of DTMCs ŌĆó Sequential and incremental process ŌĆó An independent DTMC for each user class Infer the models

- 14. IP TIMESTAMP URL 1.1.1.1 - [20/Dec/2013:15:35:02] - /home/ 2.2.2.2 - [20/Dec/2013:15:35:07] - /admin/login/ 1.1.1.1 - [20/Dec/2013:15:35:12] - /anncs/sales/1756/ 2.2.2.2 - [20/Dec/2013:15:35:19] - /admin/edit/ Infer the models

- 16. ŌĆó Rewards: domain specific metrics of interests ŌĆó Number of announcements displayed ŌĆó DB Queries Annotating the models extensibility

- 17. ŌĆó Probabilistic Computation Tree Logic (PCTL) augmented with rewards ŌĆó BEAR Properties = scope + PCTL formula Specifying the properties {userAgent = ŌĆ£(.ŌłŚ)Mozilla(.ŌłŚ)ŌĆØ}P=?[F contact_requested] {userAgent = ŌĆ£(.ŌłŚ)(Android|iOS)(.ŌłŚ)ŌĆØ}R=?[F end] generality

- 18. Querying the models automation ŌĆó Scope identifies the set of relevant DTMCs among the inferred models ŌĆó BEAR analysis engine compose selected DTMCs into single one ŌĆó PCTL verification performed with PRISM on the composed model

- 19. Model Composition ŌĆó Union of the sets of states of the input DTMCs ŌĆó Law of total probability to compute transitions

- 20. ŌĆó Detecting navigational anomalies: ŌĆó A difference between the actual and the expected user navigation actions. ŌĆó Comparing the BEAR models with the site map: {}P =?[(X si)]{sj} ŌĆó Measuring behaviours and attitudes ŌĆó {}P =?[(F sales_anncs) & (!(F renting_anncs))] ŌĆó {(?!(.ŌłŚ)(Android | iOS))(.ŌłŚ)}R=?[F end {sales_anncs}] BEAR at work

- 21. BEAR: performance ŌĆó Variable number of states ŌĆó Variable length of log file

- 22. BEAR: performance ŌĆó Variable number of DTMCs ŌĆó Variable number of states

- 23. ŌĆó More expressive formalisms ŌĆó Self-adaptive applications Summary ŌĆó Formal analysis of user behaviours in web apps ŌĆó Validation on a real case study ŌĆó On-going validation on a mobile app

Editor's Notes

- #3: Web applications characterised by: large, evolving, and heterogenous populations of users heavy dependence on the interactions with the users User requirements: evolving needs, attitudes, navigation profiles, preferences

- #4: UserŌĆÖs behaviours cannot be fully anticipated at design time. Only released applications allow us to collect statistics Users may have multiple and heterogeneous navigational behaviours that depend on several factors Behaviours may unpredictably change over time thus invalidating the analysis efforts Several classes of users with distinct user behaviours coexist at the same time

- #5: Monitoring the usage of the system and subsequently mining possible interaction patterns Little support from a general software engineering perspective Limited flexibility (e.g., Google Analytics) Ad-hoc techniques: link-prediction (Sarukkai et al., 2000) data caching (Yang et al., 2003)

- #6: General abstraction to support software engineers in maintaining and adapting user-intensive Web applications Automated and non-ambigous analysis tool Support for different user classes Other key features: extensibility (domain specific analysis) incrementality applicable to legacy systems

- #8: Partition user population into significant classes. Give semantics to the interaction events (URLs) occurring in the log file Use the events to infer semantically rich user-behaviour models (DTMC) Support queries on behaviour models

- #9: Declaratively give semantics to the URLs occurring in the log file Declaratively characterise the population of users by identifying a set of relevant features to cluster them into distinct classes. Incrementally infer a set of discrete time Markov chains (DTMCs) Annotating the states of the models to represent domain specific quantities Specifying the properties of the interaction patterns (PCTL). Analysing the models

- #10: RESTful web application: findyourhouse.com Real estate announcements (sales, rentals) we had full access to both the application and the log file we conducted our experiments on the data collected during the normal operation of the application.

- #11: BEAR associates semantics et of atomic propositions (AP) that indicate what can be assumed as valid when a certain entry in the log file is found. Declarative approach: annotation @BearFilter

- #12: The first filter associates the proposition homepage to the homepage URL. The second filter associates the proposition sales anncs to the URLs that matches the regular expression in its an- notation, and indicates that the URLs are related to sales announcements. The third filter associates the propositions login success and login fail to URLs that correspond to lo- gin attempts, depending on the HTTP status code of the request. The fourth filter associates the propositions con- trol panel to the pages of the control panel.

- #14: BEAR infers a set of DTMCs that represent the usersŌĆÖ behaviours. The inference process works sequentially and incrementally on the log file as a data stream. The inference engine generates an independent DTMC for each user class defined by the classifiers.

- #17: Rewards: domain specific metrics of interests. An example: To give special recognition to the states that display a large number of announcements. This can be easily achieved by annotating the propositions with rewards that depend on the number of announcements they are related with. They assign rewards to propositions in the setup phase, and do not need to know the structure and the number of the inferred models, which are inferred only later while monitoring the application behaviour.

- #18: PCTL is probabilistic branching-time temporal logic based on the classic CTL logic that predicates on a state of a Markov process. The PCTL properties depend only on the propositions, and designers can specify them without knowing the structure of the inferred models in terms of states or transitions.

- #20: where Pi(uk) corresponds to the probability for a user (associated to the selected user classes) that exited state si to belong to the specific user class uk.

- #21: The BEAR analysis engine indicated that 48% of users are interested in sales announcements only, and 20% users are interested in renting announcements only. ge of users look both for sales and renting announce- ments. With these data, the designers decided to change the homepage that initially displayed a random set of an- nouncements, and now displays renting and sales announce- ment proportionally to the measured usersŌĆÖ behaviors.

- #22: We generated synthetic log files composed of 10, 000 lines generated ad-hoc to produce DTMCs with an increasing number of states. Figure 4(a) shows that the BEAR inference engine can produce a DTMC of 150 states analyzing a log files of 10, 000 lines in few sec- onds. Figure 4(b) illustrates instead the execution time of processing log files of increasing length. The figure reports the execution time for log files that produce a DTMC of 50 states. BEAR processes a log file of 100, 000 lines in around 60 seconds. inference engine.

![IP TIMESTAMP URL

1.1.1.1 - [20/Dec/2013:15:35:02] - /home/

2.2.2.2 - [20/Dec/2013:15:35:07] - /admin/login/

1.1.1.1 - [20/Dec/2013:15:35:12] - /anncs/sales/1756/

2.2.2.2 - [20/Dec/2013:15:35:19] - /admin/edit/

Infer the models](https://image.slidesharecdn.com/icse2014-150424020404-conversion-gate02/85/BEAR-Mining-Behaviour-Models-from-User-Intensive-Web-Applications-14-320.jpg)

![ŌĆó Probabilistic Computation Tree Logic (PCTL)

augmented with rewards

ŌĆó BEAR Properties = scope + PCTL formula

Specifying the properties

{userAgent = ŌĆ£(.ŌłŚ)Mozilla(.ŌłŚ)ŌĆØ}P=?[F contact_requested]

{userAgent = ŌĆ£(.ŌłŚ)(Android|iOS)(.ŌłŚ)ŌĆØ}R=?[F end]

generality](https://image.slidesharecdn.com/icse2014-150424020404-conversion-gate02/85/BEAR-Mining-Behaviour-Models-from-User-Intensive-Web-Applications-17-320.jpg)

![ŌĆó Detecting navigational anomalies:

ŌĆó A difference between the actual and the expected user navigation

actions.

ŌĆó Comparing the BEAR models with the site map:

{}P =?[(X si)]{sj}

ŌĆó Measuring behaviours and attitudes

ŌĆó {}P =?[(F sales_anncs) & (!(F renting_anncs))]

ŌĆó {(?!(.ŌłŚ)(Android | iOS))(.ŌłŚ)}R=?[F end {sales_anncs}]

BEAR at work](https://image.slidesharecdn.com/icse2014-150424020404-conversion-gate02/85/BEAR-Mining-Behaviour-Models-from-User-Intensive-Web-Applications-20-320.jpg)