MLOps-From-Model-centric-to-Data-centric-AI.pdf

- 1. MLOps: From Model-centric to Data-centric AI Andrew Ng

- 2. AI system = Code (model/algorithm) + Data

- 3. Inspecting steel sheets for defects. Baseline system: 76.2% accuracy. Target: 90.0% accuracy. [Figure: examples of defects]

- 4. Audience poll: Should the team improve the code or the data? Poll results:

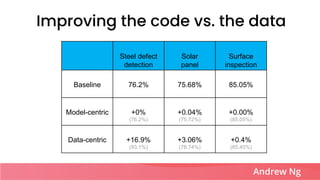

- 5. Improving the code vs. the data

  | | Steel defect detection | Solar panel | Surface inspection |
  |---|---|---|---|
  | Baseline | 76.2% | 75.68% | 85.05% |
  | Model-centric | +0% (76.2%) | +0.04% (75.72%) | +0.00% (85.05%) |
  | Data-centric | +16.9% (93.1%) | +3.06% (78.74%) | +0.4% (85.45%) |

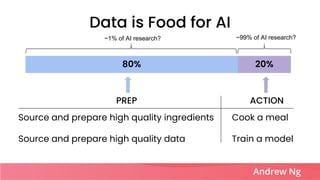
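The gains quoted on slide 5 can be re-derived from the reported accuracies. The following is a minimal sketch that only redoes the slide's arithmetic; the dictionary layout and the `gains` helper are illustrative names, not part of the talk.

```python
# Accuracy figures (%) copied from slide 5. The structure and names are illustrative.
results = {
    "Steel defect detection": {"baseline": 76.2, "model_centric": 76.2, "data_centric": 93.1},
    "Solar panel": {"baseline": 75.68, "model_centric": 75.72, "data_centric": 78.74},
    "Surface inspection": {"baseline": 85.05, "model_centric": 85.05, "data_centric": 85.45},
}


def gains(row):
    """Return (model-centric gain, data-centric gain) in percentage points."""
    return (
        round(row["model_centric"] - row["baseline"], 2),
        round(row["data_centric"] - row["baseline"], 2),
    )


for task, row in results.items():
    model_gain, data_gain = gains(row)
    print(f"{task}: model-centric +{model_gain:.2f}, data-centric +{data_gain:.2f}")
```

In all three tasks the data-centric gain is larger than the model-centric one, which is the point the slide makes.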

- 6. Data is Food for AI
  - Prep (80%): source and prepare high-quality ingredients; for AI, source and prepare high-quality data. ~1% of AI research?
  - Action (20%): cook a meal; for AI, train a model. ~99% of AI research?

- 7. Lifecycle of an ML Project
  - Scope project: define project
  - Collect data: define and collect data
  - Train model: training, error analysis & iterative improvement
  - Deploy in production: deploy, monitor and maintain system

- 8. Scoping: Speech Recognition (lifecycle stage: Scope project). Define project: decide to work on speech recognition for voice search.

- 9. Collect Data: Speech Recognition (lifecycle stage: Collect data). Define and collect data: is the data labeled consistently? Example transcriptions:
  - “Um, today’s weather”
  - “Um… today’s weather”
  - “Today’s weather”

- 10. Iguana Detection Example. Labeling instruction: Use bounding boxes to indicate the position of iguanas.

- 11. Making data quality systematic: MLOps
  - Ask two independent labelers to label a sample of images.
  - Measure consistency between labelers to discover where they disagree.
  - For classes where the labelers disagree, revise the labeling instructions until they become consistent.
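The consistency measurement described on slide 11 could be sketched as follows. This is one simple way to do it, assuming each labeler assigns a single class per image. The agreement metric, the 0.7 threshold, the function names and the sample labels are all hypothetical choices for illustration, not something the talk specifies.

```python
from collections import defaultdict


def per_class_agreement(labels_a, labels_b):
    """For each class used by either labeler, return the fraction of the
    items involving that class on which both labelers chose it."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both labelers must label the same items")
    agree = defaultdict(int)
    total = defaultdict(int)
    for a, b in zip(labels_a, labels_b):
        for cls in {a, b}:  # count the item once per class involved
            total[cls] += 1
        if a == b:
            agree[a] += 1
    return {cls: agree[cls] / total[cls] for cls in total}


def classes_to_review(labels_a, labels_b, threshold=0.7):
    """Classes whose agreement falls below the (assumed) threshold."""
    scores = per_class_agreement(labels_a, labels_b)
    return sorted(cls for cls, score in scores.items() if score < threshold)


# Hypothetical sample: two labelers, eight images, three defect classes.
labeler_1 = ["scratch", "scratch", "scratch", "foreign_particle",
             "foreign_particle", "dent", "dent", "dent"]
labeler_2 = ["scratch", "scratch", "scratch", "dent",
             "foreign_particle", "dent", "dent", "dent"]

print(per_class_agreement(labeler_1, labeler_2))
print(classes_to_review(labeler_1, labeler_2))
```

The classes returned by `classes_to_review` are the ones whose labeling instructions would be revised first. A chance-corrected statistic such as Cohen's kappa is a common alternative to raw agreement.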

- 12. Labeler consistency example: Labeler 1 vs. Labeler 2. Steel defect detection (39 classes). Class 23: Foreign particle defect.

- 14. Making it systematic: MLOps
  - Model-centric view: Collect what data you can, and develop a model good enough to deal with the noise in the data. Hold the data fixed and iteratively improve the code/model.
  - Data-centric view: The consistency of the data is paramount. Use tools to improve the data quality; this will allow multiple models to do well. Hold the code fixed and iteratively improve the data.

- 15. Audience poll: Think about the last supervised learning model you trained. How many training examples did you have? Please enter an integer. Poll results:

- 16. Kaggle Dataset Size

- 17. Small Data and Label Consistency. [Figure: three plots of speed (rpm) and voltage]
  - Small data, noisy labels
  - Big data, noisy labels
  - Small data, clean (consistent) labels

- 18. Theory: Clean vs. noisy data. You have 500 examples, and 12% of the examples are noisy (incorrectly or inconsistently labeled). The following are about equally effective:
  - Clean up the noise
  - Collect another 500 new examples (double the training set)

  With a data-centric view, there is significant room for improvement in problems with <10,000 examples!
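To make the comparison on slide 18 concrete, the sketch below counts the labeling work on each side using the slide's own numbers. It compares effort only and does not model accuracy. The function and variable names are illustrative.

```python
def noisy_example_count(n_examples, noise_rate):
    """Number of examples that are noisy and would need relabeling."""
    return round(n_examples * noise_rate)


n_examples = 500   # training set size from the slide
noise_rate = 0.12  # fraction of noisy examples from the slide

to_relabel = noisy_example_count(n_examples, noise_rate)
to_collect = n_examples  # doubling the training set means 500 new examples

print(f"Option A: relabel {to_relabel} existing examples")
print(f"Option B: collect and label {to_collect} new examples")
```

If the two options are about equally effective, as the slide states, cleaning touches far fewer examples than collecting.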

- 19. Example: Clean vs. noisy data. [Figure: accuracy (mAP), from 0.3 to 0.8, against number of training examples, from 250 to 1,500, for clean and noisy data] Note: Big data problems where there’s a long tail of rare events in the input (web search, self-driving cars, recommender systems) are also small data problems.

- 20. Train model: Speech Recognition (lifecycle stage: Train model). Training, error analysis & iterative improvement. Error analysis shows your algorithm does poorly on speech with car noise in the background. What do you do?
  - Model-centric view: How can I tune the model architecture to improve performance?
  - Data-centric view: How can I modify my data (new examples, data augmentation, labeling, etc.) to improve performance?

- 21. Train model: Speech Recognition. Making it systematic: iteratively improving the data:
  - Train a model
  - Error analysis to identify the types of data the algorithm does poorly on (e.g., speech with car noise)
  - Either get more of that data via data augmentation, data generation or data collection (change inputs x) or give a more consistent definition for labels if they were found to be ambiguous (change labels y)
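The error-analysis step on slide 21 can be sketched as a per-slice error rate. This assumes each evaluation example carries a tag describing its recording condition. The tag names, the `min_count` guard and the sample records are hypothetical.

```python
from collections import Counter


def error_rate_by_tag(records):
    """records: iterable of (tag, is_correct) pairs. Returns tag -> error rate."""
    totals, errors = Counter(), Counter()
    for tag, is_correct in records:
        totals[tag] += 1
        if not is_correct:
            errors[tag] += 1
    return {tag: errors[tag] / totals[tag] for tag in totals}


def worst_slice(records, min_count=2):
    """Tag with the highest error rate among tags seen at least min_count times."""
    records = list(records)
    counts = Counter(tag for tag, _ in records)
    rates = error_rate_by_tag(records)
    eligible = {tag: rate for tag, rate in rates.items() if counts[tag] >= min_count}
    return max(eligible, key=eligible.get) if eligible else None


# Hypothetical evaluation results for a speech recognizer.
eval_records = [
    ("clean", True), ("clean", True), ("clean", True), ("clean", True),
    ("car_noise", False), ("car_noise", False), ("car_noise", True),
    ("people_noise", True), ("people_noise", False),
]

print(error_rate_by_tag(eval_records))
print(worst_slice(eval_records))
```

The slice returned by `worst_slice` is the candidate for more data (change inputs x) or for a review of its labeling conventions (change labels y).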

- 22. Deploy: Speech Recognition (lifecycle stage: Deploy in production). Deploy, monitor and maintain system: monitor performance in deployment, and flow new data back for continuous refinement of the model.
  - Systematically check for concept drift/data drift (performance degradation)
  - Flow data back to retrain/update model regularly
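A minimal monitoring sketch for slide 22 follows, assuming two simple heuristics that the talk does not prescribe: a drop in recent accuracy relative to a baseline, and a shift in the mean of one input feature measured in reference standard deviations. The thresholds, function names and sample values are hypothetical.

```python
from statistics import mean, stdev


def accuracy(outcomes):
    """outcomes: list of booleans, True when the prediction was correct."""
    return sum(outcomes) / len(outcomes)


def performance_degraded(baseline_accuracy, recent_outcomes, max_drop=0.05):
    """Flag when recent accuracy falls more than max_drop below the baseline."""
    return baseline_accuracy - accuracy(recent_outcomes) > max_drop


def mean_shift(reference, recent):
    """Shift of the recent mean, in units of the reference standard deviation."""
    return abs(mean(recent) - mean(reference)) / stdev(reference)


# Hypothetical values of one input feature at training time and in production.
reference_values = [10, 12, 11, 13, 12, 11]
recent_values = [15, 16, 14, 17]

# Hypothetical correctness of the last ten production predictions.
recent_outcomes = [True] * 7 + [False] * 3

if mean_shift(reference_values, recent_values) > 3 or performance_degraded(0.90, recent_outcomes):
    print("Drift suspected: flow recent data back for labeling and retraining")
```

Either signal would trigger the second bullet on the slide: flowing data back to retrain or update the model.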

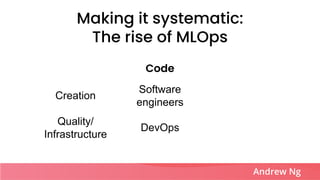

- 23. Making it systematic: The rise of MLOps. AI systems = Code + Data

  | | Code | Data |
  |---|---|---|
  | Creation | Software engineers | ML engineers |
  | Quality/Infrastructure | DevOps | MLOps |

- 25. Traditional software vs AI software
  - Traditional software: Scope project → Develop code → Deploy in production
  - AI software: Scope project → Collect data → Train model → Deploy in production

- 26. MLOps: Ensuring consistently high-quality data
  - Collect data: How do I define and collect my data?
  - Train model: How do I modify data to improve model performance?
  - Deploy in production: What data do I need to track concept/data drift?

- 27. Audience poll: Who do you think is best qualified to take on an MLOps role? Poll results:

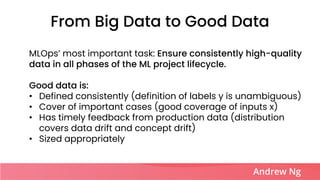

- 28. From Big Data to Good Data. MLOps’ most important task: Ensure consistently high-quality data in all phases of the ML project lifecycle. Good data:
  - Is defined consistently (definition of labels y is unambiguous)
  - Covers important cases (good coverage of inputs x)
  - Has timely feedback from production data (distribution covers data drift and concept drift)
  - Is sized appropriately

- 29. Takeaways: Data-centric AI
  - MLOps’ most important task is to make high-quality data available through all stages of the ML project lifecycle.
  - AI system = Code + Data
  - Model-centric AI: How can you change the model (code) to improve performance?
  - Data-centric AI: How can you systematically change your data (inputs x or labels y) to improve performance?
  - Important frontier: MLOps tools to make data-centric AI an efficient and systematic process.