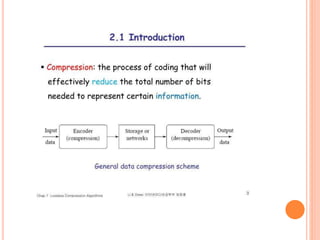

Multimedia lossless compression algorithms

- 2. BASICS OF INFORMATION THEORY RUN-LENGTH CODING VARIABLE-LENGTH CODING SHANNONŌĆōFANO ALGORITHM HUFFMAN CODING EXTENDED HUFFMAN CODING EXAMPLE DICTIONARY-BASED CODING LZW ALGORITHM DETAILS ARITHMETIC CODING BASIC ARITHMETIC CODING ALGORITHM Outline

- 8. RUN-LENGTH CODING Memoryless Source: an information source that is indepen- dently distributed. Namely, the value of the current symbol does not depend on the values of the previously appeared symbols. Instead of assuming memoryless source, Run-Length Coding (RLC) exploits memory present in the information source. Rationale for RLC: if the information source has the prop- erty that symbols tend to form continuous groups, then such symbol and the length of the group can be coded.

- 19. EXTENDED HUFFMAN EXAMPLE As an explicit example of the power of Extended Huffman Coding, let us construct a binary Huffman code for a source S with just three symbols A, B, and C, having probabilities 0.6, 0.3, and 0.1, respectively. That is, here we have n = 3. We are interested in what the average codeword length is, in bits per symbol. To start with, in comparison let us first look at the value of the entropy of this source, and the bitrate given by Huffman coding for single symbols, not blocks.

- 40. Thank you