Multimedia searching

- 1. Digital shapes: content-based searching & retrieval. Web Science Course (Fall 2011). Laura Papaleo, https://www.linkedin.com/in/laurapapaleo/, laura.papaleo@gmail.com

- 2. Outline
  - Digital shapes definition
  - Content-based retrieval basics
  - Image retrieval
  - Video retrieval
  - 3D model retrieval

- 3. Multimedia content: short introduction

- 4. Image and Digital Image
  - An image is an artifact that has a similar appearance to some subject, usually a physical object or person (Wikipedia).
  - Images may be two-dimensional (e.g. a photograph) or three-dimensional (a statue, a hologram, ...).
  - 2D digital image: a numeric representation of a two-dimensional image. Without qualification, the term "digital image" usually refers to raster images, also called bitmap images.
  - 3D digital image (3D model): a mathematical representation of any three-dimensional surface of an object (either inanimate or living).

- 5. Video and Digital Video
  - Video is the technology of electronically maintaining a sequence of still images representing scenes in motion.
  - Digital video comprises a series of orthogonal bitmap digital images (frames) displayed in rapid succession at a constant rate.

- 6. In a more general sense: Digital Shapes
  - Multidimensional media characterized by a visual appearance in a space of 2, 3, or more dimensions.
  - Examples: images, 3D models, videos, animations, and so on.
  - They can be acquired from real environments or objects, or created synthetically.

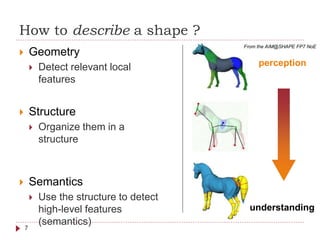

- 7. How to describe a shape?
  - Geometry: detect relevant local features.
  - Structure: organize them in a structure.
  - Semantics: use the structure to detect high-level features (semantics).
  - Figure labels: perception, understanding. From the AIM@SHAPE FP7 NoE.

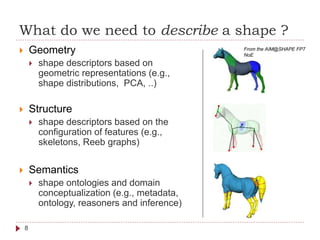

- 8. What do we need to describe a shape?
  - Geometry: shape descriptors based on geometric representations (e.g., shape distributions, PCA, ...).
  - Structure: shape descriptors based on the configuration of features (e.g., skeletons, Reeb graphs).
  - Semantics: shape ontologies and domain conceptualization (e.g., metadata, ontology, reasoners and inference).
  - From the AIM@SHAPE FP7 NoE.

- 9. Digital shapes searching: Basics

- 10. Content-based retrieval (CBR)
  - It addresses the problem of searching for digital shapes in large databases (such as the web) using their actual content.
  - First defined in 1992 by Kato et al. for images ("A sketch retrieval method for full color image database-query by visual example", Pattern Recognition).
  - Also known as query by content (QBC) and content-based visual information retrieval (CBVIR).
  - The techniques, tools and algorithms used originate from statistics, pattern recognition, signal processing, computer vision, computer graphics, geometry modeling and so on.
  - Figure: an example for images.

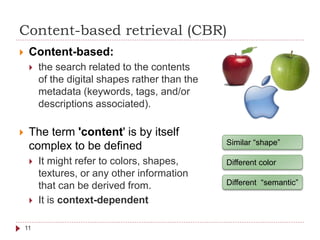

- 11. Content-based retrieval (CBR)
  - Content-based: the search relates to the contents of the digital shapes rather than to the metadata (keywords, tags, and/or associated descriptions).
  - The term "content" is itself hard to define:
    - It might refer to colors, shapes, textures, or any other information that can be derived from the digital shape itself.
    - It is context-dependent.
  - Figure labels: similar "shape", different color, different "semantic".

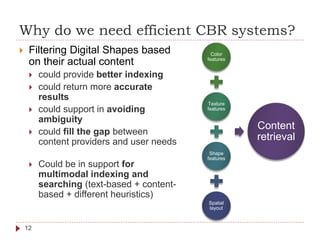

- 12. Why do we need efficient CBR systems?
  - Filtering digital shapes based on their actual content:
    - could provide better indexing
    - could return more accurate results
    - could help avoid ambiguity
    - could fill the gap between content providers and user needs
    - could support multimodal indexing and searching (text-based + content-based + different heuristics)
  - Figure labels: color features, texture features, shape features, spatial layout, content retrieval.

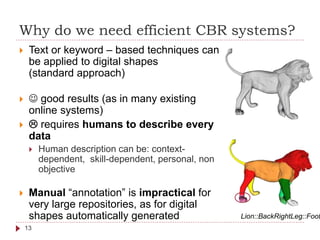

- 13. Why do we need efficient CBR systems?
  - Text- or keyword-based techniques can be applied to digital shapes (the standard approach).
    - (+) Good results (as in many existing online systems).
    - (-) Requires humans to describe every item.
  - Human description can be context-dependent, skill-dependent, personal, and non-objective.
  - Manual "annotation" is impractical for very large repositories and for automatically generated digital shapes.
  - Figure label (example annotation): Lion::BackRightLeg::Foot

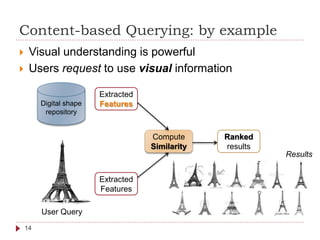

- 14. Content-based Querying: by example
  - Visual understanding is powerful.
  - Users ask to search using visual information.
  - Diagram: features are extracted from the digital shape repository and from the user query; both sets of extracted features feed the "compute similarity" step, which produces the ranked results.

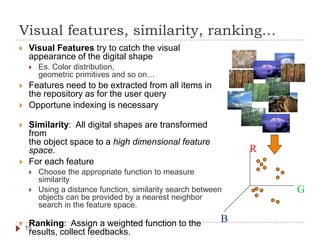

- 15. Visual features, similarity, ranking...
  - Visual features try to capture the visual appearance of the digital shape.
    - E.g. color distribution, geometric primitives and so on.
    - Features need to be extracted from all items in the repository as well as from the user query.
    - Appropriate indexing is necessary.
  - Similarity: all digital shapes are transformed from the object space to a high-dimensional feature space.
    - For each feature, choose the appropriate function to measure similarity.
    - Using a distance function, similarity search between objects can be implemented as a nearest-neighbor search in the feature space.
  - Ranking: assign a weighted function to the results and collect feedback.
  - Figure labels: R, B, G.

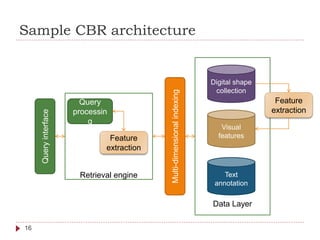
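
The query-by-example loop described on the slide above (extract features, measure distances in feature space, rank by nearest neighbor) can be sketched in a few lines. This is only an illustration under stated assumptions: the feature is a plain mean RGB color, the repository is a hypothetical in-memory dictionary, and the names `mean_color` and `rank_by_similarity` are invented for this example.

```python
import math

def mean_color(pixels):
    """Feature extractor: average (R, G, B) over a list of pixel triples."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def rank_by_similarity(query_pixels, repository):
    """Return item names sorted from nearest to farthest in feature space."""
    q = mean_color(query_pixels)
    scored = [(math.dist(q, mean_color(px)), name) for name, px in repository.items()]
    return [name for _, name in sorted(scored)]

# Hypothetical toy repository: each "image" is just a short list of RGB pixels.
repository = {
    "sunset": [(250, 120, 30), (240, 100, 20)],
    "forest": [(20, 140, 40), (30, 160, 50)],
    "ocean": [(10, 60, 200), (20, 80, 220)],
}
query = [(245, 110, 25), (235, 115, 35)]

ranking = rank_by_similarity(query, repository)
print(ranking)
```

A real system would precompute and index the repository features instead of recomputing them for every query, as the slide notes.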

- 16. Sample CBR architecture
  - Layers shown in the diagram: data layer, retrieval engine.
  - Components shown in the diagram: digital shape collection, feature extraction (shown twice), visual features, text annotation, multi-dimensional indexing, query processing, query interface.

- 17. Other query methods
  - Browsing by examples (multiple inputs)
  - Browsing categories (customized/hierarchical)
  - Querying by region (rather than the entire digital shape)
  - Querying by visual sketch
  - Querying by specific features
  - Multimodal queries (e.g. combining touch, voice, etc.)

- 18. Image Searching & Retrieval: Basics

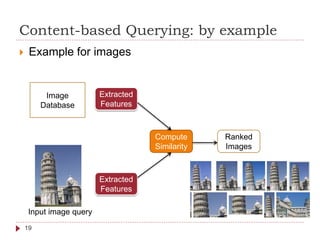

- 19. Content-based Querying: by example
  - Example for images.
  - Diagram: features are extracted from the image database and from the input image query; both sets of extracted features feed the "compute similarity" step, which produces the ranked images.

- 20. Similarity measures for images
  - The measures must be based solely on the information contained in the digital representation of the images.
  - Common technique: extract a set of visual features.
  - Visual features fall into one of the following categories:
    - Colour
    - Texture
    - Shape
  - Reference: Del Bimbo A., Visual Information Retrieval, Morgan Kaufmann, 1999.

- 21. Similarity measures for images
  - All images are transformed from the object space to a high-dimensional feature space.
  - In this space every image is a point whose coordinates represent its feature characteristics.
  - Similar images are "near" in this space.
  - The definition of an appropriate distance function is crucial for the success of the feature transformation.
  - Some examples of distance metrics are:
    - the Euclidean distance [Niblack 1993]
    - the Manhattan distance [Stricker and Orengo 1995], i.e. the distance between two points measured along axes at right angles
    - the maximum norm [Stricker and Orengo 1995]
    - the quadratic function [Hafner et al. 1995]
    - the Earth Mover's Distance [Rubner, Tomasi, and Guibas 2000]
    - Deformation Models [Keysers et al. 2007b]
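
As a small illustration of the first three metrics listed above, the sketch below computes the Euclidean distance, the Manhattan distance and the maximum norm between two feature vectors. The vectors `u` and `v` are made-up values, not taken from any of the cited papers.

```python
import math

def euclidean(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """Distance measured along axes at right angles: sum of absolute differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def maximum_norm(a, b):
    """Largest absolute difference over all coordinates."""
    return max(abs(x - y) for x, y in zip(a, b))

# Two hypothetical 3-dimensional feature vectors.
u = (0.0, 3.0, 1.0)
v = (4.0, 0.0, 1.0)

print(euclidean(u, v), manhattan(u, v), maximum_norm(u, v))  # 5.0 7.0 4.0
```

The three values differ for the same pair of points, which is why the choice of metric influences which images count as "near".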

- 22. Visual Features Extraction
  - What are relevant visual features for images?
  - Primitive features: mean color (RGB), color histogram.
  - Semantic features: color layout, texture, etc.
  - Domain-specific features: face recognition, fingerprint matching, etc.
  - Figure label: general features.

- 23. Color: Distance measures
  - Based on color similarity:
    - obtained by computing a color histogram for each image
    - and then computing the difference between the histograms
  - Current research (color layout): segment color proportion by region and by spatial relationship among several color regions.
  - NOTE: comparing images by color is one of the most widely used techniques because it does not depend on image size or orientation.

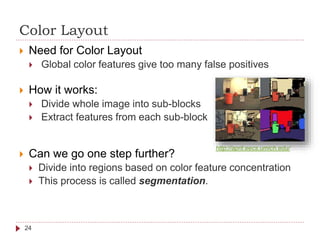
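
A minimal sketch of the histogram comparison described above, assuming a coarse joint RGB histogram and an L1 difference (other bin counts and distance functions are equally possible). Normalizing by the pixel count is what makes the comparison independent of image size; the function names and toy images are illustrative only.

```python
def color_histogram(pixels, bins_per_channel=2):
    """Normalized joint RGB histogram with bins_per_channel**3 buckets."""
    def bucket(value):
        # Map a channel value in 0..255 to a bin index in 0..bins_per_channel-1.
        return value * bins_per_channel // 256

    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in pixels:
        idx = (bucket(r) * bins_per_channel + bucket(g)) * bins_per_channel + bucket(b)
        hist[idx] += 1.0
    return [h / len(pixels) for h in hist]

def histogram_distance(h1, h2):
    """L1 difference of two normalized histograms (0 = identical, 2 = disjoint)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))

# Hypothetical images given as flat pixel lists.
small_red = [(200, 10, 10)] * 4
large_red = [(220, 30, 20)] * 16  # four times as many pixels, same dominant color
blue = [(10, 10, 200)] * 4

same = histogram_distance(color_histogram(small_red), color_histogram(large_red))
different = histogram_distance(color_histogram(small_red), color_histogram(blue))
print(same, different)  # 0.0 2.0
```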

- 24. Color Layout
  - Need for color layout: global color features give too many false positives.
  - How it works:
    - Divide the whole image into sub-blocks.
    - Extract features from each sub-block.
  - Can we go one step further?
    - Divide the image into regions based on color feature concentration.
    - This process is called segmentation.
  - Image source: http://april.eecs.umich.edu/

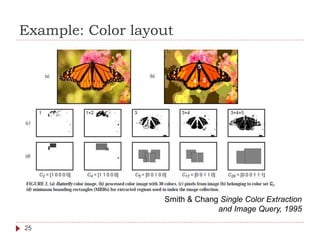
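
To illustrate why a global color feature produces false positives and how sub-blocks help, the sketch below computes one mean color per block of a regular grid. It assumes the image is a list of rows of RGB tuples and is at least `grid` pixels wide and high; the helper name `block_mean_colors` and the two toy images are made up for this example.

```python
def block_mean_colors(image, grid=2):
    """Split the image into grid x grid sub-blocks and return each block's mean color."""
    height, width = len(image), len(image[0])
    features = []
    for by in range(grid):
        for bx in range(grid):
            y0, y1 = by * height // grid, (by + 1) * height // grid
            x0, x1 = bx * width // grid, (bx + 1) * width // grid
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            features.append(tuple(sum(p[c] for p in block) / len(block) for c in range(3)))
    return features

RED, BLUE = (255, 0, 0), (0, 0, 255)

# Two 4x4 images with identical global color content but a different layout.
red_on_top = [[RED] * 4] * 2 + [[BLUE] * 4] * 2
red_on_left = [[RED, RED, BLUE, BLUE] for _ in range(4)]

# grid=1 yields a single block, i.e. the global mean color.
print(block_mean_colors(red_on_top, 1) == block_mean_colors(red_on_left, 1))  # True
print(block_mean_colors(red_on_top) == block_mean_colors(red_on_left))        # False
```

The global feature cannot tell the two images apart, while the per-block features can.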

- 25. Example: Color layout. Smith & Chang, Single Color Extraction and Image Query, 1995.

- 26. Texture measures
  - Texture measures look for visual patterns in images.
  - Texture is a difficult concept to represent.
  - Texture is identified in images by modeling it as a two-dimensional gray-level variation.
  - The relative brightness of pairs of pixels is computed so that the degree of contrast, regularity, coarseness and directionality can be estimated.

- 27. Texture classification
  - The most widely accepted classification of textures is based on psychology studies: the Tamura representation.
    - Coarseness: relates to the distances of notable spatial variations of grey levels, that is, implicitly, to the size of the primitive elements (texels) forming the texture.
    - Contrast: measures how the grey levels q (q = 0, 1, ..., qmax) vary in the image g and to what extent their distribution is biased towards black or white.
    - Degree of directionality: measured using the frequency distribution of oriented local edges against their directional angles.
    - Linelikeness, regularity and roughness: a combination of the above three...
  - http://www.cs.auckland.ac.nz/compsci708s1c/lectures/Glect-html/topic4c708FSC.htm#tamura
  - Reference: H. Tamura et al., Texture features corresponding to visual perception, IEEE Transactions, 1978.
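
The contrast feature can be sketched with the commonly quoted Tamura formulation, the standard deviation of the grey levels divided by the fourth root of their kurtosis. The slide does not give the formula, so treat this as an assumption about the definition; the function name and the toy images are illustrative.

```python
import math

def tamura_contrast(gray):
    """Contrast = sigma / kurtosis**(1/4), with kurtosis = mu4 / sigma**4."""
    values = [float(v) for row in gray for v in row]
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    if variance == 0.0:
        return 0.0  # a perfectly flat image has no contrast
    mu4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    kurtosis = mu4 / variance ** 2
    return math.sqrt(variance) / kurtosis ** 0.25

# Hypothetical 2x2 grey-level images.
checkerboard = [[0, 255], [255, 0]]    # strong black/white variation
soft_pattern = [[120, 130], [130, 120]]  # weak variation around mid-grey
flat = [[128, 128], [128, 128]]

print(tamura_contrast(checkerboard), tamura_contrast(soft_pattern), tamura_contrast(flat))
```

The kurtosis term accounts for how polarized the grey-level distribution is, which corresponds to the "biased towards black or white" part of the description above.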

- 28. Shape-based measures
  - Shape refers to the shape of a particular region in an image.
  - Shapes are often determined by applying segmentation or edge detection to an image.
  - In some cases accurate shape detection requires human intervention, because methods like segmentation are very difficult to automate completely.

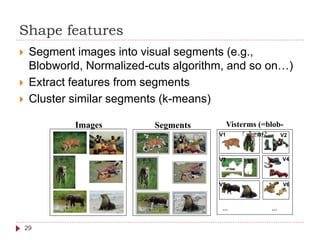

- 29. Shape features
  - Segment images into visual segments (e.g., Blobworld, the Normalized-cuts algorithm, and so on).
  - Extract features from the segments.
  - Cluster similar segments (k-means).
  - Figure: images are split into segments, and each segment is mapped to a visterm (= blob-token) V1, V2, ..., V6.

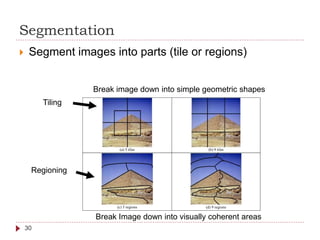
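
The clustering step can be illustrated with a plain k-means loop that maps each segment's feature vector to a cluster label, which then plays the role of a visterm. This is a sketch under assumptions: the two-dimensional segment features are invented values, and the centroids are seeded with the first `k` points only to keep the example deterministic.

```python
import math

def kmeans(points, k, iterations=10):
    """Plain Lloyd's k-means; returns (labels, centroids)."""
    centroids = [tuple(p) for p in points[:k]]  # deterministic seeding (assumption)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point goes to its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centroids

# Hypothetical per-segment features, e.g. (color score, texture score).
segments = [(0.9, 0.1), (0.1, 0.8), (0.85, 0.15), (0.15, 0.9), (0.95, 0.05)]

labels, centroids = kmeans(segments, k=2)
visterms = [f"V{label + 1}" for label in labels]
print(visterms)  # ['V1', 'V2', 'V1', 'V2', 'V1']
```

Once every segment carries a visterm, an image can be described by the set of visterms it contains.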

- 30. Segmentation
  - Segment images into parts (tiles or regions).
  - Tiling: break the image down into simple geometric shapes.
  - Regioning: break the image down into visually coherent areas.
  - Figure panels: (a) 5 tiles, (b) 9 tiles, (c) 5 regions, (d) 9 regions.

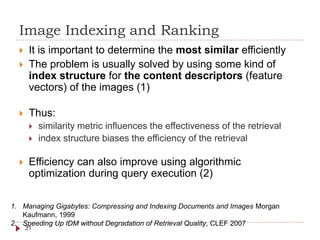

- 31. Image Indexing and Ranking
  - It is important to determine the most similar images efficiently.
  - The problem is usually solved by using some kind of index structure for the content descriptors (feature vectors) of the images (1).
  - Thus:
    - the similarity metric influences the effectiveness of the retrieval
    - the index structure determines the efficiency of the retrieval
  - Efficiency can also be improved by algorithmic optimization during query execution (2).
  - References:
    - (1) Managing Gigabytes: Compressing and Indexing Documents and Images, Morgan Kaufmann, 1999.
    - (2) Speeding Up IDM without Degradation of Retrieval Quality, CLEF 2007.

- 32. Examples

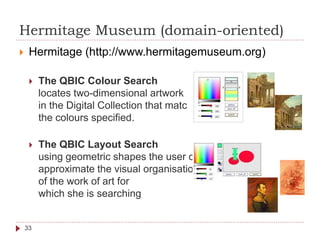

- 33. Hermitage Museum (domain-oriented)
  - Hermitage (http://www.hermitagemuseum.org)
  - The QBIC Colour Search locates two-dimensional artwork in the Digital Collection that matches the colours specified.
  - The QBIC Layout Search: using geometric shapes, the user can approximate the visual organisation of the work of art for which she is searching.

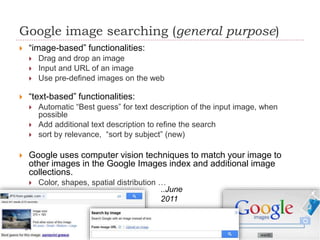

- 34. Google image searching (general purpose)
  - "Image-based" functionalities:
    - Drag and drop an image.
    - Input the URL of an image.
    - Use pre-defined images on the web.
  - "Text-based" functionalities:
    - Automatic "best guess" text description of the input image, when possible.
    - Add additional text description to refine the search.
    - Sort by relevance, or "sort by subject" (new).
  - Google uses computer vision techniques to match your image to other images in the Google Images index and additional image collections: color, shapes, spatial distribution, ... (June 2011)

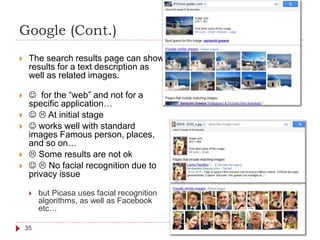

- 35. Google (Cont.)
  - The search results page can show results for a text description as well as related images.
  - (+) For the "web" and not for a specific application...
  - (+/-) At an initial stage.
  - (+) Works well with standard images: famous people, places, and so on.
  - (-) Some results are not OK.
  - (+/-) No facial recognition due to privacy issues, but Picasa uses facial recognition algorithms, as does Facebook, etc.

- 37. Motivation
  - The amount of digital video data has grown enormously in recent years.
  - There is a lack of tools to classify and retrieve video content.
  - There is a gap between low-level features and high-level semantic content.
  - Letting machines understand video is important and challenging.

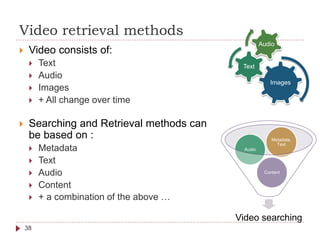

- 38. Video retrieval methods
  - Video consists of:
    - Text
    - Audio
    - Images
    - all of which change over time
  - Searching and retrieval methods can be based on:
    - Metadata
    - Text
    - Audio
    - Content
    - a combination of the above
  - Diagram labels: images, text, audio; video searching by content, audio, metadata, text.

- 39. Metadata, Text & Audio-based Methods
  - Metadata-based:
    - Video is indexed and retrieved based on structured metadata using a traditional DBMS.
    - Examples of metadata are the title, author, producer, director, date, and type of video.
  - Text-based:
    - Video is indexed and retrieved based on associated subtitles (text) using traditional IR techniques for text documents.
    - Transcripts and subtitles already exist for many types of video, such as news and movies, eliminating the need for manual annotation.
  - Audio-based:
    - Video is indexed and retrieved based on associated soundtracks using the methods for audio indexing and retrieval.
    - Speech recognition is applied if necessary.

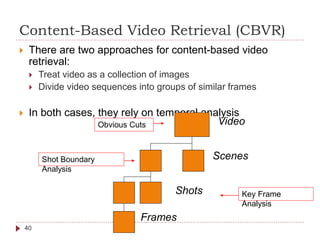

- 40. Content-Based Video Retrieval (CBVR)
  - There are two approaches for content-based video retrieval:
    - Treat video as a collection of images.
    - Divide video sequences into groups of similar frames.
  - Both approaches rely on temporal analysis.
  - Diagram labels: video, scenes, shots, frames, key frame analysis, shot boundary analysis, obvious cuts.
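
One simple way to find the "obvious cuts" between shots is to compare grey-level histograms of consecutive frames and report a boundary whenever the difference exceeds a threshold. The sketch below assumes each frame is a flat, non-empty list of grey values; the threshold, the bin count, and the choice of the first frame of each shot as its key frame are illustrative simplifications, not the method of any particular system.

```python
def gray_histogram(frame, bins=4):
    """Normalized grey-level histogram of one frame (flat list of 0..255 values)."""
    hist = [0.0] * bins
    for value in frame:
        hist[value * bins // 256] += 1.0
    return [h / len(frame) for h in hist]

def detect_cuts(frames, threshold=0.5):
    """Return every index i at which frames[i] starts a new shot."""
    hists = [gray_histogram(f) for f in frames]
    cuts = []
    for i in range(1, len(hists)):
        diff = sum(abs(a - b) for a, b in zip(hists[i - 1], hists[i]))
        if diff > threshold:
            cuts.append(i)
    return cuts

def key_frame_indices(cuts):
    """Use the first frame of every shot as its representative key frame."""
    return [0] + cuts

# Hypothetical four-frame video: two dark frames followed by two bright frames.
video = [
    [10, 20, 30, 40],
    [12, 22, 28, 45],
    [200, 210, 220, 250],
    [205, 215, 225, 245],
]

cuts = detect_cuts(video)
print(cuts, key_frame_indices(cuts))  # [2] [0, 2]
```

Gradual transitions such as fades would need a more tolerant test than a single threshold on adjacent frames.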

- 41. Query by example for video
  - Image query input:
    - Extract features consistently with those stored in the repository.
    - If the video is treated as a sequence of images, search for "similar images" according to the extracted features.
    - If the video is treated as groups of similar frames, search for "similar" images among the representatives of each frame group.
    - Rank and return the results.
  - Video query input:
    - Analyse the query and extract its feature characteristics.
    - For each representative image, proceed as before.

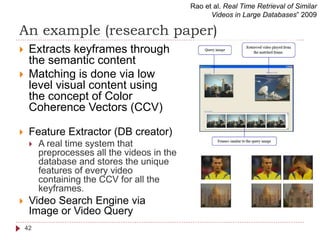

- 42. An example (research paper)
  - Extracts keyframes through the semantic content.
  - Matching is done via low-level visual content using the concept of Color Coherence Vectors (CCV).
  - Feature Extractor (DB creator): a real-time system that preprocesses all the videos in the database and stores the unique features of every video, containing the CCV for all the keyframes.
  - Video search engine via image or video query.
  - Reference: Rao et al., "Real Time Retrieval of Similar Videos in Large Databases", 2009.
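
For readers unfamiliar with Color Coherence Vectors, the sketch below shows the general idea: for every discretized color, pixels are counted as coherent when they belong to a large connected region of that color and as incoherent otherwise. It is a generic illustration, not the implementation from the cited paper; the 8-connectivity, the "larger than `tau`" rule and the toy keyframe are assumptions.

```python
from collections import deque

def color_coherence_vector(image, num_colors, tau):
    """image: 2D list of color indices in range(num_colors).
    Returns one (coherent, incoherent) pixel-count pair per color."""
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    ccv = [[0, 0] for _ in range(num_colors)]
    for y in range(height):
        for x in range(width):
            if seen[y][x]:
                continue
            color = image[y][x]
            # Flood-fill the 8-connected region of same-colored pixels.
            seen[y][x] = True
            queue = deque([(y, x)])
            size = 0
            while queue:
                cy, cx = queue.popleft()
                size += 1
                for ny in range(cy - 1, cy + 2):
                    for nx in range(cx - 1, cx + 2):
                        if (0 <= ny < height and 0 <= nx < width
                                and not seen[ny][nx] and image[ny][nx] == color):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            # Regions larger than tau count as coherent, the rest as incoherent.
            ccv[color][0 if size > tau else 1] += size
    return [tuple(pair) for pair in ccv]

# Hypothetical 4x4 keyframe already quantized to two colors (0 and 1).
keyframe = [
    [0, 0, 0, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 0],
]

ccv = color_coherence_vector(keyframe, num_colors=2, tau=4)
print(ccv)  # [(6, 1), (9, 0)]
```

Unlike a plain histogram, the vector distinguishes the six-pixel block of color 0 from the single isolated pixel of the same color.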

- 43. 3D models searching & retrieval: Basics

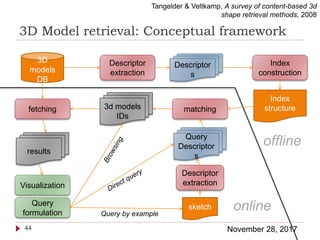

- 44. 3D Model retrieval: Conceptual framework
  - Reference: Tangelder & Veltkamp, A survey of content-based 3d shape retrieval methods, 2008.
  - Offline: 3D models DB, descriptor extraction, descriptors, index construction, index structure.
  - Online: query formulation (query by example or sketch), descriptor extraction, query descriptors, matching, fetching of 3D model IDs, visualization of results.

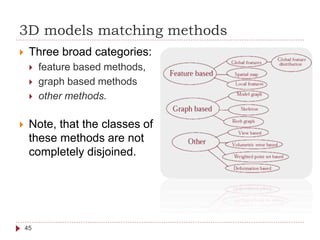

- 45. 3D models matching methods
  - Three broad categories:
    - feature-based methods
    - graph-based methods
    - other methods
  - Note that these classes of methods are not completely disjoint.

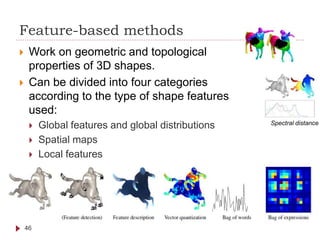

- 46. Feature-based methods
  - Work on geometric and topological properties of 3D shapes.
  - Can be divided into four categories according to the type of shape features used:
    - Global features
    - Global feature distributions
    - Spatial maps
    - Local features
  - Figure label: spectral distance.

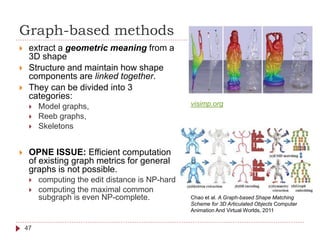
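
As an example of a global feature distribution, the sketch below builds a histogram of pairwise point distances, in the spirit of the "shape distributions" mentioned earlier in the deck. It is a simplification under assumptions: it uses all pairs of a small given point set instead of random samples on the model surface, and it normalizes by the largest distance so that the descriptor does not depend on scale.

```python
import itertools
import math

def d2_descriptor(points, bins=4):
    """Normalized histogram of all pairwise distances, scaled by the largest one."""
    distances = [math.dist(p, q) for p, q in itertools.combinations(points, 2)]
    largest = max(distances)
    hist = [0.0] * bins
    for d in distances:
        idx = min(int(d / largest * bins), bins - 1)  # clamp the largest distance
        hist[idx] += 1.0
    return [h / len(distances) for h in hist]

# Hypothetical point sets: a unit cube, the same cube scaled by 2, and a straight line.
cube = list(itertools.product((0.0, 1.0), repeat=3))
big_cube = [(2 * x, 2 * y, 2 * z) for x, y, z in cube]
line = [(float(i), 0.0, 0.0) for i in range(8)]

print(d2_descriptor(cube) == d2_descriptor(big_cube))  # True: same shape, other scale
print(d2_descriptor(cube) == d2_descriptor(line))      # False: different shape
```

Two models can then be compared by applying any histogram distance to their descriptors.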

- 47. Graph-based methods
  - Extract a geometric meaning from a 3D shape.
  - Represent and preserve how shape components are linked together.
  - They can be divided into 3 categories:
    - Model graphs
    - Reeb graphs
    - Skeletons
  - OPEN ISSUE: efficient computation of existing graph metrics for general graphs is not possible.
    - Computing the edit distance is NP-hard.
    - Computing the maximal common subgraph is even NP-complete.
  - Reference: Chao et al., A Graph-based Shape Matching Scheme for 3D Articulated Objects, Computer Animation and Virtual Worlds, 2011.
  - Image source: visimp.org

- 48. Princeton Shape Repository
  - http://shape.cs.princeton.edu/search.html

- 49. McGill 3D Shape Benchmark
  - http://www.cim.mcgill.ca/~shape/benchMark/
  - It offers a repository for testing 3D shape retrieval algorithms.
  - Emphasis on including models with articulating parts.

- 50. Observations & OPEN ISSUES
  - Good literature for images.
  - Open research for video and 3D models.
  - CBS is "usable" in domain-specific applications.
  - Open research for general-purpose CBS (on the web).
  - Open research for multimodal searching.
  - Ranking and feedback: new frontiers with the advent of Web 2.0 and Web 3.0.
    - A cooperative environment could support the creation of a global "well annotated digital world".
    - Accountability problems.
    - Trust.
    - History and provenance are important...

- 51. Observations & OPEN ISSUES
  - Open research: adaptive visualization of the results according to the user's needs.
    - An image and an abstract could be useful in specific conditions.
    - Online browsing of the 3D model could be important in other conditions.
    - Video preview? Or?
    - The same holds for the querying interface... HCI issues...
  - Web searching performance: open research in on-the-fly indexing of videos and 3D models.
  - Open issue: relevant portions of the resulting digital shapes should be usable as a new query simply by selecting a portion (and then "find similar items").
    - Interactive selection of portions of images, videos and 3D models.
