Murphy's Machine Learning: 10. Directed Graphical Model

- 1. ML study 4th

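- 2. 10.1 Introduction. Three questions and the tools that answer them: (1) How can we compactly represent the joint distribution p(x|θ)? Chain rule, conditional independence (CI), graphical models. (2) How can we infer one set of variables from another in a reasonable amount of computation time? Marginalization. (3) How can we learn the parameters of this distribution from a reasonable amount of data? Factorized posterior.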

- 4. 10.1.2 Conditional independence ?? ???? ??? ???? ????

- 5. 10.1.3 Graphical models. A graphical model (GM) represents a joint distribution by making CI assumptions. Nodes in the graph represent random variables. The (lack of) edges represents the CI assumptions.

- 6. 10.1.4 Graph terminology: parent, ancestor, descendant, non-descendant (illustrated on nodes X, Y1, Y2)

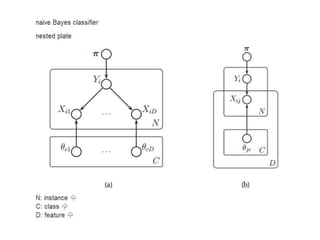

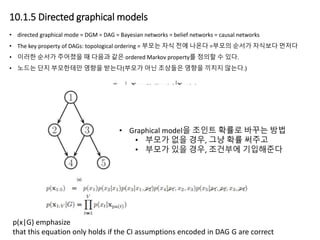

- 7. 10.1.5 Directed graphical models. Directed graphical model = DGM = DAG = Bayesian network = belief network = causal network. The key property of DAGs is the topological ordering: nodes can be ordered so that parents come before their children. Given such an ordering, we can define the ordered Markov property: a node depends only on its immediate parents, not on all of its predecessors in the ordering. ? Graphical model? ??? ??? ??? ?? ? ??? ?? ??, ?? ?? ??? ? ??? ?? ??, ???? ????? Writing the factorization as p(x1:V|G) = ∏t p(xt | x_pa(t)) emphasizes that this equation only holds if the CI assumptions encoded in DAG G are correct.

- 8. ? ?? ?? ??? ? ??? ???? ??? ?? ??? ??? d-separated ??? ??

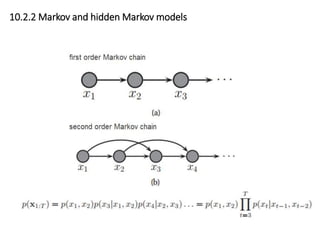

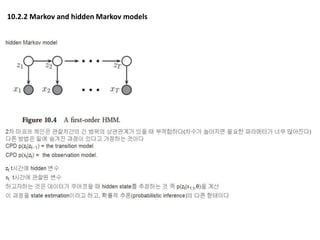
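The topological ordering and the resulting factorization can be illustrated with a small sketch. The 4-node DAG below (1 → 2, 1 → 3, 2 → 4, 3 → 4) and the function names `topological_order` and `factorization` are assumptions made for illustration; they do not come from the slides.

```python
from collections import deque


def topological_order(parents):
    """Order nodes so that every parent precedes its children (Kahn's algorithm).

    `parents` maps each node to the list of its parents.
    """
    children = {v: [] for v in parents}
    indegree = {v: len(ps) for v, ps in parents.items()}
    for v, ps in parents.items():
        for p in ps:
            children[p].append(v)

    # Start from the root nodes (no parents).
    queue = deque(sorted(v for v, d in indegree.items() if d == 0))
    order = []
    while queue:
        v = queue.popleft()
        order.append(v)
        for c in children[v]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)

    if len(order) != len(parents):
        raise ValueError("graph has a cycle, so it is not a DAG")
    return order


def factorization(parents):
    """Render the DGM factorization as text: one factor p(x_t | parents) per node."""
    factors = []
    for v in topological_order(parents):
        ps = parents[v]
        if ps:
            cond = ", ".join(f"x{p}" for p in ps)
            factors.append(f"p(x{v}|{cond})")
        else:
            factors.append(f"p(x{v})")
    return " ".join(factors)


# Hypothetical example DAG: 1 -> 2, 1 -> 3, 2 -> 4, 3 -> 4
parents = {1: [], 2: [1], 3: [1], 4: [2, 3]}
order = topological_order(parents)
text = factorization(parents)
print(order)
print(text)
```

Each node contributes exactly one factor conditioned only on its parents, which is what makes the representation compact compared with the full chain rule.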

- 10. 10.2.2 Markov and hidden Markov models

- 11. 10.2.2 Markov and hidden Markov models
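As a minimal sketch of the Markov model named in the slide title, the joint probability of a sequence under a first-order Markov chain is p(x1) multiplied by one transition probability per step. The two-state chain, its numbers, and the function name `markov_chain_log_prob` are hypothetical.

```python
import math


def markov_chain_log_prob(states, initial, transition):
    """Log of p(x_1) * prod_t p(x_t | x_{t-1}) for a first-order Markov chain."""
    logp = math.log(initial[states[0]])
    for prev, cur in zip(states, states[1:]):
        logp += math.log(transition[prev][cur])
    return logp


# Hypothetical two-state chain.
initial = [0.6, 0.4]            # p(x_1)
transition = [[0.7, 0.3],       # p(x_t | x_{t-1} = 0)
              [0.2, 0.8]]       # p(x_t | x_{t-1} = 1)

logp = markov_chain_log_prob([0, 0, 1, 1], initial, transition)
prob = math.exp(logp)           # 0.6 * 0.7 * 0.3 * 0.8
print(prob)
```

Working in log space avoids underflow on long sequences; a hidden Markov model would add an emission factor per time step on top of this chain.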

- 12. Case study: deep learning (RBM) for collaborative filtering. Likelihood learning = MLE w.r.t. W, using Gibbs sampling (an MCMC method). h = 0 or 1; V = [0 0 1 0 0] // a rating of 3

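- 13. 10.3 Inference. GMs provide a compact way to define a joint probability distribution. The main use of such a distribution is probabilistic inference: estimating unknown quantities from known ones. In an HMM, for example, the goal is to estimate the hidden states (words) from the observations (speech signal). Given a joint distribution p(x1:V|θ), partition the variables into visible variables xv, which are observed, and hidden variables xh, which are not. Inference means computing the posterior of the unknowns given the knowns: p(xh|xv, θ) = p(xh, xv|θ) / p(xv|θ). When only some hidden variables are of interest, split them further into query variables xq, whose value we wish to know, and nuisance variables xn, which we do not care about; the nuisance variables are marginalized out: p(xq|xv, θ) = Σ_xn p(xq, xn|xv, θ).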
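The roles of query, visible, and nuisance variables can be sketched by brute-force enumeration over a small joint table. The three-variable binary chain x0 → x1 → x2, its probabilities, and the helper names `bern` and `posterior` are assumptions for illustration. Enumeration is exponential in the number of variables, so this only shows the definition, not an efficient algorithm.

```python
from itertools import product


def bern(p1, x):
    """Probability of binary value x when p(x = 1) = p1."""
    return p1 if x == 1 else 1.0 - p1


def posterior(joint, query_idx, evidence):
    """p(x_q | x_v): sum the joint over every nuisance variable, then normalize.

    `joint` maps full assignments (tuples) to probabilities.
    `evidence` maps variable index -> observed value (the visible variables).
    """
    unnorm = {}
    for assignment, p in joint.items():
        if all(assignment[i] == val for i, val in evidence.items()):
            q = assignment[query_idx]
            unnorm[q] = unnorm.get(q, 0.0) + p
    z = sum(unnorm.values())  # p(x_v), the probability of the evidence
    return {q: p / z for q, p in unnorm.items()}


# Hypothetical chain x0 -> x1 -> x2 over binary variables.
joint = {}
for x0, x1, x2 in product([0, 1], repeat=3):
    joint[(x0, x1, x2)] = (
        bern(0.3, x0)
        * bern([0.2, 0.9][x0], x1)   # p(x1 = 1 | x0)
        * bern([0.1, 0.8][x1], x2)   # p(x2 = 1 | x1)
    )

# Query x0, observe x2 = 1, marginalize out the nuisance variable x1.
post = posterior(joint, query_idx=0, evidence={2: 1})
print(post)
```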

- 16. 10.4 Learning Structure learning : DGM? ??? ?? = ?? ?? ????? ?? ??? ??, chapter 26 ????? ?????? ? ???? ?????. LDA

- 19. ?? ??

- 20. ???? tck: t?? ??? c?? ????? k?? state c ?? ????? ???? ? t??? ??? k?? ?? θtck? hyperparameter multinomial(θtc) multinomial-Dirichlet ??? ??? factorized? posterior? Dirichlet ??? ??? posterior? ???? ?? 4?? ?? CPT ??? ???? ??? DGM?? ?? ???? ?

- 21. ?? ??? ? ? theta? ???? ?? graphical model(=joint distribution? ??? ??)? learning ? joint distribution? ???? ?? ??? ?? CPT? ??? ??. ? ???? ????? ???? graphical model? learning? ?? ??(factorized posterior)
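With complete data and a Dirichlet prior on each multinomial θtc, every CPT row can be estimated on its own from counts. The sketch below computes the posterior mean (Ntck + α) / Σk' (Ntck' + α) for node t, parent configuration c, and state k. It assumes a symmetric prior with a single `alpha`; the data rows, variable names, and the function name `cpt_posterior_mean` are hypothetical.

```python
from collections import Counter


def cpt_posterior_mean(data, node, parents, n_states, alpha=1.0):
    """Posterior-mean CPT for `node` under a symmetric Dirichlet(alpha) prior.

    `data` is a list of dicts mapping variable name -> observed state (0..n_states-1).
    Returns {parent_configuration: [p(node = k | configuration) for k in states]}.
    Only parent configurations that occur in the data are returned.
    """
    counts = Counter()
    for row in data:
        config = tuple(row[p] for p in parents)
        counts[(config, row[node])] += 1

    cpt = {}
    for config in {c for (c, _k) in counts}:
        total = sum(counts[(config, k)] for k in range(n_states)) + alpha * n_states
        cpt[config] = [(counts[(config, k)] + alpha) / total for k in range(n_states)]
    return cpt


# Hypothetical complete data for a two-node DAG a -> b.
data = [
    {"a": 0, "b": 0},
    {"a": 0, "b": 1},
    {"a": 0, "b": 1},
    {"a": 1, "b": 0},
]
cpt_b = cpt_posterior_mean(data, node="b", parents=["a"], n_states=2, alpha=1.0)
print(cpt_b)
```

Because the posterior factorizes over nodes and parent configurations, the same function can be called once per node without any coupling between calls.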

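- 22. 10.4.3 Learning with missing and/or latent variables. If the data has missing values and/or the model has latent variables, the likelihood no longer factorizes, and it is no longer convex (explained in Section 11.3). This means we can usually compute only a locally optimal MLE or MAP estimate. Bayesian inference of the parameters is even harder; approximate inference techniques are covered in later chapters.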

- 23. 10.5 Conditional independence properties of DGMs CI ??? ??? ??? edge? ????(ci??? ???? ???? sparse???) ?? ???? ?? ?? p(??? sparse? ???)? ??, ???? ?? ?? ??? ?? ??? ?? ??? ci?? ? ??? ?? ??? G(p??? ? sparse? ???)? ???, ? ???? ?? ? ? p? ??? ? ??. I(p) ?? ???? ci??? ??? ? ???? ???, ?? p ??? ??? ??? ? G? p? imap??? ?? G? p? graphical model? ??? ? ??? ??? CI???? Chain rule???? ????? ????

- 24. X1 X3 X2 X4 Minimal I-Map Example ? If is a minimal I-Map ? Then, these are not I-Maps: X1 X3 X2 X4 X1 X3 X2 X4 ? CI? true?? p? ???? CI? ???

- 25. 10.5.1 d-separation and the Bayes Ball algorithm (global Markov properties)

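- 26. The Bayes ball algorithm (Shachter 1998). Given E, a simple way to check whether A is d-separated from B: place a ball on each node in A, let the balls bounce around according to a set of rules, and check whether any ball reaches a node in B.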

- 27. The Bayes ball algorithm(Shachter 1998)

- 28. The Bayes ball algorithm(Shachter 1998) ??? ?? ??
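A d-separation query can also be checked in code. The sketch below does not implement the Bayes ball rules themselves. It uses an equivalent, swapped-in test: restrict the DAG to the ancestors of the nodes involved, moralize it (connect co-parents and drop edge directions), remove the evidence nodes, and test whether the two nodes are still connected. It handles single nodes `a` and `b` rather than sets, and the function name and example graphs are hypothetical.

```python
def d_separated(parents, a, b, evidence):
    """True if node a is d-separated from node b given the evidence nodes.

    `parents` maps each node to the list of its parents.
    Assumes a and b are not themselves in `evidence`.
    """
    # 1. Ancestral set: a, b, the evidence, and all of their ancestors.
    relevant = set()
    stack = list({a, b} | set(evidence))
    while stack:
        v = stack.pop()
        if v not in relevant:
            relevant.add(v)
            stack.extend(parents[v])

    # 2. Moralize: undirected parent-child edges plus edges between co-parents.
    nbrs = {v: set() for v in relevant}
    for v in relevant:
        ps = list(parents[v])
        for p in ps:
            nbrs[v].add(p)
            nbrs[p].add(v)
        for i, p in enumerate(ps):
            for q in ps[i + 1:]:
                nbrs[p].add(q)
                nbrs[q].add(p)

    # 3. Remove evidence nodes and search for any remaining path from a to b.
    blocked = set(evidence)
    seen, stack = {a}, [a]
    while stack:
        v = stack.pop()
        if v == b:
            return False
        for n in nbrs[v]:
            if n not in seen and n not in blocked:
                seen.add(n)
                stack.append(n)
    return True


# Hypothetical graphs: a chain x -> y -> z, and a v-structure x -> y <- z with y -> w.
chain = {"x": [], "y": ["x"], "z": ["y"]}
collider = {"x": [], "z": [], "y": ["x", "z"], "w": ["y"]}

print(d_separated(chain, "x", "z", ["y"]))      # observing the middle of a chain blocks it
print(d_separated(collider, "x", "z", []))      # an unobserved collider blocks the path
print(d_separated(collider, "x", "z", ["w"]))   # observing a collider's descendant opens it
```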

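- 32. 10.5.2 Other Markov properties of DGMs ?? ?? ??t ???? ?? From the d-separation criterion, one can conclude that t ⊥ nd(t) \ pa(t) | pa(t), where nd(t) denotes the non-descendants of node t and pa(t) its parents.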

- 33. ordered Markov property, topological ordering?? ??t?? ?? ??? ?? ?? ??

- 34. ?? ???? ? ????? ??(??)? ??(?? ??)? ???? ??? ??? ??? ? global Markov property G ? the ordered Markov property O ? directed local Markov property L ? d-separated ???? ??? G? ???? ?? G <->L <-> O ??(Koller and Friedman 2009) ? G? true p? i-map?? ?? p? ??? G? ?? ??? ?? factorize ? ? ?? (F??) ? F = O ((Koller and Friedman 2009) for the proof), ? G = L = O = F ? d-separated -> G -> O -> L -> F ? ??? ?, ??? ??? ???? ??? CI ??? ? ???? ??? ? ? ? ??? G? ??? ????? ?? p? ci??? ??? ??? ??? compact?? factorize? ? ??? ? ??? ???? ?(??? ?? ??) ?????? ? ? ?? theorem

- 35. 10.5.3 Markov blanket and full conditionals d-??? ??? ? ? ???? ??? ???? d-?? ???? ????

- 36. ? full conditional posterior? ??? ???? ?? ??
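In a DGM the Markov blanket of a node consists of its parents, its children, and its children's other parents (co-parents). A minimal sketch, assuming a hypothetical 5-node DAG and a hypothetical function name `markov_blanket`:

```python
def markov_blanket(parents, t):
    """Markov blanket of node t in a DAG: parents, children, and co-parents.

    `parents` maps each node to the list of its parents.
    """
    children = [v for v, ps in parents.items() if t in ps]
    blanket = set(parents[t]) | set(children)
    for c in children:
        blanket |= set(parents[c])   # co-parents of t
    blanket.discard(t)               # t is a parent of its own children
    return blanket


# Hypothetical DAG: 1 -> 2, 1 -> 3, 2 -> 4, 3 -> 4, 4 -> 5
parents = {1: [], 2: [1], 3: [1], 4: [2, 3], 5: [4]}
mb2 = markov_blanket(parents, 2)
mb4 = markov_blanket(parents, 4)
print(mb2, mb4)
```

The full conditional of node t depends only on this set: it is proportional to p(xt | x_pa(t)) multiplied by p(xs | x_pa(s)) for every child s of t, which is why only the factors touching the blanket need to be evaluated.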

![Slide 12: Case study, Deep learning (RBM) for Collaborative Filtering](https://image.slidesharecdn.com/4-140313015546-phpapp02/85/Murpy-s-Machine-Learing-10-Directed-Graphical-Model-12-320.jpg)