1st NIPS Reading Group Kansai: presentation slides

- 1. InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets
  - Authors: Xi Chen (1, 2), Yan Duan (1, 2), Rein Houthooft (1, 2), John Schulman (1, 2), Ilya Sutskever (2), Pieter Abbeel (1, 2)
  - Affiliations: (1) UC Berkeley, Department of Electrical Engineering and Computer Science; (2) OpenAI
  - Presented at the NIPS Reading Group Kansai, 2016/11/12
  - Presenter: Takato Horii (堀井隆斗), Osaka University

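- 2. Self-introduction
  - Name: Takato Horii (堀井隆斗), Asada Laboratory, Graduate School of Engineering, Osaka University
  - Research topics: modeling the developmental process of human emotion; emotional communication in HRI
  - Reasons for choosing this paper: wanted to learn the latest trends in generative models; unsupervised learning is the best; closely related to my own research
  - Figure labels: Multimodal Deep Boltzmann Machine; acquisition of emotion representations; emotion estimation; emotion expression
  - Source noted on the slide: NHK総合 「SFリアル #2 アトムと暮らす日」 (NHK General TV, "SF Real #2: The Day We Live with Atom")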

- 3. Agenda
  - Paper overview
  - What is GAN?
  - Goal and ideas of InfoGAN
  - Experimental results
  - Summary

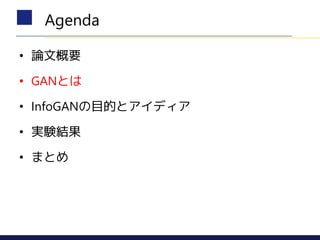

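- 5. Paper overview
  - Goal: GAN is already a strong model for data generation; make it acquire features that represent "easy to understand" information, and do so "easily".
  - Key ideas
    - Embed a latent code that represents features into the GAN's generation vector.
    - Add maximization of the mutual information between the latent code and the generator distribution to the training objective.
  - Result: interpretable features were acquired on several image datasets.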

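- 7. What is GAN? [Goodfellow+, 2014]
  - GAN: Generative Adversarial Networks
  - A model that pits a Generator (G) against a Discriminator (D) to improve generation quality.
  - Diagram: the Generator (G) output and the Data both feed the Discriminator (D), which decides "true data or generated data".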

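- 8. What is GAN? [Goodfellow+, 2014]
  - GAN: Generative Adversarial Networks
  - A generative model that pits a Generator (G) against a Discriminator (D) to improve generation quality.
    - G: generates data from the generation vector $z$.
    - D: decides whether a given sample is real (from the dataset) or fake (generated by G).
  - Objective function: one term rewards classifying dataset samples as "real"; the other rewards classifying generated samples as "fake".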
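
The objective on this slide was an image and did not survive extraction. As a reference point, the standard minimax objective from [Goodfellow+, 2014] is written below; the symbols $P_{\mathrm{data}}$ and $P_{\mathrm{noise}}$ are notation chosen here and may differ from the slide's. The first expectation is the "classify dataset samples as real" term and the second is the "classify generated samples as fake" term.

```latex
\[
\min_G \max_D V(D, G)
  = \mathbb{E}_{x \sim P_{\mathrm{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim P_{\mathrm{noise}}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
\]
```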

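- 9. Data generation with GAN I [Radford+, 2015]
  - Deep Convolutional GAN (DCGAN)
    - Uses CNNs for both G and D. The activation functions and other details differ, but the idea is the same.
    - New images can be generated by vector arithmetic on $z$.
  - A variety of features are captured in the space of $z$!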
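
Vector arithmetic on $z$ can be sketched as follows. This is a toy illustration only: the three-dimensional vectors are made up, the "smiling woman - neutral woman + neutral man" recipe follows the well-known example from the DCGAN paper rather than anything stated on this slide, and the helper names `mean_vector` and `combine` are hypothetical. A real run would pass the result to a trained generator.

```python
# Toy illustration of DCGAN-style latent arithmetic; all vectors are made up.

def mean_vector(vectors):
    """Average a list of equal-length latent vectors component-wise."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def combine(a, b, c):
    """Return a - b + c component-wise."""
    return [x - y + w for x, y, w in zip(a, b, c)]


# Hypothetical z vectors, each averaged over a few samples that share a concept
# (in practice these are found by inspecting generated images).
z_smiling_woman = mean_vector([[1.0, 0.5, 0.25], [1.0, 0.25, 0.5]])
z_neutral_woman = mean_vector([[0.0, 0.5, 0.25], [0.0, 0.25, 0.5]])
z_neutral_man = mean_vector([[0.0, -0.5, 0.25], [0.0, -0.25, 0.5]])

# The "smile" direction is transferred onto the "man" vector.
z_smiling_man = combine(z_smiling_woman, z_neutral_woman, z_neutral_man)
print(z_smiling_man)
```

The point of the sketch is that the useful directions have to be found by hand, which is the limitation the following slides address.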

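- 11. How are features acquired?
  - GAN, DCGAN (Chainer-DCGAN): search by hand!
    - Search for a $z$ that generates data containing the desired feature or the opposite feature.
    - Annotation on the slide: without labels, there is nothing more one can do.
  - Semi-supervised learning (Semi-supervised Learning with Deep Generative Models) [Kingma+, 2014]
    - Uses a small amount of labeled data.
    - Learns the label as part of $z$.
  - Figure label: feature vector that changes the face orientation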

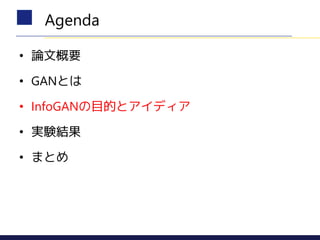

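- 12. Summary of the problems
  - Feature acquisition in GAN: features of the generated images are represented in the space of the generation vector $z$.
    - The individual dimensions of $z$ are hard to interpret.
    - Interpretable representations can only be found by inspecting generated images.
    - Supervised learning can capture only a subset of the features.
  - Figure labels: style, category
  - We want to acquire interpretable features "easily" with unsupervised learning!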

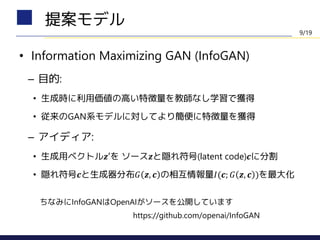

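- 14. Proposed model: Information Maximizing GAN (InfoGAN)
  - Goals
    - Acquire features that are highly useful at generation time through unsupervised learning.
    - Acquire features more simply than earlier GAN-family models.
  - Ideas
    - Split the generation vector $z'$ into a source $z$ and a latent code $c$.
    - Maximize the mutual information $I(c; G(z, c))$ between the latent code $c$ and the generator distribution $G(z, c)$.
  - OpenAI has released the InfoGAN source code: https://github.com/openai/InfoGAN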

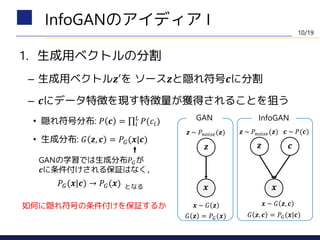

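- 15. InfoGAN idea I: splitting the generation vector
  - Split the generation vector $z'$ into a source $z$ and a latent code $c$.
  - The aim is for $c$ to capture features that describe the data.
    - Latent code distribution: $P(c) = \prod_i P(c_i)$
    - Generator distribution: $G(z, c) = P_G(x \mid c)$
  - GAN: $z \sim P_{\mathrm{noise}}(z)$, $x \sim G(z)$, $G(z) = P_G(x)$
  - InfoGAN: $z \sim P_{\mathrm{noise}}(z)$, $c \sim P(c)$, $x \sim G(z, c)$, $G(z, c) = P_G(x \mid c)$
  - Standard GAN training does not guarantee that the generator distribution $P_G$ is conditioned on $c$, so it can end up with $P_G(x \mid c) \to P_G(x)$.
  - Question: how can conditioning on the latent code be guaranteed?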
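
A minimal sketch of how the generator input $z' = [z, c]$ might be sampled, assuming a uniform noise source and a code made of one 10-way categorical variable plus two continuous variables. The function name `sample_generator_input`, the noise dimension, and the uniform noise distribution are assumptions for illustration; the slide only specifies that the code distribution factorizes.

```python
import random


def sample_generator_input(rng, noise_dim=4, num_categories=10, num_continuous=2):
    """Sample z' = [z, c]: source noise z followed by a factored latent code c."""
    # Source noise z (assumed uniform here; the slide does not fix P_noise).
    z = [rng.uniform(-1.0, 1.0) for _ in range(noise_dim)]
    # Categorical code: one category drawn uniformly, encoded one-hot.
    k = rng.randrange(num_categories)
    c_cat = [1.0 if i == k else 0.0 for i in range(num_categories)]
    # Continuous codes: each drawn independently, so P(c) factorizes.
    c_cont = [rng.uniform(-1.0, 1.0) for _ in range(num_continuous)]
    return z + c_cat + c_cont, k


rng = random.Random(0)
z_prime, category = sample_generator_input(rng)
print(len(z_prime), category)
```

Nothing in this sampling step forces the generator to use $c$; that is exactly why the mutual information term is needed.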

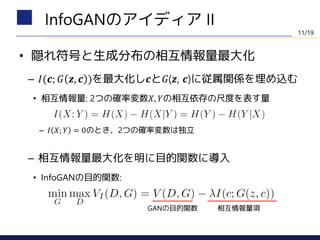

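- 16. InfoGAN idea II: maximizing the mutual information between the latent code and the generator distribution
  - Maximize $I(c; G(z, c))$ to build a dependency between $c$ and $G(z, c)$.
    - Mutual information: a measure of the mutual dependence between two random variables $X$ and $Y$.
    - When $I(X; Y) = 0$, the two random variables are independent.
  - Mutual information maximization is introduced explicitly into the objective function.
  - InfoGAN objective: the GAN objective plus a mutual information term.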
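
The equations on this slide were images and are missing from the transcript. For reference, the definition of mutual information and the regularized objective as given in the InfoGAN paper are written below; $V(D, G)$ denotes the standard GAN value function and $\lambda$ is the weight of the mutual information term.

```latex
\[
I(X; Y) = H(X) - H(X \mid Y) = H(Y) - H(Y \mid X)
\]
\[
\min_G \max_D V_I(D, G) = V(D, G) - \lambda\, I\bigl(c;\, G(z, c)\bigr)
\]
```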

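- 17. Variational mutual information maximization I [Barber and Agakov, 2003]
  - Maximize the mutual information $I(c; G(z, c))$.
    - Computing it requires the posterior $P(c \mid x)$, so it cannot be maximized directly.
    - Use an auxiliary distribution $Q(c \mid x)$ to obtain a lower bound.
  - This uses Variational Information Maximization.
    - When the auxiliary distribution is introduced, the lower bound follows from the non-negativity of the KL divergence.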
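
The derivation on the slide was an image. The following reconstruction follows the InfoGAN paper and is given as a reference sketch; the step from the third line to the fourth drops the KL term, which is non-negative.

```latex
\begin{align*}
I\bigl(c; G(z,c)\bigr)
 &= H(c) - H\bigl(c \mid G(z,c)\bigr) \\
 &= \mathbb{E}_{x \sim G(z,c)}\Bigl[\mathbb{E}_{c' \sim P(c \mid x)}\bigl[\log P(c' \mid x)\bigr]\Bigr] + H(c) \\
 &= \mathbb{E}_{x \sim G(z,c)}\Bigl[D_{\mathrm{KL}}\bigl(P(\cdot \mid x)\,\|\,Q(\cdot \mid x)\bigr)
      + \mathbb{E}_{c' \sim P(c \mid x)}\bigl[\log Q(c' \mid x)\bigr]\Bigr] + H(c) \\
 &\ge \mathbb{E}_{x \sim G(z,c)}\Bigl[\mathbb{E}_{c' \sim P(c \mid x)}\bigl[\log Q(c' \mid x)\bigr]\Bigr] + H(c)
\end{align*}
```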

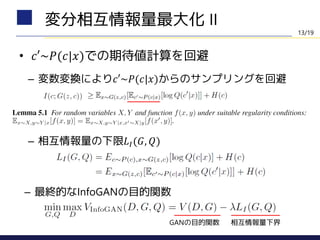

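- 18. Variational mutual information maximization II
  - Avoid computing the expectation over $c' \sim P(c \mid x)$: a change of variables removes the need to sample from $c' \sim P(c \mid x)$.
  - This yields the lower bound $L_I(G, Q)$ on the mutual information.
  - Final InfoGAN objective: the GAN objective combined with the mutual information lower bound.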
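
The two equations on this slide were images. As given in the InfoGAN paper, the lower bound and the final objective can be written as below; this is a reference reconstruction, with $V(D, G)$ the standard GAN value function and $\lambda$ the weight of the information term.

```latex
\begin{align*}
L_I(G, Q) &= \mathbb{E}_{c \sim P(c),\, x \sim G(z,c)}\bigl[\log Q(c \mid x)\bigr] + H(c)
  \;\le\; I\bigl(c; G(z,c)\bigr) \\
\min_{G, Q} \max_D V_{\mathrm{InfoGAN}}(D, G, Q) &= V(D, G) - \lambda\, L_I(G, Q)
\end{align*}
```

The expectation now only needs samples of $c$ from the prior and $x$ from the generator, both of which are available during training.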

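- 19. Implementation
  - Choice of the auxiliary distribution $Q(c \mid x)$
    - $Q$ reuses the network of the discriminator $D$, so the extra training cost over a plain GAN is very small.
    - A fully connected layer that represents the conditional distribution is added on top of $D$.
      - Categorical latent code: softmax
      - Continuous latent code: factored Gaussian
  - Parameter $\lambda$
    - Discrete latent code: $\lambda = 1$ is recommended.
    - Continuous latent code: a smaller $\lambda$ is recommended.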
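
The two output heads of $Q$ can be sketched in plain Python as log-likelihood functions. This is a sketch under assumptions, not the released implementation: the function names are hypothetical, the inputs stand in for the outputs of the extra fully connected layer, and `lam_cont=0.1` is only a placeholder for "a smaller $\lambda$". The constant entropy term $H(c)$ is omitted because it does not affect the gradients.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_log_q(logits, k):
    """log Q(c = k | x) for a categorical code with a softmax head."""
    return math.log(softmax(logits)[k])


def gaussian_log_q(mean, log_std, c):
    """log Q(c | x) for continuous codes under a factored (diagonal) Gaussian."""
    total = 0.0
    for mu, ls, ci in zip(mean, log_std, c):
        var = math.exp(2.0 * ls)
        total += -0.5 * math.log(2.0 * math.pi) - ls - (ci - mu) ** 2 / (2.0 * var)
    return total


def info_loss(cat_logits, k, mean, log_std, c_cont, lam_cat=1.0, lam_cont=0.1):
    """Negative weighted log-likelihood of the sampled code.

    Minimizing this raises the mutual information lower bound (up to H(c)).
    """
    return -(lam_cat * categorical_log_q(cat_logits, k)
             + lam_cont * gaussian_log_q(mean, log_std, c_cont))


# Example: an untrained head that outputs uniform logits and a unit Gaussian.
loss = info_loss([0.0] * 10, 3, [0.0], [0.0], [0.0])
print(loss)
```

In training, this loss would be averaged over a batch of sampled codes and added to the generator and $Q$ updates.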

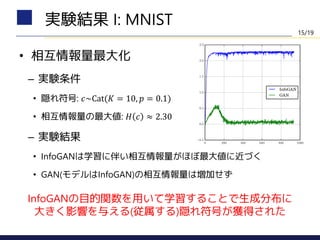

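- 21. Experimental results I: MNIST
  - Mutual information maximization
    - Setup
      - Latent code: $c \sim \mathrm{Cat}(K = 10, p = 0.1)$
      - Maximum of the mutual information: $H(c) \approx 2.30$
    - Results
      - With InfoGAN, the mutual information approaches its maximum as training proceeds.
      - With a plain GAN (same architecture as InfoGAN), the mutual information does not increase.
  - Training with the InfoGAN objective yields a latent code that strongly influences the generator distribution, that is, the generator distribution depends on the code.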
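
The value 2.30 is the entropy of a uniform 10-way categorical distribution in nats, which upper-bounds the mutual information because $I(c; x) \le H(c)$. A quick check (the function name `categorical_entropy` is chosen here for illustration):

```python
import math


def categorical_entropy(probs):
    """Shannon entropy in nats: H(c) = -sum_k p_k * ln(p_k)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


h_c = categorical_entropy([0.1] * 10)
print(round(h_c, 2))  # 2.3
```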

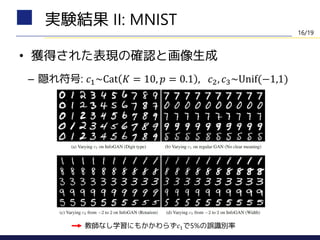

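- 22. Experimental results II: MNIST
  - Inspecting the learned representations and generating images
    - Latent codes: $c_1 \sim \mathrm{Cat}(K = 10, p = 0.1)$; $c_2, c_3 \sim \mathrm{Unif}(-1, 1)$
  - Despite being trained without labels, $c_1$ achieves a 5% classification error rate.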
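
Figures of this kind are typically produced by holding the noise fixed and sweeping one code at a time. The sketch below only builds the grid of codes for such a sweep; the function names and the choice of five steps are assumptions, and a trained generator (not included here) would be needed to turn each code into an image.

```python
def one_hot(k, size):
    """One-hot encoding of category k."""
    return [1.0 if i == k else 0.0 for i in range(size)]


def traversal_grid(num_categories=10, steps=5, low=-1.0, high=1.0):
    """Build codes [c1 one-hot, c2, c3] for a sweep.

    Rows vary the category of c1; columns vary c2 evenly over [low, high];
    c3 is held at 0.
    """
    values = [low + (high - low) * j / (steps - 1) for j in range(steps)]
    return [[one_hot(k, num_categories) + [v, 0.0] for v in values]
            for k in range(num_categories)]


grid = traversal_grid()
print(len(grid), len(grid[0]), len(grid[0][0]))
```

Each code in the grid would be concatenated with the same fixed noise vector $z$ before being passed to the generator, so that any change in the output can be attributed to the code.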

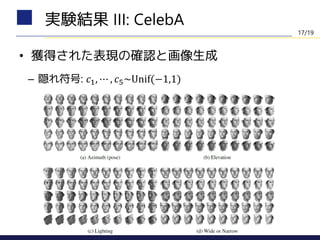

- 23. Experimental results III: CelebA
  - Inspecting the learned representations and generating images
    - Latent codes: $c_1, \ldots, c_5 \sim \mathrm{Unif}(-1, 1)$

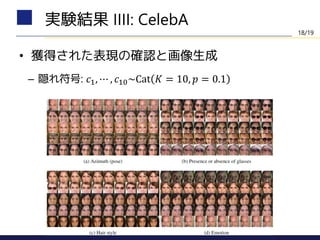

- 24. Experimental results IV: CelebA
  - Inspecting the learned representations and generating images
    - Latent codes: $c_1, \ldots, c_{10} \sim \mathrm{Cat}(K = 10, p = 0.1)$

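- 26. Summary
  - InfoGAN: a GAN that acquires interpretable feature representations
    - Explicitly splits the generation vector into a source $z$ and a latent code $c$.
    - Maximizes the mutual information $I$ between the latent code and the generator distribution to guarantee the dependency.
    - The auxiliary distribution $Q$, used to compute the lower bound of $I$, reuses the discriminator $D$ to keep the cost low.
    - The learned representations and generated images were checked on several datasets.
  - Limitations and future work
    - Because training is unsupervised, only features inherent in the data distribution can be extracted.
    - Application to other models such as VAE, and improvement of semi-supervised learning.
    - Acquisition of hierarchical representations.
    - Handling of multimodal information.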

- 27. References
  - [Goodfellow+, 2014] Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio, Generative Adversarial Nets, NIPS 2014
  - [Radford+, 2015] Alec Radford, Luke Metz, and Soumith Chintala, Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks, ICLR 2016
  - [Kingma+, 2014] Diederik P. Kingma, Danilo J. Rezende, Shakir Mohamed, and Max Welling, Semi-supervised Learning with Deep Generative Models, NIPS 2014
  - [Barber and Agakov, 2003] David Barber and Felix Agakov, The IM Algorithm: A variational approach to Information Maximization, NIPS 2003
  - Chainer-DCGAN: http://mattya.github.io/chainer-DCGAN/, a DCGAN demo built with Chainer

Editor's Notes

- The GAN formulation uses a simple factored continuous input noise vector z, while imposing no restrictions on the manner in which the generator may use this noise. As a result, it is possible that the noise will be used by the generator in a highly entangled way, causing the individual dimensions of z to not correspond to semantic features of the data.
