NVIDIA Deep Learning: Latest Updates in Okinawa

- 1. NVIDIA GPU Deep Learning: Latest Updates in Okinawa. Takeshi Izaki (井﨑 武士), Head of the Deep Learning Department, NVIDIA G.K. February 4, 2017

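- 2. Founded in 1993. Co-founder and CEO: Jen-Hsun Huang. Listed on NASDAQ (NVDA) in 1999. Invented the GPU in 1999; cumulative shipments since then exceed 1 billion units. Fiscal 2015 revenue: $4.68 billion. 9,100 employees worldwide. Holds approximately 7,300 patents. Headquartered in Santa Clara, California, USA.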

- 4. AGENDA: What is deep learning? Why are GPUs well suited to deep learning? The NVIDIA deep learning platform. Latest research examples (from Deep Learning Institute 2016).

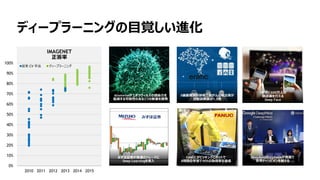

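- 5. The remarkable progress of deep learning. [Chart: ImageNet accuracy (0% to 100%), 2009 to 2016, traditional CV methods vs. deep learning.] DeepMind's AlphaGo surpasses the world champion at Go. Atomwise develops two new drug candidates that may reduce the infectivity of the Ebola virus. FANUC's picking robot reaches a 90% pick rate after 8 hours of learning. Lung cancer detection from X-ray image reading reaches 1.5 times the detection rate of radiologists. Mizuho Securities introduces deep learning for stock trading. Deep Face can perform face recognition on 6 million people per second.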

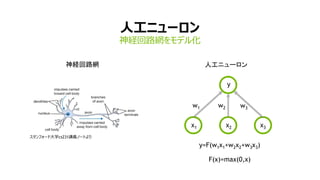

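- 6. Artificial neuron: a model of biological neural circuits (from the Stanford University cs231 lecture notes). Inputs $x_1, x_2, x_3$ with weights $w_1, w_2, w_3$ produce the output $y = F(w_1 x_1 + w_2 x_2 + w_3 x_3)$, with activation $F(x) = \max(0, x)$.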
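
The neuron formula on this slide can be written out directly. The following is a minimal sketch in plain Python; the function names and the example weights and inputs are illustrative assumptions, not values from the slides.

```python
def relu(x):
    """Activation F(x) = max(0, x)."""
    return max(0.0, x)


def neuron(weights, inputs):
    """Weighted sum of the inputs followed by the activation F."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return relu(weighted_sum)


# Illustrative values only (assumed, not from the slides).
weights = [0.5, -1.0, 2.0]
inputs = [1.0, 2.0, 0.5]

# 0.5*1.0 - 1.0*2.0 + 2.0*0.5 = -0.5, which the activation clips to 0.0
y = neuron(weights, inputs)
print(y)
```

A negative weighted sum is clipped to zero, which is what makes this activation nonlinear.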

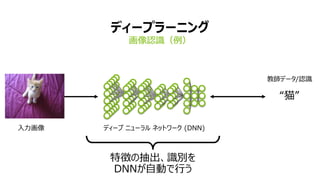

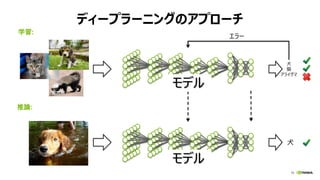

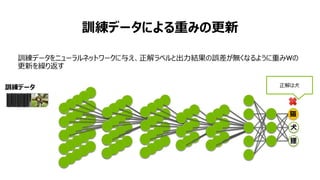

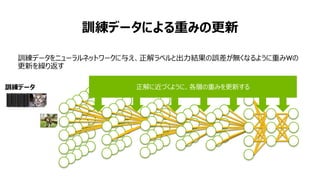

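- 9. Deep learning: image recognition (example). Input image; training label / recognition result "cat". A deep neural network (DNN) performs feature extraction and classification automatically.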

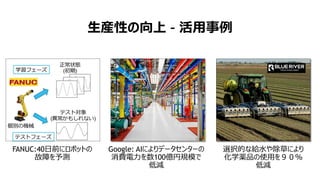

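- 13. Productivity gains: use cases. Google: AI reduces data center power consumption by an amount on the order of tens of billions of yen. FANUC: predicts robot failures 40 days in advance. Selective watering and weeding reduce chemical use by 90%.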

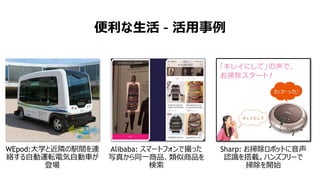

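- 14. A more convenient life: use cases. Alibaba: searches for identical and similar products from a photo taken with a smartphone. WEpod: self-driving electric vehicles connect a university with a nearby station. Sharp: a robot vacuum cleaner with built-in voice recognition starts cleaning hands-free.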

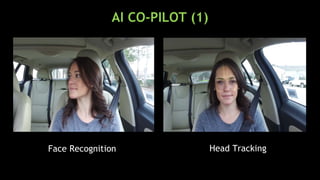

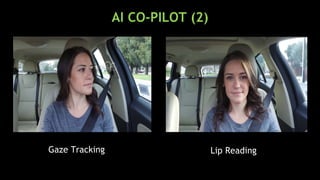

- 15. AI CO-PILOT (1): Face Recognition, Head Tracking

- 16. AI CO-PILOT (2): Lip Reading, Gaze Tracking

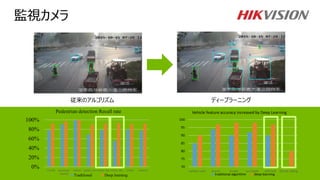

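- 18. A safe and secure life: use cases. PayPal: false-alarm rate in fraudulent payment detection reduced by 50%. Herta Security: smart surveillance cameras improve public safety in airports and shopping malls. vRad: uses CT scan images to identify areas with a high likelihood of potential intracranial hemorrhage, supporting prevention.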

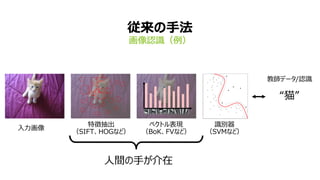

- 19. DEEP LEARNING INSIGHT: surveillance cameras, traditional algorithms vs. deep learning. [Chart: pedestrian detection recall rate (0% to 100%), traditional vs. deep learning, for overall, passenger channel, indoor, public area, sunny day, rainy day, winter, summer.] [Chart: vehicle feature accuracy increased by deep learning (70 to 100), traditional algorithm vs. deep learning, for vehicle color, brand, model, sun blade, safe belt, phone calling.]

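- 21. Three factors accelerating deep learning: DNNs, big data, and GPUs. Big data: 100 hours of video uploaded every minute; 350 million images uploaded every day; 2.5 trillion customer data records generated every hour. GPU: [Chart: TFLOPS (0.0 to 3.0), NVIDIA GPU vs. x86 CPU, 2008 to 2014.] DNN frameworks: TORCH, THEANO, CAFFE, MATCONVNET, PURINE, MOCHA.JL, MINERVA, MXNET*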

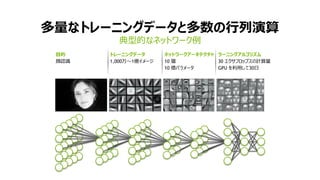

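- 22. A typical network example: large amounts of training data and a large number of matrix operations. Goal: face recognition. Training data: 10 million to 100 million images. Network architecture: 10 layers, 1 billion parameters. Learning algorithm: 30 exaflops of computation; 30 days using GPUs.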
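
To put these figures in perspective, the sketch below converts the slide's numbers into a sustained compute rate. It assumes that "30 exaflops" means a total of 30 x 10^18 floating-point operations and that training runs continuously for the full 30 days; both readings are assumptions, not statements from the slide.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def sustained_flops(total_operations, days):
    """Average operations per second needed to finish in the given time."""
    return total_operations / (days * SECONDS_PER_DAY)


total_operations = 30e18  # assumed meaning of "30 exaflops of computation"
rate = sustained_flops(total_operations, days=30)

print(f"{rate / 1e12:.1f} TFLOP/s sustained")  # prints "11.6 TFLOP/s sustained"
```

Under these assumptions the job needs roughly 11.6 TFLOP/s sustained for a month, which indicates why GPU throughput matters for training at this scale.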

- 23. NVIDIA Deep Learning Platform

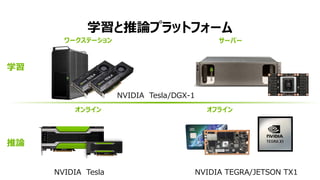

- 24. Training and inference platforms. Training: workstation, server; NVIDIA Tesla / DGX-1. Inference: NVIDIA Tesla; NVIDIA Tegra / Jetson TX1. Online / offline.

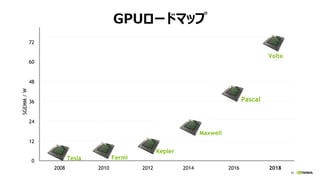

- 25. GPU roadmap. [Chart: SGEMM/W (0 to 72) by year, 2008 to 2018, for the Tesla, Fermi, Kepler, Maxwell, Pascal, and Volta architectures.]

- 26. TESLA P100: the world's most advanced GPU for hyperscale data centers. Double precision 5.3 TF | single precision 10.6 TF | half precision 21.2 TF

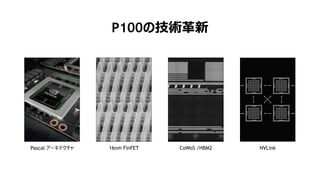

- 27. P100 technology innovations: Pascal architecture, 16nm FinFET, CoWoS / HBM2, NVLink

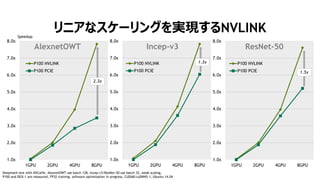

- 28. NVLINK delivers linear scaling. [Charts: speedup (1.0x to 8.0x) on 1, 2, 4, and 8 GPUs for AlexnetOWT, Incep-v3, and ResNet-50, comparing P100 NVLINK with P100 PCIE; speedup callouts of 2.3x, 1.3x, and 1.5x.] Deepmark test with NVCaffe. AlexnetOWT uses batch 128, Incep-v3/ResNet-50 use batch 32, weak scaling, P100 and DGX-1 are measured, FP32 training, software optimization in progress, CUDA8/cuDNN5.1, Ubuntu 14.04.

- 29. NVIDIA DGX-1: the world's first deep learning supercomputer. Designed for deep learning. 170 TF FP16. 8x Tesla P100. Hybrid cube mesh. Accelerates the major AI frameworks.
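
The 170 TF figure is consistent with the per-GPU half-precision peak quoted on slide 26. The sketch below multiplies that peak by the GPU count; it assumes the system figure is simply the sum of the per-GPU peaks, and the function name is hypothetical.

```python
P100_FP16_TFLOPS = 21.2  # half-precision peak per Tesla P100 (slide 26)
GPUS_PER_DGX1 = 8


def aggregate_tflops(per_gpu_tflops, gpu_count):
    """Peak throughput if every GPU contributes its full peak rate."""
    return per_gpu_tflops * gpu_count


total = aggregate_tflops(P100_FP16_TFLOPS, GPUS_PER_DGX1)
print(f"{total:.1f} TFLOPS FP16")  # 169.6, which rounds to the quoted 170 TF
```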

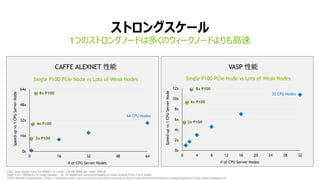

- 30. Strong scaling: one strong node is faster than many weak nodes. [Charts: VASP performance and Caffe AlexNet performance, speed-up vs. 1 CPU server node plotted against the number of CPU server nodes, single P100 PCIe node (2x, 4x, 8x P100) vs. lots of weak nodes; reference marks at 32 and 64 CPU nodes.] CPU: Dual Socket Intel E5-2680v3 12 cores, 128 GB DDR4 per node, FDR IB. VASP 5.4.1_05Feb16, Si-Huge Dataset. 16 and 32 nodes are estimated based on same scaling from 4 to 8 nodes. Caffe AlexNet scaling data: https://software.intel.com/en-us/articles/caffe-training-on-multi-node-distributed-memory-systems-based-on-intel-xeon-processor-e5

- 31. INTRODUCING DGX SATURNV: World's Most Efficient AI Supercomputer
  - Fastest AI Supercomputer in TOP500: 4.9 Petaflops Peak FP64 Performance; 19.6 Petaflops DL FP16 Performance; 124 NVIDIA DGX-1 Server Nodes
  - Most Energy Efficient Supercomputer: #1 on Green500 List; 9.5 GFLOPS per Watt; 2x More Efficient than Xeon Phi System
  - Rocket for Cancer Moonshot: CANDLE Development Platform; Optimized Frameworks; DGX-1 as Single Common Platform

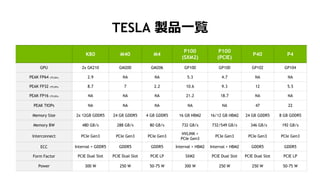

- 32. TESLA product lineup

| | K80 | M40 | M4 | P100 (SXM2) | P100 (PCIE) | P40 | P4 |
|---|---|---|---|---|---|---|---|
| GPU | 2x GK210 | GM200 | GM206 | GP100 | GP100 | GP102 | GP104 |
| Peak FP64 (TFLOPs) | 2.9 | NA | NA | 5.3 | 4.7 | NA | NA |
| Peak FP32 (TFLOPs) | 8.7 | 7 | 2.2 | 10.6 | 9.3 | 12 | 5.5 |
| Peak FP16 (TFLOPs) | NA | NA | NA | 21.2 | 18.7 | NA | NA |
| Peak TIOPs | NA | NA | NA | NA | NA | 47 | 22 |
| Memory Size | 2x 12 GB GDDR5 | 24 GB GDDR5 | 4 GB GDDR5 | 16 GB HBM2 | 16/12 GB HBM2 | 24 GB GDDR5 | 8 GB GDDR5 |
| Memory BW | 480 GB/s | 288 GB/s | 80 GB/s | 732 GB/s | 732/549 GB/s | 346 GB/s | 192 GB/s |
| Interconnect | PCIe Gen3 | PCIe Gen3 | PCIe Gen3 | NVLINK + PCIe Gen3 | PCIe Gen3 | PCIe Gen3 | PCIe Gen3 |
| ECC | Internal + GDDR5 | GDDR5 | GDDR5 | Internal + HBM2 | Internal + HBM2 | GDDR5 | GDDR5 |
| Form Factor | PCIE Dual Slot | PCIE Dual Slot | PCIE LP | SXM2 | PCIE Dual Slot | PCIE Dual Slot | PCIE LP |
| Power | 300 W | 250 W | 50-75 W | 300 W | 250 W | 250 W | 50-75 W |

- 33. TEGRA JETSON TX1: a supercomputer on a module. Key specifications: GPU: 1 TFLOP/s, 256-core Maxwell. CPU: 64-bit ARM A57. Memory: 4 GB LPDDR4, 25.6 GB/s. Storage: 16 GB eMMC. Wi-Fi/BT: 802.11 2x2 ac / BT Ready. Networking: 1 Gigabit Ethernet. Size: 50 mm x 87 mm. Interface: 400-pin board-to-board connector. Power: 10 W maximum (under 10 W for typical use cases).

- 34. NVIDIA DRIVE PX 2: the world's first AI supercomputer for autonomous driving. 12 CPU cores | Pascal GPU | 8 TFLOPS | 24 DL TOPS | 16nm FF | 250 W | liquid cooling

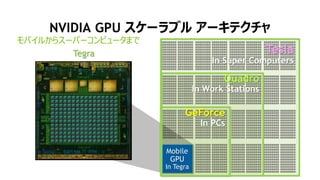

- 35. NVIDIA GPU scalable architecture: from mobile to supercomputers. Tesla in supercomputers; Quadro in workstations; GeForce in PCs; mobile GPU in Tegra.

- 36. NVIDIA deep learning platform. APPLICATIONS: COMPUTER VISION (Image Classification, Object Detection); SPEECH AND AUDIO (Voice Recognition, Translation); BEHAVIOR (Recommendation Engines, Sentiment Analysis). FRAMEWORKS: Mocha.jl. DEEP LEARNING SDK: DEEP LEARNING (TensorRT); MATH LIBRARIES (cuBLAS, cuSPARSE, cuFFT); GPU-INTERCONNECT (NCCL). GPU PLATFORM: CLOUD GPU, SERVER; Tesla P100, Tesla K80/M40/M4, P100/P40/P4, DGX-1, Jetson TX1, DRIVE PX 2.

- 37. NVIDIA DIGITS: DETECTION, SEGMENTATION, CLASSIFICATION

- 39. Latest research examples

- 40. SESSION 1: DEEP LEARNING ON METASTASIS DETECTION OF BREAST CANCER USING DGX-1. Quanzheng Li, Associate Professor, Massachusetts General Hospital

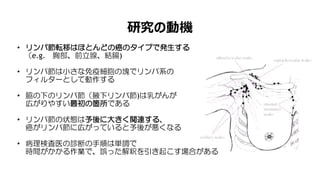

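- 42. Motivation
  - Lymph node metastasis occurs in most types of cancer (e.g. breast, prostate, colon).
  - Lymph nodes are small clusters of immune cells that act as filters for the lymphatic system.
  - The lymph nodes in the armpit (axillary lymph nodes) are the first place breast cancer is likely to spread.
  - Lymph node status is strongly related to prognosis: the prognosis is worse when the cancer has spread to the lymph nodes.
  - The pathologist's diagnostic procedure is tedious and time-consuming, and can lead to misinterpretation.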

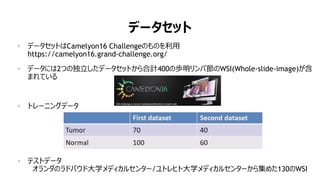

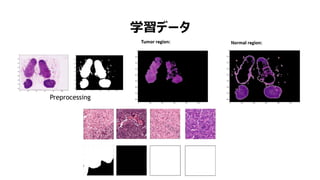

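- 43. Dataset
  - The dataset comes from the Camelyon16 Challenge: https://camelyon16.grand-challenge.org/
  - The data comprise a total of 400 whole-slide images (WSIs) of sentinel lymph nodes from two independent datasets.
  - Training data
  - Test data: 130 WSIs collected from Radboud University Medical Center and University Medical Center Utrecht in the Netherlands.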

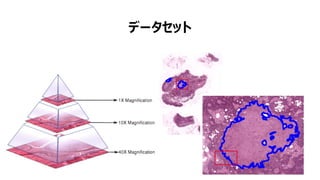

- 44. Dataset

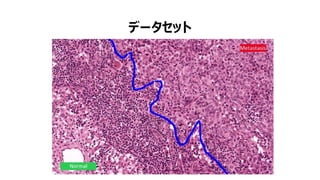

- 45. Dataset

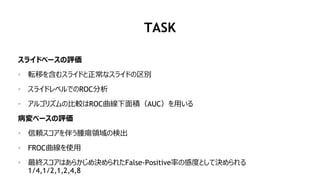

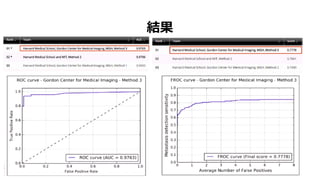

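- 46. TASK
  - Slide-based evaluation: distinguish slides that contain metastases from normal slides; ROC analysis at the slide level; algorithms are compared using the area under the ROC curve (AUC).
  - Lesion-based evaluation: detect tumor regions with a confidence score; uses the FROC curve; the final score is defined as the sensitivity at predefined false-positive rates: 1/4, 1/2, 1, 2, 4, 8.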
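
The lesion-based score can be sketched as follows. This is a minimal illustration, assuming (as in the Camelyon16 challenge description) that the false-positive rates are average false positives per slide and that the final score is the mean sensitivity over the six rates. The function names and the example curve are hypothetical.

```python
FP_RATES = [0.25, 0.5, 1, 2, 4, 8]  # predefined false positives per slide


def interpolate(x, xs, ys):
    """Linearly interpolate y at x on a curve whose xs are increasing."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for i in range(1, len(xs)):
        if x <= xs[i]:
            t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
            return ys[i - 1] + t * (ys[i] - ys[i - 1])


def froc_score(avg_fps, sensitivities):
    """Mean sensitivity of an FROC curve at the predefined FP rates."""
    values = [interpolate(fp, avg_fps, sensitivities) for fp in FP_RATES]
    return sum(values) / len(values)


# Hypothetical FROC curve: (average FPs per slide, sensitivity) pairs.
avg_fps = [0.0, 0.5, 1.0, 2.0, 4.0, 8.0]
sensitivities = [0.0, 0.6, 0.7, 0.8, 0.85, 0.9]

score = froc_score(avg_fps, sensitivities)
print(f"FROC score: {score:.3f}")
```

Averaging over rates from 1/4 to 8 rewards detectors that stay sensitive even when very few false positives are allowed.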

- 47. FRAMEWORK

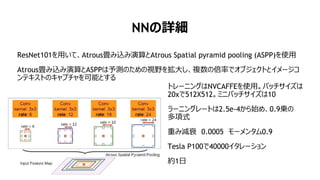

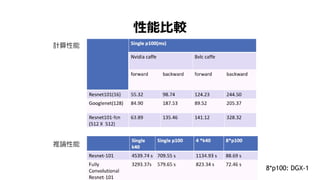

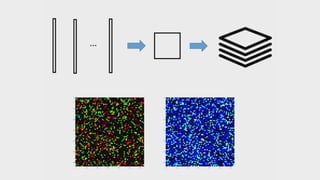

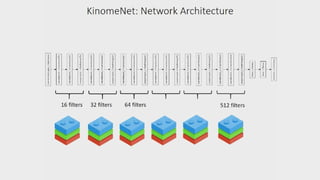

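- 49. Network details. Uses ResNet101 with atrous convolution and atrous spatial pyramid pooling (ASPP). Atrous convolution and ASPP enlarge the field of view used for prediction and make it possible to capture objects and image context at multiple scales. Training uses NVCAFFE. The patch size is 512x512 at 20x magnification; the mini-batch size is 10. The learning rate starts at 2.5e-4 and follows a polynomial decay with power 0.9; weight decay is 0.0005; momentum is 0.9. 40,000 iterations on a Tesla P100 take about one day.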
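
The learning-rate schedule can be sketched as below. It assumes the schedule follows Caffe's `poly` policy, `base_lr * (1 - iter / max_iter) ** power`, and that `max_iter` equals the 40,000 training iterations; the slide gives only the starting rate and the power, so both are assumptions.

```python
def poly_lr(iteration, base_lr=2.5e-4, max_iter=40000, power=0.9):
    """Polynomial learning-rate decay from base_lr down to 0 at max_iter."""
    return base_lr * (1.0 - iteration / max_iter) ** power


# Learning rate at a few points during training.
for iteration in (0, 10000, 20000, 30000, 40000):
    print(iteration, f"{poly_lr(iteration):.3e}")
```

With a power just below 1, the rate falls almost linearly for most of training and reaches zero at the final iteration.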

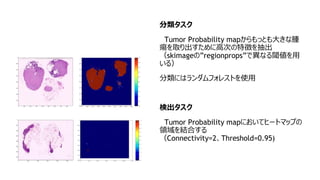

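- 50. Classification task: extract higher-level features from the tumor probability map in order to pick out the largest tumor (using skimage's `regionprops` with different thresholds); a random forest is used for classification. Detection task: merge heatmap regions in the tumor probability map (connectivity=2, threshold=0.95).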
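
The region-merging step of the detection task can be illustrated without any dependencies. The sketch below is a plain-Python stand-in for thresholding followed by connected-component labeling; it is not the authors' code. It assumes that connectivity=2 on a 2-D map means 8-connectivity (diagonal neighbors count, as in `skimage.measure.label`) and that pixels at or above the threshold are kept. The example probability map is made up.

```python
from collections import deque


def label_regions(prob_map, threshold=0.95):
    """Group pixels at or above threshold into 8-connected regions.

    Returns a list of regions, each a list of (row, col) pixels.
    """
    rows, cols = len(prob_map), len(prob_map[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or prob_map[r][c] < threshold:
                continue
            # Breadth-first flood fill from this unvisited tumor pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            region = []
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx]
                                and prob_map[ny][nx] >= threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            regions.append(region)
    return regions


# Hypothetical tumor probability map.
prob_map = [
    [0.99, 0.10, 0.00, 0.00],
    [0.20, 0.97, 0.00, 0.96],
    [0.00, 0.00, 0.00, 0.98],
]
regions = label_regions(prob_map)
print(len(regions), [len(region) for region in regions])
```

The two diagonal pixels in the top-left corner merge into one region only because diagonal neighbors are connected; with 4-connectivity they would be reported as separate detections.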

- 52. Results

- 53. SESSION 2: LIP READING SENTENCES IN THE WILD. Joon Son Chung et al., Department of Engineering Science, University of Oxford; Google DeepMind. https://arxiv.org/pdf/1611.05358v1.pdf

- 54. LIP READING

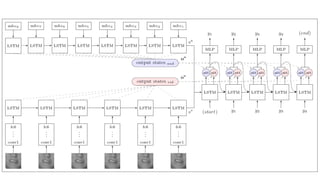

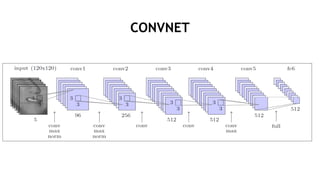

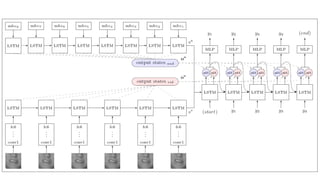

- 56. CONVNET

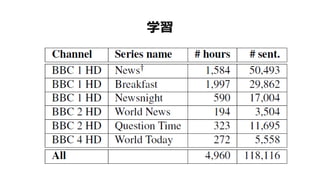

- 58. Training

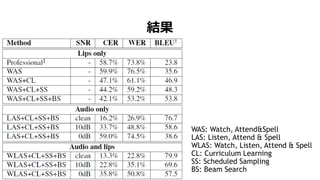

- 59. Results. WAS: Watch, Attend & Spell; LAS: Listen, Attend & Spell; WLAS: Watch, Listen, Attend & Spell; CL: Curriculum Learning; SS: Scheduled Sampling; BS: Beam Search

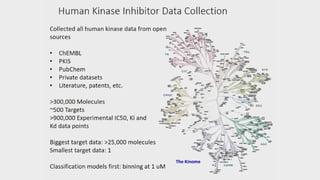

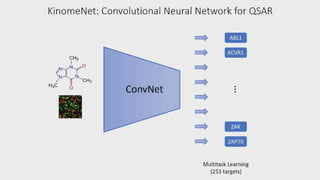

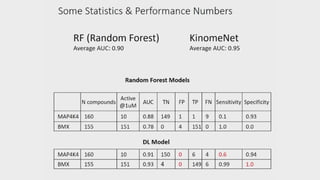

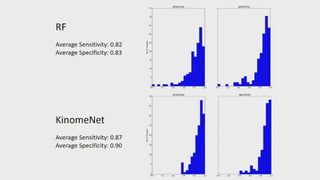

- 60. SESSION 3: ACCURATE PREDICTION OF PROTEIN KINASE INHIBITORS WITH DEEP CONVOLUTIONAL NEURAL NETWORKS. Olexandr Isayev, Research Assistant Professor, University of North Carolina at Chapel Hill

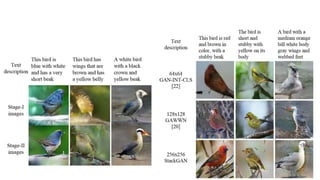

- 71. SESSION 4: STACKGAN: TEXT TO PHOTO-REALISTIC IMAGE SYNTHESIS WITH STACKED GENERATIVE ADVERSARIAL NETWORKS. Han Zhang et al., Department of Computer Science, Rutgers University, et al. https://arxiv.org/pdf/1612.03242v1.pdf

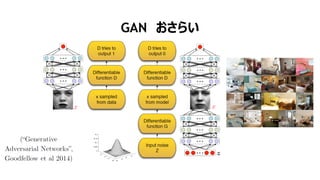

- 72. GAN recap
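
As background for this recap, the standard GAN objective from the original GAN formulation (Goodfellow et al., 2014) is reproduced here for reference; it is not transcribed from the slide. A generator $G$ maps noise $z$ to samples, and a discriminator $D$ estimates the probability that a sample is real:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator is trained to raise both terms, while the generator is trained to lower the second term by producing samples that $D$ classifies as real.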

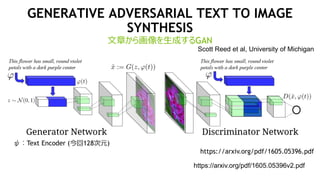

- 73. GENERATIVE ADVERSARIAL TEXT TO IMAGE SYNTHESIS: a GAN that generates images from text. ψ: text encoder (128-dimensional in this case). Scott Reed et al., University of Michigan. https://arxiv.org/pdf/1605.05396v2.pdf

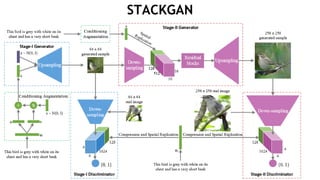

- 75. STACKGAN

- 77. SESSION 5: VALUE ITERATION NETWORKS. Aviv Tamar, Yi Wu, Garrett Thomas, Sergey Levine, and Pieter Abbeel, Dept. of Electrical Engineering and Computer Sciences, UC Berkeley

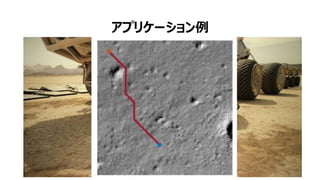

- 78. Application examples

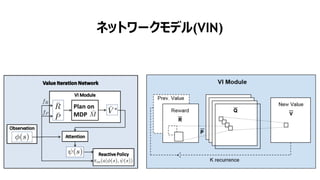

- 80. Network model (VIN)
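
A value iteration network embeds a differentiable approximation of the classical value iteration algorithm inside a neural network. As background only, the sketch below shows classical value iteration on a hypothetical 1-D chain of states; it is not the network from the paper, and the rewards, discount factor, and function name are illustrative assumptions.

```python
def value_iteration(rewards, gamma=0.9, iterations=50):
    """Value iteration on a 1-D chain where the agent steps left or right.

    rewards[s] is the reward received on entering state s; moving past
    either end of the chain leaves the agent where it is.
    """
    n = len(rewards)
    values = [0.0] * n
    for _ in range(iterations):
        new_values = []
        for s in range(n):
            left, right = max(s - 1, 0), min(s + 1, n - 1)
            # Q(s, a) = R(s') + gamma * V(s'); V(s) = max over actions.
            q_left = rewards[left] + gamma * values[left]
            q_right = rewards[right] + gamma * values[right]
            new_values.append(max(q_left, q_right))
        values = new_values
    return values


# Hypothetical chain with the only reward at the right-most state.
values = value_iteration([0.0, 0.0, 0.0, 1.0])
print([round(v, 2) for v in values])
```

Values grow toward the rewarding state, so acting greedily with respect to them moves the agent to the right. In the paper, each such update is expressed with a convolution followed by a max over action channels, so the planning computation can be trained end to end.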

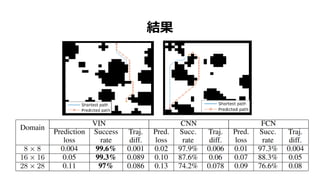

- 81. Results