Oozie hugnov11

- 1. Oozie Evolution: Gateway to Hadoop Eco-System (Mohammad Islam)

- 2. Agenda
  - What is Oozie?
  - What is in the Next Release?
  - Challenges
  - Future Work
  - Q&A

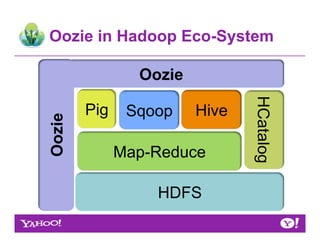

- 3. Oozie in Hadoop Eco-System
  - Diagram labels: Oozie, HCatalog, Pig, Sqoop, Hive, Map-Reduce, HDFS

- 4. Oozie : The Conductor

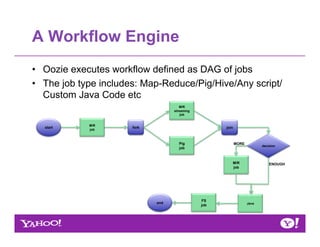

- 5. A Workflow Engine
  - Oozie executes workflows defined as a DAG of jobs.
  - Job types include Map-Reduce, Pig, Hive, any script, custom Java code, etc.
  - Diagram nodes: start, M/R streaming job, fork, M/R job, Pig job, join, decision (branches MORE and ENOUGH), M/R job, FS job, Java job, end

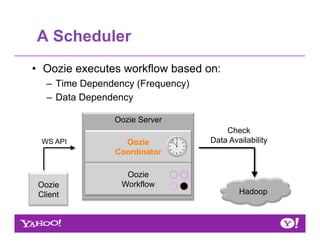
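
To make "workflow defined as a DAG of jobs" concrete, here is a minimal Python sketch that runs hypothetical actions in dependency order with the standard-library `graphlib` module. It is not Oozie's engine or its workflow definition format: the node names echo the diagram labels, while the edges and the `run_workflow` helper are assumptions made for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each node maps to the set of nodes it depends on.
# The edges are assumed for illustration; they are not taken from the slide.
workflow = {
    "start": set(),
    "mr_streaming": {"start"},
    "fork": {"mr_streaming"},
    "mr_job": {"fork"},
    "pig_job": {"fork"},
    "join": {"mr_job", "pig_job"},
    "java_job": {"join"},
    "end": {"java_job"},
}


def run_workflow(dag, run_action):
    """Run every node after all of its dependencies; return the execution order."""
    order = []
    for node in TopologicalSorter(dag).static_order():
        run_action(node)  # a real engine would submit a Hadoop job here
        order.append(node)
    return order


# The action callback is a no-op stand-in for job submission.
executed = run_workflow(workflow, lambda name: None)
```

The two branches after `fork` have no ordering between them, so a real engine could run them in parallel; this sketch simply picks one valid serial order.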

- 6. A Scheduler
  - Oozie executes workflows based on:
    - Time dependency (frequency)
    - Data dependency
  - Diagram labels: Oozie Client, WS API, Oozie Server (Oozie Coordinator, Oozie Workflow), Check Data Availability, Hadoop
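
The combination of a time dependency and a data dependency can be sketched as follows. This is an illustrative Python model, not Oozie's coordinator: `due_times`, `ready_runs`, and the `data_available` callback are hypothetical names, and the data check is a stand-in for whatever availability test a real deployment would use.

```python
from datetime import datetime, timedelta


def due_times(start, end, frequency):
    """Yield nominal run times from start (inclusive) to end (exclusive)."""
    current = start
    while current < end:
        yield current
        current += frequency


def ready_runs(start, end, frequency, now, data_available):
    """Return nominal times whose time and data dependencies are both met."""
    return [
        t
        for t in due_times(start, end, frequency)
        if t <= now and data_available(t)
    ]


# Hypothetical data check: pretend hourly input exists only up to 02:00.
available_until = datetime(2011, 11, 1, 2, 0)
runs = ready_runs(
    start=datetime(2011, 11, 1, 0, 0),
    end=datetime(2011, 11, 2, 0, 0),
    frequency=timedelta(hours=1),
    now=datetime(2011, 11, 1, 5, 0),
    data_available=lambda t: t <= available_until,
)
```

Runs whose nominal time has passed but whose data is missing stay pending, which is what distinguishes this from a purely time-based trigger.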

- 7. REST-API for Hadoop Components
  - Direct access to Hadoop components
    - Emulates the command line through REST API.
  - Supported products:
    - Pig
    - Map Reduce

- 8. Three Questions … Do you need Oozie?
  - Q1: Do you have multiple jobs with dependencies?
  - Q2: Does your job start based on time or data availability?
  - Q3: Do you need monitoring and operational support for your jobs?
  - If any one of your answers is YES, then you should consider Oozie!

- 9. What Oozie is NOT
  - Oozie is not a resource scheduler.
  - Oozie is not for off-grid scheduling.
    - Note: Off-grid execution is possible through the SSH action.
  - If you want to submit your job occasionally, Oozie is an option.
    - Oozie provides REST API based submission.

- 10. Oozie in Apache: Main Contributors

- 11. Oozie in Apache
  - Y! internal usage:
    - Total number of users: 375
    - Total number of processed jobs: 750K/month
  - External downloads:
    - 2500+ in the last year from GitHub
    - A large number of downloads through packaging maintained by 3rd parties.

- 12. Oozie Usage (contd.)
  - User community:
    - Membership
      - Y! internal: 286
      - External: 163
    - Messages (approximate)
      - Y! internal: 7/day
      - External: 8/day

- 13. Next Release …
  - Integration with Hadoop 0.23
  - HCatalog integration
    - Non-polling approach

- 14. Usability
  - Script Action
  - Distcp Action
  - Suspend Action
  - Mini-Oozie for CI
    - Like Mini-cluster
  - Support multiple versions
    - Pig, Distcp, Hive etc.

- 15. Reliability
  - Auto-Retry at WF Action level
  - High-Availability
    - Hot-Warm through ZooKeeper

- 16. Manageability
  - Email action
  - Query Pig Stats/Hadoop Counters
    - Runtime control of Workflow based on stats
    - Application-level control using the stats

- 17. Challenges: Queue Starvation
  - Which queue?
    - Not a Hadoop queue issue.
    - Oozie's internal queue to process the Oozie sub-tasks.
    - Oozie's main execution engine.
  - User problem:
    - A job's kill/suspend takes a very long time.

- 18. Challenges: Queue Starvation
  - Technical problem:
    - Before execution, every task acquires a lock on the job id.
    - Special high-priority tasks (such as Kill or Suspend) couldn't get the lock and therefore starve.
  - Diagram: In Queue: J1, J2, J1, J1, J2, J1(H), J2, J1. Starvation for the high-priority task!

- 19. Challenges: Queue Starvation
  - Resolution:
    - Add the high-priority task to both the interrupt list and the normal queue.
    - Before de-queuing, check whether there is any task in the interrupt list for the same job id. If there is one, execute that first.
  - Diagram: In Queue: J1, J2, J1, J1, J2, J1(H), J2, J1. In Interrupt List: J1(H). J1 finds a task in the interrupt list.
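
The interrupt-list idea can be sketched in a few lines of Python. This is an illustrative model, not Oozie's actual queue implementation: `Task`, `InterruptAwareQueue`, and the task names are hypothetical, and the per-job lock from the previous slide is not modeled.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(eq=False)  # identity-based equality and hashing: each task is unique
class Task:
    job_id: str
    name: str
    high_priority: bool = False


class InterruptAwareQueue:
    def __init__(self):
        self._queue = deque()    # normal FIFO queue
        self._interrupts = {}    # job_id -> deque of pending high-priority tasks
        self._done = set()       # tasks already run early via the interrupt list

    def enqueue(self, task):
        # A high-priority task goes into both the normal queue and the interrupt list.
        self._queue.append(task)
        if task.high_priority:
            self._interrupts.setdefault(task.job_id, deque()).append(task)

    def dequeue(self):
        """Return the next task to execute, or None when the queue is empty."""
        while self._queue:
            head = self._queue[0]
            if head in self._done:
                # Already executed through the interrupt list: drop the queue copy.
                self._queue.popleft()
                self._done.discard(head)
                continue
            pending = self._interrupts.get(head.job_id)
            if pending:
                urgent = pending.popleft()
                if urgent is head:
                    self._queue.popleft()
                else:
                    self._done.add(urgent)  # head stays queued for a later call
                return urgent
            return self._queue.popleft()
        return None


# Same queue contents as the slide: J1 J2 J1 J1 J2 J1(H) J2 J1.
q = InterruptAwareQueue()
for job_id, name, high in [
    ("J1", "a", False), ("J2", "b", False), ("J1", "c", False), ("J1", "d", False),
    ("J2", "e", False), ("J1", "kill", True), ("J2", "f", False), ("J1", "g", False),
]:
    q.enqueue(Task(job_id, name, high))

order = []
while (task := q.dequeue()) is not None:
    order.append(task.name)
```

In this model the `kill` task for J1 runs as soon as any J1 task reaches the head of the queue, instead of waiting behind five earlier entries.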

- 20. Oozie Futures
  - Easy adoption
    - Modeling tool
    - IDE integration
    - Modular configurations
  - Allow job notification through JMS
  - Event-based data processing
  - Prioritization
    - By user, system level.

- 21. Take Away ..
  - Oozie is
    - In Apache!
    - Reliable and feature-rich.
    - Growing fast.

- 22. Q&A
  - Mohammad K Islam
  - kamrul@yahoo-inc.com
  - http://incubator.apache.org/oozie/

- 23. Who needs Oozie?
  - Multiple jobs that have sequential/conditional/parallel dependencies
  - Need to run a job/workflow periodically.
  - Need to launch a job when data is available.
  - Operational requirements:
    - Easy monitoring
    - Reprocessing
    - Catch-up

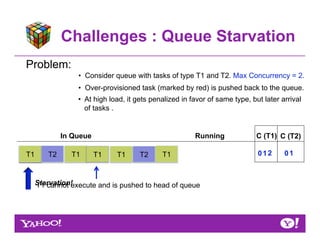

- 24. Challenges: Queue Starvation
  - Problem:
    - Consider a queue with tasks of type T1 and T2. Max concurrency = 2.
    - An over-provisioned task (marked in red) is pushed back to the queue.
    - At high load, it is penalized in favor of later-arriving tasks of the same type.
  - Diagram columns: In Queue, Running, C(T1), C(T2). Starvation! T1 cannot execute and is pushed to head of queue.

- 25. Challenges: Queue Starvation
  - Resolution:
    - Before de-queuing any task, check its concurrency.
    - If violated, skip it and get the next task.
  - Diagram columns: In Queue, Running, C(T1), C(T2). Annotation: T1 cannot execute, so skip by one node.
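
A minimal Python sketch of the skip-on-violation rule follows. It is an assumption-laden illustration rather than Oozie's code: `dequeue_runnable` is a hypothetical helper, tasks are represented only by their type name, and the limit of 2 per type comes from the "Max Concurrency = 2" example on the previous slide.

```python
from collections import Counter, deque

MAX_CONCURRENCY = 2  # per task type, as in the slide's example


def dequeue_runnable(queue, running, limit=MAX_CONCURRENCY):
    """Remove and return the first queued task type under its limit, else None."""
    for index, task_type in enumerate(queue):
        if running[task_type] < limit:
            break  # found a task that may run; skipped tasks keep their place
    else:
        return None  # queue is empty or every queued type is at its limit
    del queue[index]
    running[task_type] += 1
    return task_type


# T1 is already at its concurrency limit, so the T1 at the head is skipped.
queue = deque(["T1", "T2", "T1"])
running = Counter({"T1": 2, "T2": 1})
picked = dequeue_runnable(queue, running)
blocked = dequeue_runnable(queue, running)
```

Because skipped tasks are left in place rather than pushed back, they keep their original position and are not overtaken by later arrivals of the same type.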