Practical solutions to implementing "Born Connected" data systems

Presentation in the Earth and Space Science Informatics stream at the 2015 American Geophysical Union Fall Meeting.


Practical solutions to implementing "Born Connected" data systems

- 1. Practical solutions to implementing "Born Connected" data systems Adam Leadbetter, Marine Institute (adam.leadbetter@marine.ie) Justin Buck, British Oceanographic Data Centre Paul Stacey, Institute of Technology Blanchardstown

- 5. “Repositioning data management near data acquisition” Diviacco, Sorribas, Casas Munoz, de Cauwer, Busato, & Scory (2016)

- 7. Semantically Annotate / Connect ASAP! Memory, processing power Power Communications Constrained http://www.techworks.ie/media/cms_page_media/22/TechWorks%20Marine.700x450.jpg

- 8. http://argo.ucsd.edu Sensor Observation Service Observations & Measurements

- 9. http://argo.ucsd.edu Sensor Observation Service Observations & Measurements

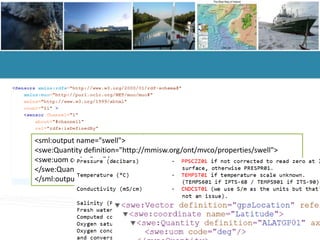

- 10. <sml:output name="swell"> <swe:Quantity definition="http://mmisw.org/ont/mvco/properties/swell"> <swe:uom code="cm"/> </swe:Quantity> </sml:output>
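The SensorML fragment on slide 10 ties an output to a vocabulary URI through the `definition` attribute. As a minimal sketch, the snippet below reads that annotation back out with Python's standard library. The namespace URIs are an assumption (the SensorML 1.0.1 and SWE Common 1.0.1 namespaces), since the slide shows only the `sml:` and `swe:` prefixes, and `describe_output` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Assumed namespace URIs: the slide shows only the prefixes.
NS = {
    "sml": "http://www.opengis.net/sensorML/1.0.1",
    "swe": "http://www.opengis.net/swe/1.0.1",
}

# The fragment from slide 10, with namespace declarations added so it parses alone.
SNIPPET = """
<sml:output name="swell"
    xmlns:sml="http://www.opengis.net/sensorML/1.0.1"
    xmlns:swe="http://www.opengis.net/swe/1.0.1">
  <swe:Quantity definition="http://mmisw.org/ont/mvco/properties/swell">
    <swe:uom code="cm"/>
  </swe:Quantity>
</sml:output>
"""


def describe_output(xml_text):
    """Return the output name, its semantic definition URI and its unit code."""
    output = ET.fromstring(xml_text.strip())
    quantity = output.find("swe:Quantity", NS)
    uom = quantity.find("swe:uom", NS)
    return {
        "name": output.get("name"),
        "definition": quantity.get("definition"),
        "unit": uom.get("code"),
    }


description = describe_output(SNIPPET)
```

Here `description["definition"]` holds the resolvable concept URI, which is what makes the output "born semantic" rather than a bare column name.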

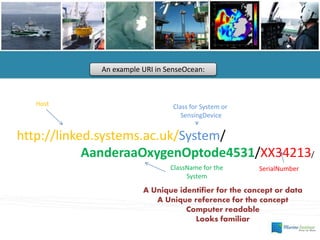

- 11. An example URI in SenseOcean: http://linked.systems.ac.uk/System/AanderaaOxygenOptode4531/XX34213/ Parts labelled on the slide: ClassName for the System; SerialNumber; Class for System or SensingDevice; Host. Properties: a unique identifier for the concept or data; a unique reference for the concept; computer readable; looks familiar.
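A short sketch of how the URI pattern on slide 11 might be taken apart in code. The mapping of path segments to labels (class, class name, serial number) is one reading of the slide's annotations rather than a documented SenseOcean rule, and `split_system_uri` is a hypothetical helper.

```python
from urllib.parse import urlparse


def split_system_uri(uri):
    """Split a register URI into host, class, class name and serial number.

    Assumes the layout http://<host>/<class>/<class name>/<serial number>/.
    """
    parsed = urlparse(uri)
    segments = [segment for segment in parsed.path.split("/") if segment]
    if len(segments) != 3:
        raise ValueError("expected exactly three path segments: " + uri)
    kind, class_name, serial_number = segments
    return {
        "host": parsed.netloc,
        "class": kind,
        "class_name": class_name,
        "serial_number": serial_number,
    }


parts = split_system_uri(
    "http://linked.systems.ac.uk/System/AanderaaOxygenOptode4531/XX34213/"
)
```

Because every part of the identifier sits in the path, a client can recover the sensor model and serial number without a lookup, while the URI as a whole still serves as the unique reference.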

- 12. Subject Predicate Object <lso:Aanderaa4531OxygenOptodeXXX34> <gr:hasMakeAndModel><lso:Aanderaa4531OxygenOptode> <ssn:onPlatform> < L06:25 > <ssn:hasDeployment> <DeploymentXX6E> < L06:25 > <ssn:inDeployment> <DeploymentXX6E>

- 13. Subject Predicate Object: <ObservationXX34> <rdf:type> <oml:Measurement>; <ObservationXX34> <rdfs:label> <Observation test 1>; <ObservationXX34> <oml:featureOfInterest> <lso:Sea>; <ObservationXX34> <oml:observedProperty> <DOXY>; <ObservationXX34> <oml:result> <http://www.bodc.ac.uk/..../..../>; <ObservationXX34> <prov:wasAssociatedWith> <Person>; <Person> <foaf:name> Alex; <ObservationXX34> <prov:wasgeneratedBy> <CruiseXXX>
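The subject, predicate, object rows on slide 13 can be sketched as plain tuples with a tiny pattern matcher. This is an illustration of the triple model only, not the register's actual store (the notes mention Fuseki); identifiers are copied from the slide as strings, and `match` is a hypothetical helper.

```python
# A subset of the triples from slide 13, kept as (subject, predicate, object) tuples.
TRIPLES = [
    ("ObservationXX34", "rdf:type", "oml:Measurement"),
    ("ObservationXX34", "rdfs:label", "Observation test 1"),
    ("ObservationXX34", "oml:featureOfInterest", "lso:Sea"),
    ("ObservationXX34", "oml:observedProperty", "DOXY"),
    ("ObservationXX34", "prov:wasAssociatedWith", "Person"),
    ("Person", "foaf:name", "Alex"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return triples matching the pattern; None acts as a wildcard."""
    return [
        (s, p, o)
        for (s, p, o) in triples
        if (subject is None or s == subject)
        and (predicate is None or p == predicate)
        and (obj is None or o == obj)
    ]


# Follow two links: observation -> associated agent -> that agent's name.
agents = [o for (_, _, o) in match(TRIPLES, "ObservationXX34", "prov:wasAssociatedWith")]
names = [o for agent in agents for (_, _, o) in match(TRIPLES, agent, "foaf:name")]
```

Chaining the two lookups is the point of connecting the data: the observation does not store the name itself, only a link to the agent that carries it.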

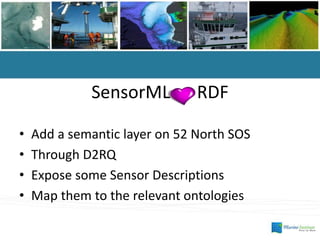

- 14. SensorML RDF • Add a semantic layer on 52 North SOS • Through D2RQ • Expose some Sensor Descriptions • Map them to the relevant ontologies

- 24. Adam Leadbetter, Marine Institute, Ireland adam.leadbetter@marine.ie @AdamLeadbetter https://github.com/IrishMarineInstitute/sensor-observation-service https://github.com/peterataylor/om-json

Editor's Notes

- #2: Acknowledge: Janet Fredericks @ WHOI; Damian Smyth & Rob Fuller @ MI; Alexandra Kokinakki @ BODC. Born Digital -> Born Semantic -> Born Connected

- #3: Why? Traditionally, ocean data has been structured, and particularly linked, post fact.

- #4: Why? Traditionally, ocean data has been structured, and particularly linked, post fact. Gliders, Argo floats, ROVs and seafloor observatories break the sustainability of that model.

- #5: Why? Traditionally, ocean data has been structured, and particularly linked, post fact. Gliders, Argo floats, ROVs and seafloor observatories break the sustainability of that model. Shepherd’s metaphor. Do you have the time to go to Dagobah and take the training with the “Big Data” age? Lesley Wyborn: “Data needs to be ‘Born Connected’ to enable Transdisciplinary Science” – and beginning at conceived connected! So…

- #8: Extending “Born Semantic” to ultra-resource-constrained observation environments. Achieving “Born Semantic” data in an ultra-constrained environment presents more difficulties: communications may be intermittent and very low bandwidth, the data logger must be highly power efficient, and so on. There has been a recent flurry of development activity around Internet of Things (IoT) technologies. This has led to a drive for IoT-enabling technologies, which presents opportunities to further realise the concept of Born Semantic data by pushing the semantic annotation closer to the data capture point. These technologies are all about “squeezing the bits”: reducing storage, processing and communication overhead. Low-power, highly efficient operating systems such as TinyOS and Contiki (among others) provide “powerful enough” capabilities to leverage semantic annotation efforts. Fernandez et al. have recently addressed compression of RDF with the Header-Dictionary-Triples approach, which compresses tuple elements into a dictionary, followed by a compressed representation of triples of dictionary keys. This approach is only applicable to large data sets, which is not an option in a constrained environment. The Wiselib TupleStore and RDF provider offers a suitable solution here, as it is a lightweight, flexible data storage solution. The Constrained Application Protocol (CoAP) is a specialised web transfer protocol for use with constrained embedded systems and networks. CoAP is designed to interface easily with HTTP for integration with the Web, with very low overhead and simplicity for constrained environments. Although HTTP is the de facto standard for RESTful architectures, CoAP specifies a minimal subset of REST requests (GET, POST, PUT, and DELETE). It also relies on UDP as a transport protocol while providing reliability with a simple built-in retransmission mechanism, so the communications overhead is small compared to HTTP.
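To make the "small overhead" point concrete, here is a minimal sketch that hand-encodes a confirmable CoAP GET request following the message layout in RFC 7252: a fixed 4-byte header, an optional token, then options. The resource path `sensors/doxy` and the function name are hypothetical, and the sketch only handles options short enough to avoid the extended delta and length fields.

```python
import struct

COAP_VERSION = 1
TYPE_CONFIRMABLE = 0   # CON: retransmitted by the sender until acknowledged
CODE_GET = 0x01        # request code 0.01
OPTION_URI_PATH = 11   # Uri-Path option number


def build_coap_get(message_id, path_segments, token=b""):
    """Encode a confirmable CoAP GET for the given path segments."""
    if len(token) > 8:
        raise ValueError("CoAP tokens are at most 8 bytes")
    # First byte: 2-bit version, 2-bit type, 4-bit token length.
    first_byte = (COAP_VERSION << 6) | (TYPE_CONFIRMABLE << 4) | len(token)
    header = struct.pack("!BBH", first_byte, CODE_GET, message_id)

    options = b""
    previous_number = 0
    for segment in path_segments:
        value = segment.encode("utf-8")
        delta = OPTION_URI_PATH - previous_number
        if delta > 12 or len(value) > 12:
            raise ValueError("this sketch omits extended option encoding")
        # Option header byte: 4-bit delta from the previous option, 4-bit length.
        options += bytes([(delta << 4) | len(value)]) + value
        previous_number = OPTION_URI_PATH
    return header + token + options


# Hypothetical resource path, for illustration only.
message = build_coap_get(0x1234, ["sensors", "doxy"])
```

The whole request fits in 17 bytes, against several tens of bytes for even a bare HTTP/1.1 request line plus the mandatory Host header, before TCP setup is counted.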

- #10: Ocean Data Interoperability Platform; 52N plus others. Different encodings for SOS results. RESTful URLs for SOS access.

- #11: EGU 2014 – prototypes in RDFa (CTD); SensorML 1.0 (Qartod 2 OGC – now re-funded as X-DOMES); direct embedding of concept ids in file headers (Lake Ellsworth Drilling Project) or SWE XML definitions (Q2O). Funding from EU SenseOCEAN, BRIDGES, OpenGovIntelligence. Funding from SEAI. Onto SenseOCEAN – slides from BODC.

- #12: First step is a sensor / instrument register. Built on Fuseki – with custom Java API. Live in next few months.

- #13: SSN has some issues with alignment with O&M later on; O&M will be introduced in the next slide – Simon Cox will go into details…

- #14: Ideally associated with something like an ORCiD, not just the person’s name.

- #15: We have created the models, but we are still gathering metadata from the manufacturers, so we will be able to publish some example sensor descriptions soon enough (couple of months).

- #19: Single machine -> Distributed processing; One-to-one communication -> Publish-subscribe pattern; No fault tolerance -> Replication, auto-recovery; Fixed schema, encoding -> Schema management, evolvable encoding
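The move from one-to-one communication to publish-subscribe can be sketched with a tiny in-memory broker. This is an illustration of the pattern only: it is not the streaming stack used in the talk, it has no replication or recovery, and the class, topic and field names are hypothetical.

```python
from collections import defaultdict


class Broker:
    """Route each published message to every subscriber of its topic."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver the message and return how many subscribers received it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


broker = Broker()
archive, dashboard = [], []

# Two independent consumers of the same (hypothetical) sensor stream.
broker.subscribe("ctd/temperature", archive.append)
broker.subscribe("ctd/temperature", dashboard.append)

delivered = broker.publish("ctd/temperature", {"value": 11.4, "uom": "Cel"})
```

The publisher never names its consumers, so a new one (a quality-control step, say) can be added without touching the instrument side.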

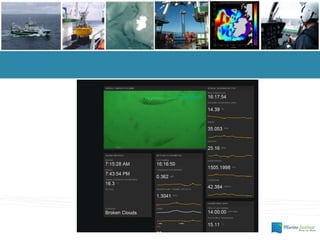

- #22: Simon Cox & Peter Taylor presentation at OGC TC in September 2015. Work ongoing in Ocean Acidification community to use the proposed O&M JSON schema. Here is a snapshot from a SOS call to the Galway Bay Cable Observatory.

- #24: Adding a JSON-LD context to the output allows us to generate a triple-ified model of the SOS output…
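A simplified sketch of what "adding a context" buys: the context maps plain JSON keys to IRIs, after which each key/value pair can be read as a triple about the document's `@id`. This hand-rolled walk covers flat documents only and is not the full JSON-LD expansion algorithm; the document shape and every `example.org` IRI are hypothetical, not taken from the SOS output or the O&M JSON schema.

```python
# A hypothetical, flat observation document with an inline JSON-LD style context.
observation = {
    "@context": {
        "observedProperty": "http://example.org/om#observedProperty",
        "result": "http://example.org/om#result",
    },
    "@id": "http://example.org/observation/1",
    "observedProperty": "http://example.org/property/DOXY",
    "result": 6.2,
    "comment": "no context entry, so this key yields no triple",
}


def to_triples(document):
    """Turn a flat document into (subject, predicate, object) triples."""
    context = document.get("@context", {})
    subject = document["@id"]
    triples = []
    for key, value in document.items():
        if key.startswith("@"):
            continue  # keywords such as @context and @id are not properties
        predicate = context.get(key)
        if predicate is None:
            continue  # keys with no mapping in the context are dropped
        triples.append((subject, predicate, value))
    return triples


triples = to_triples(observation)
```

The JSON payload itself is unchanged for existing clients; only consumers that read the context see the linked-data view.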